A new brick for the MPAI architecture

At its 43rd General Assembly on 17 April 2024, MPAI approved the publication of the draft AI Module Profiles (MPAI-PRF) standard with a request for Community Comments. MPAI-PRF addresses a problem that MPAI encounters more and more often: the AI Modules (AIMs) it specifies in different standards share the same basic functionality but may differ in their features.

First, a few words about AIMs. MPAI develops application-oriented standards. It calls the applications AI Workflows (AIWs) and breaks them down into components called AI Modules (AIMs). An AIW is specified by what it does (functions), by its input and output data, and by how its AIMs are interconnected (Topology). Similarly, an AIM is specified by what it does (functions) and by its input and output data. An AIM is Composite if it includes interconnected AIMs, or Basic if its internal structure is unknown.
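The relationship between AIWs and AIMs described above can be pictured as a small data model. The following is only an illustrative sketch: the class names, field names, and the example workflow are assumptions made for this post, not structures defined by any MPAI standard.

```python
from dataclasses import dataclass, field


@dataclass
class AIM:
    """An AI Module: what it does plus its input and output data."""
    name: str
    function: str
    inputs: list[str]
    outputs: list[str]
    # Known only for Composite AIMs; a Basic AIM leaves this list empty.
    sub_aims: list["AIM"] = field(default_factory=list)

    @property
    def is_composite(self) -> bool:
        return bool(self.sub_aims)


@dataclass
class AIW:
    """An AI Workflow: function, data, AIMs, and how the AIMs are connected."""
    name: str
    function: str
    inputs: list[str]
    outputs: list[str]
    aims: list[AIM]
    # Topology as (source AIM, data carried, destination AIM) triples.
    topology: list[tuple[str, str, str]]


# Hypothetical two-AIM workflow: speech is recognised, then understood.
asr = AIM("ASR", "Automatic Speech Recognition", ["Speech"], ["Recognised Text"])
nlu = AIM("NLU", "Natural Language Understanding",
          ["Recognised Text"], ["Refined Text", "Meaning"])
workflow = AIW(
    name="ExampleWorkflow",  # hypothetical name
    function="Turn speech into text and meaning",
    inputs=["Speech"],
    outputs=["Refined Text", "Meaning"],
    aims=[asr, nlu],
    topology=[("ASR", "Recognised Text", "NLU")],
)
```

Both example AIMs are Basic in this sketch because their internal structure is not described; wrapping them in a parent `AIM` with `sub_aims=[asr, nlu]` would make that parent Composite.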

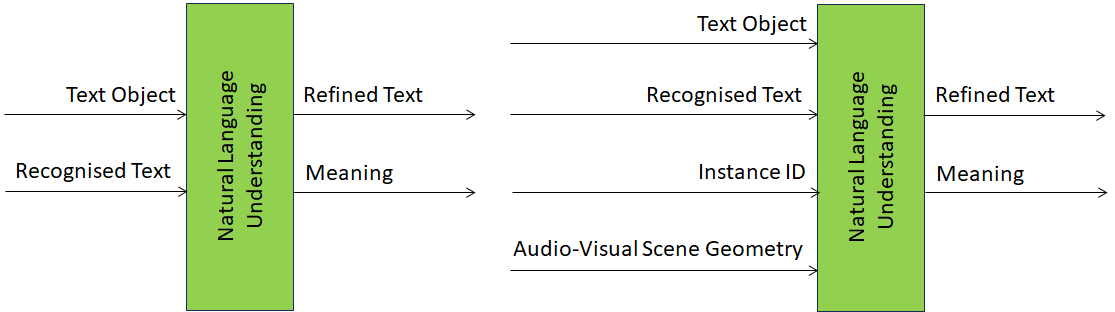

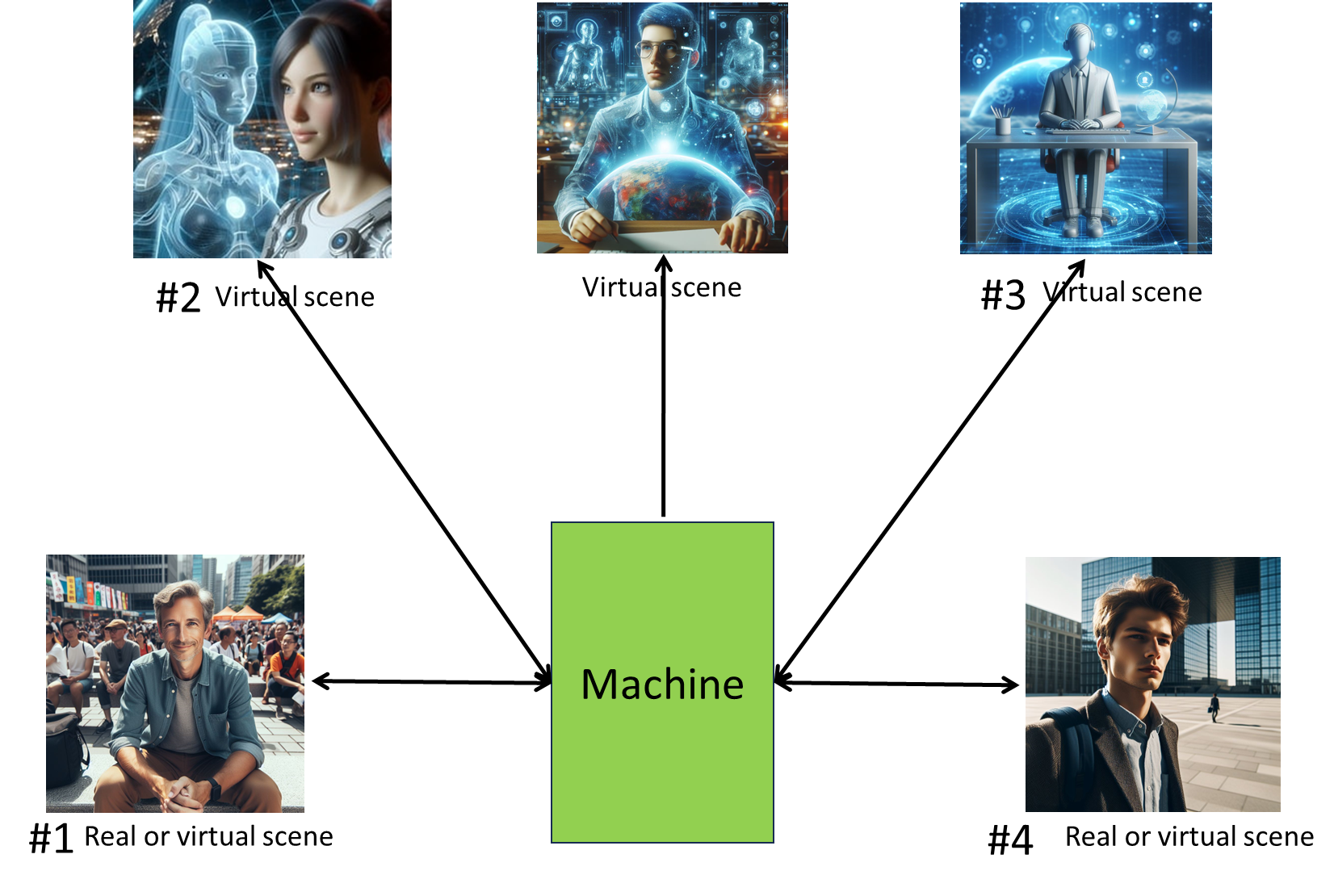

Let’s look at the Natural Language Understanding (MMC-NLU) AIM of Figure 1.

Figure 1 – The Natural Language Understanding (MMC-NLU) AIM

The NLU AIM’s basic function is to receive a Text Object, either directly from a keyboard or from an Automatic Speech Recognition (ASR) AIM (in which case it is called Recognised Text), and to produce two outputs: a Text Object, which is called Refined Text when the input came from an ASR AIM, and the Meaning of the text. The NLU AIM, however, can also receive “spatial information” about the Audio and/or Visual Objects, namely their position and orientation in the Scene that the machine is processing. This additional information helps the machine produce a response that is better attuned to the context.

This case shows the need to name unambiguously these two instances of the same NLU AIM, which are functionally equivalent but have very different features.

The notion of Profiles, originally developed by MPEG in the summer of 1992 for the MPEG-2 standard and then universally adopted in the media domain, comes in handy. An AIM Profile is a label that uniquely identifies the set of AIM Attributes of an AIM instance, where an Attribute is “input data, output data, or functionality that uniquely characterises an AIM instance”. In the case of the NLU AIM, the Attributes are Text Object (TXO), Recognised Text (TXR), Object Instance Identifier (OII), Audio-Visual Scene Geometry (AVG), and Meaning (TXD, for Text Descriptors).

The Draft AI Module Profiles (MPAI-PRF) Standard offers two ways to signal the Attributes of an AIM: listing those that are supported or listing those that are not supported. Either can be used, and the natural choice is whichever list is shorter. The Profile of an NLU AIM instance that does not handle spatial information can thus be labelled in two ways:

| Signalling method | Profile label |
| --- | --- |
| List of unsupported Attributes | MMC-NLU-V2.1(ALL-AVG-OII) |
| List of supported Attributes | MMC-NLU-V2.1(NUL+TXO+TXR) |

V2.1 refers to the version of the Multimodal Conversation (MPAI-MMC) standard that specifies the NLU AIM. ALL signals that the Profile is expressed in “negative logic”: the AIM supports all Attributes except those removed, here AVG (Audio-Visual Scene Geometry) and OII (Object Instance Identifier). NUL signals that the Profile is expressed in “positive logic”: the AIM supports only the Attributes added, here TXO (Text Object from a keyboard) and TXR (Recognised Text).
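To make the two logics concrete, here is a minimal Python sketch that expands a Profile label into the set of supported Attributes and, going the other way, builds whichever label is shorter. It is not taken from the MPAI-PRF draft: the function names and the regular expression are assumptions, and for illustration the Attribute list is restricted to the four input codes discussed above (TXO, TXR, OII, AVG).

```python
import re

# Assumed label shape: <STANDARD>-<AIM>-V<version>(<ALL|NUL><+/-CODE>...)
LABEL_RE = re.compile(
    r"^(?P<standard>[A-Z]+)-(?P<aim>[A-Z]+)-V(?P<version>\d+\.\d+)"
    r"\((?P<base>ALL|NUL)(?P<ops>(?:[+-][A-Z]+)*)\)$"
)


def resolve_profile(label, all_attributes):
    """Return the set of Attribute codes that the label declares as supported."""
    m = LABEL_RE.match(label)
    if m is None:
        raise ValueError(f"not a recognised Profile label: {label!r}")
    # ALL starts from every Attribute, NUL starts from none.
    supported = set(all_attributes) if m["base"] == "ALL" else set()
    for sign, code in re.findall(r"([+-])([A-Z]+)", m["ops"]):
        if code not in all_attributes:
            raise ValueError(f"unknown Attribute code: {code}")
        if sign == "+":
            supported.add(code)
        else:
            supported.discard(code)
    return supported


def shortest_label(prefix, supported, all_attributes):
    """Build the label with the shorter Attribute list (positive logic on a tie)."""
    kept = [a for a in all_attributes if a in supported]
    dropped = [a for a in all_attributes if a not in supported]
    if len(kept) <= len(dropped):
        return f"{prefix}(NUL" + "".join(f"+{a}" for a in kept) + ")"
    return f"{prefix}(ALL" + "".join(f"-{a}" for a in dropped) + ")"


# Illustrative Attribute list: the four NLU input codes only (an assumption).
NLU_INPUTS = ["TXO", "TXR", "OII", "AVG"]

negative = resolve_profile("MMC-NLU-V2.1(ALL-AVG-OII)", NLU_INPUTS)
positive = resolve_profile("MMC-NLU-V2.1(NUL+TXO+TXR)", NLU_INPUTS)
label = shortest_label("MMC-NLU-V2.1", {"TXO", "TXR"}, NLU_INPUTS)
```

Under that assumption both labels resolve to the same set, `{"TXO", "TXR"}`, which is the sense in which the two notations describe the same AIM instance.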

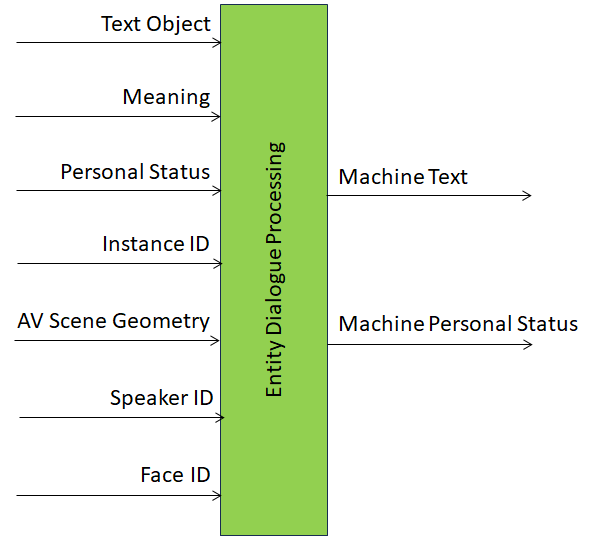

The Profile story does not end here. Attributes are not always sufficient to identify the capabilities of an AIM instance. Take the Entity Dialogue Processing (MMC-EDP) AIM of Figure 2, which uses different information sources derived from the information issued by an Entity (typically a human, but potentially also a machine) with which this machine is communicating.

Figure 2 – The Entity Dialogue Processing (MMC-EDP) AIM

The input data are Text Object and Meaning (the outputs of the NLU AIM), Audio or Visual Instance ID and Scene Geometry (already used by the NLU AIM), and Personal Status, a data type that represents the internal state of the Entity in terms of three Factors (Cognitive State, Emotion, and Social Attitude), each expressed through four Modalities (Text, Speech, Face, and Body).

The output of the EDP AIM is Text that can be fed to a regular Text-To-Speech AIM. It can additionally include the machine’s Personal Status, which is of course simulated by the machine but is of great value to the Personal Status Display (PAF-PSD) AIM depicted in Figure 3.

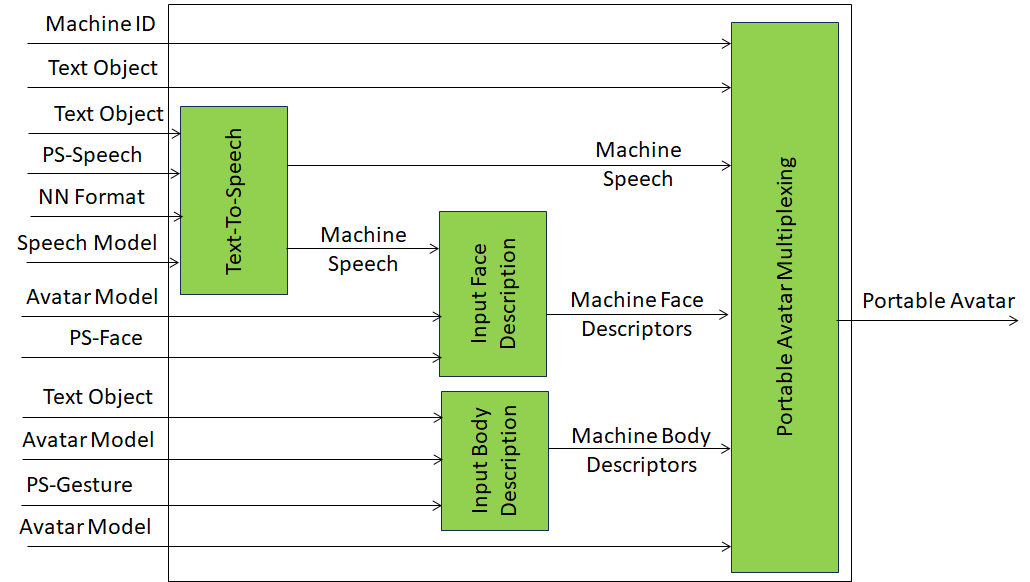

Figure 3 – The Personal Status Display AIM

This AIM uses the machine’s Text and Personal Status (IPS), together with an Avatar Model (AVM), to synthesise the machine as a speaking avatar. An instance of the PSD AIM may support the Personal Status but only its Speech (PS-Speech, PSS) and Face (PS-Face, PSF) Modalities, as in the case of a PSD AIM designed for sign language. This is formally represented by the following two expressions:

| Signalling method | Profile label |
| --- | --- |
| List of unsupported Attributes | PAF-PSD-V1.1(ALL@IPS#PSS#PSF) |
| List of supported Attributes | PAF-PSD-V1.1(NUL+TXO+AVM@IPS#PSF#PSG) |

In the first expression, @IPS#PSS#PSF indicates that the PSD AIM supports all Attributes, but that its Personal Status only includes the Speech (PSS) and Face (PSF) Modalities. In the second expression, +TXO+AVM indicates that the PSD AIM supports Text and Avatar Model, and @IPS#PSF#PSG indicates that the Personal Status Modalities supported are Face (PSF) and Gesture (PSG).
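The `@` and `#` notation can be read mechanically as well. The sketch below parses the text between the parentheses of a Profile label into its parts. It reflects one reading of the two expressions above, not a grammar taken from the MPAI-PRF draft: `+` and `-` add or remove a whole Attribute, `@` names an Attribute that is only partly supported, and each following `#` names one of its supported parts. The function name and the returned dictionary layout are hypothetical.

```python
import re

# One token is an operator character followed by an upper-case code.
TOKEN_RE = re.compile(r"([+\-@#])([A-Z]+)")


def parse_profile_body(body):
    """Parse e.g. 'NUL+TXO+AVM@IPS#PSF#PSG' into its components."""
    base, rest = body[:3], body[3:]
    if base not in ("ALL", "NUL"):
        raise ValueError(f"body must start with ALL or NUL: {body!r}")
    # Anything left over after removing valid tokens is a syntax error.
    if TOKEN_RE.sub("", rest):
        raise ValueError(f"unexpected characters in: {body!r}")

    added, removed, restricted = [], [], {}
    current = None  # Attribute that the next '#' codes belong to
    for op, code in TOKEN_RE.findall(rest):
        if op == "+":
            added.append(code)
            current = None
        elif op == "-":
            removed.append(code)
            current = None
        elif op == "@":
            restricted[code] = []
            current = code
        else:  # '#'
            if current is None:
                raise ValueError(f"'#{code}' does not follow an '@' Attribute")
            restricted[current].append(code)
    return {"base": base, "added": added, "removed": removed,
            "restricted": restricted}


first = parse_profile_body("ALL@IPS#PSS#PSF")
second = parse_profile_body("NUL+TXO+AVM@IPS#PSF#PSG")
```

Here `first["restricted"]` is `{"IPS": ["PSS", "PSF"]}`, while `second` has `added` equal to `["TXO", "AVM"]` and `restricted` equal to `{"IPS": ["PSF", "PSG"]}`.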

AI Module Profiles is another element of the AI application infrastructure that MPAI is building with its standards. Read the AI Module Profiles standard for an in-depth understanding. Anybody can comment on the draft by sending an email to the MPAI secretariat by 2024/05/08T23:59. MPAI will consider each comment received for possible inclusion in the final version of MPAI-PRF.