The concept of virtual reality is now well established, with the metaverse concept as an important variant. Accordingly, MPAI has established a related standard, the MPAI Metaverse Model – Technologies (MMM-TEC) standard. However, standards for the contents of an MPAI metaverse instance (M-Instance) are still in progress. This document introduces the current status of these efforts and invites participation.

The contents include Processes representing entities with agency, called Users, and other entities lacking agency – essentially, various things populating an M-Instance – called Items.

Some Users represent humans. These may be directly operated by humans (and are called H-Users), or may have a high degree of operational autonomy (and are called A-Users, or informally, agents). Both types may be rendered as avatars called Personae.
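To make the taxonomy concrete, here is a minimal Python sketch of it as a data model. All class and field names (`UserKind`, `User`, `Item`, `Persona`, `model_uri`) are hypothetical illustrations, not data formats defined by MMM-TEC.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class UserKind(Enum):
    """Hypothetical tag for the two kinds of User described above."""
    H_USER = "H-User"  # directly operated by a human
    A_USER = "A-User"  # high degree of operational autonomy (an "agent")


@dataclass
class Persona:
    """Avatar that renders a User (hypothetical minimal field)."""
    model_uri: str


@dataclass
class Item:
    """An entity lacking agency that populates an M-Instance."""
    item_id: str


@dataclass
class User:
    """A Process with agency, optionally rendered as a Persona."""
    user_id: str
    kind: UserKind
    persona: Optional[Persona] = None

    def is_autonomous(self) -> bool:
        return self.kind is UserKind.A_USER


agent = User("u-1", UserKind.A_USER, Persona("avatars/guide.glb"))
human = User("u-2", UserKind.H_USER, Persona("avatars/visitor.glb"))
lamp = Item("i-1")
print(agent.is_autonomous(), human.is_autonomous())  # True False
```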

The MMM-TEC standard specifies technologies enabling Users to perform various Actions on Items (things) in an M-Instance. For example, Users may sense data from the real world or may move Items in the M-Instance, possibly in combination with other Processes. However, MMM-TEC does not yet specify how an A-User decides to perform an Action.

Thus MPAI is developing a new standard covering such decisions: how does an A-User decide what to do to achieve a Goal in an M-Instance? MPAI has assembled numerous relevant technologies, but more are needed. Therefore, the 61st MPAI General Assembly (MPAI-61) has published the Call for Technologies Pursuing Goals in metaverse (MPAI-PGM) – Autonomous User Architecture (AUA). The Call requests interested parties, irrespective of their membership in MPAI, to submit responses that may enable MPAI to develop a robust A-User Architecture standard attractive to implementers and users.

The planned standard’s scope is as follows: PGM-AUA will specify functions and interfaces by which an A-User interacts with another User, either an A-User or an H-User. (Again, the term “User” means “conversational partner in the metaverse”, whether autonomous or driven by a human.) A-Users can capture text and audio-visual information originated by, or surrounding, the User; extract the User State, i.e., snapshots of the User’s cognitive, emotional, and interactional states; produce an appropriate multimodal response, rendered as a speaking Avatar; and move appropriately in the relevant virtual space.

One possible way to model an A-User’s interactions with other Users might be to train a very powerful unitary Large Language Model, able to use spatial and media information. However, because such a model would be unwieldy and difficult to manage, MPAI instead assumes the use of a relatively simple Large Language Model with basic language and reasoning capabilities, called Basic Knowledge in the architecture described below. Spatial, audio-visual, and User description information will be passed to and from this basic model in natural language.

To handle this integration, MPAI relies on its AI Framework (MPAI-AIF) standard, which provides the infrastructure on which an A-User can be founded and to which the necessary technologies can be added. MPAI-AIF enables specification of an AI Workflow (AIW) composed of AI Modules (AIMs). Here, the AIMs jointly represent an A-User in a manner that is modular, i.e., modules can be swapped or updated independently of one another; transparent, i.e., each module plays a clear role and exposes well-defined interfaces; and extensible, i.e., specific competences can be added or replaced as needed.
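The modularity property can be illustrated with a toy workflow in which named AIMs are chained and one of them is swapped without touching the rest. This is a sketch under assumptions: the `AIWorkflow` class, the `AIM` type alias, and the dictionary-in/dictionary-out convention are hypothetical and do not reproduce the MPAI-AIF APIs or data formats.

```python
from typing import Callable, Dict, List

# Hypothetical AIM signature: an AI Module maps named inputs to named outputs.
AIM = Callable[[Dict[str, str]], Dict[str, str]]


class AIWorkflow:
    """Toy AI Workflow: an ordered chain of named, replaceable AIMs."""

    def __init__(self) -> None:
        self._order: List[str] = []
        self._aims: Dict[str, AIM] = {}

    def add(self, name: str, aim: AIM) -> None:
        self._order.append(name)
        self._aims[name] = aim

    def replace(self, name: str, aim: AIM) -> None:
        # Modularity: swap one AIM without touching the others.
        if name not in self._aims:
            raise KeyError(name)
        self._aims[name] = aim

    def run(self, data: Dict[str, str]) -> Dict[str, str]:
        # Each AIM sees everything produced so far and adds its own outputs.
        for name in self._order:
            data = {**data, **self._aims[name](data)}
        return data


aiw = AIWorkflow()
aiw.add("capture", lambda d: {"context": f"scene around {d['user']}"})
aiw.add("respond", lambda d: {"reply": f"I see the {d['context']}"})
print(aiw.run({"user": "Alice"})["reply"])  # I see the scene around Alice
aiw.replace("respond", lambda d: {"reply": "Hello!"})
print(aiw.run({"user": "Alice"})["reply"])  # Hello!
```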

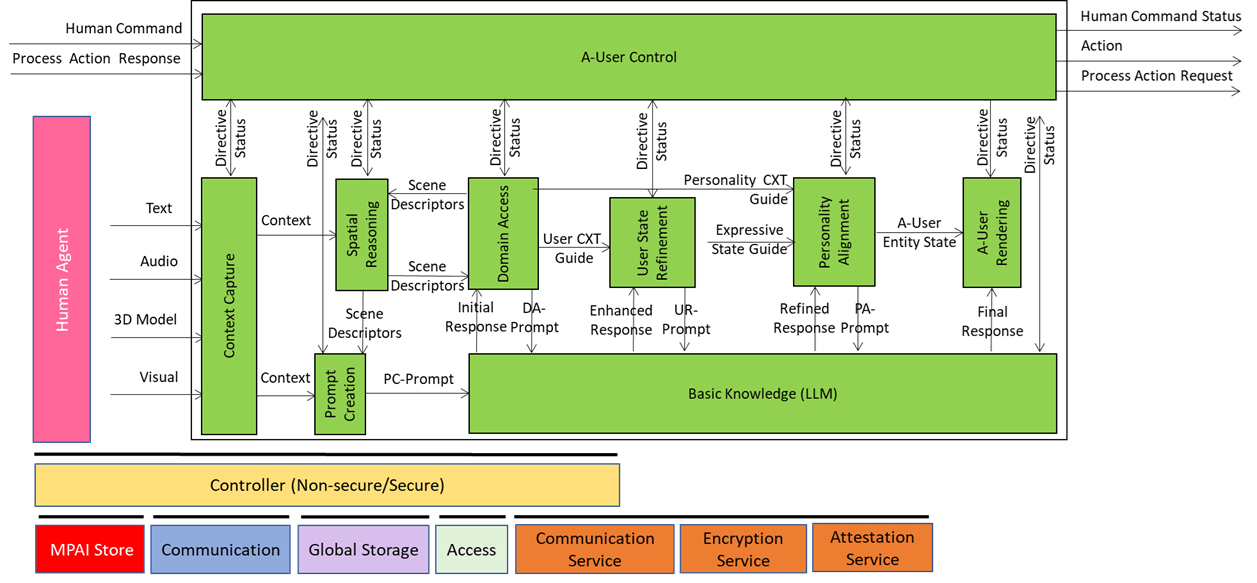

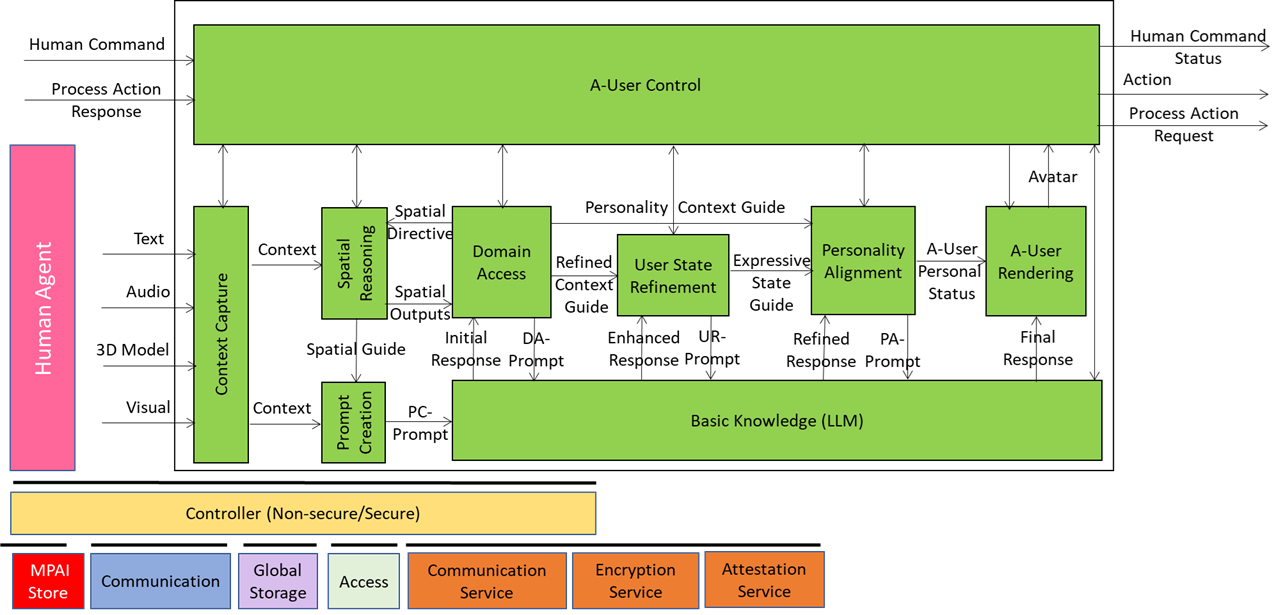

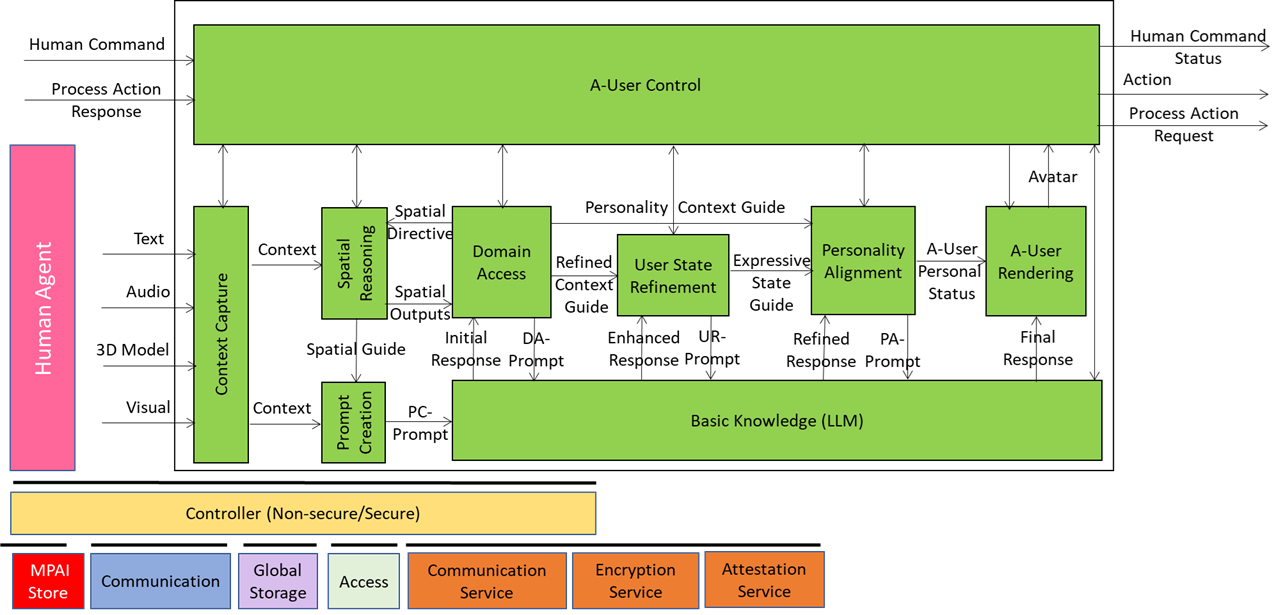

Figure 1 shows a tentative diagram of the A-User architecture.

Figure 1 – The reference model of the Autonomous User Architecture

The model represents a largely autonomous A-User’s (“agent’s”) interactions with another User (A-User or H-User) at a given instant. It would thus be invoked repeatedly for extended interactions.

At a high level, we see an executive element (A-User Control), which can receive as input a human command or the response to some Action, and which delivers as output its status in response to that command, any related Action, and any request that it may itself issue.

NOTE: While an A-User is defined as a relatively autonomous Process, a human may take over or modify its operation via the A-User Control.

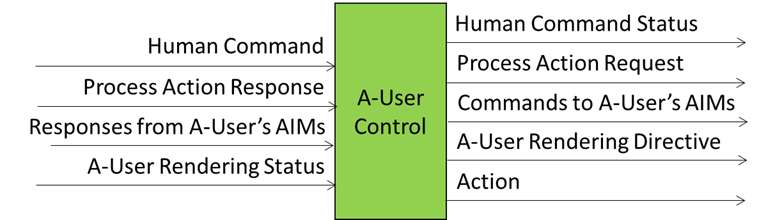

More formally, the A-User Control AIM drives A-User operation by controlling how the A-User interacts with the environment and performs Actions and Process Actions based on the Rights it holds and the M-Instance Rules. It does so by:

- Performing or requesting another Process to perform an Action.

- Controlling the operation of AIMs, in particular A-User Rendering.
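One way to picture the A-User Control's gating of Actions, together with the human take-over mentioned in the NOTE above, is the toy class below. It assumes (this is not in the text) that an Action the A-User holds no Right to perform is delegated to another Process, and it reduces M-Instance Rules to a set of forbidden Action names; `AUserControl`, `act`, and `on_human_command` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class AUserControl:
    """Toy executive gating Actions by Rights and (simplified) M-Instance Rules.

    Hypothetical assumption: when the A-User holds no Right for an Action,
    it asks another Process to perform it.
    """
    rights: Set[str]
    forbidden_by_rules: Set[str] = field(default_factory=set)
    human_in_control: bool = False
    performed: List[Tuple[str, str]] = field(default_factory=list)

    def act(self, action: str, target: str) -> str:
        if self.human_in_control:
            return "suspended: a human has taken over"
        if action in self.forbidden_by_rules:
            return f"refused: {action} not allowed by M-Instance Rules"
        if action in self.rights:
            self.performed.append((action, target))
            return f"performed {action} on {target}"
        return f"requested another Process to perform {action} on {target}"

    def on_human_command(self, command: str) -> str:
        """Handle a Human Command and return its Human Command Status."""
        if command == "take-over":
            self.human_in_control = True
        elif command == "release":
            self.human_in_control = False
        else:
            return f"rejected: unknown command {command!r}"
        return f"accepted: {command}"


ctrl = AUserControl(rights={"MM-Move"}, forbidden_by_rules={"MM-Capture"})
print(ctrl.act("MM-Move", "avatar-1"))     # performed MM-Move on avatar-1
print(ctrl.act("MM-Add", "avatar-2"))      # requested another Process to perform MM-Add on avatar-2
print(ctrl.on_human_command("take-over"))  # accepted: take-over
print(ctrl.act("MM-Move", "avatar-1"))     # suspended: a human has taken over
```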

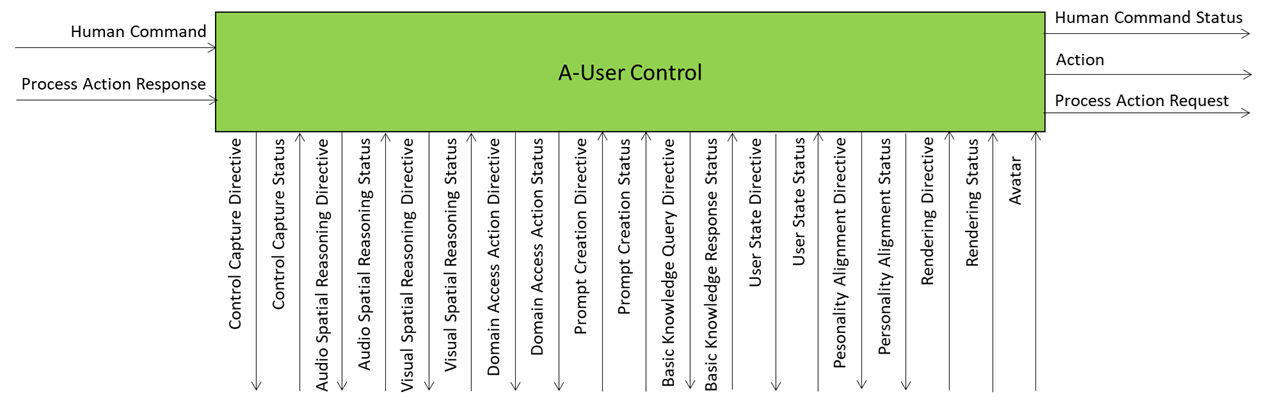

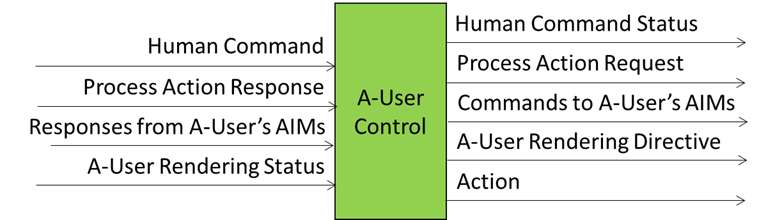

The responsible human may take over or modify the operation of the A-User Control by issuing Human Commands. Figure 2 summarises the input and output data of the A-User Control AIM.

Figure 2 – Simplified view of the Reference Model of A-User Control

A Human Command received from a human generates a Human Command Status in response. A Process Action Request sent to a Process, which may be another User, generates a Process Action Response. Various types of Commands (called Directives) sent to the A-User AI Modules (AIMs) generate responses (called Statuses). The figure singles out the A-User Rendering Directives issued to the A-User Rendering AIM, which generates a response typically including a Speaking Avatar that the A-User Control AIM will MM-Add or MM-Move in the metaverse. The complete Reference Model of A-User Control is available separately.
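Each message listed above is paired with a specific response type. A hypothetical correlation helper makes this pairing explicit by rejecting a response of the wrong type; `Correlator`, `Message`, and the integer message IDs are illustrative assumptions, not part of the Call.

```python
from dataclasses import dataclass
from itertools import count
from typing import Dict

# Request kind -> response kind, as listed in the text.
RESPONSE_KIND: Dict[str, str] = {
    "Human Command": "Human Command Status",
    "Process Action Request": "Process Action Response",
    "Directive": "Status",
}


@dataclass(frozen=True)
class Message:
    msg_id: int
    kind: str
    payload: str


class Correlator:
    """Hypothetical helper pairing each request with the response it generates."""

    def __init__(self) -> None:
        self._ids = count(1)
        self._pending: Dict[int, Message] = {}

    def open(self, kind: str, payload: str) -> Message:
        if kind not in RESPONSE_KIND:
            raise ValueError(f"unknown request kind: {kind}")
        msg = Message(next(self._ids), kind, payload)
        self._pending[msg.msg_id] = msg
        return msg

    def close(self, msg_id: int, kind: str) -> Message:
        request = self._pending.get(msg_id)
        if request is None:
            raise KeyError(f"no pending request {msg_id}")
        expected = RESPONSE_KIND[request.kind]
        if kind != expected:
            raise ValueError(f"expected {expected}, got {kind}")
        del self._pending[msg_id]  # only remove once the pairing is valid
        return request

    def outstanding(self) -> int:
        return len(self._pending)


c = Correlator()
req = c.open("Directive", "render speaking Avatar")
c.close(req.msg_id, "Status")
print(c.outstanding())  # 0
```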

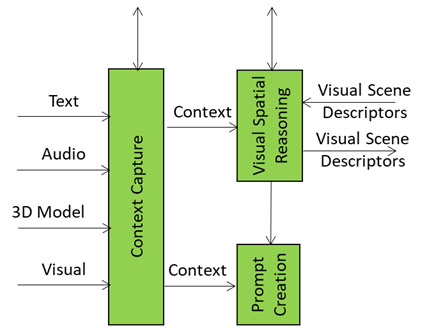

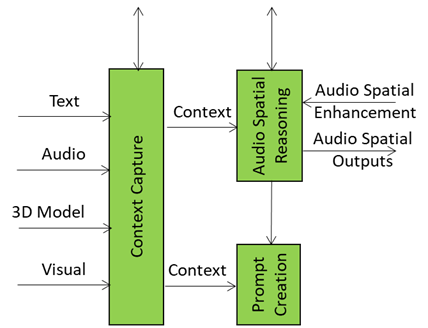

The Context Capture AIM, prompted by the A-User Control, perceives a particular location of the M-Instance – called M-Location – where the User, i.e., the A-User’s conversation partner, has MM-Added its Avatar. In the metaverse, the A-User perceives by issuing an MM-Capture Process Action Request. The captured multimodal data is processed into Context, a time-stamped snapshot of the M-Location composed of:

- Audio and Visual Scene Descriptors describing the spatial content.

- Entity State, describing the User’s cognitive, emotional, and attentional posture.

Thus, Context represents the A-User’s initial understanding of the User and of the M-Location in which the User is embedded.
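Context can be modelled as a plain record. In the sketch below the captured data is a hypothetical dictionary standing in for the result of an MM-Capture request, and the Entity State is assumed to have already been extracted by an upstream analyser; all names and the coordinate convention are illustrative.

```python
import time
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SceneElement:
    name: str
    position: Vec3  # hypothetical M-Location coordinates


@dataclass
class EntityState:
    cognitive: str
    emotional: str
    attentional: str


@dataclass
class Context:
    """Time-stamped snapshot of an M-Location (field names hypothetical)."""
    timestamp: float
    m_location: str
    audio_scene: List[SceneElement]
    visual_scene: List[SceneElement]
    entity_state: EntityState


def build_context(m_location: str, captured: Dict) -> Context:
    """Turn data from an MM-Capture request (format assumed) into a Context."""
    return Context(
        timestamp=time.time(),
        m_location=m_location,
        audio_scene=[SceneElement(n, tuple(p)) for n, p in captured["audio"].items()],
        visual_scene=[SceneElement(n, tuple(p)) for n, p in captured["visual"].items()],
        entity_state=EntityState(**captured["user_state"]),
    )


ctx = build_context("plaza-3", {
    "audio": {"user_voice": [1.0, 0.0, 1.6]},
    "visual": {"user_avatar": [1.0, 0.0, 0.0], "table": [2.0, 1.0, 0.0]},
    "user_state": {"cognitive": "curious", "emotional": "calm",
                   "attentional": "looking at the table"},
})
print(len(ctx.visual_scene), ctx.entity_state.emotional)  # 2 calm
```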

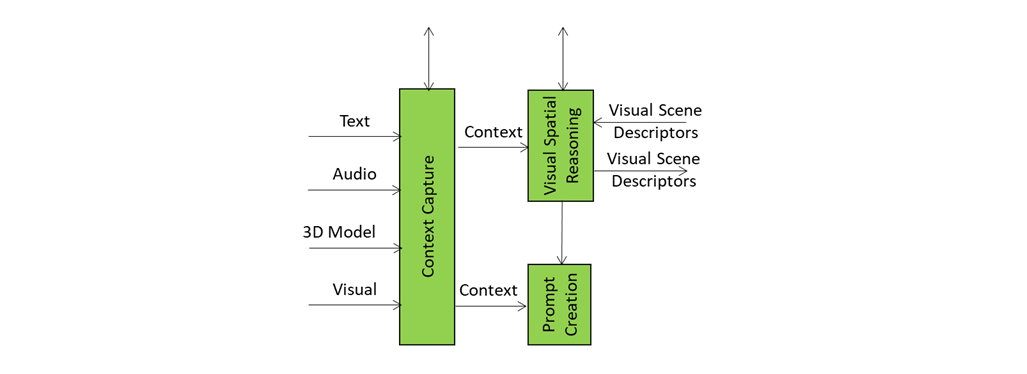

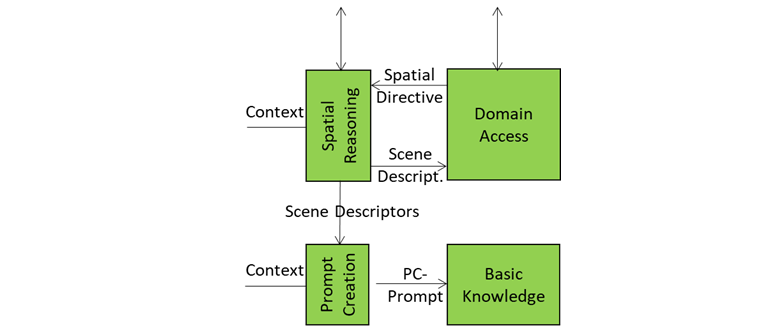

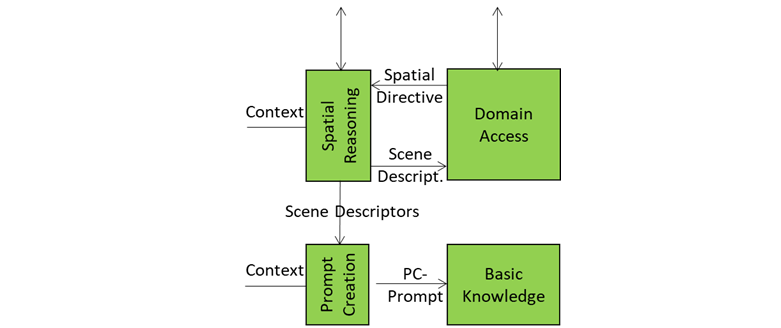

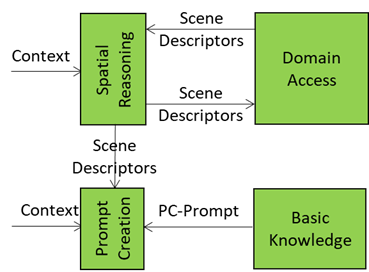

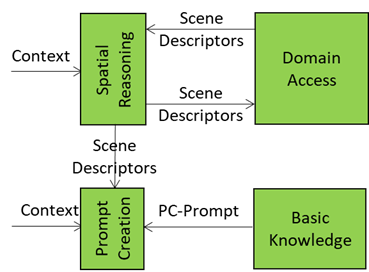

The Spatial Reasoning AIM – composed of two AIMs, Audio Spatial Reasoning and Visual Spatial Reasoning – analyses Context and sends an enhanced version of the Audio and Visual Scene Descriptors, containing audio source relevance, directionality, and proximity (Audio) and object relevance, proximity, referent resolutions, and affordance (Visual) to

- The Domain Access AIM, to obtain additional domain-specific information. Domain Access responds with further enhanced Audio and Visual Scene Descriptors; and

- The Prompt Creation AIM, which sends Basic Knowledge, a basic LLM, a PC-Prompt integrating:

  - User Text and Entity State (from Context Capture).

  - Enhanced Audio and Visual Scene Descriptors (from Spatial Reasoning).

This is depicted in Figure 3.

Figure 3 – Basic Knowledge receives PC-Prompt from Prompt Creation
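The Spatial Reasoning and Prompt Creation steps can be sketched as follows: the first function adds proximity (Euclidean distance), directionality (azimuth in the horizontal plane), and a crude relevance score to audio sources; the second assembles a natural-language PC-Prompt. The relevance heuristic (inverse distance), the x-y horizontal-plane convention, and all function names are assumptions made for illustration; `math.dist` requires Python 3.8 or later.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AudioSource:
    name: str
    position: Vec3  # assumption: x-y is the horizontal plane, z is height


def enhance_audio(sources: List[AudioSource], listener: Vec3) -> List[Dict]:
    """Add proximity, directionality and a crude relevance score to each source."""
    enhanced = []
    for s in sources:
        distance = math.dist(s.position, listener)
        dx = s.position[0] - listener[0]
        dy = s.position[1] - listener[1]
        enhanced.append({
            "name": s.name,
            "distance_m": round(distance, 2),
            "azimuth_deg": round(math.degrees(math.atan2(dy, dx)), 1),
            # Hypothetical heuristic: nearer sources are more relevant.
            "relevance": round(1.0 / (1.0 + distance), 2),
        })
    return sorted(enhanced, key=lambda e: e["relevance"], reverse=True)


def pc_prompt(user_text: str, entity_state: str,
              audio: List[Dict], visual: List[str]) -> str:
    """Assemble a natural-language PC-Prompt for the basic LLM."""
    lines = [f'The User said: "{user_text}"', f"User state: {entity_state}", "Audio scene:"]
    lines += [f"- {a['name']}: {a['distance_m']} m away, azimuth {a['azimuth_deg']} deg"
              for a in audio]
    lines += ["Visual scene:"] + [f"- {v}" for v in visual]
    lines.append("Reply to the User, taking this context into account.")
    return "\n".join(lines)


audio = enhance_audio([AudioSource("fountain", (0.0, -10.0, 0.0)),
                       AudioSource("user_voice", (3.0, 4.0, 0.0))], (0.0, 0.0, 0.0))
print(pc_prompt("What is that sound?", "curious, calm", audio,
                ["table, 2.2 m ahead, can support objects"]))
```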

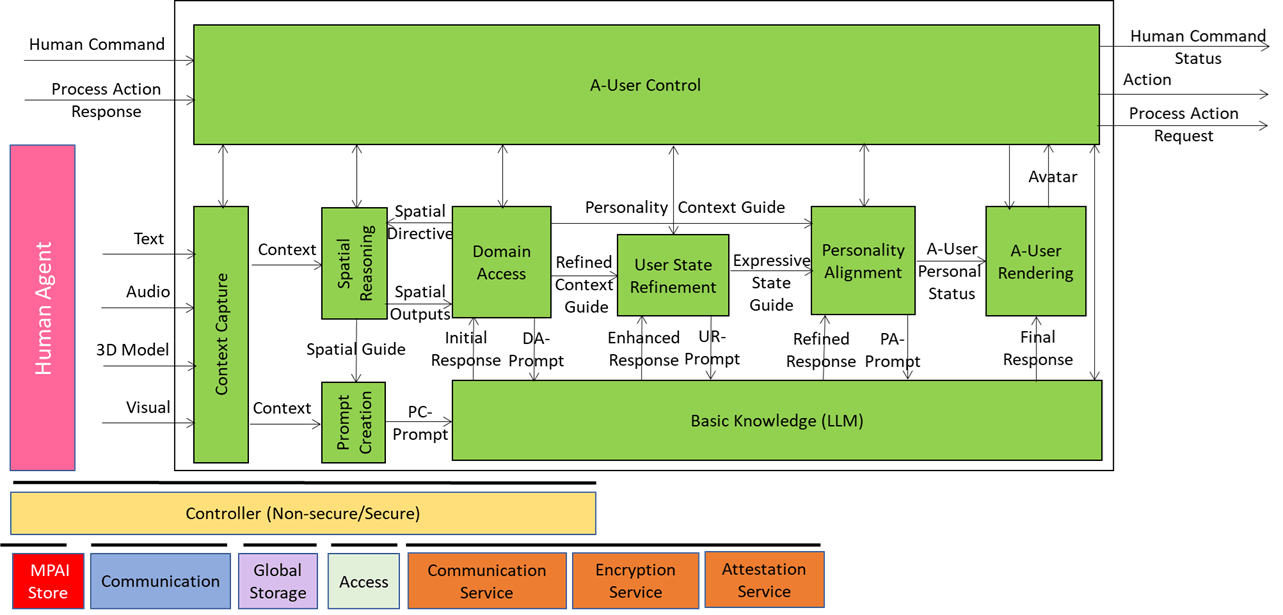

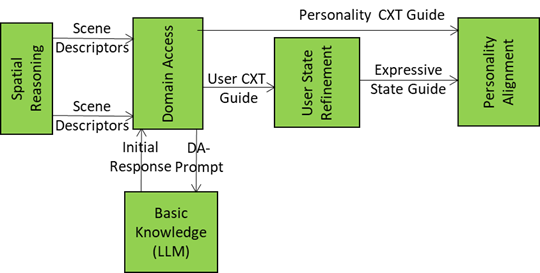

Basic Knowledge sends its Initial Response to the PC-Prompt to Domain Access, which:

- Processes the Audio and Visual Scene Descriptors and the Initial Response, accessing domain-specific models, ontologies, or M-Instance services to retrieve:

  - Scene-specific object roles (e.g., “this is a surgical tool”).

  - Task-specific constraints (e.g., “only authorised Users may interact”).

  - Semantic affordances (e.g., “this object can be grasped”).

- Produces and sends four flows:

  - Enhanced Audio and Visual Scene Descriptors to Spatial Reasoning, to improve its scene understanding.

  - User Context Guide to User State Refinement, enabling it to update the User’s Entity State.

  - Personality Context Guide to Personality Alignment.

  - DA-Prompt, a new prompt to Basic Knowledge including initial reasoning and spatial semantics.

Figure 4 – Domain Access serves Spatial Reasoning, Basic Knowledge, User State Refinement, and Personality Alignment
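The Domain Access step can be illustrated with a lookup table standing in for domain-specific models or ontologies. The sketch returns the four flows as a dictionary; the `ONTOLOGY` contents, the flow formats, and the function name are hypothetical.

```python
from typing import Dict, List

# Hypothetical domain knowledge; a real Domain Access AIM would query
# domain-specific models, ontologies, or M-Instance services instead.
ONTOLOGY: Dict[str, Dict[str, str]] = {
    "scalpel": {"domain": "surgical", "role": "surgical tool",
                "constraint": "only authorised Users may interact",
                "affordance": "can be grasped"},
    "monitor": {"domain": "surgical", "role": "vital-signs display",
                "constraint": "read-only", "affordance": "can be looked at"},
}


def domain_access(objects: List[str], initial_response: str) -> Dict[str, object]:
    """Return the four flows produced by Domain Access (formats are hypothetical)."""
    known = [o for o in objects if o in ONTOLOGY]
    enhanced = [f"{o}: {ONTOLOGY[o]['role']}; {ONTOLOGY[o]['affordance']}; "
                f"{ONTOLOGY[o]['constraint']}" for o in known]
    # Fall back to a "general" setting when no object is known.
    setting = ", ".join(sorted({ONTOLOGY[o]["domain"] for o in known}) or ["general"])
    da_prompt = "\n".join([f"Your initial answer was: {initial_response}",
                           "Domain facts about the scene:"]
                          + [f"- {line}" for line in enhanced]
                          + ["Revise your answer so that it respects these facts."])
    return {
        "enhanced_scene_descriptors": enhanced,  # to Spatial Reasoning
        "user_context_guide": f"The User is in a {setting} setting.",  # to User State Refinement
        "personality_context_guide": f"Adopt a register suited to a {setting} setting.",  # to Personality Alignment
        "da_prompt": da_prompt,  # to Basic Knowledge
    }


flows = domain_access(["scalpel", "chair"], "Sure, pick it up.")
print(flows["user_context_guide"])  # The User is in a surgical setting.
```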

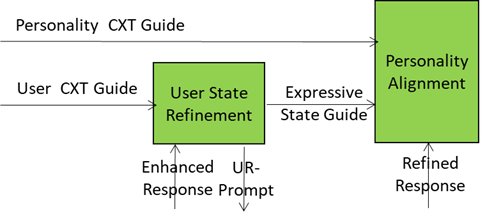

Basic Knowledge produces and sends an Enhanced Response to the User State Refinement AIM.

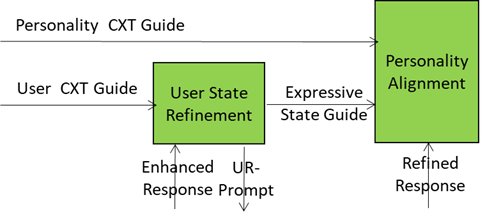

User State Refinement uses the User Context Guide to refine its understanding of the User State, then produces and sends:

- UR-Prompt to Basic Knowledge.

- Expressive State Guide to Personality Alignment, giving the A-User the means to adopt a Personality congruent with the User’s Entity State.

Basic Knowledge produces and sends a Refined Response to Personality Alignment.

This is depicted in Figure 5.

Figure 5 – User State Refinement feeds Personality Alignment
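User State Refinement can be sketched as a rule-based reinterpretation of the Entity State in light of the User Context Guide. The rule tables, the formats of the UR-Prompt and Expressive State Guide, and all names below are hypothetical placeholders.

```python
from dataclasses import dataclass, replace
from typing import Dict, Tuple


@dataclass(frozen=True)
class EntityState:
    cognitive: str
    emotional: str
    attentional: str


# Hypothetical rules: how a domain setting reinterprets an emotional cue.
REINTERPRET: Dict[Tuple[str, str], str] = {
    ("surgical", "tense"): "focused under pressure",
    ("game", "tense"): "excited",
}
# Hypothetical tone congruent with each refined emotional state.
CONGRUENT_TONE: Dict[str, str] = {
    "focused under pressure": "calm and concise",
    "excited": "playful",
}


def refine_user_state(state: EntityState, user_context_guide: str,
                      enhanced_response: str) -> Tuple[EntityState, str, Dict[str, str]]:
    """Return the refined Entity State, a UR-Prompt and an Expressive State Guide."""
    refined = state
    for (setting, cue), meaning in REINTERPRET.items():
        if setting in user_context_guide and state.emotional == cue:
            refined = replace(state, emotional=meaning)
    ur_prompt = (f"{enhanced_response}\n"
                 f"The User seems {refined.emotional} and is {refined.attentional}. "
                 "Adapt your answer to this state.")
    guide = {"user_emotion": refined.emotional,
             "suggested_tone": CONGRUENT_TONE.get(refined.emotional, "neutral")}
    return refined, ur_prompt, guide


state = EntityState("unsure", "tense", "looking at the scalpel")
refined, ur_prompt, guide = refine_user_state(
    state, "The User is in a surgical setting.", "Hold it by the handle.")
print(refined.emotional, "|", guide["suggested_tone"])
# focused under pressure | calm and concise
```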

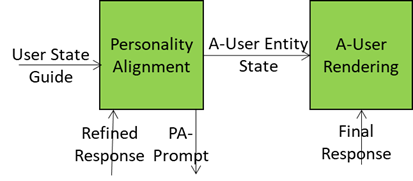

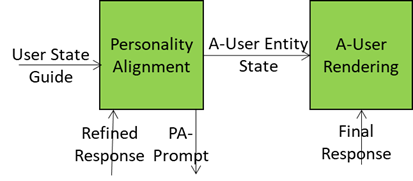

Personality Alignment:

- Selects a Personality, based on the Refined Response and the Expressive State Guide, that conveys elements such as Expressivity (e.g., Tone, Tempo, Face, Gesture), Behavioural Traits (e.g., verbosity, humour, emotion), and Type of role (e.g., assistant, mentor, negotiator, entertainer).

- Formulates and sends:

  - An A-User Entity State reflecting the Personality to A-User Rendering.

  - A PA-Prompt to Basic Knowledge reflecting the intended expressivity (speech modulation, face, and gesture) and synchronisation cues across modalities.

Basic Knowledge sends a Final Response that conveys semantic content, contextual integration, expressive framing, and personality coherence.

This is depicted in Figure 6.

Figure 6 – Personality Alignment feeds A-User Rendering
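Personality Alignment can be sketched as selection from a small catalogue of Personalities, keyed on the tone suggested by the Expressive State Guide. For brevity this sketch ignores the Refined Response when selecting; the catalogue, the matching rule, and the prompt wording are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Personality:
    role: str        # Type of role
    tone: str        # Expressivity
    tempo: str       # Expressivity
    verbosity: str   # Behavioural Trait
    humour: bool     # Behavioural Trait


# Hypothetical catalogue; the last entry is the fallback.
CATALOGUE: List[Personality] = [
    Personality("mentor", "calm and concise", "slow", "low", False),
    Personality("entertainer", "playful", "fast", "high", True),
    Personality("assistant", "neutral", "medium", "medium", False),
]


def select_personality(guide: Dict[str, str]) -> Personality:
    """Pick the first Personality whose tone matches the Expressive State Guide."""
    for p in CATALOGUE:
        if p.tone == guide.get("suggested_tone"):
            return p
    return CATALOGUE[-1]


def align(guide: Dict[str, str], refined_response: str) -> Tuple[Dict[str, str], str]:
    """Return an A-User Entity State and a PA-Prompt reflecting the Personality."""
    p = select_personality(guide)
    a_user_state = {"role": p.role, "tone": p.tone, "tempo": p.tempo}
    humour = "light humour" if p.humour else "no humour"
    pa_prompt = (f"{refined_response}\n"
                 f"Rephrase as a {p.role}: {p.tone} tone, {p.tempo} tempo, "
                 f"{p.verbosity} verbosity, {humour}. "
                 "Mark where gestures and facial expressions should accompany the speech.")
    return a_user_state, pa_prompt


state, prompt = align({"suggested_tone": "calm and concise"}, "Hold it by the handle.")
print(state["role"])  # mentor
```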

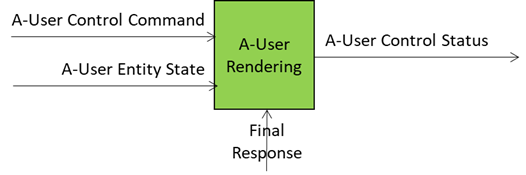

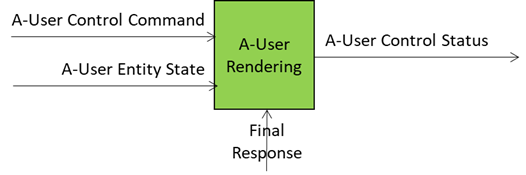

A-User Rendering uses the Final Response, the A-User Entity State, and the A-User Rendering Directives from A-User Control to synthesise and shape a Speaking Avatar, which it returns to A-User Control. This is depicted in Figure 7.

Figure 7 – The result of the Autonomous User processing is fed to A-User Control
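Taken together, the steps above form four prompt/response rounds with Basic Knowledge (PC-Prompt to Initial Response, DA-Prompt to Enhanced Response, UR-Prompt to Refined Response, PA-Prompt to Final Response) before rendering. The sketch below chains them with a stub in place of the LLM; the chain table, prompt format, and `run_turn` are hypothetical, and A-User Rendering is reduced to passing the Final Response through.

```python
from typing import Callable, Dict, List, Tuple

# (AIM issuing the prompt, prompt name, name of Basic Knowledge's reply)
PROMPT_CHAIN: List[Tuple[str, str, str]] = [
    ("Prompt Creation", "PC-Prompt", "Initial Response"),
    ("Domain Access", "DA-Prompt", "Enhanced Response"),
    ("User State Refinement", "UR-Prompt", "Refined Response"),
    ("Personality Alignment", "PA-Prompt", "Final Response"),
]


def run_turn(basic_knowledge: Callable[[str], str], user_text: str,
             contributions: Dict[str, str]) -> Dict[str, str]:
    """One A-User turn: four prompt/response rounds, then (stub) rendering."""
    responses: Dict[str, str] = {}
    previous = user_text
    for aim, prompt_name, response_name in PROMPT_CHAIN:
        # Each AIM combines its own contribution with the previous reply.
        prompt = f"[{prompt_name}] {contributions.get(aim, '')} | {previous}"
        previous = basic_knowledge(prompt)
        responses[response_name] = previous
    # Stub for A-User Rendering: the Final Response becomes the text spoken
    # by the Avatar that A-User Control would then MM-Add or MM-Move.
    responses["Speaking Avatar text"] = responses["Final Response"]
    return responses


calls: List[str] = []


def stub_llm(prompt: str) -> str:
    """Stand-in for Basic Knowledge: records the prompt, returns a tag."""
    calls.append(prompt)
    return f"reply-{len(calls)}"


out = run_turn(stub_llm, "Can I touch this?",
               {"Domain Access": "scalpel: only authorised Users may interact"})
print(out["Final Response"], len(calls))  # reply-4 4
```

Because the model describes one instant of interaction, an extended conversation would call `run_turn` repeatedly, once per User utterance.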

Extended Call for Technologies

The complexity of the MMM-TEC model has prompted MPAI to extend its usual practice for Calls for Technologies. In addition to the usual Call for Technologies, Use Cases and Functional Requirements, Framework Licence, and Template for Responses, the Call also refers to a Tentative Technical Specification, a document drafted as if it were an actual Technical Specification. Respondents to the Call are free to comment on, change, or extend the Tentative Technical Specification or to make any other proposals judged relevant to the Call.

Anyone, irrespective of MPAI membership status, may respond to the Call. Responses shall reach the MPAI Secretariat by 2026/01/21T23:59.

Appropriate MPAI working groups will thoroughly review the Responses and retain those deemed appropriate for the future PGM-AUA standard. MPAI may select suitable technologies from those submitted in Responses, but is not obligated to select any proposal. Respondents will be encouraged to join MPAI. If Respondents whose Responses are accepted in full or in part do not join MPAI, MPAI will discontinue consideration of their proposed technologies.