MPAI publishes new Standard, Reference Software, and Conformance Testing Specification

Geneva, Switzerland – 12 June 2024. MPAI – Moving Picture, Audio and Data Coding by Artificial Intelligence – the international, non-profit, and unaffiliated organisation developing AI-based data coding standards, has concluded its 45th General Assembly (MPAI-45) by approving for publication the new AI Module Profiles Technical Specification, the Neural Network Watermarking Reference Software, and the Multimodal Conversation Conformance Testing Specification.

Technical Specification: AI Module Profiles (MPAI-PRF) V1.0 is an important addition to the MPAI architecture because it enables an AI Module to signal its capabilities in terms of input and output data and specific functionalities.

Reference Software Specification: Neural Network Watermarking (MPAI-NNW) V1.2 makes available to the community software implementing the functionalities of the Neural Network Watermarking Standard when implemented in an AI Framework and using limited-capability Microcontroller Units.

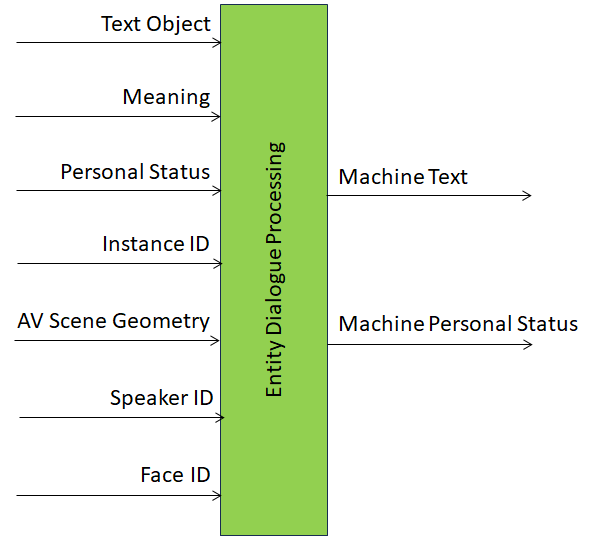

Conformance Testing Specification: Multimodal Conversation (MPAI-MMC) V2.1 publishes methods and data sets that enable a developer or a user to verify an implementation's claim to conform with the specification of the Conversation with Emotion, Multimodal Question Answering, and Unidirectional Speech Translation AI Workflows.

At its previous meeting, MPAI published three Calls for Technologies on Six Degrees of Freedom Audio, Connected Autonomous Vehicle – Technologies, and MPAI Metaverse Model – Technologies. Interested parties should check the mentioned links for updates, as the submission deadline has not yet been reached.

MPAI is happy to announce that the Institute of Electrical and Electronics Engineers has adopted the companion Connected Autonomous Vehicle – Architecture standard as an IEEE standard identified as 3307-2024.

MPAI is continuing its work plan that involves the following activities:

- AI Framework (MPAI-AIF): developing open-source applications based on the AI Framework.

- AI for Health (MPAI-AIH): developing the specification of a system that enables clients to improve models processing health data and uses federated learning to share the training.

- Context-based Audio Enhancement (CAE-DC): preparing new projects.

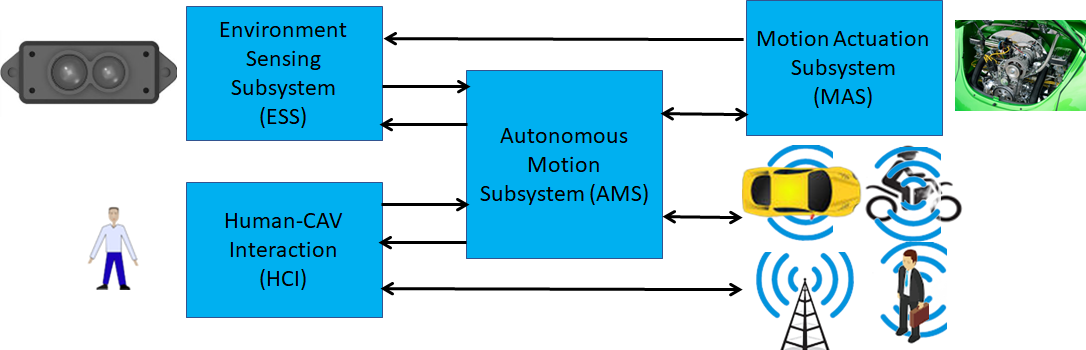

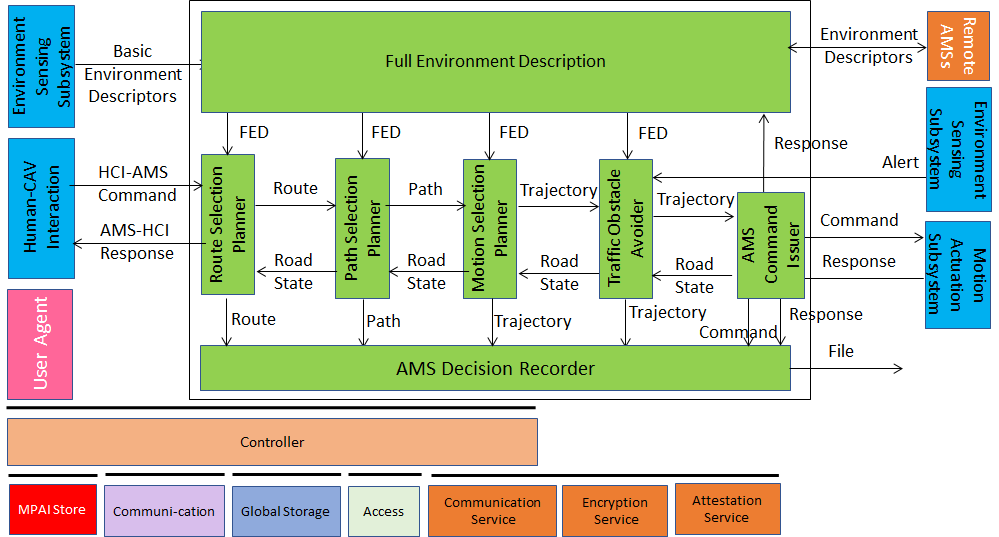

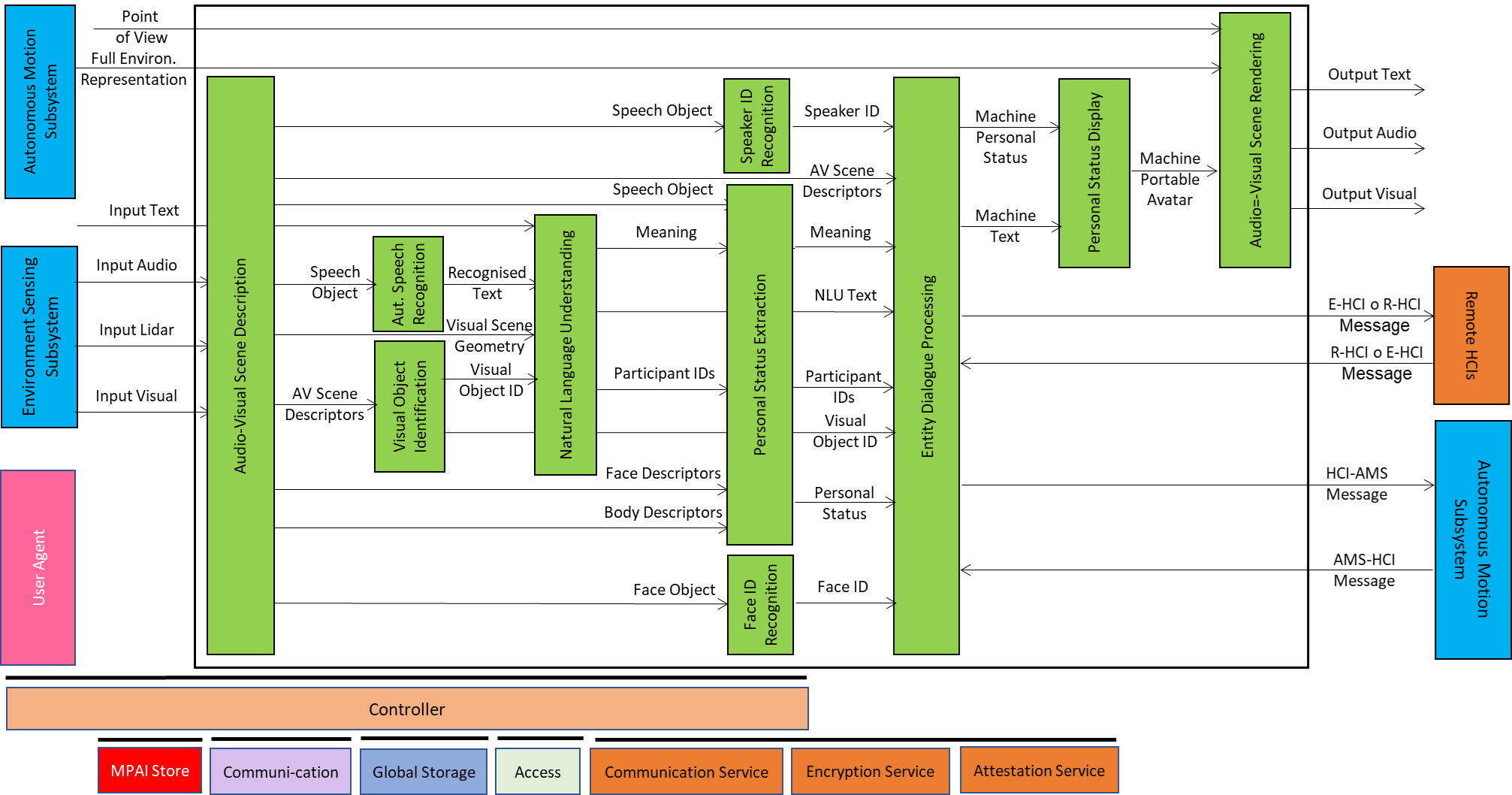

- Connected Autonomous Vehicle (MPAI-CAV): Functional Requirements of the data used by the MPAI-CAV – Architecture standard.

- Compression and Understanding of Industrial Data (MPAI-CUI): preparing an extension to the existing standard that includes support for more corporate risks.

- End-to-End Video Coding (MPAI-EEV): video coding using AI-based End-to-End Video coding.

- AI-Enhanced Video Coding (MPAI-EVC): video coding with AI tools added to existing tools.

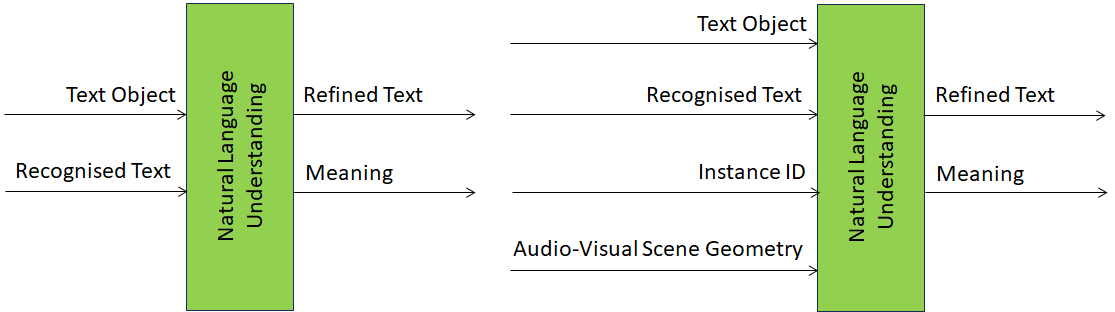

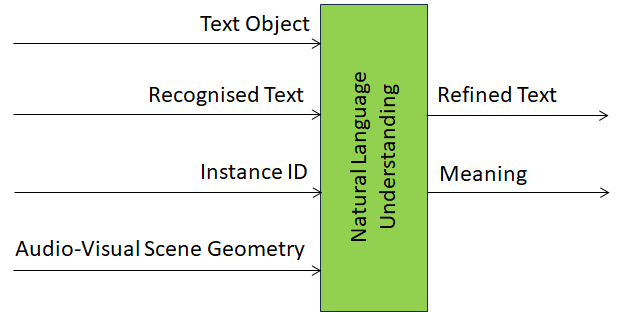

- Human and Machine Communication (MPAI-HMC): developing reference software.

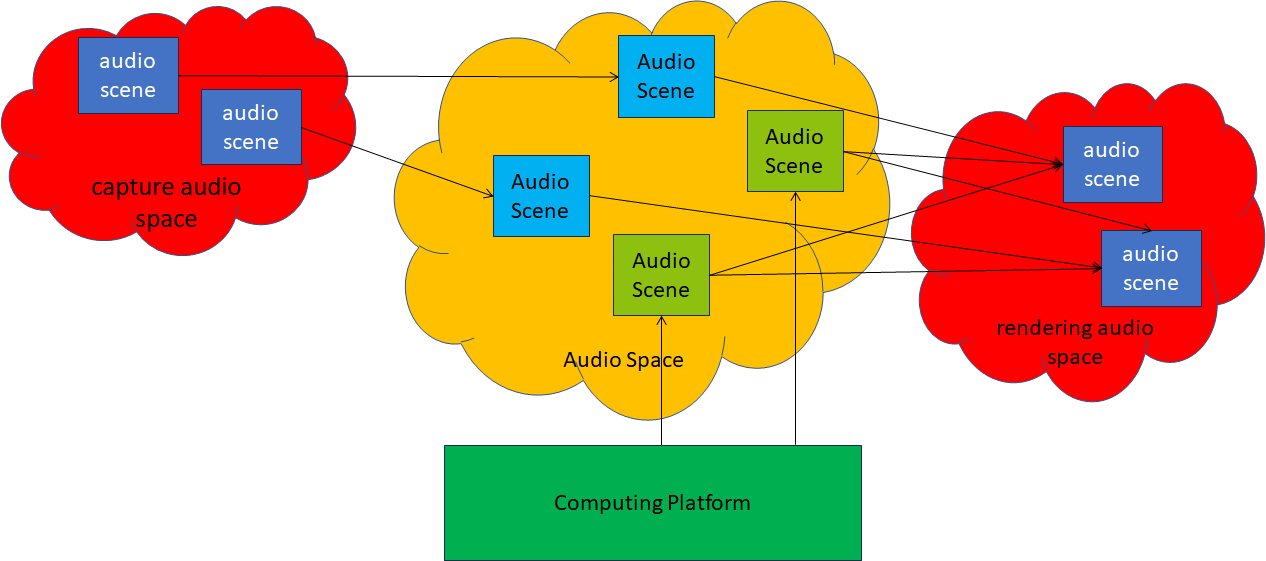

- Multimodal Conversation (MPAI-MMC): developing reference software and exploring new areas.

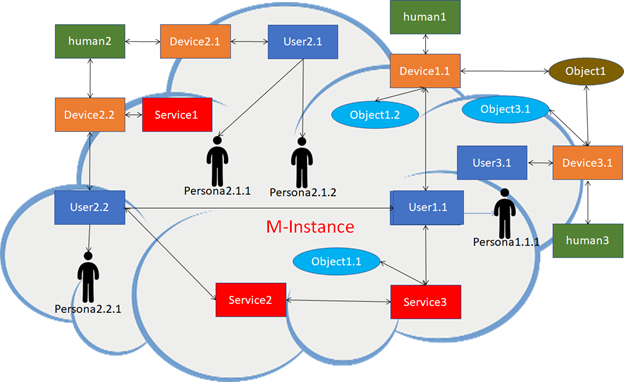

- MPAI Metaverse Model (MPAI-MMM): developing reference software specification and identifying metaverse technologies requiring standards.

- Neural Network Watermarking (MPAI-NNW): developing reference software for enhanced applications.

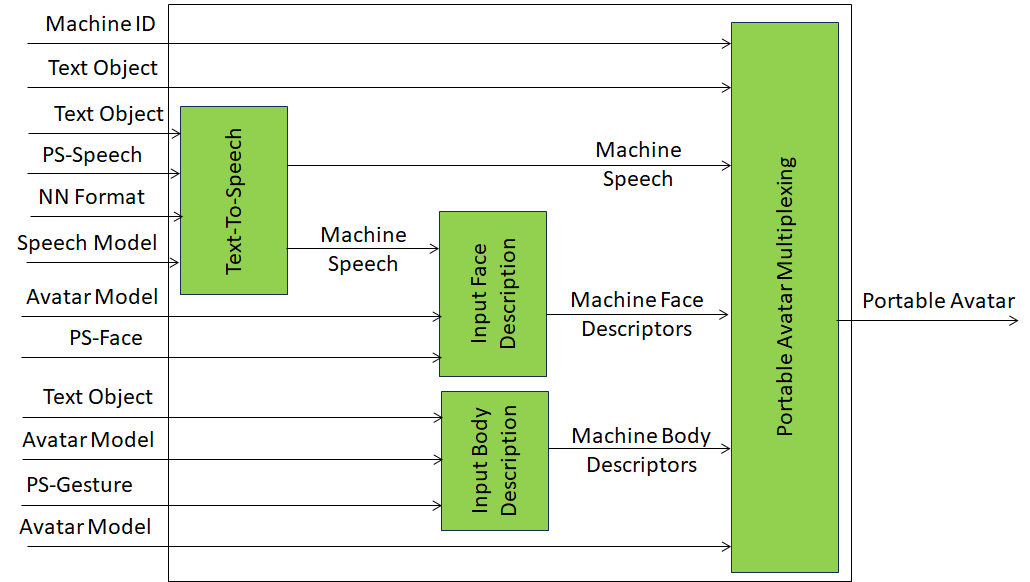

- Portable Avatar Format (MPAI-PAF): developing reference software and conformance testing, and exploring new areas for digital humans.

- AI Module Profiles (MPAI-PRF): to specify which features an AI Module supports.

- Server-based Predictive Multiplayer Gaming (MPAI-SPG): developing a technical report on mitigation of data loss and cheating.

- XR Venues (MPAI-XRV): developing the standard enabling improved development and execution of Live Theatrical Performance.

Legal entities and representatives of academic departments supporting the MPAI mission and able to contribute to the development of standards for the efficient use of data can become MPAI members.

Visit the MPAI website, contact the MPAI secretariat for specific information, subscribe to the MPAI Newsletter, and follow MPAI on social media: LinkedIn, Twitter, Facebook, Instagram, and YouTube.