
Abstract

MPAI has developed a roadmap for Metaverse interoperability. The published Technical Report – MPAI Metaverse Model – Functionalities [1] argues that, because standards for a market as vast as the one expected for the Metaverse are difficult to develop, functionality profiles should be developed. The published draft Technical Report – MPAI Metaverse Model – Functionality Profiles [2] develops a Metaverse functional operation model, applies it to 7 Use Cases, and proposes 4 initial functionality profiles. This document is a concise summary of [2].

1 Introduction

The MPAI Metaverse Model (MPAI-MMM) aims to provide Technical Reports and Technical Specifications that apply to as many kinds of Metaverse instances as possible and enable varied Metaverse implementations to interoperate.

At present, achieving interoperability is difficult because:

- There is no common understanding of what a Metaverse is or should be, in detail.

- There is an abundance of existing and potential Metaverse Use Cases.

- Some independently designed Metaverse implementations are very successful.

- Some important technologies enabling more advanced and even unforeseen forms of the Metaverse may be uncovered in the next several years.

MPAI has developed a roadmap to deal with this challenging situation. The first milestone of the roadmap is based on the idea of collecting the functionalities that potential Users expect the Metaverse to provide, instead of trying to define what the Metaverse is. The first Technical Report of this roadmap [1] includes definitions, makes assumptions for the MPAI-MMM project, identifies sources that can generate functionalities, develops an organised list of commented functionalities, and analyses the main enabling technologies. Reference [3] provides a summary of [1].

Potential Metaverse Users with different needs might require different technologies to support these needs. Therefore, an approach that required every M-Instance to interoperate with every other M-Instance would force implementers to take on board technologies that are useless for their needs and potentially costly.

Reference [1] posits that Metaverse standardisation should be based on the notion of Profiles[1] and Levels[2] successfully adopted by digital media standardisation. A Metaverse Standard that includes Profiles and Levels would enable Metaverse developers to use only the technologies they need that are offered by whatever profile is most suitable to them.

The notion of profile can mitigate the impact of having many disparate Metaverse Users with diverse requirements. Unfortunately, that notion cannot be currently implemented because some key technologies are not yet available and at this time it is unclear which technologies, existing or otherwise, will eventually be adopted. To cope with this situation, [2] only targets Functionality Profiles, i.e., profiles that are defined by the functionalities they offer, not by technologies implementing them. Functionality Profiles are not meant to fully address the interoperability problem, but rather to allow a technology-independent definition of profiles based on the functional value they provide rather than on the “influence” of specific technologies.

2 A functional operation model

A Metaverse instance, called an M-Instance, is composed of Processes that interact with Processes inside and outside the M-Instance. An M-Instance interoperates with another M-Instance to the extent that its Processes interoperate.

MPAI has identified 3 classes of Process:

- Users: Processes that represent humans using data from the real world (U-Locations) or autonomous agents (both need not be human-like).

- Devices: Processes interconnecting U-Locations to M-Locations and vice-versa.

- Services: Processes providing Functionalities.

A Process performs, or requests another Process to perform, Actions on Items. An Item is data with attached metadata, possibly including Rights that enable a Process to perform Actions on it. Rights express the ability to perform an Action on an Item.

The names of some Actions are prefixed depending on where the Action is performed:

- MM- if the interaction takes place in the Metaverse.

- UM- if the interaction is from the Universe to the Metaverse (the Universe is the real world).

- MU- if the interaction is from the Metaverse to the Universe.
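The prefix convention can be illustrated with a minimal Python sketch. The function name `interaction_scope` and the returned labels are assumptions made for this illustration and are not defined in [2].

```python
def interaction_scope(action: str) -> str:
    """Classify an Action name by its prefix (labels are illustrative only)."""
    prefixes = {
        "MM-": "within the Metaverse",
        "UM-": "from the Universe to the Metaverse",
        "MU-": "from the Metaverse to the Universe",
    }
    for prefix, scope in prefixes.items():
        if action.startswith(prefix):
            return scope
    return "unprefixed"  # e.g. Author, Register, Transact


print(interaction_scope("MM-Embed"))    # within the Metaverse
print(interaction_scope("UM-Capture"))  # from the Universe to the Metaverse
print(interaction_scope("Transact"))    # unprefixed
```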

An interaction of Process #1 with Process #2 unfolds as follows:

- Process #1 requests Process #2 to perform Actions on Items.

- Process #2 executes the request if Process #1 has Rights to call Process #2.

- Process #2 responds to Process #1’s request.

Note that Processes and Items need not be in the same M-Instance. However, the possibility of having Actions performed on Items may be more limited if they are not in the same M-Instance.
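The three-step exchange between two Processes can be sketched as follows. This is a minimal illustration, not the mechanism specified in [2]: the `Process` class, the representation of Rights as a set of permitted caller IDs, and the dictionary-shaped response are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Process:
    """A Process that accepts requests only from callers holding Rights."""
    process_id: str
    # Assumed representation: IDs of the Processes that have Rights to call this one.
    allowed_callers: set[str] = field(default_factory=set)

    def handle(self, caller_id: str, action: str, item_id: str) -> dict:
        # Step 2: execute only if the caller has Rights to call this Process.
        if caller_id not in self.allowed_callers:
            return {"status": "Error", "reason": "Rights missing or incomplete"}
        # Step 3: respond to the request (the Action itself is not modelled here).
        return {"status": "Success", "action": action, "out_item": item_id}


def request(source: Process, destination: Process, action: str, item_id: str) -> dict:
    # Step 1: the source Process asks the destination to perform an Action on an Item.
    return destination.handle(source.process_id, action, item_id)


user = Process("user-1")
service = Process("service-1", allowed_callers={"user-1"})
stranger = Process("user-2")

print(request(user, service, "MM-Add", "item-42")["status"])      # Success
print(request(stranger, service, "MM-Add", "item-42")["status"])  # Error
```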

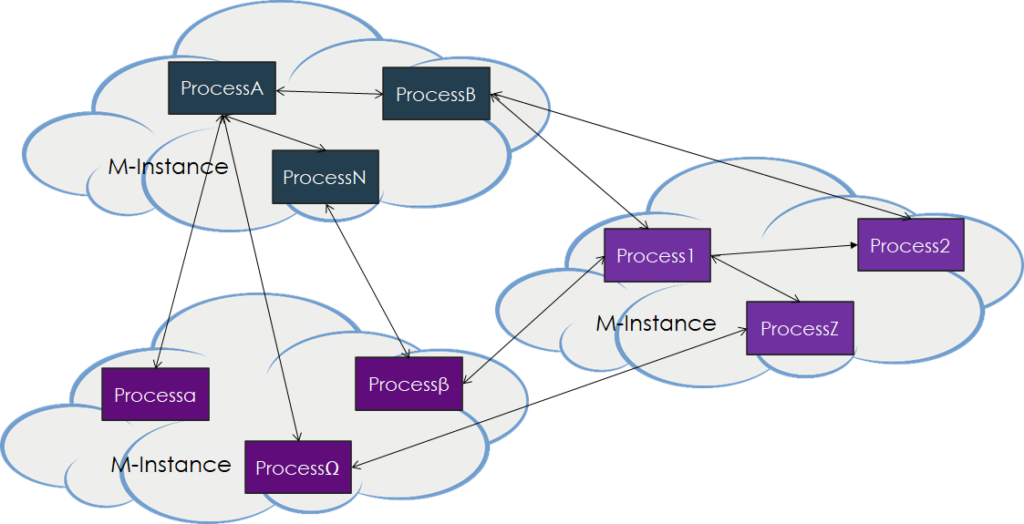

Figure 1 depicts a Metaverse configuration with interacting Processes.

Figure 1- A Metaverse configuration

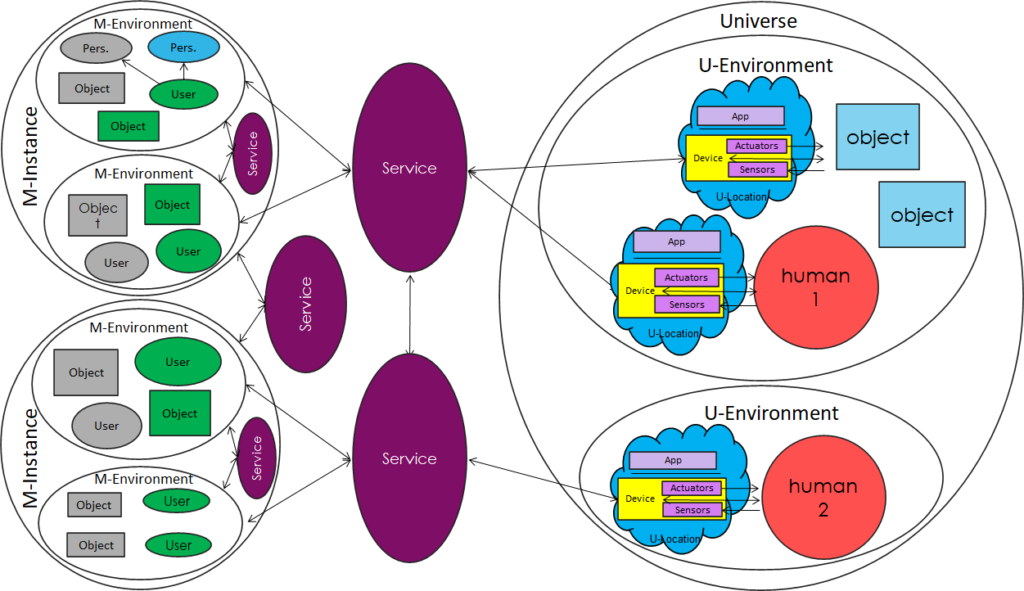

Devices connect humans and objects in a U-Location (a location in the real world – called Universe) to one or more Metaverse Service generating M-Instances as depicted in Figure 2.

Figure 2 – The relationship between the real world (Universe) and M-Instances

A Device UM-Captures scenes with its Sensors and MU-Renders Entities (i.e., Items that can be perceived) through its Actuators.

To enable their User(s) to perform Actions in an M-Instance, a human may be asked to Register and provide a subset of their User data, e.g., Persona(e) and Wallet ID(s). The M-Instance provides an Account that univocally associates a Registered human with their Items. Accounts and Users have the following features:

- A human may have more than one Account in more than one M-Instance.

- A User has Rights to act in the M-Instance associated with the human’s Account.

- A human may have more than one Account and more than one User per Account.

- A User exists after a human Registers with an M-Instance.

- Different Users of an Account may have different Rights.
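The relationships listed above (one human, several Accounts, several Users per Account, Rights per User) can be sketched with a few data classes. The class names, the `register` method, and the use of Action names as Rights are assumptions made for illustration; [2] does not prescribe a data layout.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    user_id: str
    rights: set[str] = field(default_factory=set)  # Rights held by this User


@dataclass
class Account:
    m_instance_id: str
    users: list[User] = field(default_factory=list)


@dataclass
class Human:
    name: str
    accounts: list[Account] = field(default_factory=list)

    def register(self, m_instance_id: str, user_id: str, rights: set[str]) -> Account:
        """Registering creates an Account and, with it, a first User."""
        account = Account(m_instance_id, [User(user_id, set(rights))])
        self.accounts.append(account)
        return account


alice = Human("alice")
home = alice.register("m-instance-A", "alice-main", {"MM-Embed", "MM-Send"})
home.users.append(User("alice-guest", {"MM-Send"}))  # second User, fewer Rights
alice.register("m-instance-B", "alice-b", {"MM-Embed"})

print(len(alice.accounts))              # 2
print([u.user_id for u in home.users])  # ['alice-main', 'alice-guest']
```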

The Rules of an M-Instance express the obligations undertaken by the Registering human represented by the User and the terms and conditions under which a User exists in an M-Instance and operates either there or in another M-Instance. Depending on the Rules, a User of a human Registered on an M-Instance may or may not interact with another M-Instance, and Rights enforcement on some Actions performed on some Items may be forfeited.

Data entering an M-Instance, e.g., by Reading or MM-Capturing, may include metadata and the Rights granted to a Process to perform Actions on the data.

Each Item bears an Identifier that is uniquely associated with that Item. However, an Item may bear more than one Identifier. It is assumed that Identifiers have one of the following structures:

[M-InstanceID] [ItemID]; [M-InstanceID] [M-LocationID] [ItemID].
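The two Identifier structures can be modelled with a single record in which the M-Location component is optional. This is a hedged sketch: the class and field names are assumptions, and no serialisation format is implied because the source does not give one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Identifier:
    m_instance_id: str
    item_id: str
    m_location_id: Optional[str] = None  # present only in the three-part form

    def parts(self) -> tuple:
        """Return the components in the order given in the text."""
        if self.m_location_id is None:
            return (self.m_instance_id, self.item_id)
        return (self.m_instance_id, self.m_location_id, self.item_id)


print(Identifier("mi-1", "item-7").parts())
print(Identifier("mi-1", "item-7", m_location_id="loc-3").parts())
```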

3 Actions

A User can call a Process to perform Actions on Entities. An Entity can be:

- Authored, i.e., the User calls an Authoring Tool Service with an accompanying request to obtain Rights to act on the authored Entity.

- MM-Added, i.e., the User requests that an Entity be added to an M-Location with a Spatial Attitude.

- MM-Enabled, i.e., the User requests that a Process be allowed to MM-Capture an Entity that is MM-Added at an M-Location.

- MM-Embedded, i.e., MM-Added and MM-Enabled in one stroke.

- MM-Captured, i.e., the User requests that an Entity MM-Embedded at an M-Location be MM-Sent to a Process.

- UM-Animated, i.e., the User requests that a Process change the features of an Entity using a Stream of data.

- MM-Disabled, i.e., the User requests that the MM-Enabling of the Entity be stopped.

- Authenticated, i.e., the User calls a Service to make sure that an Item is what it claims to be.

- Interpreted, i.e., the User calls a Service to obtain an interpretation of Items in an M-Instance, e.g., translate a Speech Object MM-Embedded at an M-Location into a specific language.

- Informed, i.e., the User calls a Service to obtain data about an Item.
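The relationship between MM-Add, MM-Enable, MM-Embed, and MM-Disable can be sketched as a small state holder. This is an illustration only: the class name is hypothetical, and enabling is reduced to a single flag, whereas the text describes enabling capture for a specific Process.

```python
class EntityPlacement:
    """Tracks whether an Entity is MM-Added and MM-Enabled at an M-Location."""

    def __init__(self) -> None:
        self.added = False
        self.enabled = False

    def mm_add(self) -> None:
        self.added = True

    def mm_enable(self) -> None:
        # MM-Enable applies to an Entity that is already MM-Added.
        if not self.added:
            raise ValueError("Entity must be MM-Added before it is MM-Enabled")
        self.enabled = True

    def mm_embed(self) -> None:
        # MM-Embed is MM-Add and MM-Enable "in one stroke".
        self.mm_add()
        self.mm_enable()

    def mm_disable(self) -> None:
        self.enabled = False

    def can_be_captured(self) -> bool:
        # Assumption: MM-Capture requires the Entity to be currently MM-Enabled.
        return self.added and self.enabled


placement = EntityPlacement()
placement.mm_embed()
print(placement.can_be_captured())  # True
placement.mm_disable()
print(placement.can_be_captured())  # False
```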

An example of a composite Action, i.e., an Action that involves a plurality of Actions and a plurality of Items, is Track, which enables a User to request Services to:

- MM-Add a Persona at an M-Location with a Spatial Attitude.

- UM-Animate the Persona MM-Added at an M-Location.

- MU-Render specified Entities at the M-Location to a U-Location with Spatial Attitudes.
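A composite Action can be viewed as a named sequence of other Actions. The sketch below expands Track and MM-Embed into the Actions the text says they comprise; the table `COMPOSITE_ACTIONS` and the function `expand` are assumptions introduced for illustration.

```python
# Composite Actions and the Actions they are made of, as described in the text.
COMPOSITE_ACTIONS = {
    "MM-Embed": ["MM-Add", "MM-Enable"],
    "Track": ["MM-Add", "UM-Animate", "MU-Render"],
}


def expand(action: str) -> list[str]:
    """Expand an Action into basic Actions, recursing through composites."""
    if action not in COMPOSITE_ACTIONS:
        return [action]
    basic: list[str] = []
    for part in COMPOSITE_ACTIONS[action]:
        basic.extend(expand(part))
    return basic


print(expand("Track"))     # ['MM-Add', 'UM-Animate', 'MU-Render']
print(expand("MM-Embed"))  # ['MM-Add', 'MM-Enable']
print(expand("Transact"))  # ['Transact']
```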

So far, the following Actions have been identified:

| No. | Action | No. | Action | No. | Action | No. | Action |
|---|---|---|---|---|---|---|---|
| 1 | Authenticate | 8 | Inform | 15 | MM-Enable | 22 | Track |
| 2 | Author | 9 | Interpret | 16 | MM-Send | 23 | Transact |
| 3 | Call | 10 | MM-Add | 17 | MU-Actuate | 24 | UM-Animate |
| 4 | Change | 11 | MM-Animate | 18 | MU-Render | 25 | UM-Capture |
| 5 | Create | 12 | MM-Capture | 19 | Post | 26 | UM-Render |
| 6 | Destroy | 13 | MM-Disable | 20 | Read | 27 | UM-Send |
| 7 | Discover | 14 | MM-Embed | 21 | Register | 28 | Write |

Of course, the Actions identified are not intended to be the only ones that will be needed by all M-Instances.

A Process requests another Process to perform an Action by sending the following payload:

| Field | Value |
|---|---|
| Source | User (ID=UserID) ∨ Device (ID=DeviceID) ∨ Service (ID=ServiceID) |
| Destination | User (ID=UserID) ∨ Device (ID=DeviceID) ∨ Service (ID=ServiceID) |
| Action | Act |
| InItem | Item (ID=ItemID) |
| InLocations | M-LocationID ∨ U-LocationID ∨ Service (ID=ServiceID) |
| OutLocations | M-LocationID ∨ U-LocationID ∨ Device (ID=DeviceID) ∨ Service (ID=ServiceID) |
| OutRights | Rights (ID=RightsID) |

The requested Process will respond by sending the following payload:

| Outcome | Subject | Value |
|---|---|---|
| Success | OutItem | Item (ID=ItemID) |
| Error | Request | Faulty |
| | IDs | Incorrect |
| | Rights | Missing or incomplete |
| | Unsupported | Item not supported |
| | Mismatch | Item type mismatch |
| | User Data | Faulty |
| | Wallet | Insufficient Value |
| | Clash | Entity clashes with another Entity |
| | M-Location | Out of range |
| | U-Location | Out of range |
| | Address | Incorrect |
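The request and response payloads can be expressed as typed records. The following Python sketch is illustrative only: field names mirror the tables, but the class names, the use of plain strings for IDs, and the `success` property are assumptions, since [2] does not define data formats.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ErrorKind(Enum):
    """Error conditions listed in the response table."""
    REQUEST_FAULTY = "Request: Faulty"
    IDS_INCORRECT = "IDs: Incorrect"
    RIGHTS_MISSING = "Rights: Missing or incomplete"
    ITEM_UNSUPPORTED = "Unsupported: Item not supported"
    ITEM_MISMATCH = "Mismatch: Item type mismatch"
    USER_DATA_FAULTY = "User Data: Faulty"
    WALLET_INSUFFICIENT = "Wallet: Insufficient Value"
    ENTITY_CLASH = "Clash: Entity clashes with another Entity"
    M_LOCATION_OUT_OF_RANGE = "M-Location: Out of range"
    U_LOCATION_OUT_OF_RANGE = "U-Location: Out of range"
    ADDRESS_INCORRECT = "Address: Incorrect"


@dataclass
class ActionRequest:
    source: str         # UserID, DeviceID or ServiceID
    destination: str    # UserID, DeviceID or ServiceID
    action: str
    in_item: str        # ItemID
    in_locations: str
    out_locations: str
    out_rights: str     # RightsID


@dataclass
class ActionResponse:
    out_item: Optional[str] = None     # set on Success
    error: Optional[ErrorKind] = None  # set on Error

    @property
    def success(self) -> bool:
        return self.error is None


req = ActionRequest("user-1", "service-1", "MM-Add", "item-42",
                    "u-loc-1", "m-loc-1", "rights-7")
ok = ActionResponse(out_item=req.in_item)
failed = ActionResponse(error=ErrorKind.WALLET_INSUFFICIENT)
print(ok.success, failed.success, failed.error.value)
```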

4 Items

An Item can be:

- Created from data and metadata; the metadata may include Rights.

- Changed, i.e., its Rights are modified.

- Discovered by calling an appropriate Service.

- Written, i.e., stored.

- Posted to a Marketplace as an Asset (i.e., an Item that can be the object of a Transaction).

- Transacted as an Asset.

An Item can belong to one of six categories:

- Items characterised by the fact that they can be MM-Captured by a User.

- Items that can cause an Entity to change its perceptible features.

- Items that have space and time attributes.

- Items that are finance related.

- Items that are non-perceptible.

- Items that are Process-related.

Entities are Items that can be perceived. Here are some relevant Items.

- Event: the set of Entities that are MM-Embedded at an M-Location from Start Time until End Time.

- Experience: An Event as a User MM-Captured it and the User’s Interactions with the Entities belonging to the Entity that spawned the Event.

- Object: the representation of an object and its features. Currently, only the following object types are considered: Audio, Visual, and Haptic.

- Model: An Object that can be UM-Animated by a Stream or a Process. A Persona is the Model of a human.

- Scene: a dynamic composition of Objects described by Times and Spatial Attitudes.

The finance-related Items are:

- Asset: An Item that may be the object of a Transaction and is embedded at an M-Location or Posted to a Service.

- Ledger: the list of Transactions executed on Assets.

- Provenance: the Ledger of an Asset.

- Transaction: Item representing the changed state of the Accounts and the Rights of a seller User and a buyer User on an Asset and optionally of the Service facilitating/enabling the Transaction.

- Value: An Amount expressed in a Currency.

- Wallet: A container of Currency units.
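The statement that Provenance is the Ledger of an Asset can be read as a filter over the Ledger. The sketch below assumes a Ledger held as an ordered list of Transactions; the class names, field names, IDs, and amounts are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Value:
    amount: float
    currency: str


@dataclass(frozen=True)
class Transaction:
    asset_id: str
    seller: str
    buyer: str
    value: Value


def provenance(ledger: list[Transaction], asset_id: str) -> list[Transaction]:
    """Return the Ledger restricted to one Asset, in recorded order."""
    return [t for t in ledger if t.asset_id == asset_id]


# Illustrative Ledger; IDs and amounts are made up.
ledger = [
    Transaction("parcel-1", "user-1", "user-2", Value(100.0, "EUR")),
    Transaction("landscape-1", "user-1", "user-2", Value(40.0, "EUR")),
    Transaction("parcel-1", "user-2", "user-4", Value(150.0, "EUR")),
]

for t in provenance(ledger, "parcel-1"):
    print(t.seller, "->", t.buyer, t.value.amount, t.value.currency)
```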

So far, the following Items have been identified:

| No. | Item | No. | Item | No. | Item | No. | Item |
|---|---|---|---|---|---|---|---|
| 1 | Account | 11 | M-Environment | 21 | Request-Authenticate | 31 | Scene |
| 2 | Activity Data | 12 | M-Instance | 22 | Request-Discover | 32 | Service |
| 3 | App | 13 | M-Location | 23 | Request-Inform | 33 | Social Graph |
| 4 | Asset | 14 | Map | 24 | Request-Interpret | 34 | Stream |
| 5 | Device | 15 | Message | 25 | Response-Authenticate | 35 | Transaction |
| 6 | Event | 16 | Model | 26 | Response-Discover | 36 | U-Location |
| 7 | Experience | 17 | Object | 27 | Response-Inform | 37 | User |
| 8 | Identifier | 18 | Personal Profile | 28 | Response-Interpret | 38 | User Data |
| 9 | Interaction | 19 | Process | 29 | Rights | 39 | Value |
| 10 | Ledger | 20 | Provenance | 30 | Rules | 40 | Wallet |

Of course, more Items will be identified as more application domains are taken into consideration.

Each Item is specified by the following table. It should be noted that, in line with the assumptions made by the MPAI Metaverse roadmap and the current focus on functionalities, the formats of the Item Data are not specified.

| Field | Description |
|---|---|
| Purpose | A functional description of the Item. |
| Data | In general, the Item data format(s) is(are) not provided. |
| Acted on Metadata | |

5 Data Types

Data Types are data that are referenced in Actions or in Items. Currently, the following data types have been identified.

- Address

- Amount

- Coordinates

- Currency

- Personal status

  - Cognitive state

  - Emotion

  - Social attitude

- Point

- Spatial attitude

  - Position

  - Orientation

- Time

6 Use cases

So far 7 Use Cases have been developed to verify the completeness of Actions, Items and data types specified. Use cases are also a tool that facilitates the development of functionality profiles.

To cope with the fact that Use Cases may involve several locations and Items, a notation has been developed, exemplified by the following:

- Useri MM-Embeds Personai.1, Personai.2, etc.

- Useri Calls Processi.1, Processi.2, etc.

- Useri MM-Embeds Personaj at M-Locationi.1, M-Locationi.2, etc.

- Useri MU-Renders Entityj at U-Locationi.1, U-Locationi.2, etc.

- Useri MM-Sends object2 to Userj.

The following is to be noted:

| Note1 | A = Audio, A-V = Audio-Visual, A-V-H = Audio-Visual-Haptic, SA=Spatial Attitude. |

| Note2 | If a Composite Action is listed, its Basic Actions are not listed, unless they are independently used by the Use Case. |

Here only two of the seven Use Cases of [2] will be presented.

6.1 AR Tourist Guide

6.1.1 Description

This Use Case describes a human who intends to develop a tourist application: an App that alerts the holder of the smartphone on which it is installed when a key U-Location is reached, and lets them view Entities and talk to autonomous agents residing at M-Locations:

- User1

  - Buys M-Location1 (parcel) in an M-Environment.

  - Creates Entity1 (landscape suitable for a virtual path through n sub-M-Locations).

  - Embeds Entity1 (landscape) on M-Location1.1 (parcel).

  - Sells Entity1 (landscape) and M-Location1.1 (parcel) to a User2.

- User2

  - Authors Entity1 to Entity2.n for the M-Locations.

  - Embeds the Entities at M-Location1 to M-Location2.n.

  - Sells the result to User3.

- human4

  - Develops

    - Map recording the pairs M-Locationi – U-Location2.i

    - App alerting a human5 holding the Device with the App installed that a key U-Location has been reached.

  - Sells Map and App to human3.

- User3 MM-Embeds one or more autonomous Personae at M-Location1 to M-Location2.n.

- When human5 gets close to a key U-Location:

  - App prompts Device to Request User3 to MU-Render the Entityi MM-Embedded at M-Location2.i to the key U-Location2.i.

  - human5 interacts with MU-Rendered Entityi that may include an MM-Animated Persona2.i.

6.1.2 Workflow

Table 1 – AR Tourist Guide workflow.

| Who | Does | What | Where/comment |
|---|---|---|---|
| User1 | Transacts | M-Location1.1 | (Parcel in an M-Environment) |
| | Authors | Entity1.1 | (A landscape for the parcel) |
| | MM-Embeds | Entity1.1 | M-Location1.1 |
| | Transacts | Entity1.1 | User2 (landscape) |
| | Transacts | M-Location1.1 | User2 (parcel) |
| User2 | Authors | Entity2.1 to Entity2.n | Promotion material for U-Locations. |
| | MM-Embeds | Entity2.1 to Entity2.n | M-Location2.1 to M-Location2.n |
| | Writes | M-Locations | Address2.1 |
| | MM-Sends | Address2.1 | User4 |
| | Transacts | Entity1.1 | User4 (landscape) |
| | Transacts | M-Location1.1 | User4 (parcel) |
| | Transacts | Entity2.1 to Entity2.n | User4 |
| human3 | develops | Map3.1 | (U-Location2.i-M-Location2.i-Metadata2.i) |
| | sells | Map and App | To human4 |
| User4 | MM-Embeds | Persona4.1-Persona4.n | M-Location2.1 to M-Location2.n w/ SA |
| | MM-Animates | Persona4.1-Persona4.n | M-Location2.1 to M-Location2.n |
| human5 | downloads | App | (To Device) |
| | approaches | U-Location2.i | (App’s key point) |
| App | prompts | Device5.1 | |
| Device5.1 | MM-Sends | Message5.1 | User4 (Persona4.i) |
| Persona4.i | MU-Sends | Entity2.i | U-Location2.i |
| human5 | interacts | | (W/ MU-Rendered Entity4.i and Persona4.i) |

6.1.3 Actions, Items, and Data Types

| Actions | Items | Data Types |
|---|---|---|
| Author | App | Amount |
| MM-Animate | Device | Currency |
| MM-Embed | Entity | Coordinates |
| MM-Send | Map | Spatial Attitude |
| MU-Render | M-Location | Spatial Attitude |
| Send | Persona | Value |
| Write | Service | |
| Transact | U-Location | |
| | User | |

6.2 Virtual Dance

6.2.1 Description

- User2 (dance teacher)

  - Teaches dance at a virtual classroom.

  - Works at M-Location1 where its digital twin Persona2.1 is Audio-Visually MM-Embedded.

  - MM-Embeds and MM-Animates Persona2 (A-V) (another of its Personae) at M-Location2.2 as virtual secretary to attend to students coming to learn dance.

- User1 (dance student #1):

  - MM-Embeds its Persona1 (A-V) at Location1.1 (its “home”).

  - Audio-Visual-Haptically MM-Embeds Persona1 (A-V-H) at Location1.2 close to Location2.2.

  - Sends Object1 (A) to Persona2.2 (greets virtual secretary).

- Virtual secretary:

  - Sends Object1 (A) to dance student #1 (reciprocates greeting).

  - Sends Object2 (A) to call regular dance teacher’s Persona2.1.

- Dance teacher MM-Embeds (A-V-H) Persona1 at Location2.3 (classroom) where it dances with Persona1.1 (dance student #1).

- While Persona1 (student #1) and Persona2.1 (teacher) dance, User3 (dance student #2):

  - MM-Embeds (A-V) Persona1 (its digital twin) at Location3.1 (its “home”).

  - MM-Embeds (A-V-H) Persona1 to Location3.2 (close to Location2.2 where the secretary is located).

- After a while, User2 (dance teacher):

  - MM-Embeds (A-V-H) Persona1 at Location2.4 (close to Location3.2 of dance student #2).

  - MM-Disables Persona1 from Location2.3 where it was dancing with Persona1.1 (student #1).

  - MM-Embeds (A-V-H) and MM-Animates an autonomous Persona3 replacing Persona2.1 from Location2.3 so that student #1 can continue practising dance.

  - Dances with Persona1 (student #2).

6.2.2 Workflow

Table 2 – Virtual Dance workflow.

| Who | Does | What | Where/(comment) |
|---|---|---|---|
| User2 (Teacher) | Tracks | Persona2.1 (AV) | M-Location2.1 |
| | MM-Embeds | Persona2.2 (AV) | M-Location2.2 w/ SA |
| | MM-Animates | Persona2.2 (AV) | M-Location2.2 |
| User1 (Student) | Tracks | Persona1.1 (AV) | M-Location1.1 |
| | Transacts | Value1.1 | (Lesson fees) |
| | MM-Embeds | Persona1.1 (AVH) | M-Location1.2 w/ SA |
| | MM-Disables | Persona1.1 | M-Location1.1 |
| | MM-Sends | Object1.1 (A) | Persona2.2 (greetings) |
| User2 (Persona2.2) | MM-Sends | Object2.1 (A) | Persona1.1 (greetings) |
| | MM-Sends | Object2.2 (A) | Persona2.1 (alert) |
| User2 (Persona2.1) | MM-Embeds | Persona2.1 | M-Location2.3 w/ SA |
| | MM-Disables | Persona2.2 | M-Location2.2 |
| | MM-Embeds | Object2.3 (A) | M-Location2.4 (music) |
| Persona1.1 | (dances) | | |
| Persona2.1 | (dances) | | |
| User3 (Student) | Tracks | Persona3.1 (AV) | M-Location3.1 |
| | Transacts | Value3.1 | (Lesson fees) |
| | MM-Embeds | Persona3.1 (AVH) | M-Location3.2 w/ SA |
| | MM-Disables | Persona3.1 | M-Location3.1 |
| User2 (Teacher) | MM-Disables | Persona2.1 | M-Location2.3 |
| | MM-Embeds | Persona2.3 | M-Location2.4 w/ SA |
| | MM-Animates | Persona2.3 | M-Location2.4 |
| Persona3.1 | (dances) | | |
| Persona2.1 | (dances) | | |

6.2.3 Actions, Items, and Data Types

| Actions | Items | Data Types |
|---|---|---|
| MM-Animate | Persona (AV) | Amount |
| MM-Disable | M-Location | Currency |
| MM-Embed | Object (A) | Spatial Attitude |
| MM-Send | Persona (AVH) | Value |
| Track | Service | |
| Transact | U-Location | |
| | Value | |

7 Initial functionality profiles

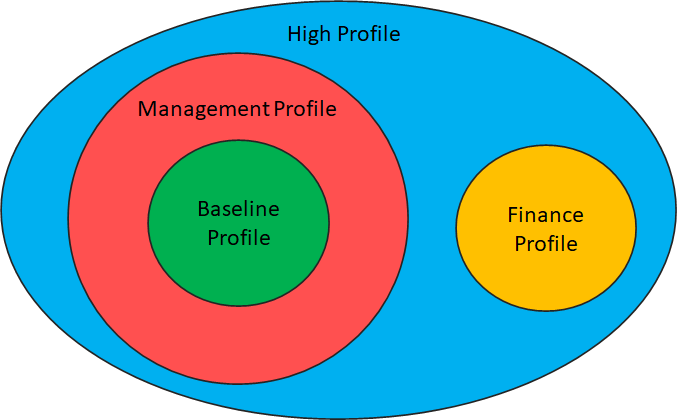

The structure of the Metaverse functionality profiles derived from the above includes hierarchical profiles and one independent profile. Profiles may have levels. As depicted in Figure 2, the currently identified profiles are baseline, management, finance, and high. The currently identified levels for baseline, management, and high profiles are audio only, audio-visual, and audio-visual-haptic.

Figure 3 – the currently identified Functionality Profiles

As an example, the baseline functionality profile enables a human equipped with a Device to allow their Users to:

- Author Entities, e.g., object models.

- Sense a scene at a U-Location:

- UM-Capture a scene.

- UM-Send data.

- MM-Embed Personae and objects:

- MM-Add Persona and object.

- Animate a Persona with a Stream (UM-Animate) or using a Process (MM-Animate).

- MU-Render an Entity at an M-Location to a U-Location.

- MM-Capture Entities at an M-Location.

- MM-Disable an Entity.

This Profile supports baseline lecture, meeting, and hang-out Use Cases. Transactions and User management are not supported.

Table 3 lists the Actions, Entities, and Data Types of the Baseline Functionality Profile.

Table 3 – Actions, Entities, and Data Types of the Baseline Functionality Profile

| Category | Members |
|---|---|
| Actions | Author, Call, Create, Destroy, MM-Add, MM-Animate, MM-Capture, MM-Embed, MM-Disable, MM-Enable, MM-Render, MM-Send, MU-Render, MU-Send, Read, Register, Track, UM-Animate, UM-Capture, UM-Render, UM-Send, Write |
| Items | Device, Event, Experience, Identifier, Map, M-Instance, M-Location, Model, Object, Persona, Process, Scene, Service, Stream, U-Environment, U-Location, User |
| Data Types | Address, Coordinates, Orientation, Position, Spatial Attitude |
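One practical use of a Functionality Profile is to check whether it covers the Actions a Use Case needs. The sketch below compares the Actions listed for the Virtual Dance Use Case (section 6.2.3) with the Baseline Actions of Table 3; the function name `unsupported_actions` and the set-based representation are assumptions made for illustration.

```python
# Actions of the Baseline Functionality Profile, copied from Table 3.
BASELINE_ACTIONS = {
    "Author", "Call", "Create", "Destroy",
    "MM-Add", "MM-Animate", "MM-Capture", "MM-Embed",
    "MM-Disable", "MM-Enable", "MM-Render", "MM-Send",
    "MU-Render", "MU-Send", "Read", "Register",
    "Track", "UM-Animate", "UM-Capture", "UM-Render",
    "UM-Send", "Write",
}

# Actions listed for the Virtual Dance Use Case in section 6.2.3.
VIRTUAL_DANCE_ACTIONS = {
    "MM-Animate", "MM-Disable", "MM-Embed", "MM-Send", "Track", "Transact",
}


def unsupported_actions(use_case: set[str], profile: set[str]) -> list[str]:
    """Return the Actions a Use Case needs that the profile does not offer."""
    return sorted(use_case - profile)


print(unsupported_actions(VIRTUAL_DANCE_ACTIONS, BASELINE_ACTIONS))  # ['Transact']
```

The only missing Action is Transact, which agrees with the statement that the baseline profile does not support Transactions.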

The four identified profiles serve well the needs conveyed by the identified functionalities. As more functionalities are added, the number of profiles, and potentially of levels, is likely to increase.

8 Conclusions

Reference [2] demonstrates the feasibility of the first two milestones of the proposed MPAI roadmap to Metaverse interoperability. Currently, four functionality profiles supporting the selected functionalities have been identified and specified. As more basic Metaverse elements are added, however, more functionality profiles are likely to be found necessary. Functionality profiles can be extended and restructured as more Functionalities are added.

The next step of the MPAI roadmap to Metaverse interoperability is the development of Technical Specification – MPAI Metaverse Model (MPAI-MMM) – Metaverse Architecture.

9 References

- MPAI; Technical Report – MPAI Metaverse Model – Functionalities (MPAI-MMM); January 2023; https://mpai.community/standards/mpai-mmm/mpai-Metaverse-model/mmm-functionalities/

- MPAI; Technical Report – MPAI Metaverse Model – Functionality Profiles (MPAI-MMM); March 2023; https://mmm.mpai.community/

- MPAI; A roadmap to Metaverse Interoperability; February 2023; FGMV-I-012

[1] A Profile is a set of one or more base standards and, if applicable, chosen classes, subsets, options, and parameters of those standards that are necessary for accomplishing a particular function.

[2] A Level is a subdivision of a Profile indicating the completeness of the User experience.