Introduction

During the past decade, unmanned aerial vehicles (UAVs) have attracted increasing attention due to their flexible, extensive, and dynamic space-sensing capabilities. The volume of video captured by UAVs is growing exponentially, and advances in the sensors mounted on UAVs keep increasing the bitrate, which brings new challenges for on-device UAV storage and air-to-ground data transmission. Most existing video compression schemes were designed for natural scenes without considering the specific texture and viewpoint characteristics of UAV videos. In the MPAI EEV project, we have contributed a detailed analysis of the current state of UAV video coding. EEV then establishes a novel task for learned UAV video coding, constructs a comprehensive and systematic benchmark for this task, presents a thorough review of high-quality UAV video datasets and benchmarks, and contributes an extensive rate-distortion (R-D) efficiency comparison of learned and conventional codecs. Finally, we discuss the challenges of encoding UAV videos. We expect the benchmark to accelerate research and development in video coding on drone platforms.

UAV Video Sequences

To build the UAV video coding benchmark, we collect a set of video sequences with diverse content. They cover different recording devices (various models of drone-mounted cameras) and vary in location (indoor and outdoor places), environment (traffic workload, urban and rural regions), objects (e.g., pedestrians and vehicles), and scene object density (sparse and crowded scenes). Table 1 summarizes the characteristics of the sequences in the learned drone video coding benchmark.

Table 1: Video sequence characteristics of the proposed learned UAV video coding benchmark

| Source | Sequence Name | Spatial Resolution | Frame Count | Frame Rate (fps) | Bit Depth (bits) | Scene Feature |
|---|---|---|---|---|---|---|
| Class A: VisDrone-SOT | BasketballGround | 960×528 | 100 | 24 | 8 | Outdoor |
| | GrassLand | 1344×752 | 100 | 24 | 8 | Outdoor |
| | Intersection | 1360×752 | 100 | 24 | 8 | Outdoor |
| | NightMall | 1920×1072 | 100 | 30 | 8 | Outdoor |
| | SoccerGround | 1904×1056 | 100 | 30 | 8 | Outdoor |
| Class B: VisDrone-MOT | Circle | 1360×752 | 100 | 24 | 8 | Outdoor |
| | CrossBridge | 2720×1520 | 100 | 30 | 8 | Outdoor |
| | Highway | 1344×752 | 100 | 24 | 8 | Outdoor |
| Class C: Corridor | Classroom | 640×352 | 100 | 24 | 8 | Indoor |
| | Elevator | 640×352 | 100 | 24 | 8 | Indoor |
| | Hall | 640×352 | 100 | 24 | 8 | Indoor |
| Class D: UAVDT S | Campus | 1024×528 | 100 | 24 | 8 | Outdoor |
| | RoadByTheSea | 1024×528 | 100 | 24 | 8 | Outdoor |
| | Theater | 1024×528 | 100 | 24 | 8 | Outdoor |

Thumbnails of all video clips are shown in Fig. 1 for reference. The benchmark contains 14 video clips drawn from three UAV video dataset sources [1, 2, 3]. Their spatial resolutions range from 640 × 352 to 2720 × 1520, and their frame rates from 24 to 30 fps.

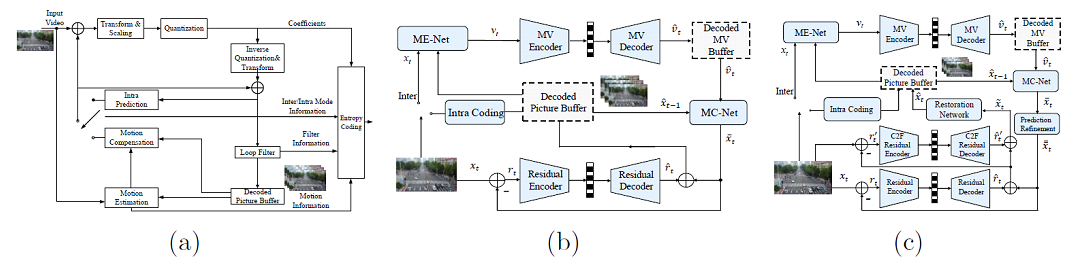

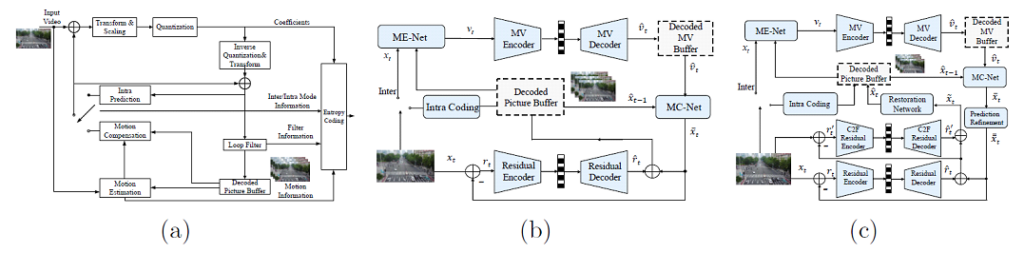

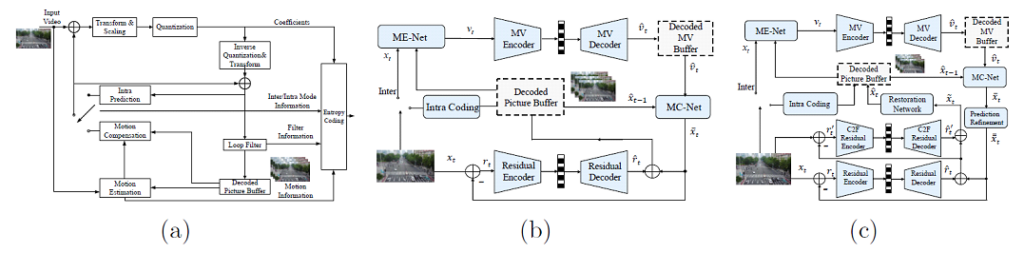

To comprehensively reveal the R-D efficiency of UAV video coding with both conventional and learned codecs, we encode the collected drone video sequences using the HEVC reference software with the screen content coding (SCC) extension (HM-16.20-SCM-8.8) and the emerging learned video coding framework OpenDVC [4]. In addition, the reference model of MPAI End-to-End Video (EEV) is used to compress the UAV videos, so the baseline coding results cover three different codecs. Their schematic diagrams are shown in Fig. 1: panel (a) is the classical hybrid codec, and panels (b) and (c) are the learned codecs OpenDVC and EEV, respectively. As the diagrams show, the EEV software is an enhanced version of OpenDVC that incorporates more advanced modules, such as improved motion-compensated prediction, two-stage residual modelling, and an in-loop restoration network.

Figure 1: Block diagrams of the different codecs. (a) Conventional hybrid codec (HEVC). (b) OpenDVC. (c) MPAI EEV. Zoom in for better visualization.

Another important factor for learned codecs is the consistency between training and test data. It is widely accepted in the machine learning community that training and test data should be independent and identically distributed. However, both OpenDVC and EEV are trained on the natural video dataset Vimeo-90k with mean squared error (MSE) as the distortion metric, so drone videos lie outside their training distribution. We nevertheless use the pre-trained weights of the learned codecs without fine-tuning them on drone video data, to keep the benchmark general.

Evaluation

Since all drone videos in the benchmark are in the RGB color space, quality is also assessed on the reconstructions in the RGB domain. For each frame, the peak signal-to-noise ratio (PSNR) is calculated for each color channel, and the three values are averaged to give the frame's RGB-PSNR. The bitrate is measured in bits per pixel (BPP), computed from the size of the binary files produced by each codec. Coding efficiency is reported with the Bjøntegaard delta bit rate (BD-rate) measure.
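As a minimal sketch of the two measurements described above, the Python below computes per-channel PSNR, averages it over R, G and B, and derives BPP from the byte size of a bitstream. It is not the benchmark's evaluation script: the function names, the frame layout (a tuple of three flat 8-bit sample lists), the peak value of 255 for 8-bit content, and the choice to average frame scores into a sequence score are assumptions made for illustration.

```python
import math


def channel_psnr(ref, rec, peak=255):
    """PSNR in dB between two equally sized lists of samples of one channel."""
    mse = sum((a - b) ** 2 for a, b in zip(ref, rec)) / len(ref)
    if mse == 0:
        return math.inf  # identical channels: PSNR is unbounded
    return 10 * math.log10(peak ** 2 / mse)


def rgb_psnr(ref_frame, rec_frame, peak=255):
    """Frame quality: PSNR of R, G and B computed separately, then averaged.

    Each frame is a tuple (R, G, B) of flat sample lists (hypothetical layout).
    """
    return sum(channel_psnr(r, d, peak) for r, d in zip(ref_frame, rec_frame)) / 3


def sequence_rgb_psnr(ref_frames, rec_frames, peak=255):
    """Assumption: sequence quality is the mean of the per-frame RGB-PSNR."""
    scores = [rgb_psnr(r, d, peak) for r, d in zip(ref_frames, rec_frames)]
    return sum(scores) / len(scores)


def bits_per_pixel(bitstream_bytes, width, height, num_frames):
    """BPP = total bits in the codec's binary output / total number of pixels."""
    return bitstream_bytes * 8 / (width * height * num_frames)
```

For example, a 100-frame 640 × 352 clip whose binary files total 30,000 bytes (an illustrative size, not a benchmark result) gives `bits_per_pixel(30000, 640, 352, 100)` of about 0.0107 BPP.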

Table 2: BD-rate performance of EEV against OpenDVC and HEVC (HM-16.20-SCM-8.8) on drone video compression. The distortion metric is RGB-PSNR; negative values indicate that EEV needs fewer bits than the anchor codec for the same quality.

| Category | Sequence Name | BD-rate, EEV vs. OpenDVC | BD-rate, EEV vs. HEVC |
|---|---|---|---|
| Class A: VisDrone-SOT | BasketballGround | -23.84% | 9.57% |
| | GrassLand | -16.42% | -38.64% |
| | Intersection | -18.62% | -28.52% |
| | NightMall | -21.94% | -6.51% |
| | SoccerGround | -21.61% | -10.76% |
| Class B: VisDrone-MOT | Circle | -20.17% | -25.67% |
| | CrossBridge | -23.96% | 26.66% |
| | Highway | -20.30% | -12.57% |
| Class C: Corridor | Classroom | -8.39% | 178.49% |
| | Elevator | -19.47% | 109.54% |
| | Hall | -15.37% | 58.66% |
| Class D: UAVDT S | Campus | -26.94% | -25.68% |
| | RoadByTheSea | -20.98% | -24.40% |
| | Theater | -19.79% | 2.98% |
| Class A | Class average | -20.49% | -14.97% |
| Class B | Class average | -21.48% | -3.86% |
| Class C | Class average | -14.41% | 115.56% |
| Class D | Class average | -22.57% | -15.70% |
| Average | All 14 sequences | -19.84% | 15.23% |

The corresponding PSNR-based R-D performance of the three codecs is summarized in Table 2. EEV reduces the bit rate by about 20% relative to OpenDVC (19.84% on average), and it saves bits on every sequence, from 8.39% on Classroom to 26.94% on Campus. This is a promising result for learned codecs and shows the improvement brought by the EEV software.
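The BD-rate values in the table above follow the Bjøntegaard procedure: fit log-rate as a cubic function of PSNR for each codec, integrate both fits over the overlapping PSNR interval, and convert the average log-rate difference back to a percentage. The pure-Python sketch below implements that textbook procedure; it is not the script used for this benchmark, and the function names and the centring of PSNR values (added only for numerical stability) are our own assumptions.

```python
import math


def _polyfit(xs, ys, degree=3):
    """Least-squares polynomial fit; returns [c0, c1, ...] of c0 + c1*x + ..."""
    n = degree + 1
    # Normal equations: A[i][j] = sum(x^(i+j)), b[i] = sum(y * x^i)
    A = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting
    for col in range(n):
        p = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[p] = A[p], A[col]
        b[col], b[p] = b[p], b[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for k in range(col, n):
                A[r][k] -= f * A[col][k]
            b[r] -= f * b[col]
    # Back substitution
    c = [0.0] * n
    for i in reversed(range(n)):
        c[i] = (b[i] - sum(A[i][k] * c[k] for k in range(i + 1, n))) / A[i][i]
    return c


def _integral(c, lo, hi):
    """Exact integral of the polynomial with coefficients c over [lo, hi]."""
    return sum(ci * (hi ** (i + 1) - lo ** (i + 1)) / (i + 1) for i, ci in enumerate(c))


def bd_rate(anchor_bpp, anchor_psnr, test_bpp, test_psnr):
    """Bjontegaard delta rate (%) of the test codec against the anchor.

    Needs at least four (rate, PSNR) points per codec and overlapping
    PSNR ranges. Negative values mean the test codec saves bits.
    """
    lo = max(min(anchor_psnr), min(test_psnr))
    hi = min(max(anchor_psnr), max(test_psnr))
    mid = (lo + hi) / 2  # centre PSNR values to keep the fit well conditioned
    ca = _polyfit([q - mid for q in anchor_psnr], [math.log(r) for r in anchor_bpp])
    ct = _polyfit([q - mid for q in test_psnr], [math.log(r) for r in test_bpp])
    diff = _integral(ct, lo - mid, hi - mid) - _integral(ca, lo - mid, hi - mid)
    return (math.exp(diff / (hi - lo)) - 1) * 100
```

As a sanity check, if the test codec needed exactly 80% of the anchor's bits at every PSNR, `bd_rate` would return -20 (up to floating-point rounding), matching the convention that a negative BD-rate means bit savings.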

When EEV is compared directly with HEVC, a clear performance gap between the indoor and outdoor sequences can be observed. Averaged over all 14 sequences, EEV requires 15.23% more bits than HEVC SCC for the same RGB-PSNR. This average is driven by Class C: on the indoor sequences EEV is inferior to HEVC by a large margin (115.56% on average), especially for Classroom (178.49%) and Elevator (109.54%), whereas on the outdoor Classes A, B, and D it saves 14.97%, 3.86%, and 15.70% of the bits on average, respectively. These R-D statistics suggest that learned codecs are more sensitive to content variations than conventional hybrid codecs when a codec trained on natural video is applied directly to UAV video coding. Future research could treat this issue as an out-of-distribution problem and borrow extensively from the machine learning community's work on it.
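The 15.23% in the table's Average row is consistent with a plain mean over the 14 sequences rather than a mean of the four class averages, a distinction that matters because Class C dominates it. The short check below uses only values copied from the table; the dictionary layout and the `mean` helper are illustrative and not part of the benchmark tooling.

```python
# BD-rate of EEV vs. HEVC (%) per sequence, copied from the BD-rate table.
eev_vs_hevc = {
    "A": [9.57, -38.64, -28.52, -6.51, -10.76],  # VisDrone-SOT (outdoor)
    "B": [-25.67, 26.66, -12.57],                 # VisDrone-MOT (outdoor)
    "C": [178.49, 109.54, 58.66],                 # Corridor (indoor)
    "D": [-25.68, -24.40, 2.98],                  # UAVDT S (outdoor)
}


def mean(values):
    return sum(values) / len(values)


class_means = {cls: mean(v) for cls, v in eev_vs_hevc.items()}
# Mean over the 14 sequences: 15.225, reported as 15.23% in the table.
per_sequence_mean = mean([x for v in eev_vs_hevc.values() for x in v])
# Mean over the four class averages: about 20.26%, a different number.
per_class_mean = mean(list(class_means.values()))
# Outdoor classes only (A, B, D): EEV saves 12.14% of the bits on average.
outdoor_mean = mean([x for cls in "ABD" for x in eev_vs_hevc[cls]])
```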

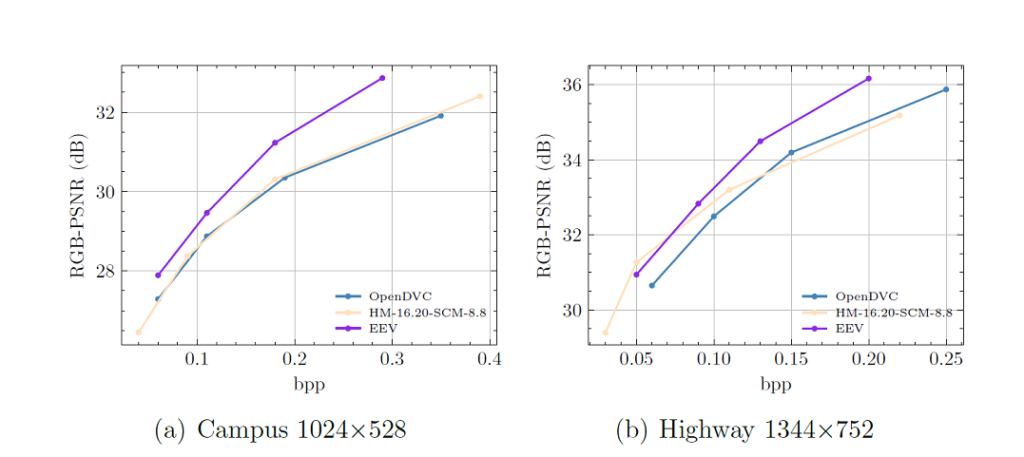

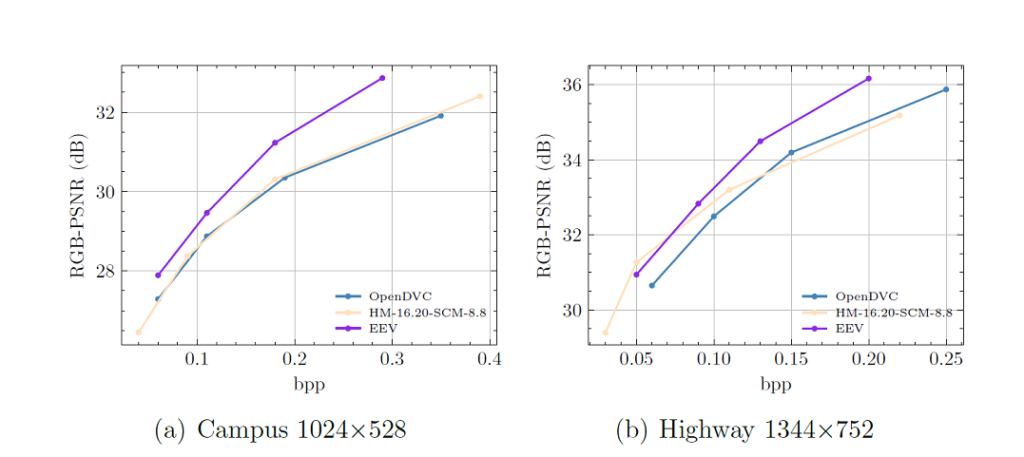

To further interpret the R-D efficiency of the different codecs, we plot their R-D curves in Fig. 2, using Campus and Highway as examples. The blue-violet, peach-puff, and steel-blue curves denote EEV, HEVC, and OpenDVC, respectively. The content characteristics of UAV videos and their distance from natural videos should be modeled and investigated in future research.

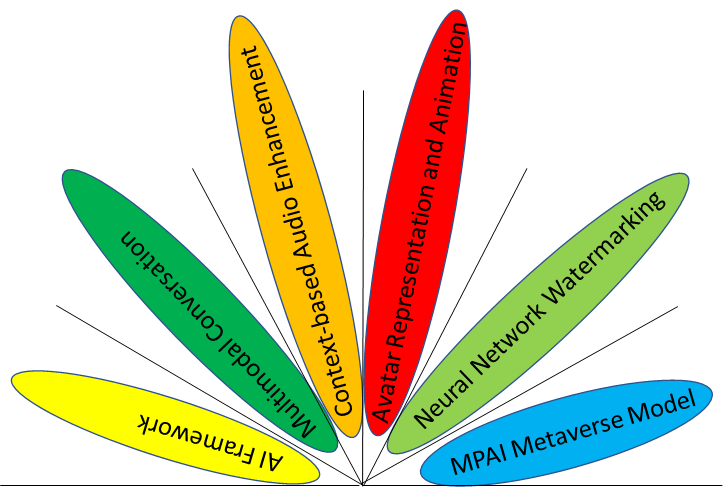

MPAI-EEV Working Mechanism

This work was carried out in MPAI-EEV, an MPAI standard project that seeks to compress video by exploiting AI-based data coding technologies. Within this working group, experts from around the globe meet every two weeks to review progress and plan new efforts. In the current phase, attendance at MPAI-EEV meetings is open to interested experts. Since its formal establishment in November 2021, MPAI-EEV has released three major versions of its reference model. MPAI-EEV aims to be an asset for AI-based end-to-end video coding by continuing to contribute new developments to the field.

This work, contributed by MPAI-EEV, constructs a solid baseline for compressing UAV videos and is intended to facilitate future research on related topics.

References

[1] Pengfei Zhu, Longyin Wen, Dawei Du, Xiao Bian, Heng Fan, Qinghua Hu, and Haibin Ling, “Detection and Tracking Meet Drones Challenge,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 11, pp. 7380–7399, 2021.

[2] A. Kouris and C.S. Bouganis, “Learning to Fly by MySelf: A Self-Supervised CNN-based Approach for Autonomous Navigation,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, 2018, pp. 5216–5223.

[3] Dawei Du, Yuankai Qi, Hongyang Yu, Yifan Yang, Kaiwen Duan, Guorong Li, Weigang Zhang, Qingming Huang, and Qi Tian, “The Unmanned Aerial Vehicle Benchmark: Object Detection and Tracking,” in European Conference on Computer Vision, 2018, pp. 370–386.

[4] Ren Yang, Luc Van Gool, and Radu Timofte, “OpenDVC: An Open Source Implementation of the DVC Video Compression Method,” arXiv preprint arXiv:2006.15862, 2020.