Conclusions

This is a small book about a big adventure: standards for the most sensitive objects of all – data – using the most prominent technology of all – AI – for pervasive and trustworthy use by billions of people. At the end of this book, it is thus appropriate to assess what the authors think the likely impact of MPAI on industry and society will be.

The first impact will be the availability of standards for a technology that is best used to transform data but has so far had none. These standards are driven by the same principles that guided another great adventure – MPEG – which replaced standards meant for exclusive use by certain countries or industries with standards serving humankind.

The second impact will be the restoration of a right that implementers used to take for granted but that ceased to be a right some time ago. An implementer wishing to use a published standard should be allowed to do so, of course after remunerating those who invested money and talent to produce the technology enabling the standard.

The third impact is a direct consequence of the preceding two. In the mid-18th century, trade did not develop as it could because feudal traditions allowed petty lords to erect barriers to trade for the sake of a few livres or pounds. Today we do not live in a feudal age, but we still see petty lords here and there obstructing progress for the sake of a few dollars.

The fourth impact is the mirror of the third. An industry freed from shackles, with access to global AI-based data coding standards and operating in an open, competitive market, will be able to churn out interoperable AI-based products, services, and applications in response to consumer needs, both those known today and the many more not yet known.

The fifth impact is a direct consequence of the fourth. An industry using sophisticated technologies such as AI and forced to be maximally competitive will need to accelerate the progress of those technologies. We can confidently look forward to a new spring of research and scientific advancement in a field whose boundaries are difficult to draw today.

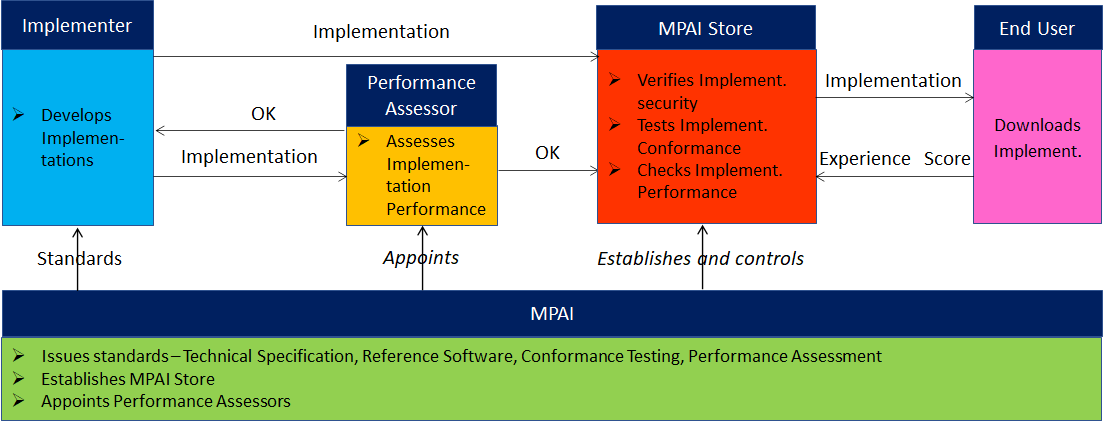

The sixth impact will be caused by MPAI’s practical solution, based on Performance Assessors, to a concern shared by many: AI technologies are as potentially harmful to humankind as they are powerful. AI technologies can hold vast knowledge, yet users have no simple means to check how representative of the world that knowledge is. When such technologies are used to handle information and possibly make decisions, this opens our minds to apocalyptic scenarios.

The seventh impact is speculative, but no less important. The idea of intelligent machines able to deal with humans has always attracted the intellectual interest of writers. Machines dealing with humans are no longer speculation but fact. As objects embedding AI – physical and virtual – extend further into our lives, more issues than “Performance” will come to the surface and will have to be addressed. MPAI, with its holistic view of AI as the technology enabling a universal data representation, proposes itself as the body where such issues, as they are raised by the progress of technology, can be addressed and ways forward found.

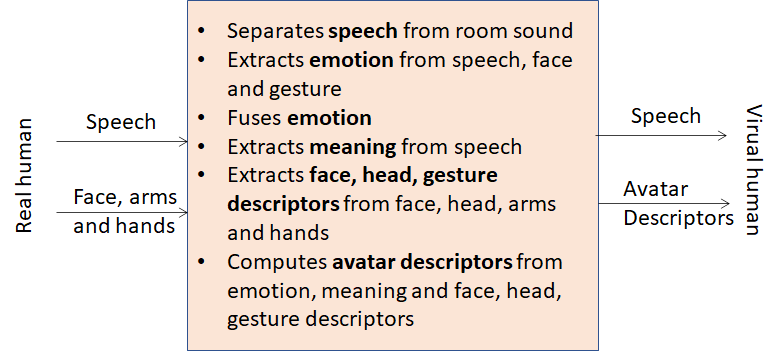

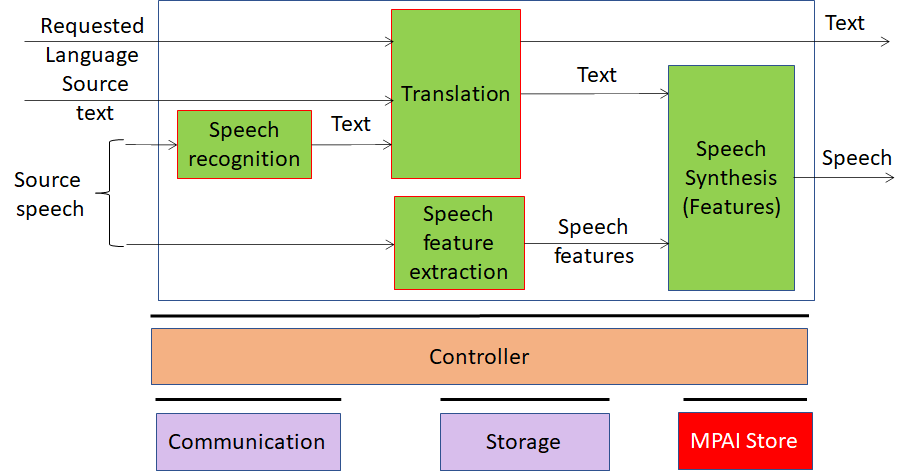

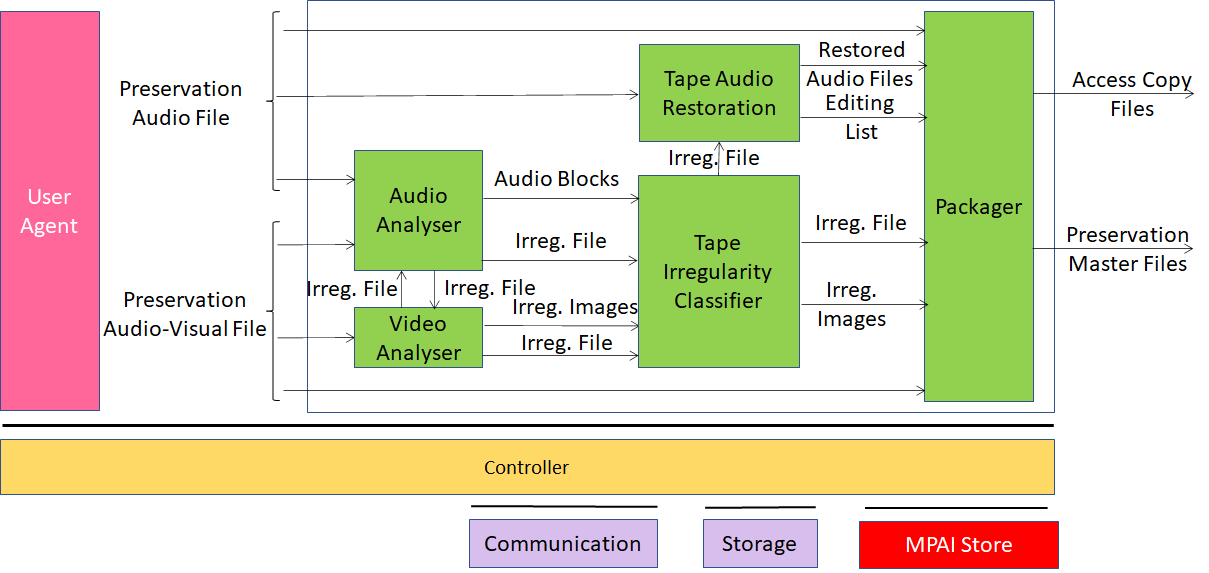

Figure 36 – The expected MPAI impacts

The results achieved by MPAI in 15 months of activity and the plans laid down for the future demonstrate that the seven impacts identified above are not just wishful thinking. MPAI invites people of good will to join and make the potential real.