1 Introduction

In the last few years, Artificial Intelligence (AI) has made great strides, offering more efficient ways to do what used to be done with Data Processing (DP) technologies. AI is a powerful technology, but the result of the processing depends on the data sets used for training, which typically only the implementer knows. In some applications – such as information services – bias in the training may have potentially devastating social impacts. In other applications – such as autonomous vehicles – the inability to trace back the machine decision may be unacceptable to society.

While DP standards have helped promote wide use of digital technologies, very few if any AI standards with an impact comparable to that of DP standards are known. MPAI (Moving Picture, Audio, and Data Coding by Artificial Intelligence) has been established to develop AI-based data coding standards. In a little more than three years of activity, MPAI has developed ten Technical Specifications.

2 AI Framework (MPAI-AIF)

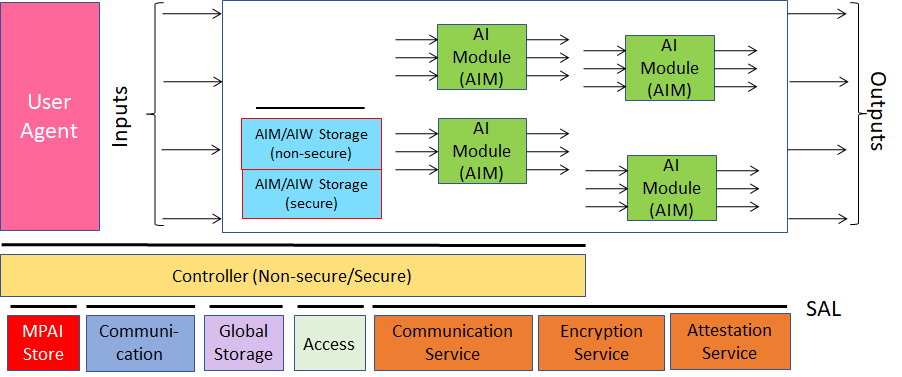

Many MPAI standards are based on the idea of specifying AI applications as AI Workflows (AIW) with known functions and interfaces. An AIW is composed of interconnected components called AI Modules (AIM), which are implemented with AI or DP technologies and also have known functions and interfaces. AIWs are executed in a standard AI Framework (AIF).

Technical Specification: Artificial Intelligence Framework (MPAI-AIF) V2.0 specifies architecture, interfaces, protocols, and APIs of the MPAI AI Framework specially designed for execution of AI-based implementations, but also suitable for mixed AI and traditional data processing workflows.

Figure 1 – The MPAI-AIF Reference Model

In principle, AI Modules do not need to run on the same hardware/software platform; remote execution hosted by other orchestrators is provided for by communication through specified interfaces. AI Modules are interoperable, i.e., an AI Module with a defined function and interface can be replaced by a different implementation having the same function and interface and possibly different performance. AIFs natively interface with the MPAI Store and its catalogue of AIWs and AIMs, providing access to validated software components.

The AIM implementation mechanism enables developers to abstract from the development environment and provide interoperable implementations running on a variety of hardware/software platforms. For instance, a module might be implemented as software running on different classes of systems, from MCUs to HPCs, or as a hardware component, or as a hybrid hardware-software solution.

The resulting AI application offers a range of desirable high-level features, e.g.:

- AI Workflows are executed in local and distributed Zero-Trust architectures, and AI Frameworks can provide access to a number of Trusted Services.

- AI Frameworks can interact with other orchestrators operating in proximity.

- AI Frameworks expose a rich set of APIs, for instance offering direct support for machine learning functionalities and optional support for security services.

Figure 1 includes the following components:

- AI Workflow characterised by:

  - Functions.

  - Input/Output Data.

- AI Modules characterised by:

  - Functions.

  - Input/Output Data.

- AIM topology.

- AIW-specific Storage (secure or non-secure).

- Controller (secure/non-secure).

- Communication.

- Global Storage.

- Access (to static or slowly changing data).

- Security Abstraction Layer (SAL) offering Secure functionalities.

- MPAI Store from where AIMs can be downloaded.
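The interchangeability of AI Modules described in this section can be illustrated with a minimal Python sketch. All names in it (`TextAIM`, `Workflow`, `Trim`, the two upper-casing modules) are hypothetical and are not part of the MPAI-AIF API. The AIW is reduced to a linear chain, whereas a real AIM topology may be a graph.

```python
from typing import List, Protocol


class TextAIM(Protocol):
    """Hypothetical AIM interface: one function, text in and text out."""

    def run(self, text: str) -> str: ...


class Trim:
    def run(self, text: str) -> str:
        return text.strip()


class UppercaseV1:
    def run(self, text: str) -> str:
        return text.upper()


class UppercaseV2:
    """Same function and interface as UppercaseV1, different implementation."""

    def run(self, text: str) -> str:
        return "".join(ch.upper() for ch in text)


class Workflow:
    """Toy AIW: AIMs connected in a linear chain (an assumption of this sketch)."""

    def __init__(self, aims: List[TextAIM]) -> None:
        self.aims = list(aims)

    def replace(self, index: int, aim: TextAIM) -> None:
        # Swap one AIM for another that honours the same interface.
        self.aims[index] = aim

    def execute(self, text: str) -> str:
        for aim in self.aims:
            text = aim.run(text)
        return text


aiw = Workflow([Trim(), UppercaseV1()])
before = aiw.execute("  hello aif ")
aiw.replace(1, UppercaseV2())  # different implementation, same function
after = aiw.execute("  hello aif ")
```

Because both upper-casing modules expose the same function and interface, the workflow produces the same result before and after the swap; only non-functional properties such as performance could differ.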

3 Governance of the MPAI Ecosystem

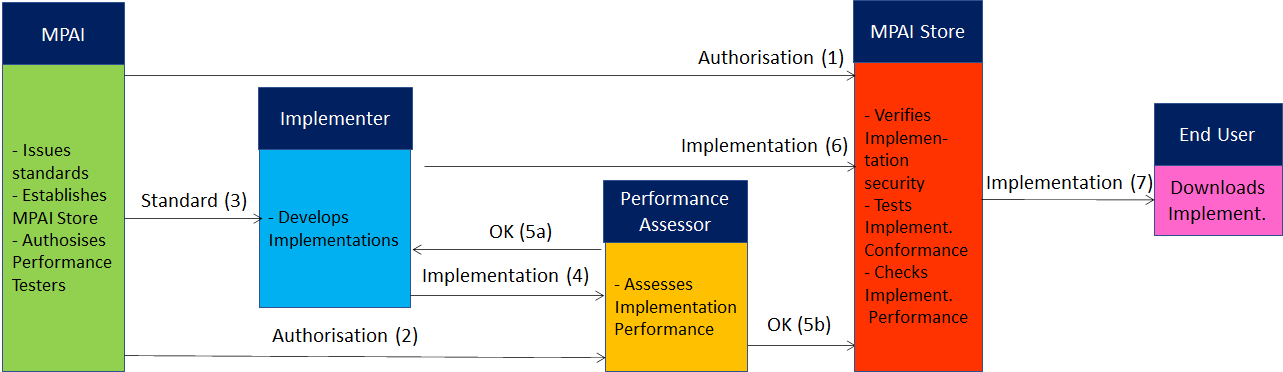

Any standards organisation enables the creation of an ecosystem comprising standards, implementers, conformance testers, and end-users. The technical foundations of the MPAI Ecosystem are provided by Technical Specification: Governance of the MPAI Ecosystem (MPAI-GME) V1.1. MPAI produces:

- Technical Specifications.

- Reference Software Specifications.

- Conformance Testing Specifications.

- Performance Assessment Specifications.

- Technical Reports.

An MPAI Standard is a collection of a variable number of these five document types.

Figure 2 depicts the MPAI ecosystem operation for conforming MPAI implementations:

- MPAI:

  - Appoints the not-for-profit MPAI Store.

  - Appoints Performance Assessors.

  - Publishes Standards.

- Implementers submit Implementations to Performance Assessors.

- Implementers submit Implementations to the MPAI Store and to Performance Assessors.

- Performance Assessors inform Implementers and MPAI Store of the outcome of their performance assessments.

- MPAI Store verifies security, tests the Conformance of Implementations, and publishes Implementations with attached Performance Assessment results and Users’ experience reports.

- Users download Implementations and report their experience to MPAI Store.

Figure 2 – The MPAI ecosystem operation

4 Personal Status

4.1 General

Personal Status is the set of internal characteristics of a human engaged in a conversation. Technical Specification: Multimodal Conversation (MPAI-MMC) V2.1 identifies three Factors of the internal state:

- Cognitive State: a typically rational result from the interaction of a human/avatar with the Environment (e.g., “Confused”, “Dubious”, “Convinced”).

- Emotion: a typically less rational result from the interaction of a human/avatar with the Environment (e.g., “Angry”, “Sad”, “Determined”).

- Social Attitude: the stance taken by a human who has an Emotional and a Cognitive State (e.g., “Respectful”, “Confrontational”, “Soothing”).

The Personal Status of a human can be displayed in one of the following Modalities: Text, Speech, Face, or Gesture. More Modalities are possible, e.g., the body itself as in body language, dance, song, etc. The Personal Status may be shown by only one of the four Modalities or by two, three, or all four simultaneously.
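The relationship between the three Factors and the four Modalities can be pictured as a small data structure. The Python sketch below is only an illustration: the class and field names (`Factors`, `PersonalStatus`, `per_modality`) are assumptions of this example and do not reproduce the data format defined by MPAI-MMC.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# The four Modalities named in the text.
MODALITIES = ("Text", "Speech", "Face", "Gesture")


@dataclass
class Factors:
    """The three Factors; any of them may be absent for a given Modality."""

    cognitive_state: Optional[str] = None
    emotion: Optional[str] = None
    social_attitude: Optional[str] = None


@dataclass
class PersonalStatus:
    """Hypothetical container: Factors per Modality, one to four Modalities."""

    per_modality: Dict[str, Factors] = field(default_factory=dict)

    def set(self, modality: str, factors: Factors) -> None:
        if modality not in MODALITIES:
            raise ValueError(f"unknown Modality: {modality}")
        self.per_modality[modality] = factors

    def active_modalities(self) -> List[str]:
        # Keep the canonical Modality order regardless of insertion order.
        return [m for m in MODALITIES if m in self.per_modality]


status = PersonalStatus()
status.set("Face", Factors(emotion="Sad"))
status.set("Speech", Factors(emotion="Sad", social_attitude="Respectful"))
shown_by = status.active_modalities()
```

Here the Personal Status is shown by two Modalities at once, Speech and Face, which is one of the combinations the text allows.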

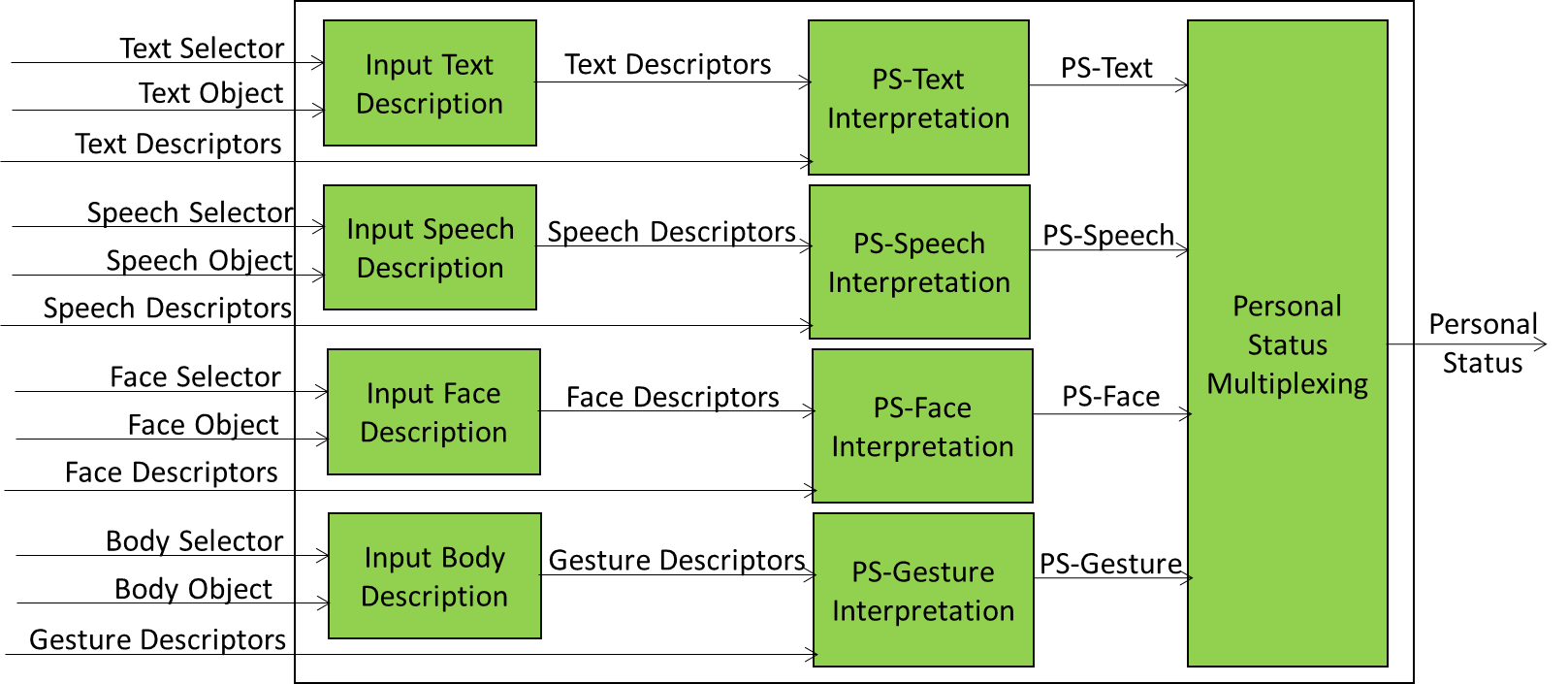

4.2 Personal Status Extraction

Personal Status Extraction (PSE) is a Composite AIM that analyses the Personal Status conveyed by Text, Speech, Face, and Gesture and provides an estimate of the Personal Status in three steps:

- Data Capture (e.g., characters and words, a digitised speech segment, the digital video containing the hand of a person, etc.).

- Descriptor Extraction (e.g., pitch and intonation of the speech segment, thumb of the hand raised, the right eye winking, etc.).

- Personal Status Interpretation (i.e., at least one of Emotion, Cognitive State, and Social Attitude).

Figure 3 depicts the Personal Status estimation process:

- Descriptors are extracted from Text, Speech, Face Object, and Body Object. Depending on the value of Selection, Descriptors can be provided by an AIM upstream.

- Descriptors are interpreted and the specific indicators of the Personal Status in the Text, Speech, Face, and Gesture Modalities are derived.

- Personal Status is obtained by combining the estimates of different Modalities of the Personal Status.

Figure 3 – Reference Model of Personal Status Extraction

An implementation can combine, e.g., the PS-Gesture Description and PS-Gesture Interpretation AIMs into one AIM, and directly provide PS-Gesture from a Body Object without exposing PS-Gesture Descriptors.
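The estimation process can be sketched in Python as a per-Modality chain of description and interpretation followed by a combination step. Everything below is a toy under stated assumptions: the descriptor fields, the thresholds, the "Neutral" label, and the majority vote used to combine Modalities are inventions of this example, since the text does not say how the estimates are combined. Only the Emotion Factor is estimated.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def describe_text(text: str) -> dict:
    return {"exclamations": text.count("!")}


def interpret_text(desc: dict) -> str:
    return "Angry" if desc["exclamations"] > 0 else "Neutral"


def describe_speech(samples: List[float]) -> dict:
    # Mean absolute level as a stand-in for real speech descriptors.
    return {"mean_level": sum(abs(s) for s in samples) / max(len(samples), 1)}


def interpret_speech(desc: dict) -> str:
    return "Angry" if desc["mean_level"] > 0.5 else "Neutral"


def describe_face(face: dict) -> dict:
    return {"mouth_corners": face.get("mouth_corners", "flat")}


def interpret_face(desc: dict) -> str:
    return "Sad" if desc["mouth_corners"] == "down" else "Neutral"


# Modality -> (description step, interpretation step)
AIMS = {
    "Text": (describe_text, interpret_text),
    "Speech": (describe_speech, interpret_speech),
    "Face": (describe_face, interpret_face),
}


def extract_emotion(
    captured: dict, upstream: Optional[dict] = None
) -> Tuple[str, Dict[str, str]]:
    """Return (combined estimate, per-Modality estimates)."""
    upstream = upstream or {}
    estimates: Dict[str, str] = {}
    for modality, (describe, interpret) in AIMS.items():
        if modality in upstream:        # Descriptors supplied by an upstream AIM
            descriptors = upstream[modality]
        elif modality in captured:      # Descriptors extracted from captured data
            descriptors = describe(captured[modality])
        else:
            continue
        estimates[modality] = interpret(descriptors)
    if not estimates:
        raise ValueError("no Modality available")
    # Assumed combination rule: majority vote across Modalities.
    combined = Counter(estimates.values()).most_common(1)[0][0]
    return combined, estimates


combined, per_modality = extract_emotion(
    {"Text": "Stop that!", "Speech": [0.9, -0.8, 0.7]},
    upstream={"Face": {"mouth_corners": "down"}},
)
```

The `upstream` argument mirrors the case where Descriptors are provided by an AIM upstream instead of being extracted inside the Composite AIM.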

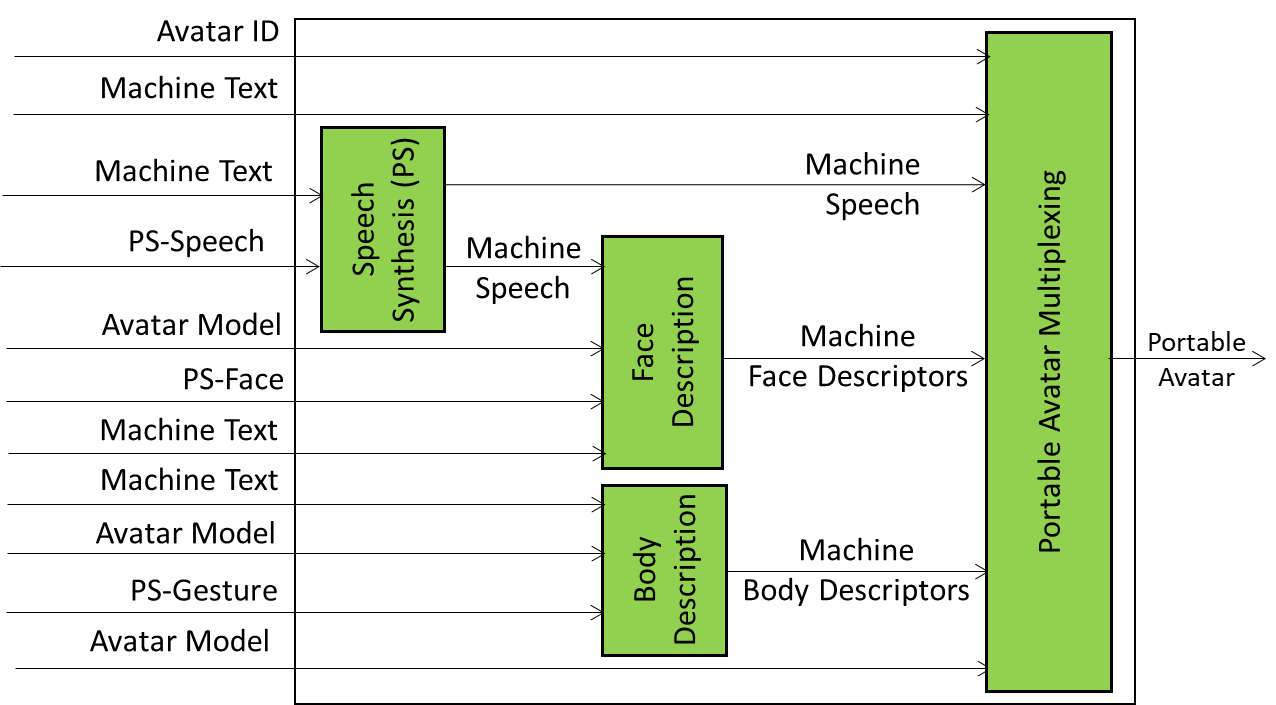

4.3 Personal Status Display

Technical Specification: Portable Avatar (MPAI-PAF) V1.1 specifies Personal Status Display (PSD), a Composite AIM that receives Text and Personal Status and generates an avatar located in an Audio-Visual Scene. The avatar produces Text, utters Speech, displays a Face, and makes Gestures, all showing the intended Personal Status. The Personal Status driving the avatar can be extracted from a human or can be synthetically generated by a machine as a result of its conversation with a human or another avatar. Therefore, Personal Status can be defined as the set of internal characteristics of a human or a machine – called Entity – engaged in a conversation.

Figure 4 represents the AIMs required to implement Personal Status Display.

Figure 4 – Reference Model of Personal Status Display

The Personal Status Display operates as follows:

- Avatar ID is the ID of the Portable Avatar.

- Machine Text is synthesised as Speech using the Personal Status provided by PS-Speech.

- Machine Speech and PS-Face are used to produce the Machine Face Descriptors.

- PS-Gesture and Text are used to produce the Machine Body Descriptors using the Avatar Model.

- Portable Avatar Multiplexing produces the Portable Avatar.

5 Human-Machine dialogue

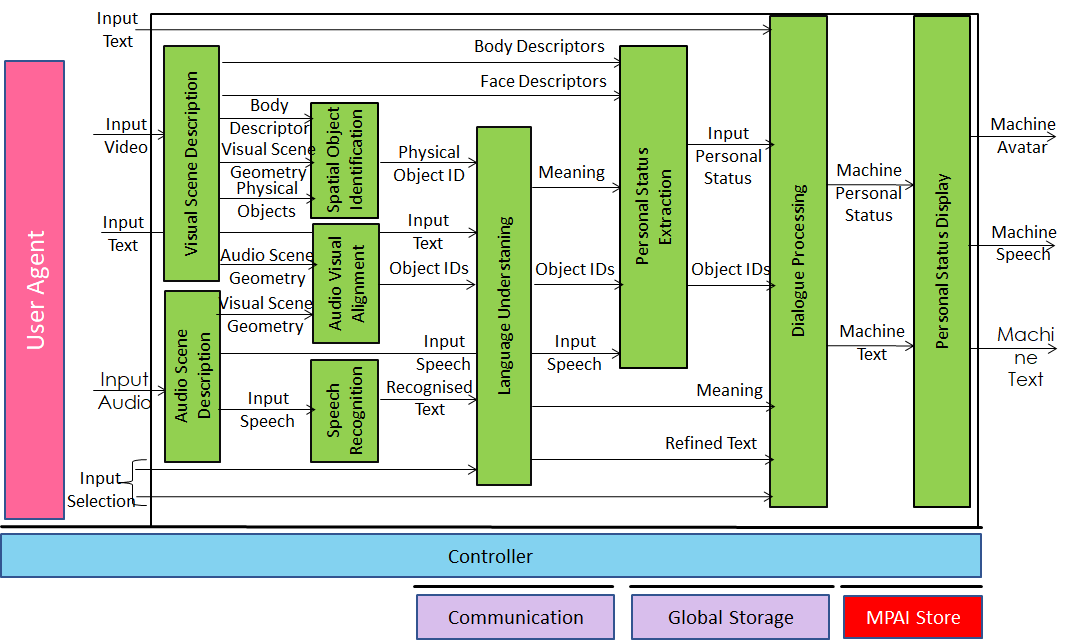

Figure 5 depicts the model of the MPAI Personal-Status-based human-machine dialogue specified by Technical Specification: Multimodal Conversation (MPAI-MMC) V2.1.

Figure 5 – Personal Status-based Human-Machine dialogue

Audio Scene Description and Visual Scene Description are two front-end AIMs. The former produces Audio Objects and Audio Scene Geometry; the latter produces Physical Objects, Face and Body Descriptors of the humans, and Visual Scene Geometry. A necessary AIM for many applications is Audio-Visual Alignment, which establishes relationships between Audio and Visual Objects.

Body Descriptors, Physical Objects, and Visual Scene Geometry are used by the Spatial Object Identification AIM, which uses the Body Descriptors and the Scene Geometry to provide the identifier of the Physical Object the human body is indicating. The Speech extracted from the Audio Scene Descriptors is recognised and passed to the Language Understanding AIM together with the Physical Object ID. This AIM provides a refined text (Text (Language Understanding)) and Meaning (semantic, syntactic, and structural information extracted from input data).

Face and Body Descriptors, Meaning and Speech are used by Personal Status Extraction to extract the Personal Status of the human. Dialogue Processing produces a textual response with an associated machine Personal Status that is congruent with the input Text (Language Understanding) and human Personal Status. The Personal Status Display AIM produces a synthetic Speech and an avatar representing the machine.
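The data flow described in this section can be written down as a dependency graph, from which a valid execution order of the AIMs follows. The Python sketch below uses the standard-library `graphlib` module (Python 3.9 or later). The edges are read off the prose above rather than taken from the specification, and "Speech Recognition" is an assumed name for the AIM that recognises the Speech.

```python
from graphlib import TopologicalSorter

# Each AIM maps to the set of AIMs whose output it consumes.
DEPENDS_ON = {
    "Audio Scene Description": set(),
    "Visual Scene Description": set(),
    "Audio-Visual Alignment": {
        "Audio Scene Description",
        "Visual Scene Description",
    },
    "Spatial Object Identification": {"Visual Scene Description"},
    "Speech Recognition": {"Audio Scene Description"},  # assumed AIM name
    "Language Understanding": {
        "Speech Recognition",
        "Spatial Object Identification",
    },
    "Personal Status Extraction": {
        "Audio Scene Description",    # Speech
        "Visual Scene Description",   # Face and Body Descriptors
        "Language Understanding",     # Meaning
    },
    "Dialogue Processing": {
        "Language Understanding",
        "Personal Status Extraction",
    },
    "Personal Status Display": {"Dialogue Processing"},
}

# One valid order in which the AIMs could be executed.
order = list(TopologicalSorter(DEPENDS_ON).static_order())
position = {name: i for i, name in enumerate(order)}
```

Any order returned places the two front-end AIMs before everything that depends on them and Personal Status Display last; the relative order of independent AIMs is not fixed.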

6 Connected Autonomous Vehicles

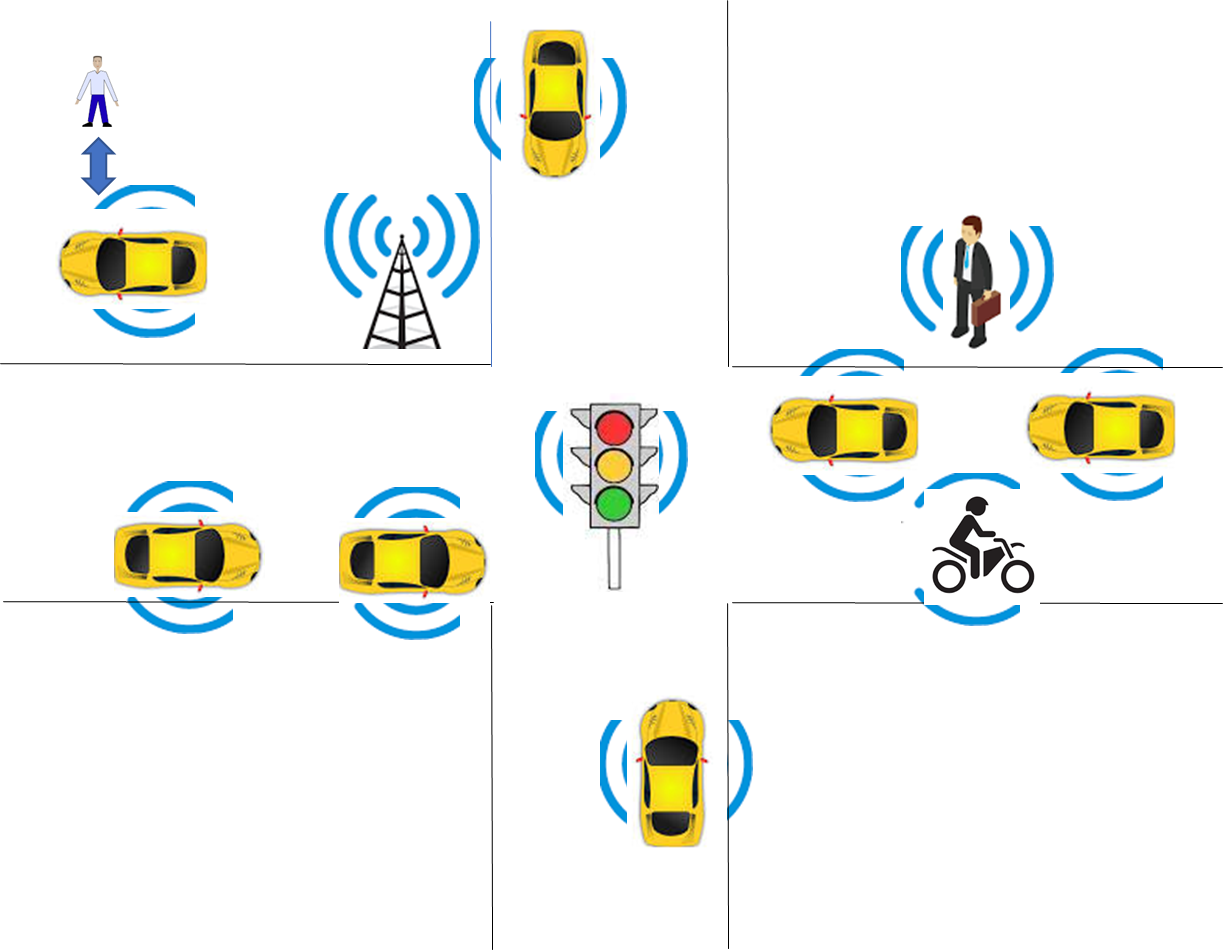

Technical Specification: Connected Autonomous Vehicle – Architecture V1.0 defines a Connected Autonomous Vehicle (CAV) as the information technology-related components of a vehicle enabling it to autonomously reach a destination by:

- Conversing with humans by understanding their utterances, e.g., a request to be taken to a destination.

- Acquiring information, with a variety of sensors, on the physical environment where it is located or that it traverses, like the one depicted in Figure 6.

- Planning a Route enabling the CAV to reach the requested destination.

- Autonomously reaching the destination by:

  - Actuating motion in the physical environment.

  - Building Digital Representations of the Environment.

  - Exchanging elements of such Representations with other CAVs and CAV-aware entities.

  - Making decisions about how to execute the Route.

  - Acting on the CAV motion actuation to implement the decisions.

|

|

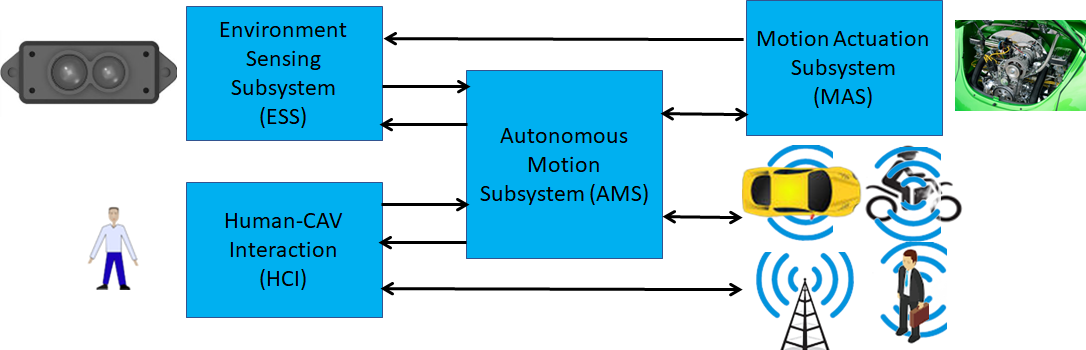

| Figure 6 – An environment of CAV operation | Figure 7 – The MPAI-CAV subsystems |

MPAI believes in the capability of standards to accelerate the creation of a global competitive CAV market and has published Technical Specification: Connected Autonomous Vehicle (MPAI-CAV) – Architecture that includes (see also Figure 7):

- A CAV Reference Model broken down into four Subsystems.

- The Functions of each Subsystem.

- The Data exchanged between Subsystems.

- A breakdown of each Subsystem into Components, for which the following are specified:

  - The Functions of the Components.

  - The Data exchanged between Components.

  - The Topology of Components and their Connections.

- Subsequently, MPAI will develop Functional Requirements of the Data exchanged.

- Eventually, MPAI will develop standard technologies for the Data exchanged.

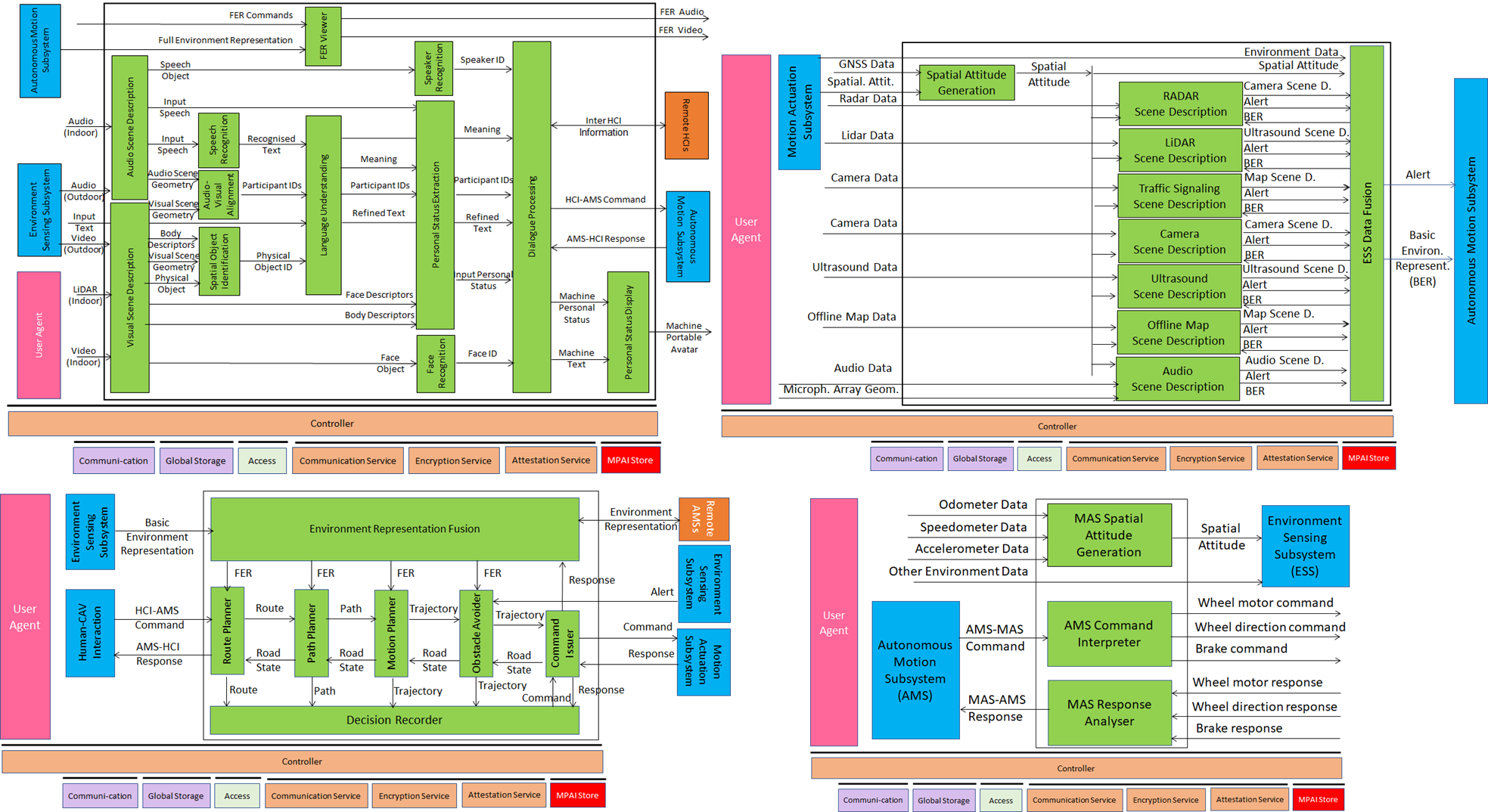

Figure 8 – The MPAI-CAV Subsystems with their Components

Subsystems are implemented as AI Workflows and Components as AI Modules according to Technical Specification: AI Framework (MPAI-AIF).

The Processes of a CAV generate a persistent Metaverse Instance (M-Instance) resulting from the integration of:

- The Environment Representation generated by the Environment Sensing Subsystem by UM-Capturing the U-Location being traversed by the CAV.

- The M-Locations of the M-Instances produced by other CAVs in range that reproduce the U-Locations being traversed by such CAVs, used to improve the accuracy of the Ego CAV’s M-Locations.

- Relevant Experiences of the Autonomous Motion Subsystem at the M-Location.

Some operations of an implementation of MPAI-CAV can be represented according to the MPAI-MMM – Architecture.

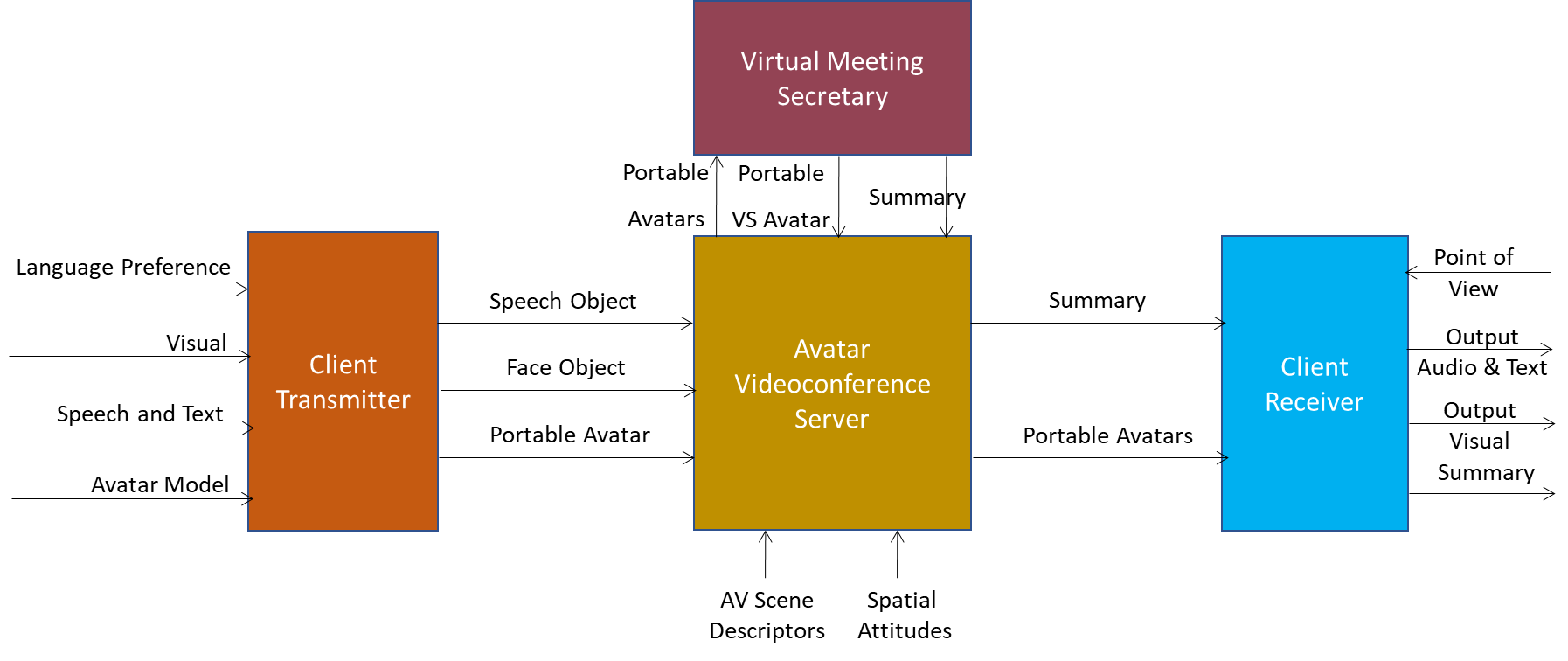

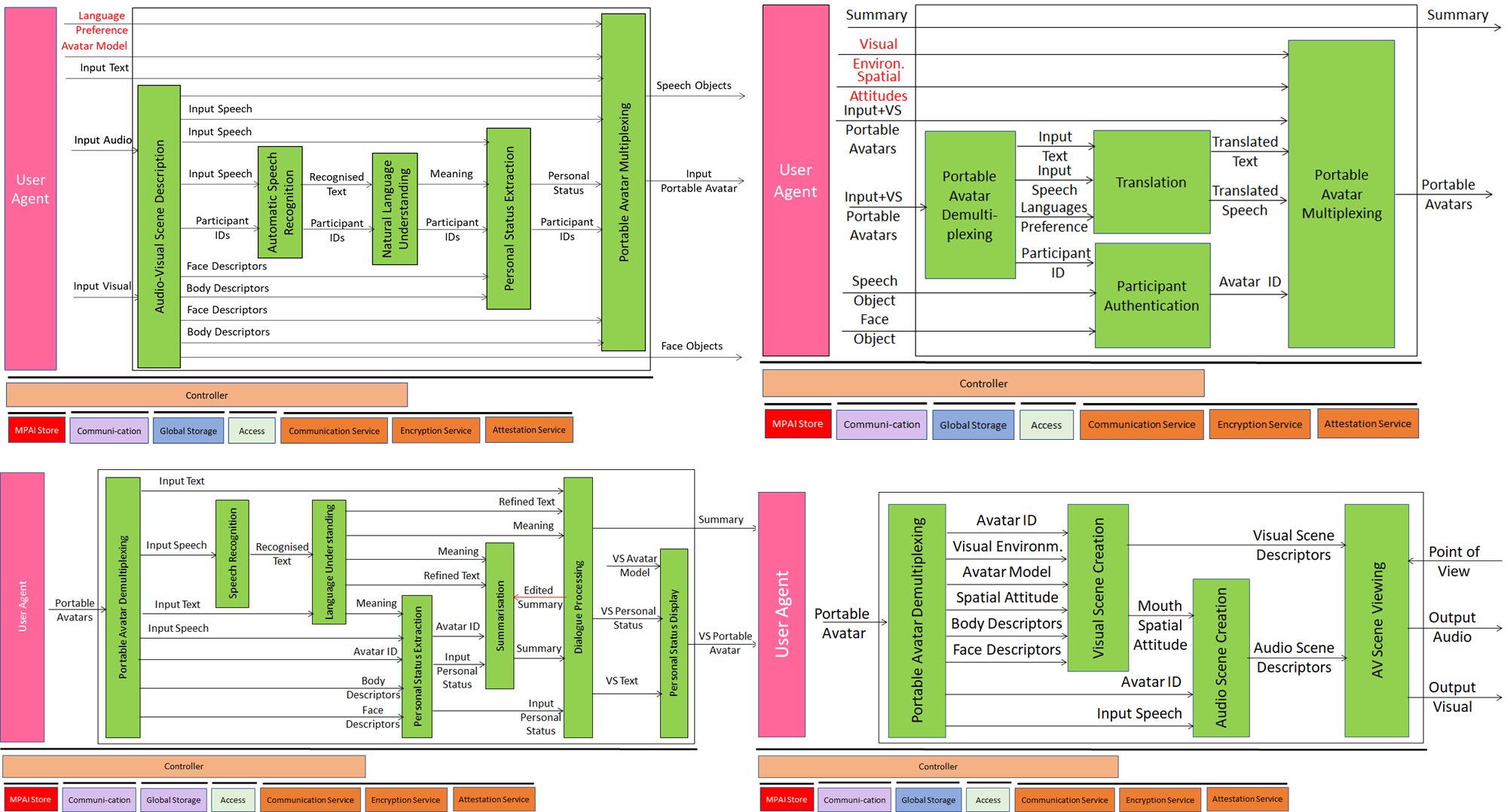

7 Avatar-Based Videoconference

Technical Specification: Portable Avatar (MPAI-PAF) V1.1 specifies AIWs and AIMs of the Avatar-Based Videoconference Use Case (PAF-ABV) where geographically distributed humans hold a videoconference represented by their avatars having the participants’ visual appearance and uttering their real voice (Figure 9).

Figure 9 – Avatar-Based Videoconference end-to-end diagram

Figure 10 contains the reference architectures of the four AI Workflows constituting the Avatar-Based Videoconference: Videoconference Client Transmitter, Avatar Videoconference Server, Virtual Meeting Secretary, and Videoconference Client Receiver.

Figure 10 – The AIWs of Avatar-Based Videoconference

8 MPAI Metaverse Model

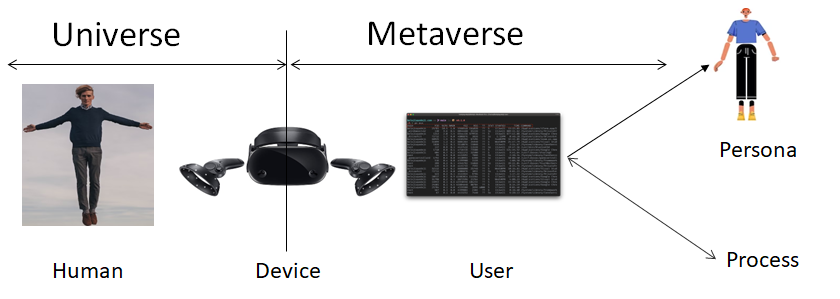

MPAI has published two Technical Reports and recently published Technical Specification: MPAI Metaverse Model (MPAI-MMM) – Architecture V1.1.

MPAI-MMM Architecture assumes that an M-Instance is composed of a set of processes running on a computing environment where each process provides a function. There are several types of processes:

- Device has the task to “connect” a U-Environment with an M-Environment. To enable safe governance, a Device should be connected to an M-Instance under the responsibility of a human.

- User “represents” a human in the M-Instance and acts on their behalf.

- Service performs specific functions such as creating objects.

- App runs on a Device. An example of App is a User that is not executing in the metaverse platform but rather on the Device.

An M-Instance includes objects connected with a U-Instance; some objects, like digitised humans, mirror activities carried out in the Universe; activities in the M-Instance may have effects on U-Environments. The operation of an M-Instance is governed by Rules, mandating, e.g., that a human wishing to connect a Device to, or deploy Users in, an M-Instance should register and provide some data.

Examples of data required to register are the human’s Personal Profile, Device IDs, User IDs, Persona, i.e., an Avatar Model that the User process can utilise to render itself, and Wallet.

Figure 11 pictorially represents some of the points made so far.

Figure 11 – Universe-Metaverse interaction

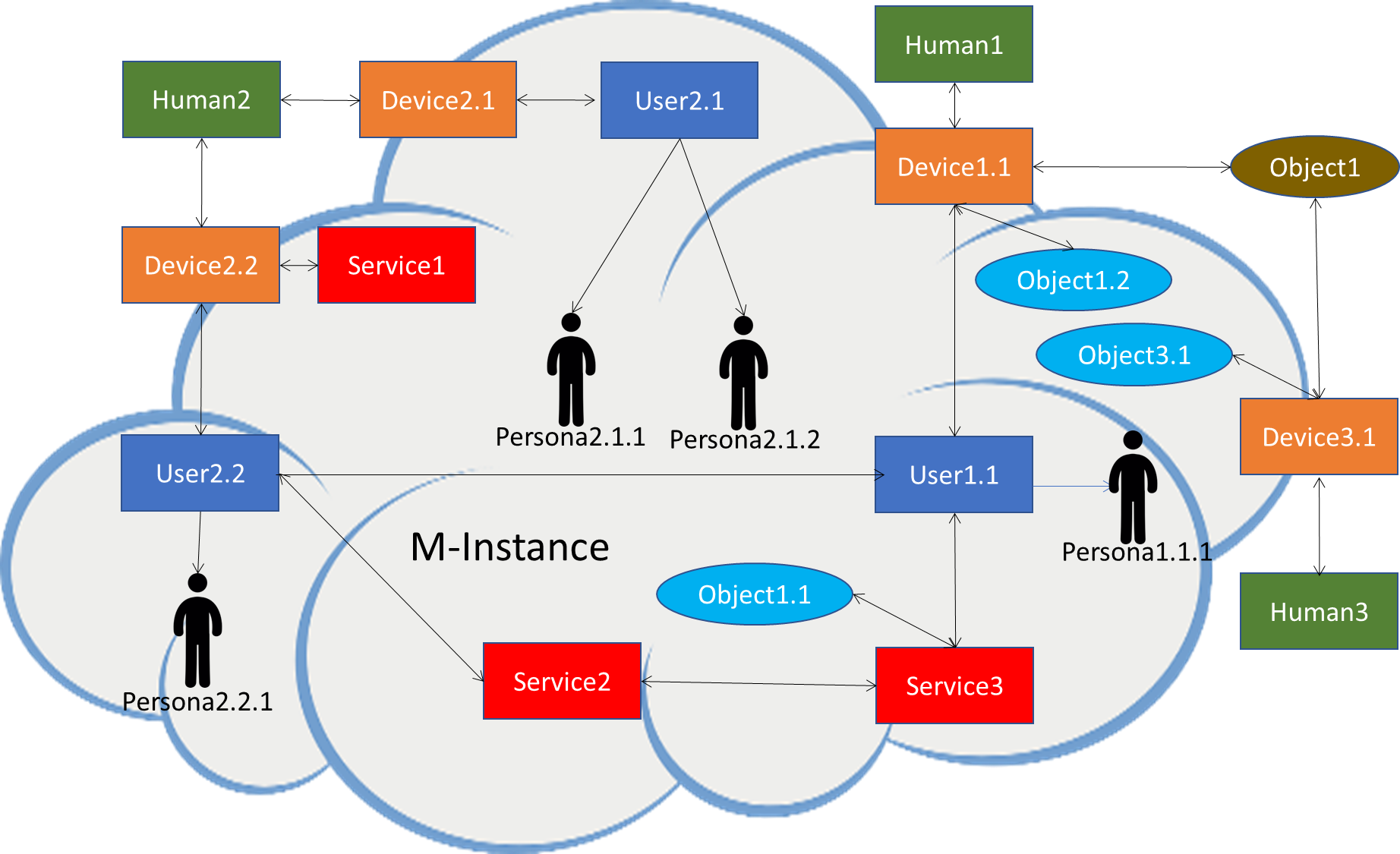

A graphical representation of the MPAI Metaverse Model is given by Figure 12.

Figure 12 – Graphical representation of the MMM

In Figure 12 we see that:

- Human1 and Human3 are each connected to the M-Instance via one Device, but Human2 is connected to the M-Instance via two Devices.

- Human1 has deployed one User, Human2 two Users and Human3

- User1.1 of Human1 is rendered as one Persona (Persona1.1.1), User2.1 of Human2 as two Personae (Persona2.1.1 and Persona2.1.2), and User2.2 as one Persona (Persona2.2.1).

- Object1 in the U-Environment is captured by Device1.1 and Device3.1 and mapped as two distinct Objects: Object1.2 and Object3.1.

- Users and Services variously interact.

A User may have the capability to perform certain Actions on certain Items but more commonly a User may ask a Device to do something for it, like capture an animation stream and use it to animate a Persona. The help of the Device may not be sufficient because MPAI assumes that an animation stream is not an Item until it gets Identified as such. Hence, the help of the Identify Service is also needed.

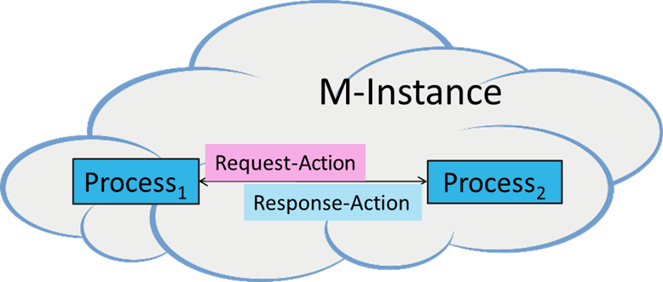

MPAI has defined the Inter-Process Communication Protocol: a Process creates, identifies, and sends a Request-Action Item to the destination Process, which may or may not perform the requested Action and sends back a Response-Action. Figure 13 depicts the protocol.

Figure 13 – Interaction protocol

The Interaction Protocol enables a Process to request another Process to perform an Action, i.e., a function on an Item, a data type recognised in the M-Instance.
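The request and response exchange can be sketched in a few lines of Python. This is an illustration only: the class names, the fields of the two Items, and the integer identifiers are assumptions of this example, not the Item formats defined by MPAI-MMM.

```python
from dataclasses import dataclass
from itertools import count
from typing import Callable, Dict

_next_id = count(1)  # stand-in for the step that identifies a new Item


@dataclass(frozen=True)
class RequestAction:
    item_id: int
    source: str
    destination: str
    action: str
    target_item: str


@dataclass(frozen=True)
class ResponseAction:
    request_id: int
    performed: bool
    detail: str


class Process:
    """Hypothetical Process that performs only the Actions it has handlers for."""

    def __init__(self, name: str, handlers: Dict[str, Callable[[str], str]]):
        self.name = name
        self.handlers = handlers

    def request(self, destination: "Process", action: str, target_item: str):
        # Create and identify the Request-Action, then send it.
        req = RequestAction(
            next(_next_id), self.name, destination.name, action, target_item
        )
        return destination.handle(req)

    def handle(self, req: RequestAction) -> ResponseAction:
        handler = self.handlers.get(req.action)
        if handler is None:  # the destination may decline the request
            return ResponseAction(req.item_id, False, "action not performed")
        return ResponseAction(req.item_id, True, handler(req.target_item))


user = Process("User1.1", {})
device = Process("Device1.1", {"UM-Capture": lambda item: f"captured {item}"})

accepted = user.request(device, "UM-Capture", "animation stream")
declined = user.request(device, "MM-Animate", "Persona1.1.1")
```

The second request shows the "may or may not perform" case: the destination still answers with a Response-Action, but reports that the Action was not performed.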

MPAI has specified the functional requirements of a set of Actions:

- General Actions (Register, Change, Hide, Authenticate, Identify, Modify, Validate, Execute).

- Call a Service (Author, Discover, Inform, Interpret, Post, Transact, Convert, Resolve).

- M-Instance to M-Instance (MM-Add, MM-Animate, MM-Disable, MM-Embed, MM-Enable, MM-Send).

- M-Instance to U-Environment (MU-Actuate, MU-Render, MU-Send, Track).

- U-Environment to M-Instance (UM-Animate, UM-Capture, UM-Render, UM-Send).
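The grouping of Actions above lends itself to a simple lookup table. The Python sketch below copies the group names and Action names from the list; the function `category_of` and the table layout are hypothetical helpers for this illustration.

```python
# Action groups exactly as listed in the text.
ACTIONS = {
    "General Actions": [
        "Register", "Change", "Hide", "Authenticate",
        "Identify", "Modify", "Validate", "Execute",
    ],
    "Call a Service": [
        "Author", "Discover", "Inform", "Interpret",
        "Post", "Transact", "Convert", "Resolve",
    ],
    "M-Instance to M-Instance": [
        "MM-Add", "MM-Animate", "MM-Disable",
        "MM-Embed", "MM-Enable", "MM-Send",
    ],
    "M-Instance to U-Environment": [
        "MU-Actuate", "MU-Render", "MU-Send", "Track",
    ],
    "U-Environment to M-Instance": [
        "UM-Animate", "UM-Capture", "UM-Render", "UM-Send",
    ],
}

# Inverted index: Action name -> group name.
CATEGORY_OF = {
    action: group for group, actions in ACTIONS.items() for action in actions
}


def category_of(action: str) -> str:
    """Return the group an Action belongs to, or raise ValueError."""
    try:
        return CATEGORY_OF[action]
    except KeyError:
        raise ValueError(f"unknown Action: {action}") from None


capture_group = category_of("UM-Capture")
track_group = category_of("Track")
```

A table is used rather than parsing the `MM-`, `MU-`, and `UM-` prefixes because not every Action carries one; Track, for example, belongs to the M-Instance to U-Environment group.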

This is a classified list of the Items defined by MMM-Architecture:

- General (M-Instance, M-Capabilities, M-Environment, Identifier, Rules, Rights, Program, Contract).

- Human/User-related (Account, Activity Data, Personal Profile, Social Graph, User Data).

- Process Interaction (Message, P-Capabilities, Request-Action, Response-Action).

- Service Access (AuthenticateIn, AuthenticateOut, DiscoverIn, DiscoverOut, InformIn, InformOut, InterpretIn, InterpretOut).

- Finance-related (Asset, Ledger, Provenance, Transaction, Value, Wallet).

- Perception-related (Event, Experience, Interaction, Map, Model, Object, Scene, Stream, Summary).

- Space-related (M-Location, U-Location).

MPAI-MMM – Architecture specifies:

- Terms and Definitions

- Operation Model

- Functional Requirements of Processes, Actions, Items, and Data Types

- Scripting Language

- Functional Profiles

enabling Interoperability of two or more metaverse instances (M-Instances) if they:

- Rely on the Operation Model, and

- Use the same Profile Architecture, and

- Either:

  - Use the same technologies, or

  - Use independent technologies while accessing Conversion Services that losslessly transform Data of an M-InstanceA to Data of an M-InstanceB.
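The interoperability conditions can be read as a boolean predicate. The Python sketch below encodes that reading; the `MInstance` fields are hypothetical, and the sketch assumes that both M-Instances must be able to access Conversion Services when their technologies differ, which the text does not state explicitly.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class MInstance:
    name: str
    relies_on_operation_model: bool
    profile: str
    technologies: FrozenSet[str]
    can_access_conversion_services: bool = False


def interoperable(a: MInstance, b: MInstance) -> bool:
    # Condition 1: both rely on the Operation Model.
    if not (a.relies_on_operation_model and b.relies_on_operation_model):
        return False
    # Condition 2: both use the same Profile.
    if a.profile != b.profile:
        return False
    # Condition 3a: same technologies.
    if a.technologies == b.technologies:
        return True
    # Condition 3b: independent technologies bridged by Conversion Services
    # (assumed here to be required on both sides).
    return a.can_access_conversion_services and b.can_access_conversion_services


# Profile and technology names are placeholders for this example.
m_a = MInstance("M-InstanceA", True, "ProfileX", frozenset({"tech-1"}))
m_b = MInstance("M-InstanceB", True, "ProfileX", frozenset({"tech-2"}))
m_a_conv = MInstance("M-InstanceA", True, "ProfileX", frozenset({"tech-1"}), True)
m_b_conv = MInstance("M-InstanceB", True, "ProfileX", frozenset({"tech-2"}), True)

without_conversion = interoperable(m_a, m_b)
with_conversion = interoperable(m_a_conv, m_b_conv)
```

With different technologies and no Conversion Services the two instances are not interoperable under this reading; adding access to Conversion Services on both sides satisfies the last condition.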

9 Object and Scene Description

Standard technologies to represent objects and scenes in a Virtual Space, possibly rendered to a real space, are required by several MPAI Use Cases. Technical Specification: Object and Scene Description (MPAI-OSD) V1.0 specifies the syntax and semantics of the following Data Types:

- Spatial Attitude

- Audio Scene Geometry

- Audio Object

- Audio Scene Descriptors

- Visual Scene Geometry

- Visual Object

- Scene Descriptors

- Audio-Visual Scene Geometry

- Audio-Visual Object

- Audio-Visual Scene Descriptors.