
As previously discussed, AI-based technologies are very powerful, and regulators closely scrutinise their use in sensitive or mission-critical contexts. A poorly designed AI component can conceal bias or privacy breaches, and any AI module is ultimately only as good as its training set; in addition, critical hidden vulnerabilities might become evident only when the component is run on unexpected input data (as in the case of “typographic attacks”).

That is why, during the MPAI design phase, special attention has been paid to the requirement that the AI-based components provided by MPAI be trustworthy – anyone willing to use them, including end users who are not necessarily technical experts in the field, should feel reassured that nothing untoward will happen during operation. The same should hold when AI components offered by MPAI are combined – and the user should be made clearly aware of the limits and applicability scope of each component. In fact, these requirements are arguably the ones with the most far-reaching consequences for the overall design of MPAI. One could say that being able to provide trustworthy AI components has been placed at the centre of the MPAI philosophy, structure, and ecosystem.

To cope with this issue, MPAI has introduced the notion of performance of an implementation. An implementation “performs” well if the measurement of a set of attributes is above a certain threshold. The performance attributes are described in Section 14.4.

The performance assessment specification of an MPAI standard indicates which of the four attributes an implementation should support and to what extent (“grade”).
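The idea of "performing well" against per-attribute thresholds can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the class and function names, the 0 to 1 score scale, and the use of "greater than or equal" as the threshold test are not taken from any MPAI specification. Only the four attribute names (reliability, robustness, replicability, and fairness) come from this chapter.

```python
from dataclasses import dataclass
from typing import Mapping

# Attribute names come from the chapter; the 0..1 score scale is an assumption.
ATTRIBUTES = ("reliability", "robustness", "replicability", "fairness")


@dataclass(frozen=True)
class PerformanceSpec:
    """Hypothetical per-standard spec: minimum score for each required attribute."""
    thresholds: Mapping[str, float]

    def __post_init__(self) -> None:
        unknown = set(self.thresholds) - set(ATTRIBUTES)
        if unknown:
            raise ValueError(f"unknown attributes: {sorted(unknown)}")


def performs_well(spec: PerformanceSpec, measured: Mapping[str, float]) -> bool:
    """True if every required attribute was measured and meets its threshold."""
    return all(
        name in measured and measured[name] >= minimum
        for name, minimum in spec.thresholds.items()
    )


# A standard that (hypothetically) requires only two of the four attributes.
spec = PerformanceSpec(thresholds={"robustness": 0.8, "fairness": 0.9})
print(performs_well(spec, {"robustness": 0.85, "fairness": 0.95}))  # True
print(performs_well(spec, {"robustness": 0.85, "fairness": 0.70}))  # False
```

An attribute that the specification requires but that was never measured counts as a failure in this sketch, which is one possible design choice among several.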

It should be noted that performance is not (technical) conformance. Conformance is measured for most technical standards as the adherence to the stated design and inter-operability parameters; however, an AI-based component might well be conformant to its specification (i.e., have the correct number and type of connection channels interfacing with other components as prescribed) and still present design flaws (such as hidden biases or poor training) that prevent it from performing well. So MPAI components shall be tested for conformance and may be assessed for performance. This is a significant difference with respect to the way technical standardising bodies have been operating so far and is a necessary MPAI response to cope with the specific problems and risks presented by AI as a technology. It complicates governance a bit, but the additional guarantees it provides the user with are well worth the effort.
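The distinction between conformance and performance can be made concrete with a short Python sketch. It is only an illustration under stated assumptions: the `Channel` type, the example channel names, and the status strings are hypothetical, and conformance is reduced here to "the declared channels match the prescribed ones exactly".

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Channel:
    """One connection channel of a component (field names are illustrative)."""
    name: str
    direction: str  # "in" or "out"
    data_type: str


def is_conformant(declared: FrozenSet[Channel], required: FrozenSet[Channel]) -> bool:
    """Conformance in this sketch: exactly the prescribed channels, nothing else."""
    return declared == required


def status(conformant: bool, performs_well: Optional[bool]) -> str:
    """Conformance is mandatory; performance is optional (None = not assessed)."""
    if not conformant:
        return "rejected: not conformant"
    if performs_well is None:
        return "conformant, performance not assessed"
    return "conformant, performs well" if performs_well else "conformant, performs poorly"


required = frozenset({Channel("speech", "in", "audio"), Channel("text", "out", "string")})
declared = frozenset({Channel("speech", "in", "audio"), Channel("text", "out", "string")})

# The interface matches the specification, yet a (hypothetical) assessment
# found that the component performs poorly, for example because of bias.
print(status(is_conformant(declared, required), performs_well=False))
```

The two checks are deliberately independent: a component can pass the first and still fail the second, which is the situation the paragraph above describes.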

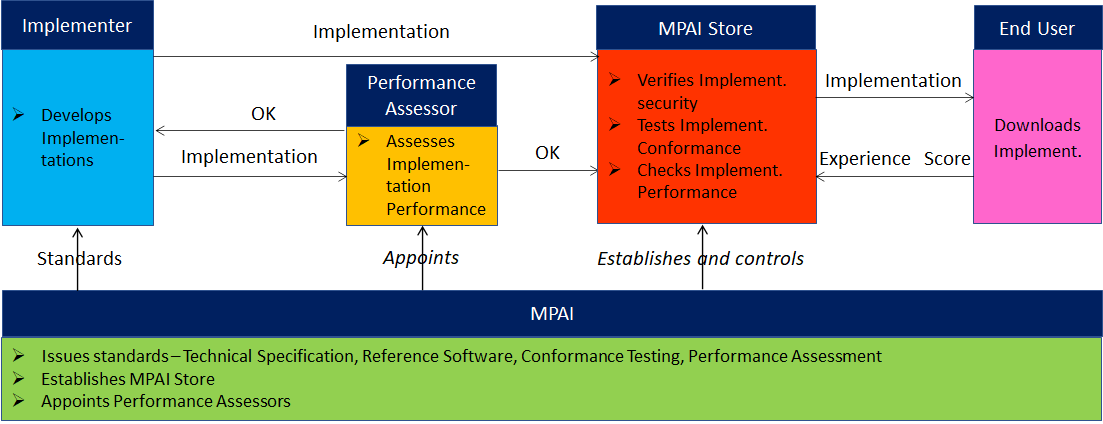

In general, MPAI’s commitment to paving the way for an ethical, democratic, and inclusive technology is reflected in the governance of its ecosystem and, more specifically, in the definition of the tests to which each novel technology standardised by MPAI is subjected. The full operation of the MPAI Ecosystem is depicted in Figure 26.

The foundations of the ecosystem governance rely on MPAI as a root of trust. MPAI develops the four components of a new standard as follows:

- Technical Specification (TS)
- Reference Software (RS)
- Conformance Testing (CT)
- Performance Assessment (PT)

Once the TS is defined, MPAI develops for each MPAI Standard a set of specifications containing the tools, the process and the data that shall be used in the future to estimate the level of performance of an implementation. As explained above, performance is defined as a function of a collection of attributes quantifying reliability, robustness, replicability, and fairness. Independent performance assessors are appointed by MPAI to carry out these procedures for each implementation.

The actors enabling the MPAI ecosystem to operate include the MPAI Store, an independent not-for-profit entity established and controlled by MPAI.

Figure 26 – The governance of the MPAI ecosystem

The MPAI Store occupies a central place in the overall architecture: it acts as a gateway through which implementers make their products available to end users, guaranteeing availability, reliability, performance, and trust. In particular, the MPAI Store receives implementations of MPAI standards, tests them for security and conformance, and receives and stores the results of the performance tests. The MPAI Store also assigns the AIWs and AIMs provided by each implementer to security experts for testing; it receives distribution licenses for AIFs, AIWs and AIMs, and makes implementations available to users.

So, to make the implementation of an MPAI standard available to end users, a number of elements have to come into place:

- The experts of the appropriate MPAI development committee develop the Technical Specification, provide the Reference Software, and develop the Conformance Testing and Performance Assessment specifications
- An implementer creates an application based on the MPAI Technical Specification
- The application is evaluated for security and correct operation by an MPAI-appointed assessor following the Conformance Testing and Performance Assessment specifications
- The way the application performs is graded according to the assessor’s evaluation.
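The steps above can be sketched as a simple gate in front of the Store. This is a minimal Python illustration, not the actual Store interface: `Submission`, `Store`, `publish`, and the grade label are hypothetical names, and the rule that security and conformance are mandatory while a performance grade is optional is inferred from the description in this chapter.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Submission:
    """Hypothetical record for an implementation submitted to the Store."""
    implementer: str
    standard: str
    passed_security: bool = False
    passed_conformance: bool = False
    performance_grade: Optional[str] = None  # filled in by an assessor, if assessed


@dataclass
class Store:
    """Hypothetical Store: lists only secure and conformant implementations."""
    listings: List[Submission] = field(default_factory=list)

    def publish(self, submission: Submission) -> bool:
        # Security and conformance are treated as mandatory gates.
        if not (submission.passed_security and submission.passed_conformance):
            return False
        # The performance grade, when present, is stored alongside the listing.
        self.listings.append(submission)
        return True


store = Store()
accepted = Submission("Implementer A", "Example-Standard", True, True, performance_grade="grade-2")
rejected = Submission("Implementer B", "Example-Standard", passed_security=True)

print(store.publish(accepted), store.publish(rejected))  # True False
print([s.implementer for s in store.listings])           # ['Implementer A']
```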

In addition, most MPAI standards will rely on the AI Framework, MPAI-AIF, which, as previously explained, is the overarching MPAI standard specifying how different AI Modules (AIMs) can be connected into AI Workflows (AIWs) to build more complex applications and how these are executed on an MPAI controller (i.e., an AIF implementation). To execute an implementation of any MPAI standard, a user will also need an implementation of MPAI-AIF. Since MPAI-AIF is itself a regular standard, that implementation will in turn have been subjected to conformance and performance tests – all for the sake of reliable AI technology!
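As a rough illustration of how modules are chained into a workflow and run by a controller, consider the Python sketch below. It is not the MPAI-AIF API: the `AIM`, `AIW`, and `Controller` classes are hypothetical, the workflow is assumed to be a simple linear chain, and the two text-processing functions stand in for real AI modules.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass(frozen=True)
class AIM:
    """Hypothetical AI Module: a named processing step."""
    name: str
    process: Callable[[Any], Any]


@dataclass(frozen=True)
class AIW:
    """Hypothetical AI Workflow: an ordered chain of modules (linear by assumption)."""
    name: str
    modules: List[AIM]


class Controller:
    """Hypothetical controller that executes a workflow step by step."""

    def execute(self, workflow: AIW, data: Any) -> Any:
        for module in workflow.modules:
            data = module.process(data)  # output of one AIM feeds the next
        return data


# Two toy modules standing in for real AI components.
normalise = AIM("normalise", lambda text: text.strip().lower())
tokenise = AIM("tokenise", lambda text: text.split())

workflow = AIW("text-prep", [normalise, tokenise])
result = Controller().execute(workflow, "  Hello MPAI World ")
print(result)  # ['hello', 'mpai', 'world']
```

A real framework would also need to describe branching topologies and typed channels between modules; the linear chain is used here only to keep the example short.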

To summarise, the MPAI ecosystem and its AI-specific design allow end users to access implementations of AIFs, AIWs and AIMs through the MPAI Store that are trustworthy and secure. In addition, by mandating the specification of performance tests and appointing independent performance assessors, MPAI guarantees that each AI component it provides will offer a minimum level of performance, as specified in the standard defining the component. Through the governance and the structure of its ecosystem, MPAI addresses several problems inherently associated with AI applications and provides the implementers and end users with reliable solutions.
