MPAI is barely 5 months old, but its community is expanding. We therefore thought it would be useful to have a slim and effective communication channel to keep our extended community informed of the latest and most relevant news. We plan to publish a monthly newsletter.

We are keen to hear from you, so don’t hesitate to give us your feedback.

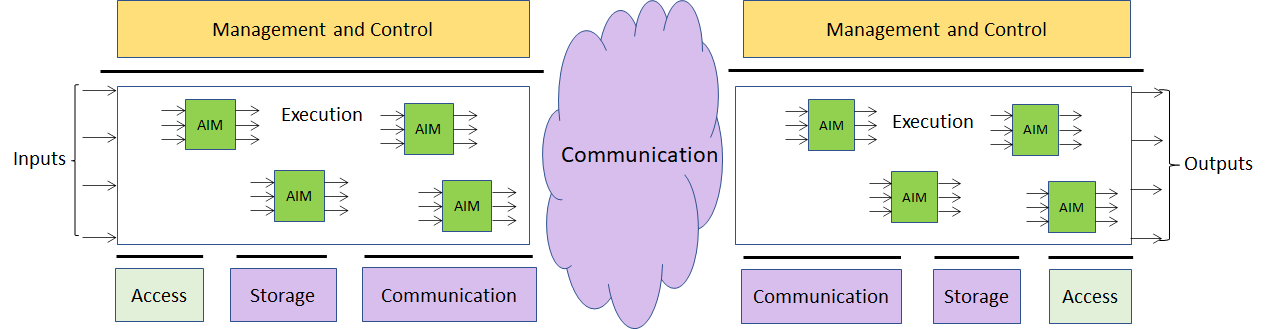

MPAI has started the development of its first standard: MPAI-AIF.

In December last year, MPAI issued a Call for Technologies for its first standard. The call concerned “AI Framework”, an environment capable of assembling and executing AI Modules (AIMs), components that perform certain functions to achieve certain goals.

The call requested technologies to support the life cycle of single and multiple AIMs, and to manage machine learning and workflows.

The standard is expected to be released in July 2021.

MPAI is looking for technologies to develop its Context-based Audio Enhancement standard

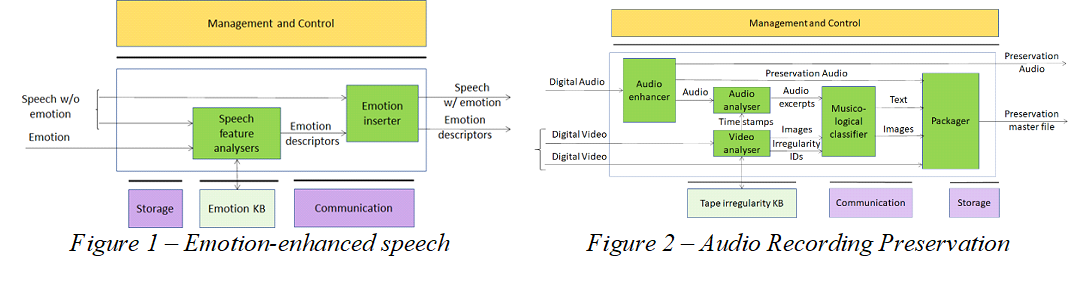

In September last year, 3 weeks before MPAI was formally established, the group of people who were developing the MPAI organisation had already identified Context-based Audio Enhancement as an important target of MPAI standardisation. The idea was to improve the user experience in several audio-related applications, including entertainment, communication, teleconferencing, gaming, post-production and restoration. The intention was promptly announced in a press release.

A lot has happened since then. Finally, in February 2021 the original intention took shape with the publication of a Call for Technologies for the upcoming Context-based Audio Enhancement (MPAI-CAE) standard.

The Call envisages 4 use cases. In Emotion-enhanced speech (left), emotionless synthesised or natural speech is enhanced with a specified emotion at a specified intensity. In Audio recording preservation (right), sound from an old audio tape is enhanced and a preservation master file is produced using a video camera pointed at the magnetic head.

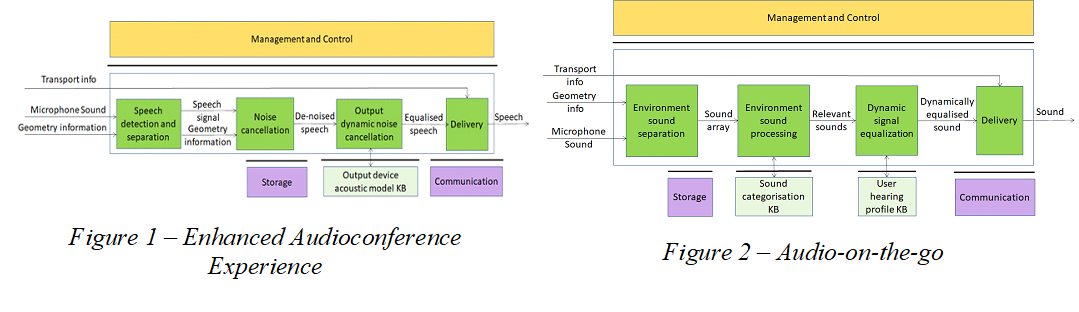

In Enhanced audioconference experience (left), speech captured in an unsuitable environment (e.g. at home) is cleaned of unwanted sounds. In Audio on the go (right), the audio experienced by a user in an environment preserves the external sounds that are considered relevant.

MPAI needs more technologies

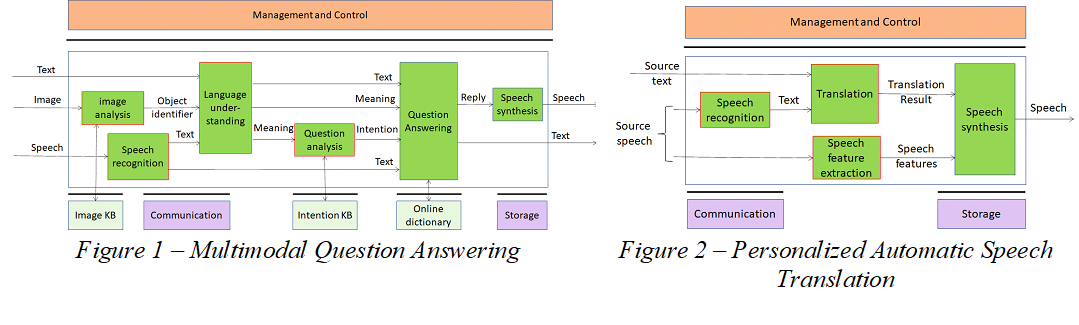

On the same day the MPAI-CAE Call was published, MPAI published another Call for Technologies for the Multimodal Conversation (MPAI-MMC) standard. This broad application area can benefit greatly from AI.

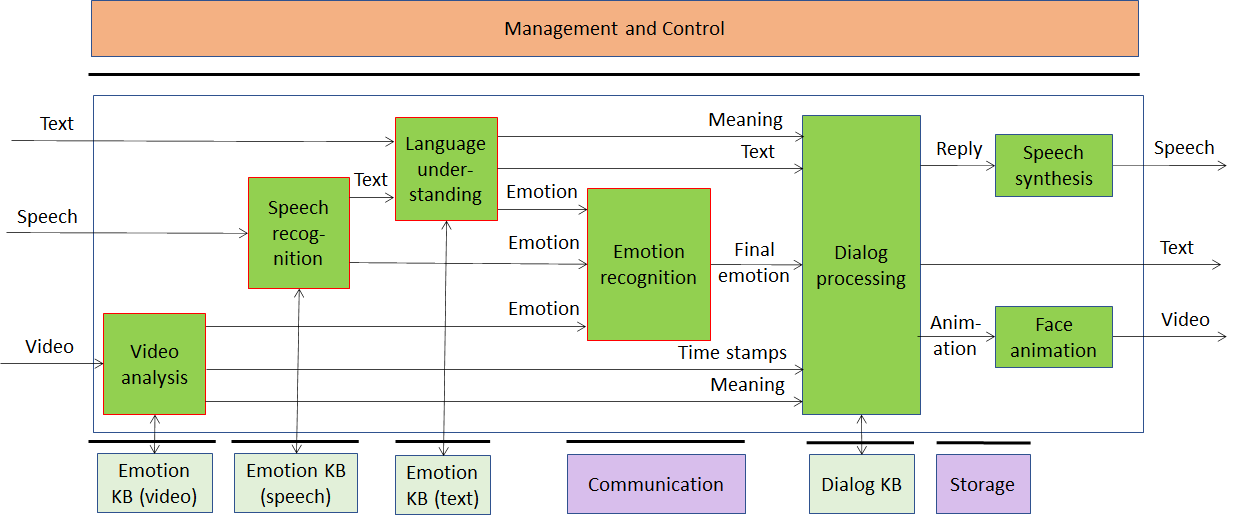

Currently, the standard supports 3 use cases in which a human holds an audio-visual conversation with a machine that emulates human-to-human conversation in completeness and intensity. In Conversation with emotion, the human holds a dialogue using speech, video and possibly text with a machine that responds with a synthesised voice and an animated face.

In Multimedia question answering (left), a human requests information about an object while displaying it. The machine responds with synthesised speech. In Personalized Automatic Speech Translation (right), a sentence uttered by a human is translated by a machine using a synthesised voice that preserves the human speech features.