Highlights

- Online presentation of Neural Network Watermarking Call for Technologies

- The Neural Network Watermarking Call for Technologies

- Soon MPAI will be five – part 1

- Meetings in the coming June/July 2025 meeting cycle

Online presentation of Neural Network Watermarking Call for Technologies

The 57th MPAI General Assembly has published a new Call for Neural Network Traceability Technologies (NNW-TEC).

An online presentation will be held on 2025-07-01 at 15:00 UTC.

Please register at https://bit.ly/4mW6AWX to attend.

The Neural Network Watermarking Call for Technologies

Introduction

During the last decade, Neural Networks have been deployed in an increasing variety of domains, and producing them has become costly in terms of both resources (GPUs, CPUs, memory) and time. Moreover, users of Neural Network-based services increasingly expect a certified quality of service.

NN Traceability offers solutions that satisfy both needs by ensuring that a deployed Neural Network is traceable and that any tampering is detected.

Inherited from the multimedia realm, watermarking comprises a family of methods and application tools that imperceptibly and persistently insert metadata (a payload) into an original NN model. Detecting or decoding this metadata, either from the model itself or from any of its inferences, then provides the means to trace the source of the model and to verify its authenticity.
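As a rough illustration of the insert-then-decode idea, here is a minimal sketch of a spread-spectrum-style weight watermark. It is not a method taken from MPAI-NNW: the function names (`make_carriers`, `embed`, `decode`), the strength `alpha`, the key value, and the toy Gaussian "model" are all assumptions made for this example.

```python
import random

def make_carriers(key, n_bits, n_weights):
    """One key-seeded pseudo-random +/-1 sequence (carrier) per payload bit."""
    rng = random.Random(key)
    return [[rng.choice((-1.0, 1.0)) for _ in range(n_weights)]
            for _ in range(n_bits)]

def embed(weights, payload, key, alpha=0.005):
    """Return a copy of `weights` with each bit added as +/-alpha times its carrier."""
    marked = list(weights)
    carriers = make_carriers(key, len(payload), len(weights))
    for bit, carrier in zip(payload, carriers):
        sign = 1.0 if bit else -1.0
        for j, c in enumerate(carrier):
            marked[j] += alpha * sign * c
    return marked

def decode(weights, n_bits, key):
    """Recover each bit from the sign of the correlation with its carrier."""
    carriers = make_carriers(key, n_bits, len(weights))
    return [1 if sum(w * c for w, c in zip(weights, carrier)) > 0 else 0
            for carrier in carriers]

# Hypothetical toy "model": 8192 weights drawn from a zero-mean Gaussian.
rng = random.Random(0)
weights = [rng.gauss(0.0, 0.05) for _ in range(8192)]
payload = [1, 0, 1, 1, 0, 0, 1, 0]

marked = embed(weights, payload, key=1234)
recovered = decode(marked, len(payload), key=1234)
print("payload recovered:", recovered == payload)
print("largest weight change:", max(abs(m - w) for m, w in zip(marked, weights)))
```

Only a holder of the key can regenerate the carriers, which is what ties the decoded payload to its source. A real scheme would also have to check that the marked model still performs its task, which this toy does not attempt.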

Fingerprinting is an additional traceability technology: a family of methods and application tools that extract salient information (a fingerprint) from the original NN model and subsequently identify that model based on the extracted information.
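A passive method of this kind can be sketched as follows. The sketch assumes a SimHash-style fingerprint (signs of key-seeded random projections of the weights) and a Hamming-distance match; the names `fingerprint`, `hamming`, `matches`, and the threshold of 32 bits are illustrative choices, not part of any MPAI specification.

```python
import random

def fingerprint(weights, key, n_bits=128):
    """Signs of key-seeded Gaussian random projections of the weights."""
    rng = random.Random(key)
    bits = []
    for _ in range(n_bits):
        projection = sum(w * rng.gauss(0.0, 1.0) for w in weights)
        bits.append(1 if projection > 0 else 0)
    return bits

def hamming(a, b):
    """Number of positions where two fingerprints differ."""
    return sum(x != y for x, y in zip(a, b))

def matches(fp_a, fp_b, max_distance=32):
    """Declare a match when the fingerprints differ in few enough bits."""
    return hamming(fp_a, fp_b) <= max_distance

# Hypothetical toy models: an original, a lightly modified copy, an unrelated one.
rng = random.Random(0)
original = [rng.gauss(0.0, 0.05) for _ in range(1024)]
modified = [w + rng.gauss(0.0, 0.005) for w in original]
unrelated = [rng.gauss(0.0, 0.05) for _ in range(1024)]

fp_original = fingerprint(original, key=42)
fp_modified = fingerprint(modified, key=42)
fp_unrelated = fingerprint(unrelated, key=42)

print("modified copy matches:", matches(fp_original, fp_modified))
print("unrelated model matches:", matches(fp_original, fp_unrelated))
```

Unlike the watermarking case, nothing is written into the model: the fingerprint is computed from the weights as they are, so the model's behaviour is untouched.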

Therefore, MPAI has found the application area called “Neural Network Watermarking” to be relevant for MPAI standardization, as there is a need both for Neural Network Traceability technologies and for assessing the performance of such technologies.

MPAI available standards

In response to these needs, MPAI has established the Neural Network Watermarking Development Committee (NNW-DC). The DC has developed Technical Specification: Neural Network Watermarking (MPAI-NNW) – Traceability (NNW-NNT) V1.0. This specifies methods to evaluate the following aspects of Active (Watermarking) and Passive (Fingerprinting) Neural Network Traceability Methods:

- The ability of a Neural Network Traceability Detector/Decoder to detect/decode/match Traceability Data when the traced Neural Network has been modified,

- The computational cost of injecting, extracting, detecting, decoding, or matching Traceability Data,

- Specifically for active tracing methods, the impact of inserted Traceability Data on the performance of a neural network and on its inference.
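The first two aspects can be illustrated with a small harness that applies modifications to a marked model, then records the bit error rate and the decoding time. This is a sketch under stated assumptions, not the evaluation procedure defined in NNW-NNT: the toy block-mean watermark (`toy_embed`, `toy_decode`), the two modifications, and all parameter values are hypothetical.

```python
import random
import time

BLOCK = 256  # weights per payload bit in the toy method below

def toy_embed(weights, payload, delta=0.02):
    """Toy active method: shift each block so its mean is +delta (1) or -delta (0)."""
    marked = list(weights)
    for i, bit in enumerate(payload):
        block = marked[i * BLOCK:(i + 1) * BLOCK]
        shift = (delta if bit else -delta) - sum(block) / BLOCK
        marked[i * BLOCK:(i + 1) * BLOCK] = [w + shift for w in block]
    return marked

def toy_decode(weights, n_bits):
    """Read each bit back from the sign of its block sum."""
    return [1 if sum(weights[i * BLOCK:(i + 1) * BLOCK]) > 0 else 0
            for i in range(n_bits)]

def add_noise(weights, std, seed=0):
    """Modification: add zero-mean Gaussian noise to every weight."""
    rng = random.Random(seed)
    return [w + rng.gauss(0.0, std) for w in weights]

def prune(weights, fraction):
    """Modification: zero out the given fraction of smallest-magnitude weights."""
    ranked = sorted(abs(w) for w in weights)
    threshold = ranked[min(int(fraction * len(ranked)), len(ranked) - 1)]
    return [0.0 if abs(w) < threshold else w for w in weights]

def bit_error_rate(expected, decoded):
    return sum(e != d for e, d in zip(expected, decoded)) / len(expected)

def evaluate(marked, payload, modifications):
    """Return {name: (bit error rate, decoding time in seconds)}."""
    report = {}
    for name, modify in modifications.items():
        modified = modify(marked)
        start = time.perf_counter()
        decoded = toy_decode(modified, len(payload))
        elapsed = time.perf_counter() - start
        report[name] = (bit_error_rate(payload, decoded), elapsed)
    return report

rng = random.Random(0)
payload = [1, 0, 1, 1, 0, 0, 1, 0]
weights = [rng.gauss(0.0, 0.05) for _ in range(BLOCK * len(payload))]
marked = toy_embed(weights, payload)

report = evaluate(marked, payload, {
    "unmodified": lambda w: list(w),
    "gaussian noise (std 0.01)": lambda w: add_noise(w, 0.01),
    "pruning (30%)": lambda w: prune(w, 0.3),
})
for name, (ber, seconds) in report.items():
    print(f"{name}: BER={ber:.3f}, decode time={seconds:.6f}s")
```

The third aspect, the impact of the inserted data on the network's own performance, would need a real task and test set, so it is left out of this sketch.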

MPAI-NNW Future Standards

During its 57th GA held on June 11th, MPAI released a Call for Neural Network Watermarking (MPAI-NNW) – Technologies (NNW-TEC). This call requests Neural Network Traceability Technologies that make it possible to:

- verify that the data provided by one Actor and received by another Actor is not compromised, i.e. that it can be used for the intended scope,

- identify the Actors providing and receiving the data, and

- evaluate the quality of solutions supporting the previous two items.

An Actor is a process producing, providing, processing, or consuming information.

MPAI NNT Actors

Four types of Actors are identified as playing a traceability-related role in the use cases.

- NN owner – the developer of the NN, who needs to ensure that ownership of NN can be claimed.

- NN traceability provider – the developer of the traceability technology able to carry a payload in a neural network or in an inference.

- NN customer – the user who needs the NN owner’s NN to make a product or offer a service.

- NN end-user – the user who buys an NN-based product or subscribes to an NN-based service.

Examples of Actors are:

- Edge-devices and software

- Application devices and software

- Network devices and software

- Network services

MPAI NNT Use cases

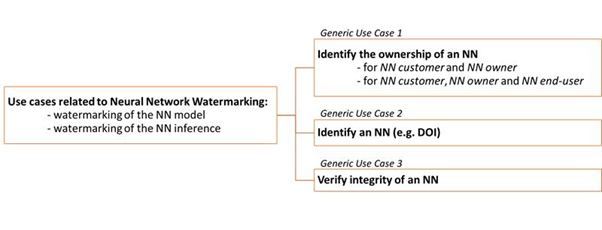

MPAI use cases relate to both the NN per se (i.e., to the data representation of the model) and to the inference of that NN (i.e., to the result produced by the network when fed with some input data), as illustrated in Figure 1.

Figure 1: Synopsis of NNT generic use cases: Identify the ownership of an NN, Identify an NN (e.g. DOI) and Verify integrity of an NN

The NNW-TEC use cases document is available; it includes sequence diagrams describing the positions and actions of the four main Actors in the workflow.

MPAI NNT Service and application scenarios

MPAI NNT is relevant for services and applications benefitting from one or several conventional NN tasks such as:

- Video/image/audio/speech/text classification

- Video/image/audio/speech/text segmentation

- Video/image/audio/speech/text generation

- Video/image/audio/speech decoding

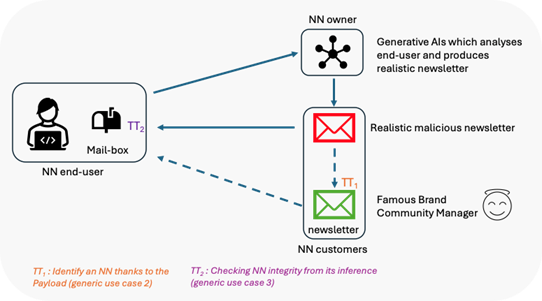

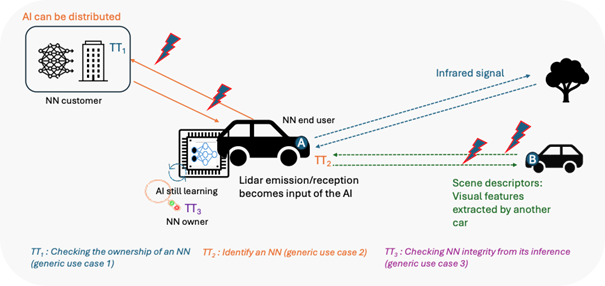

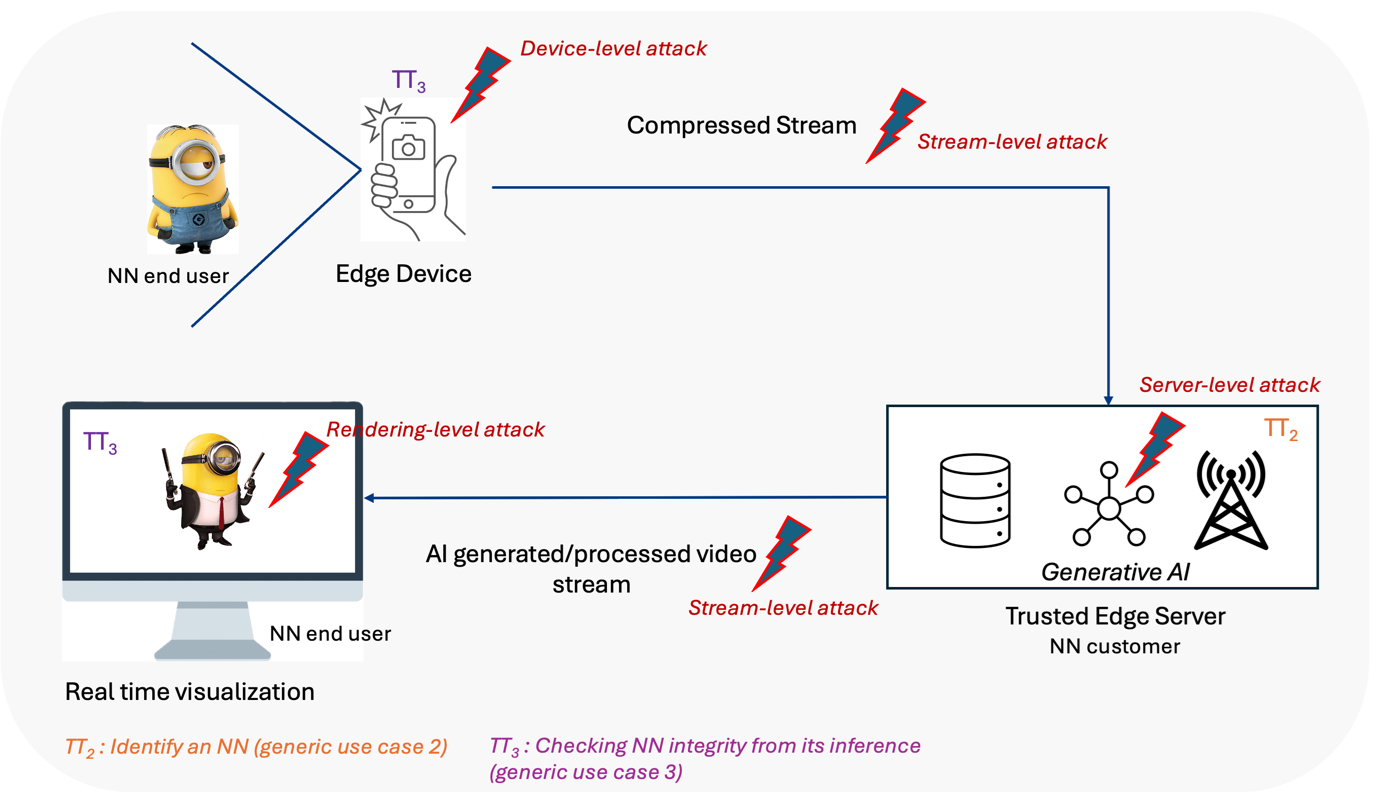

Figures 2, 3 and 4 present three types of services and applications that combine the generic use cases presented above.

The first example (Traceable newsletter service – Figure 2) covers the case where an end-user subscribes to a newsletter that is produced by a Generative AI service (provided by an NN customer) according to the end-user profile. In such a use case, a malicious user might try to tamper with the very production of the personalized content or to modify it during its transmission.

The second example (Autonomous vehicle services – Figure 3) deals with the traceability and authenticity of the multimodal content that is exchanged in various ways: (1) car A (acting as an NN end-user) sends acquired signals to a server (acting as an NN customer or owner) for data processing, (2) an embedded AI transmits instructions such as braking, turning, or accelerating to the car (NN owner and end-user), and (3) another vehicle B in the environment transmits environmental information to vehicle A. Various types of malicious attacks with critical consequences can be envisaged: the AI can be intercepted and corrupted (e.g. by adversarial learning), and the data can be corrupted in transmission from and/or to the autonomous vehicle (forced connection interruption or data modification).

The third example (AI generated or processed information services – Figure 4) shows how NNT can be beneficial when real images are modified by a deepfake process. A user capturing a video sequence with a connected camera would like to appear as the archetypal secret agent (say, James Bond) by interacting with a generative AI service remotely accessible in the network. This service synthesizes novel audiovisual content, which is then rendered on a large display for the user to enjoy. Such services are not immune from security threats: an attacker can intercept the encoded stream before it arrives at the trusted AI server and process it through a malicious edge-deployed generative AI, or can compromise the trusted generative AI service itself (e.g. by employing adversarial training techniques).

Figure 2: Autonomous vehicle services

Figure 3: AI-generated newsletter example

Figure 4: AI generated or processed information services

Soon MPAI will be five – part 1

Abstract

For five years, MPAI – Moving Picture, Audio, and Data Coding by Artificial Intelligence, the international, unaffiliated, not-for-profit association developing standards for AI-based data coding – has been carrying out its mission, making its results widely known and its status recognised. However, not everyone is clear about why MPAI was established, what its distinctive features are, and why it stands out among the organisations developing standards.

This is the first of a series of articles that will revisit the driving force that allowed MPAI to develop 15 standards in a variety of fields where AI can make the difference and have half a score under development. The articles will pave the way for the next five years of MPAI.

1. Standards have a divine nature

MPAI was established as a Swiss not-for-profit organisation on 30 September 2020 with the mission of developing data coding standards based on Artificial Intelligence. The first element that has driven MPAI into existence is the very word STANDARD. If you ask people “what is a standard?” you are likely to obtain a wide range of responses. The simplest and most effective answer, although a rather obscure one, is instead “a tool that connects minds”. A standard establishes a paradigm that allows a mind to interpret what another mind expresses in words or by other means of communication.

The easiest example of a standard is language itself. A language assigns labels – words – to physical and intellectual objects. Apparently, the language-defined labels are what make humans different from animals, even though there are innumerable forms of language used by animals that are less rich than the human one.

Words have a divine nature. So, it is no surprise that St. John’s Gospel starts with the sentence “In the beginning was the Word, and the Word was with God, and God was the Word”.

Is this divine nature only applicable to language? Well, no. If we say that 20 mm of rain fell in 4 hours, we are using language to convey information about rain that would be void of quantitative value if there were no standard for length called the meter and no standard for time called the hour.

Next to these lofty goals, standards have other practical purposes. A technical standard enacted by a country may be used as a tool to limit and sometimes even outlaw products not conforming to that standard in that country. The existence of standards used for this purpose was the main driver toward the establishment of the Moving Picture Experts Group (MPEG): a single digital video standard that eventually uprooted scores of analogue television standards, with myriads of sub-standards. The intention was to nullify this malignant use of standards.

The goal of MPEG was to serve humans by enabling them to hear and see, thanks to machines that were able to “understand” the bits generated by other machines. In other words, it was necessary that machines be made able to understand the “words” of other machines.

Come 2020, Artificial Intelligence was not yet in the headlines, but the direction was clear. AI systems would be endowed with more and more “intelligence”, communicate with each other, and let humans communicate with them. What forms the “word” would take was not clear, but that the forms would be manifold was.

While MPEG had ushered in a new spirit in standardisation, it had also fostered a transformation in the way the need for standards would take shape and how standards would be exploited. In the early MPEG days, most industries still had research laboratories where the advancement of technology was monitored and fostered with a view to exploiting technology for new products and services. New findings would be patented and new patent-based products launched. The market would bless the winner among different products doing more or less the same thing with different technologies.

MPEG rendered useless the costly step of battling on patents in the market, moving the battle to the standards committee instead. As a patent in a successful standard was highly remunerative, it did not take long for the industry to invest in any patentable research. By industry, we mean “any” industry, even entities that had no business in products and services. The idea was that a day would come when a patent would be needed in “a” standard. That single patent would repay the tens, hundreds, or thousands of unused patents.

This was significant progress in terms of optimising efforts to generate innovation and letting more actors join the fray. That progress, however, came at a cost: the disconnect between generating innovation and exploiting it. An industry that had made an investment to achieve an innovation had every interest in seeing that innovation deployed. A company that has a portfolio of 10,000 patents may decide not to license a patent (in practice, by dragging its feet in releasing it) if that helps it license a more remunerative patent instead.

Certainly, this epochal evolution has resulted in a more efficient generation of innovation but is actually hindering exploitation of valuable innovations.

(to be continued)

Meetings in the coming June/July 2025 meeting cycle

| Group name | 16-20 June | 23-27 June | 30 June – 4 July | 07-11 July | Time (UTC) |
|---|---|---|---|---|---|
| AI Framework | 16 | 23 | 30 | 7 | 16 |
| AI-based End-to-End Video Coding | 18 | | 2 | | 14 |
| AI-Enhanced Video Coding | 18 | 25 | 2 | 8 | 13 |
| Artificial Intelligence for Health | 16 | | | | 8:30 |
| | | 23 | | | 10 |
| Communication | 19 | | 3 | | 13:30 |
| Compression & Understanding of Industrial Data | 18 | 25 | | | 9:30 |
| Connected Autonomous Vehicle | 19 | 26 | 3 | 10 | 15 |
| Context-based Audio enhancement | 17 | 24 | 1 | 8 | 16 |
| Industry and Standards | 20 | | 4 | | 16 |
| MPAI Metaverse Model | 20 | 27 | 4 | 11 | 15 |
| Multimodal Conversation | 17 | 24 | 1 | 8 | 14 |
| Neural Network Watermarking | 17 | 24 | 1 | 8 | 15 |
| Portable Avatar Format | 20 | 27 | 4 | 11 | 10 |
| XR Venues | 17 | 24 | 1 | 8 | 17 |
| General Assembly (MPAI-58) | | | | 9 | 15 |