Highlights

- Learn about the Up-sampling Filter for Video applications (EVC-UFV) standard!

- Exploring the Up-sampling Filter for Video applications (EVC-UFV) standard

- Soon MPAI will be five – Part 2

- Meetings in the coming July/August 2025 meeting cycle

Learn about the Up-sampling Filter for Video applications (EVC-UFV) standard!

The Up-sampling Filter for Video applications (EVC-UFV) standard substantially improves video up-sampling. These are the options you have to learn about it:

- Read the online text of the standard here.

- Read a brief introduction to the standard here.

- Attend the online presentation on 23 July at 13 UTC by registering here.

In any case, provide your comments to the MPAI Secretariat by 2025/08/18 T23:59 UTC.

Exploring the Up-sampling Filter for Video applications (EVC-UFV) standard

We are living in an unprecedented age in which we are ubiquitously surrounded by digital devices that enhance our lives with large amounts of streamed video. Consumer demand for high-quality video delivery is constantly increasing. Addressing it requires new and more efficient compression tools that provide high compression rates while preserving high video quality. New algorithms departing from the traditional block-based hybrid coding approach respond to this need.

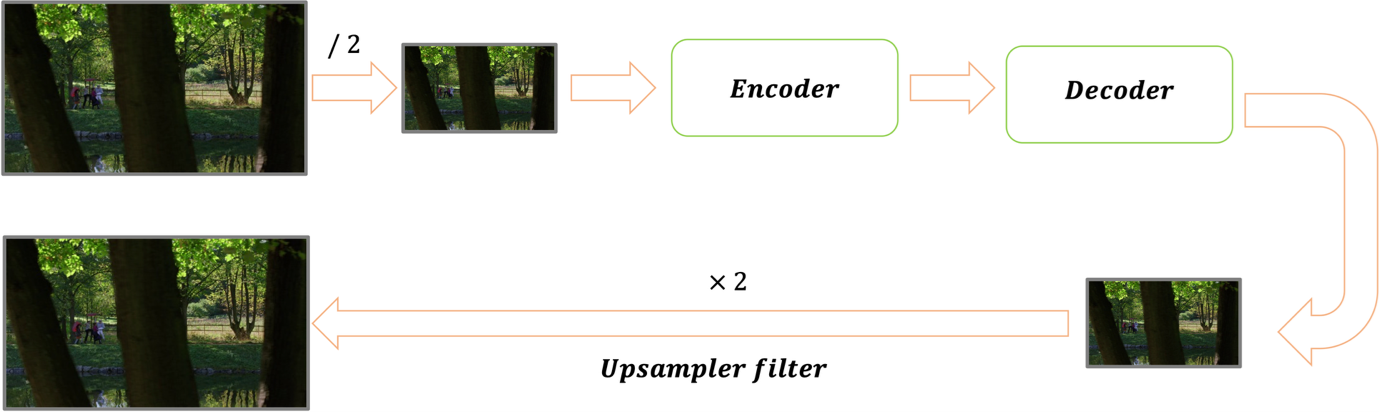

A method typically used in video coding is to down-sample the input video frames to half their resolution before encoding. This reduces the computational cost but requires an up-sampling filter that recovers the original resolution of the decoded video while minimising the loss in visual quality. The filters currently used are based on bicubic and Lanczos interpolation.

Figure 1 – Up-sampling Filters for Video application (EVC-UFV)
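For reference, the conventional bicubic up-sampling that EVC-UFV is compared against can be sketched in a few lines. This is a minimal 1-D illustration, assuming the Keys cubic-convolution kernel with a = -0.5, pixel-centre alignment, and edge replication at the borders; these are common choices, not something the standard prescribes. A 2-D frame would be processed separably, first along rows and then along columns.

```python
import math


def cubic_kernel(x: float, a: float = -0.5) -> float:
    """Keys cubic-convolution kernel; a = -0.5 is the usual 'bicubic' setting."""
    x = abs(x)
    if x <= 1:
        return (a + 2) * x**3 - (a + 3) * x**2 + 1
    if x < 2:
        return a * x**3 - 5 * a * x**2 + 8 * a * x - 4 * a
    return 0.0


def upsample_1d(samples: list[float], factor: int = 2) -> list[float]:
    """Up-sample a 1-D signal by an integer factor with cubic convolution.

    Output sample i is mapped to input position (i + 0.5) / factor - 0.5
    (pixel-centre alignment); out-of-range taps reuse the edge sample.
    """
    n = len(samples)
    out = []
    for i in range(n * factor):
        pos = (i + 0.5) / factor - 0.5
        base = math.floor(pos)
        acc = 0.0
        # Four taps: two input samples on each side of the output position.
        for k in range(base - 1, base + 3):
            idx = min(max(k, 0), n - 1)  # edge replication
            acc += samples[idx] * cubic_kernel(pos - k)
        out.append(acc)
    return out
```

Because the kernel weights always sum to one, flat areas are preserved exactly, and with a = -0.5 linear ramps are also reproduced away from the borders; what such a fixed kernel cannot do is restore detail lost in down-sampling and coding, which is where a learned filter can help.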

In the last few years, Artificial Intelligence (AI), Machine Learning (ML), and especially Deep Learning (DL) techniques have demonstrated their capability to enhance the performance of various image and video processing tasks. MPAI has investigated how video coding performance could be improved by replacing traditional coding blocks with deep-learning ones. The study showed that deep-learning based up-sampling filters significantly improve the performance of existing video codecs.

MPAI issued a Call for Technologies for up-sampling filters for video applications in October 2024. This was followed by an intense phase of development that enabled MPAI to approve Technical Specification: AI-Enhanced Video Coding (MPAI-EVC) – Up-sampling Filter for Video application (EVC-UFV) V1.0 with a request for Community Comments at its 58th General Assembly (MPAI-58).

The EVC-UFV standard enables efficient, low-complexity up-sampling filters applied to video with a bit depth of 8 or 10 bits per component, in the standard YCbCr colour space with 4:2:0 sub-sampling, encoded with a variety of coding technologies using different encoding configurations such as Random Access and Low Delay.
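To make the input format concrete, the sketch below computes the plane sizes and raw frame size of a YCbCr 4:2:0 frame at 8 or 10 bits per component. It assumes, as in common raw file formats, that 10-bit samples are stored in 16-bit words; the function name is hypothetical.

```python
def yuv420_frame_layout(width: int, height: int, bit_depth: int) -> dict:
    """Plane sizes and raw size of one YCbCr 4:2:0 frame (illustrative helper)."""
    if bit_depth not in (8, 10):
        raise ValueError("only 8- and 10-bit content is considered here")
    # 4:2:0: each chroma plane (Cb, Cr) has half the luma width and height,
    # rounded up for odd dimensions.
    chroma_w, chroma_h = (width + 1) // 2, (height + 1) // 2
    # Assumption: 10-bit samples occupy 2 bytes each; 8-bit samples 1 byte.
    bytes_per_sample = 1 if bit_depth == 8 else 2
    luma = width * height
    chroma = 2 * chroma_w * chroma_h
    return {
        "luma_plane": (width, height),
        "chroma_plane": (chroma_w, chroma_h),
        "samples": luma + chroma,
        "bytes": (luma + chroma) * bytes_per_sample,
    }
```

For a 1920×1080 frame this gives 960×540 chroma planes and 3,110,400 samples, i.e. 1.5 samples per pixel, occupying twice as many bytes at 10 bits as at 8 bits under the storage assumption above.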

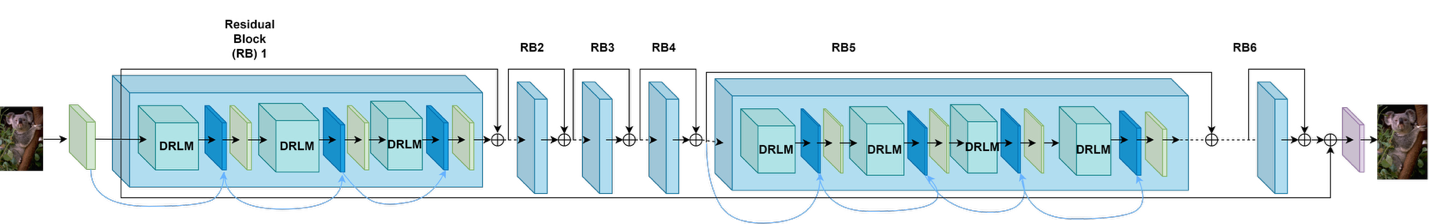

As depicted in Figure 2, the starting point for the filter is the Densely Residual Laplacian Super-Resolution network (DRLN), a novel deep-learning approach.

Figure 2 – Densely Residual Laplacian Super-Resolution network (DRLN).

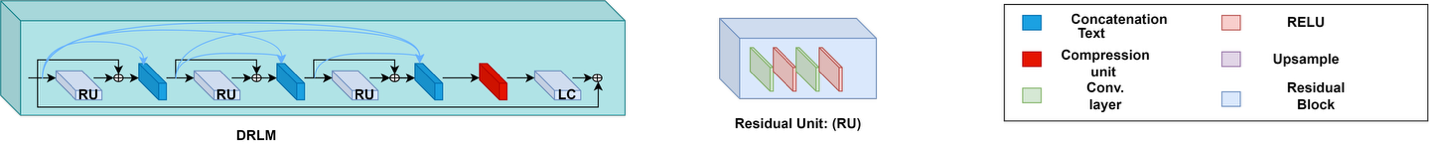

The complexity of the filter is reduced in two steps. First, a drastic simplification of the deep-learning structure, which reduces the number of blocks, provides a much lighter network while keeping performance similar to that of the baseline DRLN. This is achieved by identifying the DRLN's principal components and understanding the impact of each component on the output video frame quality, memory size, and computational cost.

As shown in Figure 2, the main component of the DRLN architecture is the Residual Block, which is composed of Densely Residual Laplacian Modules (DRLMs) and a convolutional layer. Each DRLM contains three Residual Units, one compression unit, and one Laplacian attention unit (a set of convolutional layers with a square filter size and a dilation greater than or equal to the filter size). Each Residual Unit consists of two convolutional layers and two ReLU layers. All DRLMs in each Residual Block and all Residual Units in each DRLM are densely connected. The Laplacian attention unit consists of three convolutional layers with filter size 3×3 and dilation (a technique that expands a convolutional kernel by inserting holes or gaps between its elements) equal to 3, 5, and 7. All other convolutional layers in the network have filter size 3×3 and dilation equal to 1. Throughout the network, the number of feature maps (the outputs of the convolutional layers) is 64.
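The effect of dilation in the Laplacian attention unit can be checked with a short calculation. A k×k kernel with dilation d still has k×k weights per channel pair, but its taps are spread over k + (k - 1)(d - 1) samples per dimension. The helpers below are a hypothetical illustration of that formula, not part of the standard.

```python
def effective_kernel_span(kernel: int, dilation: int) -> int:
    """Samples covered per dimension by a kernel with `kernel` taps and the given dilation."""
    # Dilation inserts (dilation - 1) gaps between adjacent taps.
    return kernel + (kernel - 1) * (dilation - 1)


def kernel_weights(kernel: int) -> int:
    """Weights per input/output channel pair of a square kernel (independent of dilation)."""
    return kernel * kernel


# Laplacian attention unit: 3x3 kernels with dilation 3, 5 and 7.
laplacian_spans = [effective_kernel_span(3, d) for d in (3, 5, 7)]
# All other convolutional layers: 3x3 kernels with dilation 1.
regular_span = effective_kernel_span(3, 1)
```

Here `laplacian_spans` is `[7, 11, 15]` and `regular_span` is `3`, while every kernel keeps 9 weights per channel pair: the dilated layers look at a much wider context at no extra parameter cost.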

Based on this structural analysis, reducing the number of main Residual Blocks, adding more DRLMs, and reducing the complexity of the Residual Unit and the number of hidden convolutional layers and feature maps drastically accelerates execution and reduces memory requirements without substantially affecting the network's visual quality.

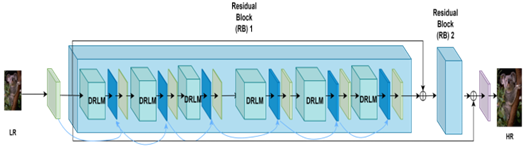

Figure 3 depicts the resulting EVC-UFV Up-sampling Filter.

Figure 3 – Structure of the EVC-UFV Up-sampling Filter

The parameters of the original and complexity-reduced network are given in Table 1.

Table 1 – Parameters of the original and the complexity-reduced network

| Parameter | Original | Final |
|---|---|---|
| Residual Blocks | 6 | 2 |
| DRLMs per Residual Block | 3 | 6 |
| Residual Units per DRLM | 3 | 3 |
| Hidden Convolutional Layers per Residual Unit | 2 | 1 |
| Input Feature Maps | 64 | 32 |
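To give a rough sense of what the changes in Table 1 mean, the sketch below counts only the convolutional layers inside the Residual Units and their weights. It assumes, as a hypothetical simplification, that each of these layers is a 3×3 convolution with bias mapping the stated number of feature maps to the same number; it ignores dense-connection concatenations, compression and Laplacian attention units, and the head, tail, and up-sampling layers, so the results are not the parameter counts of either network.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrlnConfig:
    """Structural parameters from Table 1 (class name is illustrative)."""
    residual_blocks: int
    drlms_per_block: int
    units_per_drlm: int
    convs_per_unit: int
    feature_maps: int

    def residual_unit_convs(self) -> int:
        # Total convolutional layers inside all Residual Units.
        return (self.residual_blocks * self.drlms_per_block
                * self.units_per_drlm * self.convs_per_unit)

    def residual_unit_params(self, kernel: int = 3) -> int:
        # Simplification: each layer maps F -> F feature maps, k x k kernel, plus bias.
        f = self.feature_maps
        per_layer = f * f * kernel * kernel + f
        return self.residual_unit_convs() * per_layer


ORIGINAL = DrlnConfig(residual_blocks=6, drlms_per_block=3, units_per_drlm=3,
                      convs_per_unit=2, feature_maps=64)
FINAL = DrlnConfig(residual_blocks=2, drlms_per_block=6, units_per_drlm=3,
                   convs_per_unit=1, feature_maps=32)
```

Under these assumptions the original configuration has 108 such layers (3,988,224 weights) and the final one 36 (332,928 weights), roughly a 12-fold reduction before any pruning, even though the final network has more DRLMs.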

Further, pruning the network's parameters and weights reduces its complexity by 40% with a performance loss of less than 1% in BD-rate. This is achieved by first using the well-known DepGraph technique, modified to work with a deep-learning based up-sampling filter, to understand the dependencies between the layers of the different components of the simplified network. This makes it possible to group components that share a common pruning approach, which can then be applied without introducing dimensional inconsistencies between the inputs and outputs of the layers.
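The core idea behind dependency-aware pruning can be shown on the smallest dependency group: two consecutive layers that share a hidden channel dimension. Removing hidden channel j means deleting row j of the first layer and column j of the second together; otherwise the shapes no longer match. The sketch below scores each group by the L1 norm of all weights tied to it and keeps the strongest ones. The function names and the L1 criterion are assumptions for illustration; the text does not state the importance criterion used for EVC-UFV, and the dependency-graph technique mentioned above discovers such groups automatically across residual additions and concatenations.

```python
def l1(values) -> float:
    return sum(abs(v) for v in values)


def prune_coupled_channels(w1, w2, ratio):
    """Structured pruning of the hidden channels shared by two layers (hypothetical helper).

    w1: one row per output channel of layer 1 (kernel weights flattened).
    w2: one row per output channel of layer 2, one column per input channel,
        i.e. per output channel of layer 1.
    ratio: fraction of hidden channels to remove.
    """
    hidden = len(w1)
    if any(len(row) != hidden for row in w2):
        raise ValueError("w2 needs one column per output channel of w1")
    keep_count = max(1, round(hidden * (1 - ratio)))
    # Group importance: all weights coupled to hidden channel j in both layers.
    scores = [l1(w1[j]) + l1(row[j] for row in w2) for j in range(hidden)]
    ranked = sorted(range(hidden), key=lambda j: scores[j], reverse=True)
    keep = sorted(ranked[:keep_count])
    # Remove the same channels from both layers so their shapes stay consistent.
    new_w1 = [w1[j] for j in keep]
    new_w2 = [[row[j] for j in keep] for row in w2]
    return new_w1, new_w2, keep
```

Scoring the whole group, rather than each layer separately, avoids keeping a channel that one layer considers important while the other has already discarded it.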

Verification Tests of the technology have been performed under the following conditions:

| Test condition | Setting |
|---|---|
| Standard sequences | CatRobot, FoodMarket4, ParkRunning3 |
| Bits/sample | 8 and 10 bits per component |
| Colour space | YCbCr with 4:2:0 sub-sampling |
| Encoding technologies | AVC, HEVC, and VVC |
| Encoding settings | Random Access and Low Delay at QPs 22, 27, 32, 37, 42, 47 |
| Up-sampling | SD to HD and HD to UHD |
| Metrics | BD-Rate, BD-PSNR, and BD-VMAF |
| Deep-learning structure | Same for all QPs |

Results show an impressive improvement over the currently used traditional bicubic interpolation for all coding technologies and encoding options, and for all three objective metrics. In Table 2, LD stands for Low Delay and RA for Random Access.

Table 2 – Performance of the EVC-UFV Up-sampling Filter

| Up-sampling case | HEVC (LD) | VVC (LD) | HEVC (RA) | VVC (RA) |
|---|---|---|---|---|
| SD to HD (using its own trained filter) | 12.2% | 13.8% | 17.3% | 22.5% |
| HD to UHD (using its own trained filter) | 6% | 6.5% | 6.0% | 7.9% |
| SD to HD (using the HD to UHD filter) | 11.6% | 11.4% | 15.3% | 19.9% |

All results are obtained with the 40% pruned network.
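BD-Rate, one of the metrics used in the verification tests, measures the average bit-rate difference between two rate-distortion curves at equal quality. The sketch below follows the classic Bjøntegaard method: fit a cubic polynomial of log-rate as a function of PSNR to each curve, average both over the overlapping PSNR range, and convert the mean log difference into a percentage. It is a self-contained illustration with hypothetical function names; reference tools may use piecewise cubic interpolation instead of a single cubic fit and may report savings with the opposite sign, so its numbers are not guaranteed to match Table 2.

```python
import math


def _fit_cubic(x, y):
    """Least-squares fit of y = c0 + c1*x + c2*x**2 + c3*x**3 via normal equations."""
    n = 4
    a = [[sum(xi ** (r + c) for xi in x) for c in range(n)] for r in range(n)]
    b = [sum(yi * xi ** r for xi, yi in zip(x, y)) for r in range(n)]
    for col in range(n):  # Gaussian elimination with partial pivoting
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= f * a[col][c]
            b[r] -= f * b[col]
    coeffs = [0.0] * n
    for r in reversed(range(n)):  # back substitution
        coeffs[r] = (b[r] - sum(a[r][c] * coeffs[c] for c in range(r + 1, n))) / a[r][r]
    return coeffs


def _mean_over(coeffs, lo, hi):
    """Average value of the polynomial on [lo, hi], by exact integration."""
    area = sum(c / (k + 1) * (hi ** (k + 1) - lo ** (k + 1)) for k, c in enumerate(coeffs))
    return area / (hi - lo)


def bd_rate(ref_rates, ref_psnr, test_rates, test_psnr):
    """Bjøntegaard delta rate in percent; negative means the test needs fewer bits."""
    lo = max(min(ref_psnr), min(test_psnr))
    hi = min(max(ref_psnr), max(test_psnr))
    if hi <= lo:
        raise ValueError("the PSNR ranges do not overlap")
    mid = (lo + hi) / 2  # centre PSNR values to keep the normal equations well conditioned
    p_ref = _fit_cubic([q - mid for q in ref_psnr], [math.log(r) for r in ref_rates])
    p_test = _fit_cubic([q - mid for q in test_psnr], [math.log(r) for r in test_rates])
    diff = _mean_over(p_test, lo - mid, hi - mid) - _mean_over(p_ref, lo - mid, hi - mid)
    return (math.exp(diff) - 1) * 100
```

As a sanity check, a test curve that reaches every PSNR point with exactly 90% of the reference bit rate yields a BD-rate of -10%. With one rate-PSNR point per QP, the six QPs of the verification tests give six points per curve, which the least-squares fit handles as well as the classic four.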

Soon MPAI will be five – Part 2

This is the second episode of a series of articles that revisit the driving force that allowed MPAI to develop 15 standards in five years in a variety of fields where AI can make a difference, and to have half a score more under development. The articles intend to pave the way for the next five years of MPAI.

2 The inside of MPAI

MPAI has been established to uphold the social value of standards, to adapt the notion of standard to the new Artificial Intelligence domain, and to rescue the right of society to exploit innovation. Let’s review how this has been implemented.

Laws pay much attention to standards because the patents that enable them give their holders monopoly rights to exploit them for a significant time span. This is why a standards body requests that a proposed standard be accompanied by a declaration that, in simplified form, boils down to choosing one of three possibilities: if there are patent rights to the enabling technologies, does the submitter agree to allow the use of the patents for free, does it apply royalties, or does it not allow the use of the patents at all? Setting aside the first and third cases, in the second case the submitter is asked to declare that it will license the patents “to an unrestricted number of applicants on a worldwide, non-discriminatory basis and on reasonable terms and conditions to make, use and sell implementations of the above document” (i.e., the standard).

In past ages this used to be good enough, but is this still OK in an age when technology moves fast? Is it OK if it takes ten years for the declaration to be implemented and if, ten years later, a user discovers that the licensing terms are not acceptable?

A reasonable answer is that, no, this handling of patents in standards is not acceptable because society is deprived of technologies that may bring significant benefits to it. It may also not be acceptable to some patent holders because they are deprived of their ability to monetise the fruits of their efforts.

MPAI's handling of patents takes exactly the opposite approach on this point. MPAI Members engaged in the development of a standard agree on a set of statements, called the “Framework Licence”, that removes some of the uncertainty about the timing and terms of the eventual licence.

Why only “some” and not all uncertainty? Because so-called antitrust laws forbid such full clarification, as it could be used maliciously to distort the market. As Monsieur de Montesquieu used to say, “Better is the enemy of good”: MPAI wants to make things that are good, not perfect things that create problems.

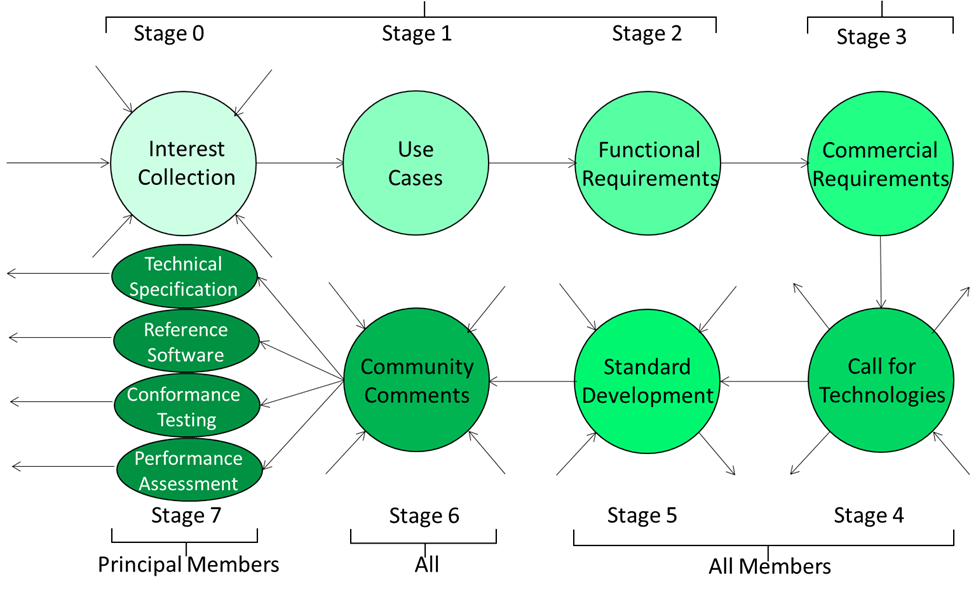

MPAI has developed a model process to develop standards that combines openness, when the purpose of a standard is defined, and restriction, when the technologies making up the standard are developed. Figure 2 depicts the eight stages of a standard.

Figure 2 – The MPAI standard life cycle

A standard may be proposed by anybody (Stage 0). To define the scope of the standard, use cases are developed (Stage 1). To define what the standard should exactly do, Functional Requirements are developed (Stage 2). Participation in these stages is open to anybody.

To define the conditions of use of the standard, Commercial Requirements are developed (Stage 3). Of course, MPAI is not engaged in any sort of commerce: “Commercial Requirements” are what was called the Framework Licence above. When the two types of requirements are ready, a Call for Technologies is issued (Stage 4).

Anybody can answer the Call (Stage 5), but submitted technologies must satisfy the requirements. The standard is developed from the proposed technologies exclusively by MPAI Members, including the respondents to the Call if they have joined MPAI. If they do not join, their submissions are discarded.

When the standard has reached a stability plateau, MPAI may publish the current draft before final publication (Stage 6). Anybody is entitled to send their comments to the MPAI Secretariat. Comments will be considered for inclusion in the published standard (Stage 7).

Stage 7 is divided into four Steps. The first Step concerns the publication of the standard, but a standard usually includes other components. Step #2 is the reference software, a conforming implementation of the standard that is included in the standard itself with a reference to the MPAI Git, from where the software, published with a BSD 3-Clause licence, can be downloaded. The third Step is Conformance Testing. For example, an implementation of an AI Workflow conforms with MPAI-MMC if it accepts as input and produces as output Data and/or Data Objects (the combination of Data of a Data Type and its Qualifier) conforming with those specified by MPAI-MMC (see later for more about Qualifiers).

The last Step is Performance Assessment. Performance is a multidimensional entity because it can have various connotations, and the Performance Assessment Specification should provide methods to measure how well an AI Workflow (AIW) performs its function, using a metric that depends on the nature of the function, such as:

- Quality: the Performance of an AI System that Answers Questions can measure how well it answers a question related to an image.

- Bias: the Performance of the same AI System can measure the quality of its responses depending on the type of images, i.e., the ability of the System to provide balanced, unbiased answers.

- Legal compliance: the Performance of an AIW can measure the compliance of the AI System with a regulation, e.g., the European AI Act.

- Ethical compliance: the Performance Assessment of an AI System can measure its compliance with a target ethical standard.

(to be continued)

Meetings in the coming July/August 2025 meeting cycle

| Group name | 14-18 July | 21-25 July | 28 July – 1 Aug | 04-08 Aug | 11-15 Aug | 18-22 Aug | Time (UTC) |
|---|---|---|---|---|---|---|---|
| AI Framework | 14 | 21 | 28 | 4 | 11 | 18 | 16 |
| AI-based End-to-End Video Coding | 16 | | 30 | | 13 | | 14 |
| AI-Enhanced Video Coding | 16 | 23 | 30 | 6 | 13 | 19 | 13 |
| Artificial Intelligence for Health | | 21 | | | | | 14 |
| Communication | 17 | | 2 | | 14 | | 13:30 |
| Compression & Understanding of Industrial Data | | 23 | | | | 20 | 8 |
| Connected Autonomous Vehicle | 17 | 24 | 31 | 7 | 14 | 21 | 15 |
| Context-based Audio Enhancement | 15 | 22 | 29 | 5 | 12 | 19 | 16 |
| Industry and Standards | 18 | | 1 | | 15 | | 16 |
| MPAI Metaverse Model | 18 | 25 | 1 | 8 | 15 | 22 | 15 |
| Multimodal Conversation | 15 | 22 | 29 | 5 | 12 | 19 | 14 |
| Neural Network Watermarking | 15 | 22 | 29 | 5 | 12 | 19 | 15 |
| Portable Avatar Format | 18 | 25 | 1 | 8 | 15 | 22 | 10 |
| XR Venues | 15 | 22 | 29 | 5 | 12 | 19 | 17 |
| General Assembly (MPAI-59) | | | | | | 20 | 15 |