| 1. Functions | 2. Reference Model | 3. Input/Output Data |

| 4. JSON Metadata | 5. SubAIMs | 6. Profiles |

| 7. Reference Software | 8. Conformance Testing | 9. Performance Assessment |

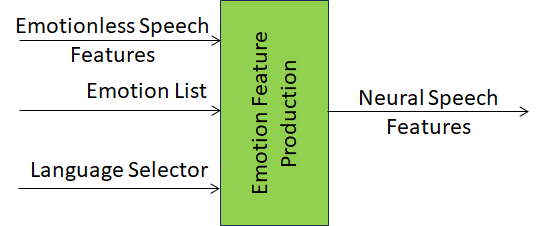

1. Functions

Emotion Feature Production (MMC-EFP):

| Receives | Emotionless Speech Features from Emotion Feature Production. |
| | Emotion List. |
| | Language Selector. |
| Produces | Neural Speech Features. |

2. Reference Model

Figure 1 depicts the Emotion Feature Production (MMC-EFP) AIM:

Figure 1 – Reference Model of Emotion Feature Production (MMC-EFP) AIM

3. Input/Output Data

Table 1 specifies the Input/Output Data of the Emotion Feature Production (MMC-EFP) AIM.

Table 1 – Input/Output Data of the Emotion Feature Production (MMC-EFP)

| Input data | Semantics |
| Emotionless Speech Features | Speech Features of an Emotionless Speech utterance. |
| Emotion List | A list of Emotions to be added to Emotionless Speech. |
| Language Selector | The preferred language. |
| Output data | Semantics |
| Neural Speech Features | Speech Features to be added to the Emotionless Speech. |
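The interface in Table 1 can be pictured as a typed function signature. The following is a minimal Python sketch, not the normative data format: the class names, the field names, and the `produce_emotion_features` stub are assumptions made for illustration only. The actual payload structure is defined by the JSON schemas referenced under JSON Metadata.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SpeechFeatures:
    # Hypothetical container: feature name -> sequence of numeric values.
    values: Dict[str, List[float]] = field(default_factory=dict)


@dataclass
class EmotionFeatureProductionInput:
    # The three inputs listed in Table 1 (field types are assumptions).
    emotionless_speech_features: SpeechFeatures
    emotion_list: List[str]
    language_selector: str


def produce_emotion_features(inp: EmotionFeatureProductionInput) -> SpeechFeatures:
    """Stub standing in for the AIM; returns the Neural Speech Features.

    A real implementation would run a model. This placeholder only shows
    the shape of the call and returns an empty feature set.
    """
    return SpeechFeatures()
```

A caller would build one `EmotionFeatureProductionInput` per utterance and pass it to the stub; the returned `SpeechFeatures` object plays the role of the Neural Speech Features output.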

4. JSON Metadata

https://schemas.mpai.community/CAE1/V2.4/AIMs/EmotionFeatureProduction.json

5. SubAIMs

No SubAIMs.

6. Profiles

No Profiles.

7. Reference Software

Reference Software not available.

8. Conformance Testing

| Receives | Emotionless Speech Features | Shall validate against the Speech Features schema. |
| | Emotion List | Shall validate against the Emotion schema. |
| | Language Selector | Shall validate against the Selector schema. |
| Produces | Neural Speech Features | Shall validate against the Speech Features schema. |
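The conformance table pairs each data item with the schema it shall validate against. A minimal sketch of how a test harness might apply one check per item is given here. The `check_io_conformance` helper and the stand-in validators are hypothetical; a real harness would replace them with validation against the actual Speech Features, Emotion, and Selector schemas, whose contents are not reproduced in this section.

```python
from typing import Any, Callable, Dict, List

Validator = Callable[[Any], bool]


def check_io_conformance(payloads: Dict[str, Any],
                         validators: Dict[str, Validator]) -> List[str]:
    """Return the names of data items that are missing or fail validation."""
    failures = []
    for name, validate in validators.items():
        if name not in payloads or not validate(payloads[name]):
            failures.append(name)
    return failures


# Stand-in validators (assumptions): they only check the top-level type,
# not the real schema content.
def is_mapping(value: Any) -> bool:
    return isinstance(value, dict)


def is_list(value: Any) -> bool:
    return isinstance(value, list)


VALIDATORS: Dict[str, Validator] = {
    "Emotionless Speech Features": is_mapping,  # Speech Features schema
    "Emotion List": is_list,                    # Emotion schema
    "Language Selector": is_mapping,            # Selector schema
    "Neural Speech Features": is_mapping,       # Speech Features schema
}
```

An empty list returned by `check_io_conformance` means every item passed its stand-in check; any names in the list identify the items to investigate.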

9. Performance Assessment

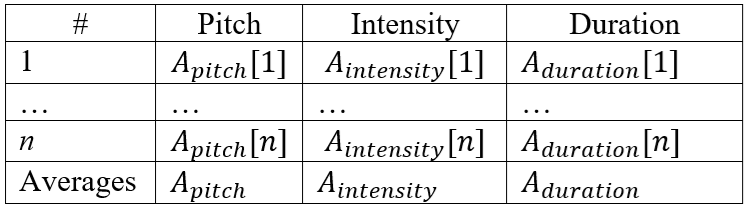

Table 8 gives the input/output data of the Emotion Feature Production AIM.

Table 8 – I/O Data of Emotion Enhanced Speech (EES) Emotion Feature Production

| AIM | Input Data | Output Data |
| Emotion Feature Production | Emotionless Speech, Speech Features 1 | Speech with Emotion |

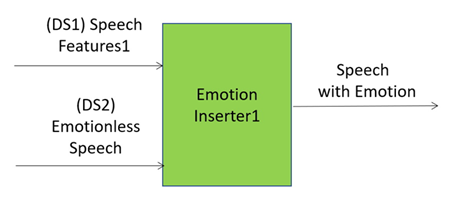

Table 9 gives the Emotion Enhanced Speech (EES) Emotion Feature Production Means and how they are used.

Table 9 – AIM Means and use of Emotion Enhanced Speech (EES) Emotion Feature Production (AIM2 in Figure 3)

| Means | Actions |

| Conformance Testing Dataset | DS1: a dataset of at least x > M Emotionless Speeches. DS2: a dataset of x Speech Features 1, each corresponding to a specific Emotionless Speech. |

| Procedure | For each of the x input pairs of DS1 and DS2:

Then, compute the Average for each of the three properties among the n Model Utterances. |

| Evaluation | 1. Condition 3 shall be respected.

2. Given the three Averages computed at the end of the Procedure and denoting them with , where p represents one among the three properties (pitch, intensity and duration), if:

the Emotion Inserter 1 module under test has passed the Conformance Test.

3. Otherwise, the submitter of Emotion Inserter 1 is given the opportunity to submit an implementation of Speech Feature Analyser 1.

4. The MPAI Store will test the combination of the two submitted AIMs.

5. If the quality of the output of the submitted combination of AIM1 and AIM2 is above threshold, Emotion Inserter 1 passes the Conformance Test as long as the corresponding Speech Feature Analyser 1 is made available to the MPAI Store.

6. Else, Emotion Inserter 1 does not pass the Conformance Test. |
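The averaging step of the Procedure and the per-property check of the Evaluation can be sketched as follows. This is an illustration under stated assumptions: the per-utterance values are taken as already computed by the Procedure steps not reproduced here, and the pass criterion is passed in as a caller-supplied function because the actual inequality is not given in this section. The names `property_averages` and `passes` are hypothetical.

```python
from statistics import mean
from typing import Callable, Dict, List

# The three properties named in the Evaluation row.
PROPERTIES = ("pitch", "intensity", "duration")


def property_averages(utterances: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each property over the n utterances.

    Each utterance is a dict with one numeric value per property
    (an assumed representation of the per-utterance results).
    """
    if not utterances:
        raise ValueError("at least one utterance is required")
    return {p: mean(u[p] for u in utterances) for p in PROPERTIES}


def passes(averages: Dict[str, float],
           criterion: Callable[[str, float], bool]) -> bool:
    """Apply a caller-supplied criterion to each of the three Averages.

    The criterion is a placeholder: the real condition comes from the
    specification, not from this sketch.
    """
    return all(criterion(p, averages[p]) for p in PROPERTIES)
```

Keeping the criterion as a parameter avoids hard-coding a threshold comparison that this section does not state.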

Figure 4 – EES Emotion Inserter1.

After the Tests, the Conformance Tester shall fill out Table 10.

Table 10 – Conformance Testing form of Emotion Enhanced Speech (EES) Emotion Inserter1

| Conformance Tester ID | Unique Conformance Tester Identifier assigned by MPAI. |
| Standard, Use Case ID and Version | Standard ID and Use Case ID, Version and Profile of the standard in the form “CAE:EES:V:P”. |
| Name of AIM | Emotion Inserter1 |
| Implementer ID | Unique Implementer Identifier assigned by MPAI Store. |
| AIM Implementation Version | Unique Implementation Identifier assigned by Implementer. |
| Neural Network Version* | Unique Neural Network Identifier assigned by Implementer. |
| Identifier of Conformance Testing Dataset | Unique Dataset Identifier assigned by MPAI Store. |
| Test ID | Unique Test Identifier assigned by Conformance Tester. |
| Actual output | Actual output provided as a matrix of n+1 rows containing all computed Average values. Result: Threshold: m Final evaluation: Passed / Not passed |
| Execution time* | Duration of test execution. |
| Test comment* | |
| Test Date | yyyy/mm/dd. |

* Optional field
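For tooling that stores completed forms, Table 10 maps naturally onto a record type in which the starred fields are optional. The sketch below is one possible representation, not a format defined by the specification: the class name, field names, and field types are assumptions, and only the `yyyy/mm/dd` date layout is taken from the table.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ConformanceTestingForm:
    # Required fields of Table 10 (names are hypothetical).
    conformance_tester_id: str
    standard_use_case_version: str  # in the form "CAE:EES:V:P"
    aim_name: str
    implementer_id: str
    aim_implementation_version: str
    dataset_id: str
    test_id: str
    actual_output: str
    test_date: date
    # Fields marked with * in Table 10 are optional.
    neural_network_version: Optional[str] = None
    execution_time: Optional[str] = None
    test_comment: Optional[str] = None

    def formatted_test_date(self) -> str:
        """Render the Test Date in the yyyy/mm/dd layout used by the form."""
        return self.test_date.strftime("%Y/%m/%d")
```

Optional fields default to `None`, so a form can be created with only the required entries and completed later.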