| 1. Functions | 2. Reference Model | 3. Input/Output Data |

| 4. JSON Metadata | 5. SubAIMs | 6. Profiles |

| 7. Reference Software | 8. Conformance Testing | 9. Performance Assessment |

1 Functions

Neural Emotion Insertion (CAE-NEI)

| Receives | Neural Speech Features from Emotion Feature Producer. |

| | Emotionless Speech. |

| Integrates | (Emotional) Neural Speech Features with those of the Emotionless Speech input. |

| Produces | Speech with Emotion, i.e., the emotionally modified utterance. |

2 Reference Model

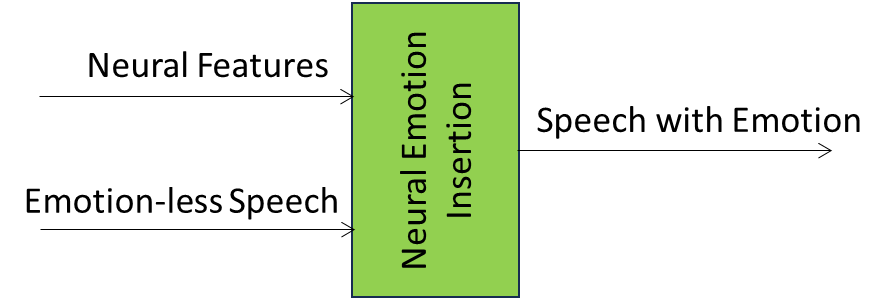

Figure 1 depicts the Reference Model of Neural Emotion Insertion (CAE-NEI).

Figure 1 – Reference Model of Neural Emotion Insertion (CAE-NEI)

3 Input/Output Data

Table 1 provides the Input/Output Data of Neural Emotion Insertion (CAE-NEI).

Table 1 – Input/Output Data of Neural Emotion Insertion (CAE-NEI)

| Input data | Semantics |

| Neural Speech Features | Neural Speech Features produced by the Emotion Feature Producer. |

| Emotionless Speech | The speech without emotion to which emotion is to be added. |

| Output data | Semantics |

| Speech with Emotion | The Emotionless Speech to which emotion has been added. |

4 JSON Metadata

https://schemas.mpai.community/CAE1/V2.4/AIMs/NeuralEmotionInsertion.json

5 SubAIMs

No SubAIMs.

6 Profiles

No Profiles.

7 Reference Software

No Reference Software.

8 Conformance Testing

| Receives | Neural Speech Features | |

| | Emotionless Speech | Shall validate against the Speech Object schema. The Qualifier shall validate against the Speech Qualifier schema. The values of any Sub-Type, Format, and Attribute of the Qualifier shall correspond with the Sub-Type, Format, and Attributes of the Speech Object Qualifier schema. |

| Produces | Speech with Emotion | Shall validate against the Speech Object schema. The Qualifier shall validate against the Speech Qualifier schema. The values of any Sub-Type, Format, and Attribute of the Qualifier shall correspond with the Sub-Type, Format, and Attributes of the Speech Object Qualifier schema. |
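The three checks required of a Speech Object in the table above can be sketched as follows. This is a minimal illustration under stated assumptions, not the MPAI conformance procedure: the key names (`Header`, `Qualifier`, `SubType`, `Format`), the stand-in validators, and the allowed values are all hypothetical placeholders. A real test would validate against the published Speech Object and Speech Qualifier JSON schemas.

```python
from typing import Callable, Dict, List, Set

# A validator takes a JSON-like dict and returns a list of error strings.
Validator = Callable[[dict], List[str]]


def require_keys(*keys: str) -> Validator:
    """Stand-in for real JSON Schema validation: only checks key presence."""
    def validate(obj: dict) -> List[str]:
        return [f"missing key: {k}" for k in keys if k not in obj]
    return validate


def check_speech(obj: dict,
                 validate_object: Validator,
                 validate_qualifier: Validator,
                 allowed: Dict[str, Set[str]]) -> List[str]:
    """Apply the three checks; an empty list means no error was found."""
    errors = list(validate_object(obj))        # 1. object against its schema
    qualifier = obj.get("Qualifier")
    if not isinstance(qualifier, dict):
        return errors + ["Qualifier absent or not an object"]
    errors += validate_qualifier(qualifier)    # 2. qualifier against its schema
    for field, values in allowed.items():      # 3. values permitted by the schema
        # Only scalar (string) values are handled in this sketch.
        if field in qualifier and qualifier[field] not in values:
            errors.append(f"{field} value not allowed: {qualifier[field]!r}")
    return errors


# Hypothetical placeholders, not taken from the MPAI schemas.
object_check = require_keys("Header", "Qualifier")
qualifier_check = require_keys("SubType", "Format")
allowed_values = {"SubType": {"subtype-a"}, "Format": {"format-x", "format-y"}}

good = {"Header": "h", "Qualifier": {"SubType": "subtype-a", "Format": "format-x"}}
bad = {"Header": "h", "Qualifier": {"SubType": "subtype-a", "Format": "other"}}

print(check_speech(good, object_check, qualifier_check, allowed_values))
print(check_speech(bad, object_check, qualifier_check, allowed_values))
```

Passing the validators in as functions keeps the sketch independent of any particular JSON Schema library; a schema-based validator can be substituted without changing `check_speech`.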

9 Performance Assessment

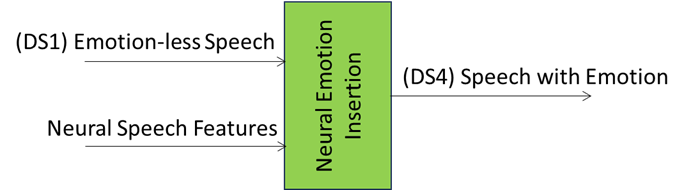

Table 18 gives the Emotion Enhanced Speech (EES) Neural Emotion Insertion Means and how they are used.

Table 18 – AIM Means and use of Emotion Enhanced Speech (EES) Neural Emotion Insertion

| Means | Actions |

| Conformance Testing Dataset | DS1: a dataset of at least y > N Emotionless Speech Segments.

DS2: a dataset of y Emotion Lists.

DS3: a dataset of one element, specifying the Language in question.

DS4: a dataset of y Speech with Emotion Segments, each associated with the specific elements of DS1, DS2, and DS3 used as input, and thus representing one correct output for that input. |

| Procedure | Given a reference Speech Feature Analyser 2 (ID: sfa2), a reference Emotion Feature Producer (ID: efp) and an Emotion Inserter 2 module that we want to test, we measure the quality of Emotion Inserter 2 in relation to the reference modules as follows:

|

| Evaluation |

|

Figure 8 – Neural Emotion Inserter.
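The relationship among DS1 to DS4 described in Table 18 can be sketched as a small data structure. This is a sketch under assumptions: the names `ReferenceCase`, `ds1_index`, `ds2_index`, and `check_links` are hypothetical, speech segments and Emotion Lists are represented by placeholder values, and the text does not prescribe any storage layout.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class ReferenceCase:
    """One DS4 element: a correct output tied to the inputs that produced it."""
    ds1_index: int            # position of the Emotionless Speech Segment in DS1
    ds2_index: int            # position of the Emotion List in DS2
    language: str             # the single Language held by DS3
    speech_with_emotion: str  # placeholder for the reference output segment


def check_links(ds1: Sequence, ds2: Sequence, ds3: Sequence[str],
                ds4: Sequence[ReferenceCase]) -> List[str]:
    """Return a list of problems; empty when every DS4 element points at valid inputs."""
    problems = []
    if len(ds3) != 1:
        problems.append("DS3 must hold exactly one Language")
    for n, case in enumerate(ds4):
        if not 0 <= case.ds1_index < len(ds1):
            problems.append(f"DS4[{n}]: DS1 index out of range")
        if not 0 <= case.ds2_index < len(ds2):
            problems.append(f"DS4[{n}]: DS2 index out of range")
        if len(ds3) == 1 and case.language != ds3[0]:
            problems.append(f"DS4[{n}]: Language differs from DS3")
    return problems


# Placeholder data only; real datasets hold speech segments and Emotion Lists.
ds1 = ["seg-0", "seg-1", "seg-2"]
ds2 = [["emotion-a"], ["emotion-b"]]
ds3 = ["language-x"]
ds4 = [ReferenceCase(0, 0, "language-x", "ref-0"),
       ReferenceCase(2, 1, "language-x", "ref-1")]

print(check_links(ds1, ds2, ds3, ds4))  # prints []
```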

After the Tests, Conformance Tester shall fill out Table 19.

Table 19 – Conformance Testing form of Emotion Enhanced Speech (EES) Neural Emotion Insertion

| Conformance Tester ID | Unique Conformance Tester Identifier assigned by MPAI |

| Standard, Use Case ID and Version | Standard ID and Use Case ID, Version and Profile of the standard in the form “CAE-EES-V2.4”. |

| Name of AIM | Neural Emotion Insertion |

| Implementer ID | Unique Implementer Identifier assigned by MPAI Store. |

| AIM Implementation Version | Unique Implementation Identifier assigned by Implementer. |

| Neural Network Version* | Unique Neural Network Identifier assigned by Implementer. |

| Identifier of Conformance Testing Dataset | Unique Dataset Identifier assigned by MPAI Store. |

| Test ID | Unique Test Identifier assigned by Conformance Tester. |

| Actual output | The Conformance Tester will provide the following matrix related to the modules utilized for the tests. Denoting with i and j the record numbers in DS1 and DS2, respectively, the matrices reflect the results obtained with a limited number of random multiple inputs and the corresponding outputs.

Example:

Language: DS3 |

| Execution time* | Duration of test execution. |

| Test comment* | In case step 1 of Conformance Testing fails, the Conformance Tester shall request the Implementer to provide Speech Feature Analyser 2 and Emotion Feature Producer AIMs.

In case step 4 or 5 of Conformance Testing also fails, the Conformance Tester shall inform the Implementer that the Emotion Inserter 2 did not pass Conformance Testing. |

| Test Date | yyyy/mm/dd. |

* Optional field
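For illustration, the fields of Table 19 can be captured in a simple record that distinguishes required from optional entries and writes the Test Date in the yyyy/mm/dd form. This is only a sketch: the class name `ConformanceForm`, the attribute names, and the sample identifiers are assumptions made for the example and are not defined by the specification.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import List, Optional

# Fields marked with * in Table 19.
OPTIONAL_FIELDS = {"neural_network_version", "execution_time", "test_comment"}


@dataclass
class ConformanceForm:
    conformance_tester_id: str
    standard_use_case_version: str      # in the form "CAE-EES-V2.4"
    aim_name: str
    implementer_id: str
    aim_implementation_version: str
    dataset_id: str
    test_id: str
    actual_output: str                  # placeholder for the result matrix
    test_date: date
    neural_network_version: Optional[str] = None
    execution_time: Optional[str] = None
    test_comment: Optional[str] = None

    def missing_required(self) -> List[str]:
        """Names of required fields left empty."""
        return [f.name for f in fields(self)
                if f.name not in OPTIONAL_FIELDS
                and getattr(self, f.name) in ("", None)]

    def formatted_date(self) -> str:
        """Test Date rendered as yyyy/mm/dd."""
        return self.test_date.strftime("%Y/%m/%d")


# Sample values are hypothetical identifiers, not real MPAI assignments.
form = ConformanceForm(
    conformance_tester_id="tester-001",
    standard_use_case_version="CAE-EES-V2.4",
    aim_name="Neural Emotion Insertion",
    implementer_id="implementer-001",
    aim_implementation_version="impl-1",
    dataset_id="dataset-001",
    test_id="",                         # left empty on purpose
    actual_output="see attached matrix",
    test_date=date(2025, 1, 31),
)

print(form.missing_required())
print(form.formatted_date())
```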