| 1 Function | 2 Reference Model | 3 Input/Output Data |

| 4 SubAIMs | 5 JSON Metadata | 6 Profiles |

| 7 Reference Software | 8 Conformance Testing | 9 Performance Assessment |

1. Function

- Detects and corrects speed, equalisation and backward-reading errors in the Preservation Audio File.

- Sends Restored Audio Files and Editing List to Packaging for Audio Recording.

2. Reference Model

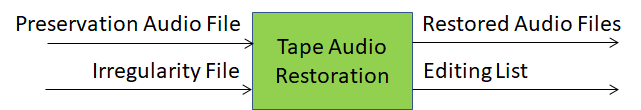

Figure 1 – Reference Model of Tape Audio Restoration

3. Input/Output Data

| Input data | Semantics |

| Irregularity File | Irregularity File produced by Tape Irregularity Classification. |

| Preservation Audio File | The input Audio File containing the digitised version of an audio open-reel tape. |

| Output data | Semantics |

| Editing List | A JSON file whose syntax and semantics are specified by CAE-ARP. |

| Restored Audio Files | Audio Files obtained by restoring the Preservation Audio File per Editing List. |

4. SubAIMs

No SubAIMs.

5. JSON Metadata

https://schemas.mpai.community/CAE1/V2.4/AIMs/TapeAudioRestoration.json

6. Profiles

No Profiles.

7. Reference Software

The CAE-TAR Reference Software can be downloaded from the MPAI Git.

8. Conformance Testing

| Receives | Irregularity File | Shall validate against the Irregularity File schema. |

| | Preservation Audio File | Shall validate against the Audio Object schema. The Qualifier shall validate against the Audio Qualifier schema. The values of any Sub-Type, Format, and Attribute of the Qualifier shall correspond to the Sub-Type, Format, and Attributes of the Audio Object Qualifier schema. |

| Produces | Editing List | Shall validate against the Editing List schema. |

| | Restored Audio Files | Shall validate against the Audio Object schema. The Qualifier shall validate against the Audio Qualifier schema. The values of any Sub-Type, Format, and Attribute of the Qualifier shall correspond to the Sub-Type, Format, and Attributes of the Audio Object Qualifier schema. |

9. Performance Assessment

Table 32 gives the Audio Recording Preservation (ARP) Tape Audio Restoration Means and how they are used.

Table 32 – AIM Means and use of Audio Recording Preservation (ARP) Tape Audio Restoration.

| Means | Actions |

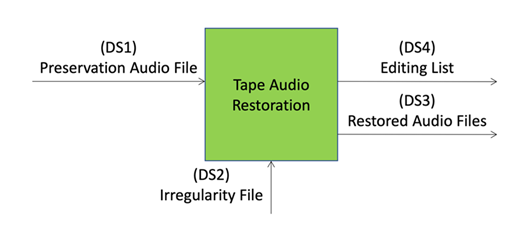

| Performance Assessment Dataset | DS1: n Preservation Audio Files created with (i) SB, (ii) SSV or (iii) ESV errors. These errors can occur singly or superimposed on one another.

DS2: n Irregularity Files related to DS1, produced by the Tape Irregularity Classifier.

DS3: n Restored Audio Files arrays containing the corrected files generated from DS1 using the information contained in DS2.

DS4: n Editing List JSON files containing the edits made in DS3. |

| Procedure | 1. Feed Tape Audio Restoration under Assessment with DS1 and DS2.

2. Compare the output Editing Lists with DS4.

3. Compare the samples of output Restored Audio Files with DS3 for SB and SSV correction evaluation.

4. Compare the samples and the spectral content of output Restored Audio Files with DS3 for ESV correction evaluation. |

| Evaluation | 1. Verify the conditions:

a. The Editing Lists are syntactically correct and conform to the JSON schema provided in the CAE Technical Specification.
b. The output Editing Lists contain the same edits listed in DS4.
c. All output Restored Audio Files conform to the RF64 file format [7].

2. Whenever SB or SSV corrections are required, each file of the output Restored Audio Files array:

a. Shall have the same duration as the corresponding file in DS3.
b. Shall present the maximum value of the Cross-Correlation function for […], where the two signals being correlated are the Restored Audio File under evaluation and the corresponding file in DS3.

3. Whenever an ESV correction is required, each file of the output Restored Audio Files shall have:

a. Time domain samples with RMSE < 0.1*A, where the RMSE is computed between the Restored Audio File under evaluation and the corresponding file in DS3, and A is the Amplitude of the signal from DS3.
b. […] for frequencies in [20, 20k] Hz, relating the PSD of the AIM (Tape Audio Restoration) under Assessment, the PSD of the corresponding file in DS3 and the PSD of the corresponding Preservation Audio File Audio Segment.

4. If, for any of the n input tuples, the above conditions are not satisfied, the Tape Audio Restoration module under Assessment does not pass the Performance Assessment. |
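The time-domain checks in the Evaluation above (same duration, cross-correlation peak, RMSE below 0.1*A) can be sketched as follows. This is a minimal illustration rather than the Reference Software: the function names are hypothetical, signals are assumed to be plain sequences of samples at the same sampling rate, A is assumed to be the peak absolute amplitude of the DS3 signal, and the cross-correlation helper only reports the lag at which the peak occurs, since the required lag is not reproduced here.

```python
import math


def same_duration(restored, reference):
    """Duration check: equal sample counts (assumes equal sampling rates)."""
    return len(restored) == len(reference)


def rmse(x, y):
    """Root-mean-square error between two equal-length sample sequences."""
    if len(x) != len(y):
        raise ValueError("signals must have the same number of samples")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


def xcorr_peak_lag(x, y, max_lag):
    """Lag in [-max_lag, max_lag] at which the cross-correlation of x and y peaks."""

    def corr(lag):
        # Sum over n of x[n + lag] * y[n], restricted to valid indices of x.
        return sum(
            x[n + lag] * y[n]
            for n in range(len(y))
            if 0 <= n + lag < len(x)
        )

    return max(range(-max_lag, max_lag + 1), key=corr)


def esv_time_domain_ok(restored, reference):
    """RMSE < 0.1 * A, with A taken as the peak absolute amplitude (an assumption)."""
    amplitude = max(abs(s) for s in reference)
    return rmse(restored, reference) < 0.1 * amplitude
```

The direct-sum cross-correlation is quadratic in the signal length, so a real assessment of full-length recordings would more likely use an FFT-based correlation; the direct form is kept here only because it is easy to read.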

Figure 13 – Tape Audio Restoration.
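Condition 1.c of the Evaluation requires the Restored Audio Files to conform to RF64. A quick first screening, sketched below under the assumption that checking the container identifiers is an acceptable pre-check, reads the first 12 bytes and looks for the `RF64` chunk ID followed by the `WAVE` form type. The function name is hypothetical, and passing this check does not by itself establish full RF64 conformance.

```python
import io


def looks_like_rf64(stream):
    """Return True if a binary stream starts with an RF64/WAVE header.

    Bytes 0-3 hold the chunk ID, bytes 4-7 the 32-bit size field,
    and bytes 8-11 the form type.
    """
    header = stream.read(12)
    return (
        len(header) == 12
        and header[0:4] == b"RF64"
        and header[8:12] == b"WAVE"
    )
```

For a file on disk, the same function can be called as `looks_like_rf64(open(path, "rb"))`, where `path` is whatever location the Restored Audio File was written to.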

After the Assessment, the Performance Assessor shall fill out Table 33.

Table 33 – Performance Assessment form of Audio Recording Preservation (ARP) Tape Audio Restoration.

| Performance Assessor ID | Unique Performance Assessor Identifier assigned by MPAI |

| Standard, Use Case ID and Version | Standard ID and Use Case ID, Version and Profile of the standard in the form “CAE-ARP-V2.4”. |

| Name of AIM | Tape Audio Restoration |

| Implementer ID | Unique Implementer Identifier assigned by MPAI Store. |

| AIM Implementation Version | Unique Implementation Identifier assigned by Implementer. |

| Neural Network Version* | Unique Neural Network Identifier assigned by Implementer. |

| Identifier of Performance Assessment Dataset | Unique Dataset Identifier assigned by MPAI Store. |

| Assessment ID | Unique Assessment Identifier assigned by Performance Assessor. |

| Actual output | Actual output provided as a matrix of n rows containing output assertions.

For example:

Final evaluation: T/F

Legend:

– #: CT dataset tuple number.
– Irr: Irregularity types present on the Preservation Audio File.
– Editing List errors: number of edits incorrectly performed. It has negative impact if different from 0.
– Duration: flag to check the Restored Audio Files duration.
– Xcorr: flag to check the cross-correlation measures.
– RMSE: flag to check the RMSE measures (only for ESV).
– PSD: flag to check the PSD measures (only for ESV).
– Final assertion: AND operation between previous results. |

| Execution time* | Duration of Assessment execution. |

| Assessment comment* | Comments on Assessment results and possible needed actions. |

| Assessment Date | yyyy/mm/dd. |

* Optional field
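The "Actual output" matrix and its legend can be modelled as one record per dataset tuple, with the final assertion computed as the AND of the individual results. The sketch below is illustrative only: the class and field names are hypothetical, and it assumes that the RMSE and PSD flags are left as `None` when no ESV correction applies and are then excluded from the AND.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AssessmentRow:
    tuple_number: int                # "#": dataset tuple number
    irregularities: List[str]        # "Irr": e.g. ["SB"], ["SSV", "ESV"]
    editing_list_errors: int         # must be 0 to pass
    duration_ok: bool                # "Duration" flag
    xcorr_ok: bool                   # "Xcorr" flag
    rmse_ok: Optional[bool] = None   # "RMSE" flag, only for ESV
    psd_ok: Optional[bool] = None    # "PSD" flag, only for ESV

    def final_assertion(self) -> bool:
        """AND of all applicable checks for this tuple."""
        checks = [self.editing_list_errors == 0, self.duration_ok, self.xcorr_ok]
        # Flags left as None are treated as not applicable (an assumption).
        checks += [f for f in (self.rmse_ok, self.psd_ok) if f is not None]
        return all(checks)


def final_evaluation(rows: List[AssessmentRow]) -> bool:
    """The AIM passes only if every one of the n tuples passes."""
    return all(row.final_assertion() for row in rows)
```

A single failing tuple makes `final_evaluation` return `False`, matching the rule that the module does not pass if the conditions fail for any of the n input tuples.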