The Environment Sensing Subsystem (ESS) acquires electromagnetic and acoustic data directly from its sensors. It also receives other physical data about the Environment (e.g., temperature, pressure, humidity) and about the CAV (Pose, Velocity, Acceleration) from the Motion Actuation Subsystem. Its main goal is to create the Basic World Representation.

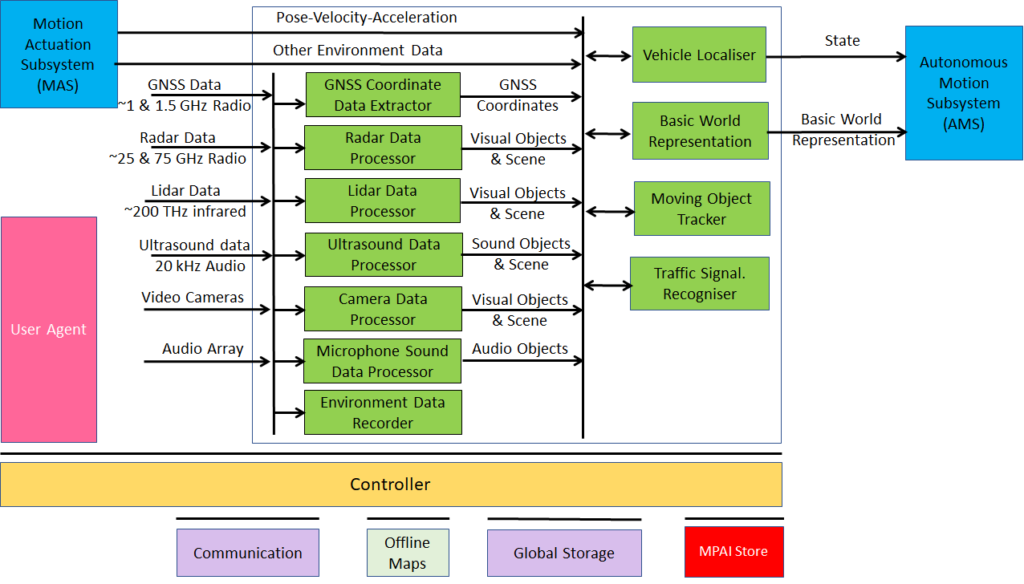

Figure 1 gives the Environment Sensing Subsystem Reference Model.

Figure 1 – Environment Sensing Subsystem Reference Model

The typical series of operations carried out by the Environment Sensing Subsystem (ESS) is given below. The sequential description of steps does not imply that an action is only carried out after the preceding one has been completed.

- The CAV gets its Pose and other Environment data from:
  - Global Navigation Satellite System (GNSS).
  - Vehicle Localiser in ESS.
  - Other Environment data (e.g., weather, air pressure etc.).
  - Coordinates data (Pose, Orientation and their time derivatives).
- The CAV creates a Basic World Representation (BWR) by:
  - Acquiring available Offline maps of its current Pose.
  - Fusing Visual, Lidar, Radar and Ultrasound data.
  - Updating the Offline maps with:
    - Other static objects.
    - All moving objects.
    - All traffic signalisation.
- The CAV compresses and stores a subset of the sensor data on board the ESS.
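The BWR-creation step above can be illustrated with a minimal sketch. Everything named here (`Detection`, `BasicWorldRepresentation`, `fuse`, `build_bwr`, the 2D positions and the `merge_radius` threshold) is a hypothetical simplification for illustration and is not defined by the specification; the fusion shown is a naive distance-based de-duplication, not the method an actual ESS would use.

```python
from dataclasses import dataclass, field
from math import hypot
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Detection:
    """One object reported by one sensor (hypothetical type)."""
    kind: str                      # "static", "moving" or "traffic_signal"
    position: Tuple[float, float]  # metres in the map frame (2D for brevity)
    sensor: str                    # "camera", "lidar", "radar" or "ultrasound"


@dataclass
class BasicWorldRepresentation:
    """Offline map plus the updates derived from fused sensor data."""
    pose: Tuple[float, float, float]   # x, y, heading of the CAV
    offline_map: List[Detection]       # objects already in the Offline map
    updates: Dict[str, List[Detection]] = field(default_factory=dict)


def fuse(detections: List[Detection], merge_radius: float = 0.5) -> List[Detection]:
    """Naive fusion: drop a detection if an already kept detection of the
    same kind lies within merge_radius metres (assumed threshold)."""
    fused: List[Detection] = []
    for det in detections:
        duplicate = any(
            det.kind == kept.kind
            and hypot(det.position[0] - kept.position[0],
                      det.position[1] - kept.position[1]) <= merge_radius
            for kept in fused
        )
        if not duplicate:
            fused.append(det)
    return fused


def build_bwr(pose, offline_map, detections) -> BasicWorldRepresentation:
    """Start from the Offline map and add fused objects grouped by kind."""
    bwr = BasicWorldRepresentation(pose=pose, offline_map=list(offline_map))
    for det in fuse(detections):
        bwr.updates.setdefault(det.kind, []).append(det)
    return bwr
```

In this sketch, a lidar and a radar detection of the same moving object a few centimetres apart collapse into a single entry under `updates["moving"]`, while a traffic signal seen only by the camera is kept as its own entry.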

Table 1 gives the input/output data of Environment Sensing Subsystem.

Table 1 – I/O data of Environment Sensing Subsystem

| Input data | From | Comment |
|---|---|---|
| Pose-Velocity-Acceleration | Motion Actuation Subsystem | To be fused with GNSS data |
| Other Environment Data | Motion Actuation Subsystem | Temperature etc. to be added to Basic World Representation |
| Global Navigation Satellite System (GNSS) | ~1 & 1.5 GHz Radio | Get Pose from GNSS |
| Radio Detection and Ranging (RADAR) | ~25 & 75 GHz Radio | Get RADAR view of Environment |
| Light Detection and Ranging (LiDAR) | ~200 THz infrared | Get LiDAR view of Environment |
| Ultrasound | Audio (>20 kHz) | Get Ultrasound view of Environment |
| Cameras (2D and 3D) | Video (400-800 THz) | Get visible view of Environment |
| Microphones | Audio (16 Hz-16 kHz) | Get Audible view of Environment |
| **Output data** | **To** | **Comment** |
| State | Autonomous Motion Subsystem | For Route, Path and Trajectory |
| Basic World Representation | Autonomous Motion Subsystem | Locate CAV in Environment |
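To put the frequency bands in Table 1 into perspective, the following sketch converts a few of them into wavelengths using wavelength = propagation speed / frequency. The function name `wavelength_m` and the speed-of-sound value (about 343 m/s in air at roughly 20 °C) are assumptions made for this illustration; the frequencies are the approximate ones listed in the table.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum (exact by SI definition)
SPEED_OF_SOUND_AIR = 343.0      # m/s, approximate value for air at ~20 °C (assumption)


def wavelength_m(frequency_hz: float, speed: float = SPEED_OF_LIGHT) -> float:
    """Return the wavelength in metres for a wave of the given frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed / frequency_hz


# Electromagnetic sensors from Table 1
gnss = wavelength_m(1.5e9)        # about 0.20 m
radar = wavelength_m(75e9)        # about 4 mm
lidar = wavelength_m(200e12)      # about 1.5 micrometres (infrared)
visible_red = wavelength_m(400e12)     # about 750 nm
visible_violet = wavelength_m(800e12)  # about 375 nm

# Acoustic sensor: ultrasound at its 20 kHz lower bound, propagating in air
ultrasound = wavelength_m(20e3, speed=SPEED_OF_SOUND_AIR)  # about 17 mm
```

The shorter the wavelength, the finer the detail a sensor can in principle resolve, which is one reason the sensors in the table complement each other.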

Table 2 gives the AI Modules of Environment Sensing Subsystem.

Table 2 – AI Modules of Environment Sensing Subsystem

| AIM | Function |
|---|---|
| GNSS Data Coordinate Extractor | Computes global coordinates of CAV. |
| Radar Data Processor | Extracts visual scene and objects from radar. |
| Lidar Data Processor | Extracts visual scene and objects from lidar. |
| Ultrasound Data Processor | Extracts visual scene and objects from ultrasound. |
| Camera Data Processor | Extracts visual scene and objects from camera. |
| Environment Sound Data Processor | Extracts audio scene and objects from microphone. |
| Environment Data Recorder | Compresses/records a subset of data produced by CAV sensors and processed data at a given time. |
| Vehicle Localiser | Estimates the current CAV State in the Offline Maps. |
| Moving Objects Tracker | Detects, tracks and represents position and velocity of Environment moving objects. |
| Traffic Signalisation Recogniser | Detects and recognises traffic signs to enable the CAV to correctly move in conformance with traffic rules. |
| Basic World Representation Fusion | Creates Basic World Representation by fusing Offline Map, moving and traffic objects, and other sensor data. |
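One way to reason about how the AIMs in Table 2 depend on each other is to treat them as nodes of a dataflow graph. The sketch below uses the AIM names from the table, but the edges in `AIM_INPUTS` are an illustrative assumption only; the authoritative connections are those of the Reference Model in Figure 1. The Environment Data Recorder is left out for brevity, and `execution_order` is a hypothetical helper name.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each AIM maps to the set of AIMs whose output it consumes.
# These edges are assumed for illustration, not taken from the specification.
SENSOR_PROCESSORS = {
    "Radar Data Processor",
    "Lidar Data Processor",
    "Ultrasound Data Processor",
    "Camera Data Processor",
    "Environment Sound Data Processor",
}

AIM_INPUTS = {
    "Vehicle Localiser": {"GNSS Data Coordinate Extractor"},
    "Moving Objects Tracker": set(SENSOR_PROCESSORS),
    "Traffic Signalisation Recogniser": set(SENSOR_PROCESSORS),
    "Basic World Representation Fusion": {
        "Vehicle Localiser",
        "Moving Objects Tracker",
        "Traffic Signalisation Recogniser",
    },
}


def execution_order(inputs=AIM_INPUTS):
    """Return one valid order in which every AIM runs after its inputs."""
    return list(TopologicalSorter(inputs).static_order())


order = execution_order()
```

Under these assumed edges, the Basic World Representation Fusion AIM always comes last, since it is the only node that no other AIM consumes. As the text notes, a real ESS need not run the steps strictly in sequence; the order here only expresses data dependencies.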
