1 Functions of Environment Sensing Subsystem

2 Reference Architecture of Environment Sensing Subsystem

3 I/O Data of Environment Sensing Subsystem

4 Functions of Environment Sensing Subsystem’s AI Modules

5 I/O Data of Environment Sensing Subsystem’s AI Modules

1 Functions of Environment Sensing Subsystem

The Environment Sensing Subsystem (ESS) of a Connected Autonomous Vehicle (CAV):

- Uses all Subsystem devices to acquire as much information as possible from the Environment in the form of electromagnetic and acoustic data.

- Receives an initial estimate of the Ego CAV’s Spatial Attitude generated by the Motion Actuation Subsystem.

- Receives Environment Data (e.g., temperature, pressure, humidity, etc.) from the Motion Actuation Subsystem.

- Produces a sequence of Basic Environment Representations (BER) for the journey.

- Passes the Basic Environment Representations to the Autonomous Motion Subsystem.

2 Reference Architecture of Environment Sensing Subsystem

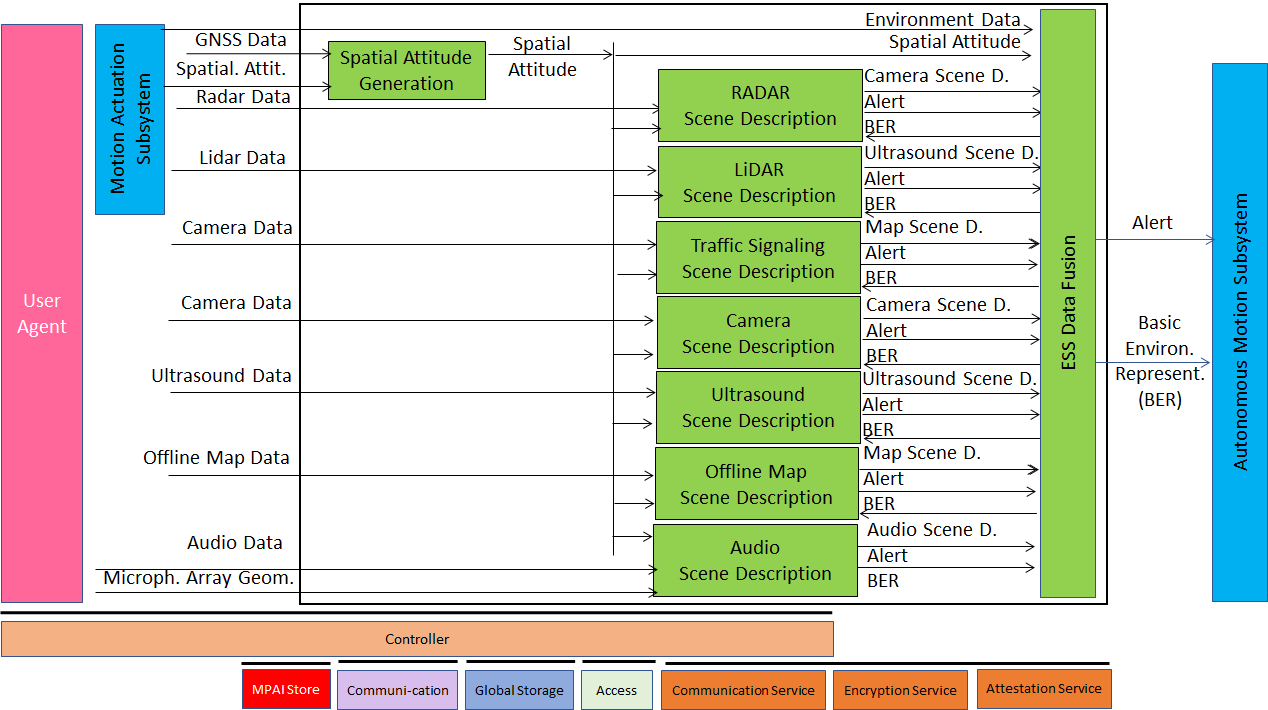

Figure 4 gives the Environment Sensing Subsystem Reference Model.

Figure 4 – Environment Sensing Subsystem Reference Model

The typical sequence of operations of the Environment Sensing Subsystem is:

- Compute the CAV’s Spatial Attitude from the initial Spatial Attitude provided by the Motion Actuation Subsystem and from GNSS Data.

- Receive Environment Sensing Technology (EST)-specific Data, e.g., RADAR Data provided by the RADAR EST.

- Produce an EST-specific Alert, if necessary, and send it to the Autonomous Motion Subsystem.

- Access the Basic Environment Representation produced at a previous time, if necessary.

- Produce EST-specific Scene Descriptors, e.g., the RADAR Scene Descriptors.

- Integrate the Scene Descriptors from different ESTs into the Basic Environment Representation.

Note that Figure 4 assumes that:

- Traffic Signalisation Recognition produces the Road Topology by analysing Visual Data. The model of Figure 4 can easily be extended to the case where Data from other ESTs is processed to compute or help compute the Road Topology.

- Environment Sensing Technologies are individually processed. An implementation may instead produce a single set of Scene Descriptors from two or more ESTs, provided that the relevant interfaces are preserved.
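The typical sequence of operations listed in this section can be sketched in code. The following is a minimal, non-normative sketch: the class `BasicEnvironmentRepresentation`, the function `ess_step`, the `SceneDescriber` signature and the toy RADAR rule are all hypothetical names introduced for illustration, and the dictionary merge is only a placeholder for a real fusion of the Motion Actuation Subsystem estimate with GNSS Data.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple


@dataclass
class BasicEnvironmentRepresentation:
    """Hypothetical container; field names are illustrative assumptions."""
    spatial_attitude: Dict[str, Any]
    scene_descriptors: Dict[str, Any] = field(default_factory=dict)


# Hypothetical signature of an EST-specific Scene Description step:
# (EST data, previous BER or None) -> (Scene Descriptors, Alert or None).
SceneDescriber = Callable[
    [Any, Optional[BasicEnvironmentRepresentation]], Tuple[Any, Optional[str]]
]


def ess_step(
    initial_attitude: Dict[str, Any],
    gnss_data: Dict[str, Any],
    est_data: Dict[str, Any],
    describers: Dict[str, SceneDescriber],
    previous_ber: Optional[BasicEnvironmentRepresentation],
    alerts: List[Tuple[str, str]],
) -> BasicEnvironmentRepresentation:
    # Compute the Spatial Attitude. Merging two dicts is a placeholder
    # for a real fusion of the MAS estimate with GNSS Data.
    attitude = {**initial_attitude, **gnss_data}
    ber = BasicEnvironmentRepresentation(spatial_attitude=attitude)

    for est, data in est_data.items():
        # Each EST is processed individually and may consult the previous BER.
        descriptors, alert = describers[est](data, previous_ber)
        if alert is not None:
            # Appending to a list stands in for sending the Alert
            # to the Autonomous Motion Subsystem.
            alerts.append((est, alert))
        # Integrate the EST-specific Scene Descriptors into the new BER.
        ber.scene_descriptors[est] = descriptors
    return ber


def toy_radar(data, previous_ber):
    # Toy rule (assumption): raise an Alert when any range is below 5 m.
    alert = "obstacle closer than 5 m" if min(data) < 5.0 else None
    return {"ranges_m": sorted(data)}, alert


sent: List[Tuple[str, str]] = []
ber = ess_step(
    {"heading_deg": 90.0},
    {"lat": 45.0, "lon": 7.0},
    {"RADAR": [12.0, 3.5]},
    {"RADAR": toy_radar},
    None,
    sent,
)
print(ber.scene_descriptors["RADAR"], sent)
```

Keeping one describer per EST mirrors the assumption that ESTs are processed individually; an implementation that combines ESTs could register a single describer under a combined key while leaving the loop unchanged.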

3 I/O Data of Environment Sensing Subsystem

The currently considered Environment Sensing Technologies (EST) are:

- Global navigation satellite system or GNSS (~1 & 1.5 GHz Radio).

- Geographical Position and Orientation, and their time derivatives up to 2nd order (Spatial Attitude).

- Visual Data in the visible range, possibly supplemented by depth information (400 to 800 THz).

- LiDAR Data (~200 THz infrared).

- RADAR Data (~25 & 75 GHz).

- Ultrasound Data (> 20 kHz).

- Audio Data in the audible range (16 Hz to 20 kHz).

- Spatial Attitude (from the Motion Actuation Subsystem).

- Other environmental data (temperature, humidity, …).
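The frequencies quoted for the electromagnetic ESTs can be converted to wavelengths with the relation wavelength = c / frequency. The sketch below is illustrative only: the dictionary keys and the function name `wavelength_m` are hypothetical, and the nominal frequencies are the approximate values given in the list above. Acoustic data (ultrasound, audio) is left out because its wavelength depends on the speed of sound in the medium rather than on the speed of light.

```python
# Speed of light in vacuum in m/s (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Return the vacuum wavelength in metres for a frequency in hertz."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT_M_S / frequency_hz


# Nominal frequencies taken from the EST list (approximate values).
nominal_frequencies_hz = {
    "GNSS (lower band)": 1.0e9,
    "GNSS (upper band)": 1.5e9,
    "RADAR (lower band)": 25e9,
    "RADAR (upper band)": 75e9,
    "LiDAR": 200e12,
    "Visual (lower edge)": 400e12,
}

for name, f_hz in nominal_frequencies_hz.items():
    print(f"{name}: {wavelength_m(f_hz):.3e} m")
```

With these values, LiDAR at about 200 THz corresponds to a wavelength of roughly 1.5 µm, and RADAR at about 75 GHz to roughly 4 mm.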

Offline Map data can be accessed either from MPAI Store information or online.

Table 6 gives the input/output data of the Environment Sensing Subsystem.

Table 6 – I/O data of Environment Sensing Subsystem

| Input data | From | Comment |
| --- | --- | --- |
| RADAR Data | ~25 & 75 GHz Radio | Capture Environment with RADAR |
| LiDAR Data | ~200 THz infrared | Capture Environment with LiDAR |
| Visual Data | Video (400-800 THz) | Capture Environment with cameras |
| Ultrasound Data | Audio (>20 kHz) | Capture Environment with Ultrasound |
| Offline Map Data | Local storage or online | cm-level data at time of capture |
| Audio Data | Audio (16 Hz-20 kHz) | Capture Environment or cabin with Microphone Array |
| Microphone Array Geometry | Microphone Array | Microphone Array disposition |
| Global Navigation Satellite System (GNSS) Data | ~1 & 1.5 GHz Radio | Get Pose from GNSS |
| Spatial Attitude | Motion Actuation Subsystem | To be fused with GNSS Data |
| Other Environment Data | Motion Actuation Subsystem | Temperature etc. added to Basic Environment Representation |

| Output data | To | Comment |
| --- | --- | --- |
| Alert | Autonomous Motion Subsystem | Critical information from an EST |
| Basic Environment Representation | Autonomous Motion Subsystem | ESS-derived representation of external Environment |

4 Functions of Environment Sensing Subsystem’s AI Modules

Table 7 gives the functions of all AIMs of the Environment Sensing Subsystem.

Table 7 – Functions of Environment Sensing Subsystem’s AI Modules

| AIM | Function |

| RADAR Scene Description | Produces RADAR Scene Descriptors and Alert from RADAR Data. |

| LiDAR Scene Description | Produces LiDAR Scene Descriptors and Alert from LiDAR Data. |

| Traffic Signalisation Recognition | Produces Road Topology of the Environment from Visual and LiDAR Data. |

| Visual Scene Description | Produces Visual Scene Descriptors and Alert from Visual Data. |

| Ultrasound Scene Description | Produces Ultrasound Scene Descriptors and Alert from Ultrasound Data. |

| Online Map Scene Description | Produces Online Map Data Scene Descriptors from Online Map Data. |

| Audio Scene Description | Produces Audio Scene Descriptors and Alert from Audio Data. |

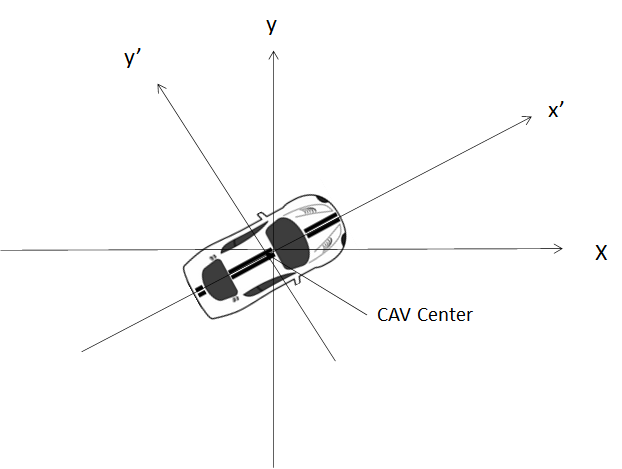

| Spatial Attitude Generation | Computes the CAV Spatial Attitude using information received from GNSS and Motion Actuation Subsystem with respect to CAV Centre. |

| Environment Sensing Subsystem Data Fusion | Receives critical Environment Representation as Alert and Scene Descriptors from the different ESTs.

The Basic Environment Representation (BER) includes all available information from ESS that enables the CAV to define a Path in the Decision Horizon Time. The BER results from the integration of: 1. The different Scene Descriptors generated by the different EST-specific Scene Description AIMs. 2. Environment Data. 3. The Spatial Attitude of the Ego CAV (Figure 5). |

Figure 5 – Spatial Attitude in a CAV
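Since the Spatial Attitude comprises position and orientation together with their time derivatives up to the 2nd order, it can be pictured as a small record. The sketch below is an assumption-laden illustration, not a normative data format: the class name, field names, units and the use of a local Cartesian frame with three orientation angles are all hypothetical. The helper `extrapolate_position` shows one use of the derivatives, a constant-acceleration prediction p + v·dt + ½·a·dt².

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class SpatialAttitude:
    """Hypothetical layout: position, orientation and their derivatives."""
    position: Vec3              # metres, in an assumed local Cartesian frame
    orientation: Vec3           # radians, assumed roll, pitch, yaw
    velocity: Vec3              # 1st derivative of position, m/s
    angular_velocity: Vec3      # 1st derivative of orientation, rad/s
    acceleration: Vec3          # 2nd derivative of position, m/s^2
    angular_acceleration: Vec3  # 2nd derivative of orientation, rad/s^2


def extrapolate_position(sa: SpatialAttitude, dt: float) -> Vec3:
    """Predict position after dt seconds assuming constant acceleration."""
    x, y, z = (
        p + v * dt + 0.5 * a * dt * dt
        for p, v, a in zip(sa.position, sa.velocity, sa.acceleration)
    )
    return (x, y, z)


zero: Vec3 = (0.0, 0.0, 0.0)
sa = SpatialAttitude(
    position=zero,
    orientation=zero,
    velocity=(10.0, 0.0, 0.0),
    angular_velocity=zero,
    acceleration=(2.0, 0.0, 0.0),
    angular_acceleration=zero,
)
predicted = extrapolate_position(sa, 1.0)
print(predicted)
```

In this example the CAV starts at the origin moving at 10 m/s along the first axis with 2 m/s² acceleration, so after one second the predicted position along that axis is 0 + 10·1 + ½·2·1² = 11 m.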

5 I/O Data of Environment Sensing Subsystem’s AI Modules

For each AIM of the Environment Sensing Subsystem (1st column), Table 8 gives the input data (2nd column) and the output data (3rd column). Note that the Basic Environment Representation in column 2 refers to the previously produced BER.

Table 8 – I/O Data of Environment Sensing Subsystem’s AI Modules

| AIM | Input | Output |
| --- | --- | --- |
| RADAR Scene Description | RADAR Data, Basic Environment Representation | Alert, RADAR Scene Descriptors |
| LiDAR Scene Description | LiDAR Data, Basic Environment Representation | Alert, LiDAR Scene Descriptors |
| Traffic Signalisation Recognition | Visual Data, Basic Environment Representation | Alert, Road Topology |
| Visual Scene Description | Visual Data, Basic Environment Representation | Alert, Visual Scene Descriptors |
| Ultrasound Scene Description | Ultrasound Data, Basic Environment Representation | Alert, Ultrasound Scene Descriptors |
| Map Scene Description | Offline Map Data, Basic Environment Representation | Alert, Map Scene Descriptors |
| Audio Scene Description | Audio Data, Basic Environment Representation | Alert, Audio Scene Descriptors |
| Spatial Attitude Generation | GNSS Data, Spatial Attitude from MAS | Spatial Attitude |
| Environment Sensing Subsystem Data Fusion | RADAR Scene Descriptors, LiDAR Scene Descriptors, Road Topology, Visual Scene Descriptors, Ultrasound Scene Descriptors, Map Scene Descriptors, Audio Scene Descriptors, Spatial Attitude, Other Environment Data | Alert, Basic Environment Representation |
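The AIM inputs and outputs of Table 8 form a small data-flow graph, and it can be useful to check that every AIM input is either an external input of the Subsystem or the output of some AIM. The sketch below is a hypothetical consistency check, not part of the specification: the names `AIMS`, `EXTERNAL_INPUTS` and `unresolved_inputs` are illustrative, the Visual Scene Description AIM is taken to output Visual Scene Descriptors, and the Basic Environment Representation used as input is treated as the previously produced BER.

```python
from typing import Dict, List, Set, Tuple

BER = "Basic Environment Representation"

# AIM name -> (inputs, outputs), transcribed from Table 8.
AIMS: Dict[str, Tuple[Set[str], Set[str]]] = {
    "RADAR Scene Description": (
        {"RADAR Data", BER}, {"Alert", "RADAR Scene Descriptors"}),
    "LiDAR Scene Description": (
        {"LiDAR Data", BER}, {"Alert", "LiDAR Scene Descriptors"}),
    "Traffic Signalisation Recognition": (
        {"Visual Data", BER}, {"Alert", "Road Topology"}),
    "Visual Scene Description": (
        {"Visual Data", BER}, {"Alert", "Visual Scene Descriptors"}),
    "Ultrasound Scene Description": (
        {"Ultrasound Data", BER}, {"Alert", "Ultrasound Scene Descriptors"}),
    "Map Scene Description": (
        {"Offline Map Data", BER}, {"Alert", "Map Scene Descriptors"}),
    "Audio Scene Description": (
        {"Audio Data", BER}, {"Alert", "Audio Scene Descriptors"}),
    "Spatial Attitude Generation": (
        {"GNSS Data", "Spatial Attitude from MAS"}, {"Spatial Attitude"}),
    "Environment Sensing Subsystem Data Fusion": (
        {"RADAR Scene Descriptors", "LiDAR Scene Descriptors", "Road Topology",
         "Visual Scene Descriptors", "Ultrasound Scene Descriptors",
         "Map Scene Descriptors", "Audio Scene Descriptors",
         "Spatial Attitude", "Other Environment Data"},
        {"Alert", BER}),
}

# Data entering the Subsystem from sensors, storage or the MAS.
EXTERNAL_INPUTS: Set[str] = {
    "RADAR Data", "LiDAR Data", "Visual Data", "Ultrasound Data",
    "Offline Map Data", "Audio Data", "GNSS Data",
    "Spatial Attitude from MAS", "Other Environment Data",
}


def unresolved_inputs(
    aims: Dict[str, Tuple[Set[str], Set[str]]], external: Set[str]
) -> Dict[str, List[str]]:
    """Map each AIM to its inputs that no AIM produces and that are not external."""
    produced: Set[str] = set().union(*(outs for _, outs in aims.values()))
    missing = {}
    for name, (ins, _) in aims.items():
        gap = ins - produced - external
        if gap:
            missing[name] = sorted(gap)
    return missing


print(unresolved_inputs(AIMS, EXTERNAL_INPUTS))
```

An empty result means the graph is closed under the stated assumptions; dropping an entry from `EXTERNAL_INPUTS` makes the corresponding AIM appear in the result, which is a quick way to spot a misnamed data item in the table.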