| 1 Functions | 2 Reference Model | 3 I/O Data |

| 4 Functions of AI Modules | 5 I/O Data of AI Modules | 6 AIW, AIMs, and JSON |

| 7 Reference Software | 8 Conformance Testing | 9 Performance Assessment |

1 Functions

The Environment Sensing Subsystem (ESS) of a Connected Autonomous Vehicle (CAV):

- Senses the electromagnetic and acoustic sources in the environment.

- Receives Weather Data from the Motion Actuation Subsystem (MAS).

- Computes its Spatial Attitude using GNSS and spatial data from the MAS.

- Produces a sequence of Basic Environment Descriptors by processing data acquired by a variety of sensors (Audio, Visual, LiDAR, RADAR, Ultrasound, and Offline Map) and passes it to the Autonomous Motion Subsystem (AMS).

2 Reference Model

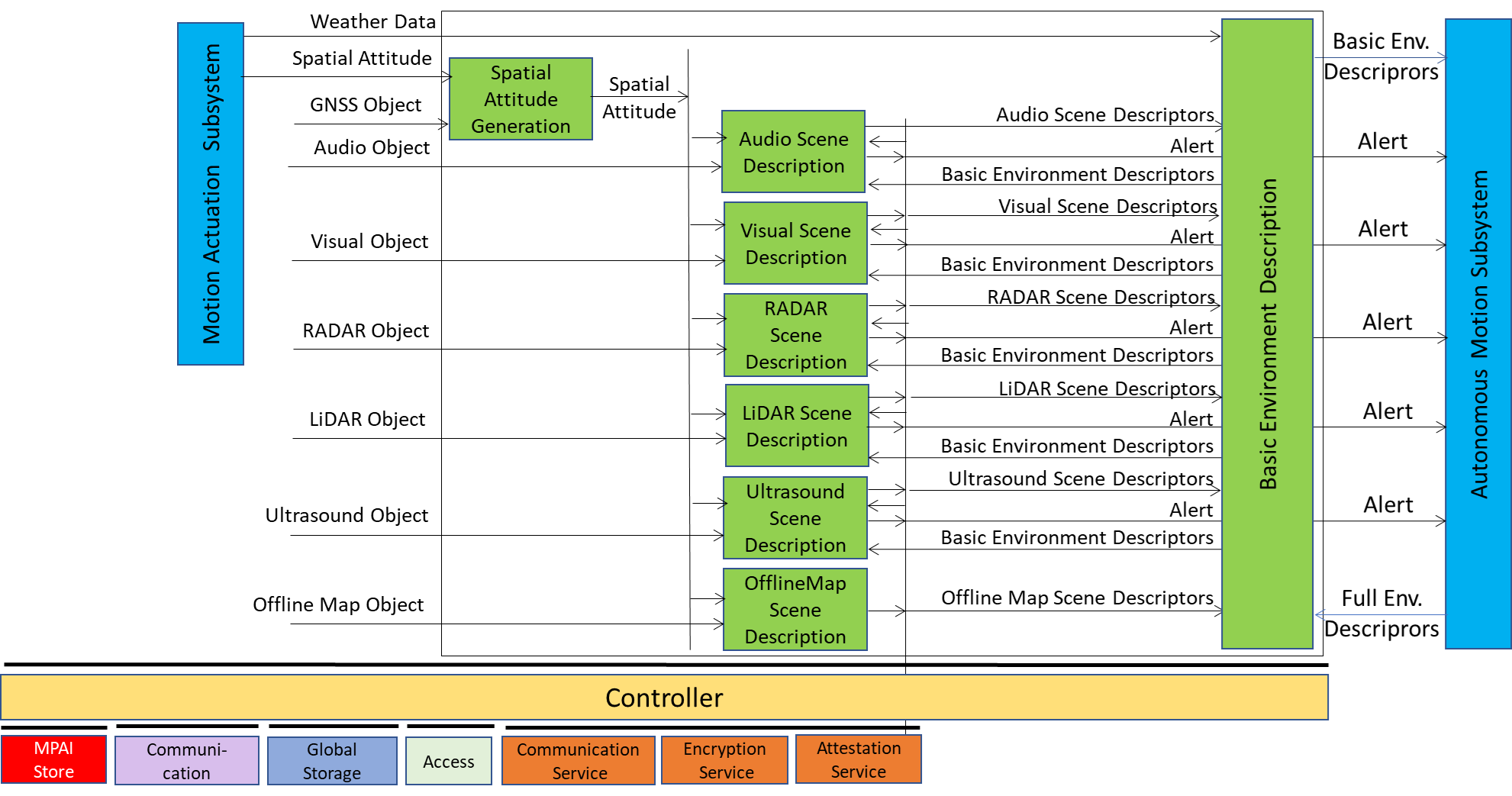

Figure 1 gives the Reference Model of the Environment Sensing Subsystem.

Figure 1 – Environment Sensing Subsystem Reference Model


The sequence of operations of the Environment Sensing Subsystem unfolds as follows:

- The Spatial Attitude Generation AIM computes the CAV’s Spatial Attitude using the initial Spatial Attitude provided by the Motion Actuation Subsystem and the GNSS Object.

- All Environment Sensing Technology (EST)-specific Scene Description AIMs available onboard:

  - Receive EST-specific Data Objects, e.g., the RADAR Scene Description AIM receives a RADAR Object provided by the RADAR EST (not shown in Figure 1). The Offline Map is considered an EST.

  - Produce and send Alerts, if necessary, to the Autonomous Motion Subsystem.

  - Access Basic Environment Descriptors of previous times, if needed.

  - Produce EST-specific Scene Descriptors, e.g., the RADAR Scene Descriptors.

- The Basic Environment Description AIM integrates the different EST-specific Scene Descriptors, Weather Data, and Road State into the Basic Environment Descriptors.
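The sequence of operations above can be pictured as one processing cycle. The following is a minimal sketch under stated assumptions, not the reference software: every class, function, and field name (`run_ess_cycle`, `describe_scene`, the `critical` flag, and so on) is hypothetical, the Spatial Attitude computation is only a stand-in that pairs its two inputs, and Road State is passed in as a parameter because this text does not say where it comes from.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Alert:
    est: str        # EST that raised the Alert, e.g. "RADAR"
    message: str


@dataclass
class SceneDescriptors:
    est: str
    objects: list = field(default_factory=list)


@dataclass
class BasicEnvironmentDescriptors:
    scene_descriptors: dict   # EST name -> SceneDescriptors
    weather_data: Any
    road_state: Any


def generate_spatial_attitude(mas_spatial_attitude: Any, gnss_object: Any) -> dict:
    """Stand-in for the Spatial Attitude Generation AIM; it only pairs its inputs."""
    return {"mas_initial": mas_spatial_attitude, "gnss": gnss_object}


def describe_scene(est: str, data_object: dict,
                   previous_bed: Optional[BasicEnvironmentDescriptors] = None):
    """Stand-in for one EST-specific Scene Description AIM."""
    # previous_bed is accepted because an AIM may consult earlier BEDs; unused here.
    alert = None
    if data_object.get("critical"):          # hypothetical flag, for illustration only
        alert = Alert(est, str(data_object["critical"]))
    return SceneDescriptors(est, [data_object]), alert


def run_ess_cycle(est_objects: dict, mas_spatial_attitude: Any, gnss_object: Any,
                  weather_data: Any, road_state: Any,
                  previous_bed: Optional[BasicEnvironmentDescriptors] = None):
    """Run one cycle: Spatial Attitude, per-EST Scene Descriptors and Alerts, then BED."""
    spatial_attitude = generate_spatial_attitude(mas_spatial_attitude, gnss_object)
    descriptors, alerts = {}, []
    for est, data_object in est_objects.items():
        scene, alert = describe_scene(est, data_object, previous_bed)
        descriptors[est] = scene
        if alert is not None:
            alerts.append(alert)             # would be sent to the Autonomous Motion Subsystem
    bed = BasicEnvironmentDescriptors(descriptors, weather_data, road_state)
    return spatial_attitude, bed, alerts
```

Keeping each Scene Description step behind the same call signature mirrors Note 1: an implementation could merge two ESTs into one function as long as the inputs and outputs seen by the rest of the cycle stay the same.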

Note 1: Although Figure 1 shows individually processed ESTs, an implementation may combine two or more Scene Description AIMs to handle two or more ESTs, provided the relevant interfaces are preserved.

Note 2: The Objects in the Basic Environment Descriptors (BEDs) may carry Annotations specifically related to traffic signalling, e.g.:

- Position and Orientation of traffic signals in the environment:

  - Traffic Policemen

  - Road signs (lanes, turn right/left on the road, one way, stop signs, words on the road)

  - Traffic signs – vertical signalisation (signs above the road, signs on objects, poles with signs)

  - Traffic lights

  - Walkways

- Traffic sound (siren, whistle, horn)

3 I/O Data

Table 1 gives the input/output data of the Environment Sensing Subsystem.

Table 1 – I/O data of Environment Sensing Subsystem

| Input data | From | Comment |
|---|---|---|
| RADAR Object | ~25 & 75 GHz Radio | Environment Capture with RADAR |
| LiDAR Object | ~200 THz infrared | Environment Capture with LiDAR |
| Visual Object | Video (400-800 THz) | Environment Capture with visual cameras |
| Ultrasound Object | Audio (>20 kHz) | Environment Capture with Ultrasound |
| Offline Map Object | Local storage or online | cm-level data at time of capture |
| Audio Object | Audio (16 Hz-20 kHz) | Environment or cabin Capture with Microphone Array |
| GNSS Object | ~1 & 1.5 GHz Radio | Get Pose from GNSS |
| Spatial Attitude | Motion Actuation Subsystem | To be fused with Pose from GNSS Data |
| Weather Data | Motion Actuation Subsystem | Temperature, Humidity, etc. |
| Full Environment Descriptors | Autonomous Motion Subsystem | FED refers to a previous time. |

| Output data | To | Comment |
|---|---|---|
| Alert | Autonomous Motion Subsystem | Critical information from an EST. |
| Basic Environment Descriptors | Autonomous Motion Subsystem | ESS-derived Environment Descriptors |
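The "From" column of Table 1 identifies several sources by frequency. As an aid to reading those figures, the sketch below converts the nominal frequencies to wavelengths with λ = v / f. The helper name `wavelength_m` and the list layout are illustrative only, and the speed of sound used for the ultrasound row (343 m/s, roughly dry air at 20 °C) is an assumption, not a value taken from this document.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0   # exact by SI definition
SPEED_OF_SOUND_M_S = 343.0           # assumption: dry air at about 20 degrees Celsius


def wavelength_m(frequency_hz: float, propagation_speed_m_s: float) -> float:
    """Return the wavelength in metres for a wave of the given frequency and speed."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return propagation_speed_m_s / frequency_hz


# Nominal frequencies copied from Table 1; the labels are descriptive only.
SOURCES = [
    ("RADAR, lower band", 25e9, SPEED_OF_LIGHT_M_S),
    ("RADAR, upper band", 75e9, SPEED_OF_LIGHT_M_S),
    ("LiDAR infrared", 200e12, SPEED_OF_LIGHT_M_S),
    ("Visual, low end", 400e12, SPEED_OF_LIGHT_M_S),
    ("Visual, high end", 800e12, SPEED_OF_LIGHT_M_S),
    ("Ultrasound, lower bound", 20e3, SPEED_OF_SOUND_M_S),
]

for label, frequency_hz, speed in SOURCES:
    print(f"{label}: {wavelength_m(frequency_hz, speed):.3e} m")
```

Under these assumptions, the ~200 THz LiDAR entry corresponds to a wavelength of about 1.5 µm, and the two RADAR bands to roughly 12 mm and 4 mm.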

4 Functions of AI Modules

Table 2 gives the functions of all AIMs of the Environment Sensing Subsystem.

Table 2 – Functions of Environment Sensing Subsystem’s AI Modules

| AIM | Function |
|---|---|
| Spatial Attitude Generation | Computes the CAV Spatial Attitude from CAV Centre using GNSS Object and MAS’s initial Spatial Attitude. |
| Audio Scene Description | Produces Audio Scene Descriptors and Alert. |
| Visual Scene Description | Produces Visual Scene Descriptors and Alert. |
| LiDAR Scene Description | Produces LiDAR Scene Descriptors and Alert. |
| RADAR Scene Description | Produces RADAR Scene Descriptors and Alert. |
| Ultrasound Scene Description | Produces Ultrasound Scene Descriptors and Alert. |
| Offline Map Scene Description | Produces Offline Map Scene Descriptors. |
| Basic Environment Description | Produces Basic Environment Descriptors. |

5 I/O Data of AI Modules

For each AIM of the Environment Sensing Subsystem (1st column), Table 3 gives the input data (2nd column) and the output data (3rd column). Note that the Basic Environment Descriptors in column 2 refer to a previously produced BED.

Table 3 – I/O Data of Environment Sensing Subsystem’s AI Modules

6 AIW, AIMs, and JSON

Table 4 – AIW, AIMs, and JSON Metadata

| AIW | AIM | Name | JSON |
|---|---|---|---|
| CAV-ESS | | Environment Sensing Subsystem | X |
| | OSD-ASD | Audio Scene Description | X |
| | CAV-BED | Basic Environment Description | X |
| | CAV-LSD | LiDAR Scene Description | X |
| | CAV-OSD | Offline Map Scene Description | X |
| | CAV-RSD | RADAR Scene Description | X |
| | CAV-SAG | Spatial Attitude Generation | X |
| | CAV-USD | Ultrasound Scene Description | X |
| | OSD-VSD | Visual Scene Description | X |
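The identifiers in Table 4 share a `PREFIX-SUFFIX` shape. The sketch below stores the table as a plain mapping and groups the entries by prefix. The function names are hypothetical, and reading the prefix as the specification that defines each entry is an assumption drawn only from the identifiers themselves. The JSON column is not modelled, because its contents are not given here.

```python
from collections import defaultdict

# Identifier -> name, copied from Table 4.
TABLE_4 = {
    "CAV-ESS": "Environment Sensing Subsystem",
    "OSD-ASD": "Audio Scene Description",
    "CAV-BED": "Basic Environment Description",
    "CAV-LSD": "LiDAR Scene Description",
    "CAV-OSD": "Offline Map Scene Description",
    "CAV-RSD": "RADAR Scene Description",
    "CAV-SAG": "Spatial Attitude Generation",
    "CAV-USD": "Ultrasound Scene Description",
    "OSD-VSD": "Visual Scene Description",
}


def split_identifier(identifier: str) -> tuple:
    """Split 'CAV-RSD' into ('CAV', 'RSD'); reject anything without both parts."""
    prefix, separator, suffix = identifier.partition("-")
    if not (prefix and separator and suffix):
        raise ValueError(f"not a PREFIX-SUFFIX identifier: {identifier!r}")
    return prefix, suffix


def group_by_prefix(table: dict) -> dict:
    """Group the identifiers of a table by the part before the hyphen."""
    groups = defaultdict(list)
    for identifier in table:
        prefix, _ = split_identifier(identifier)
        groups[prefix].append(identifier)
    return dict(groups)


for prefix, identifiers in group_by_prefix(TABLE_4).items():
    print(prefix, sorted(identifiers))
```

Grouping this way shows at a glance that the Audio and Visual Scene Description entries carry a different prefix from the rest of the subsystem.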

7 Reference Software

8 Conformance Testing