<-Go to AI Workflows Go to ToC Conversation about a scene->

| 1 Functions | 2 Reference Architecture | 3 I/O Data |

| 4 Functions of AI Modules | 5 I/O Data of AI Modules | 6 AIW, AIMs, and JSON Metadata |

| 7 Reference Software | 8 Conformance Texting | 9 Performance Assessment |

1 Functions

The Answer to Multimodal Question MMC-AMQ) receives a question expressed as a Text Object or a Speech Object and an Image and provides Text and/or Speech giving information in response to the question.

2 Reference Model

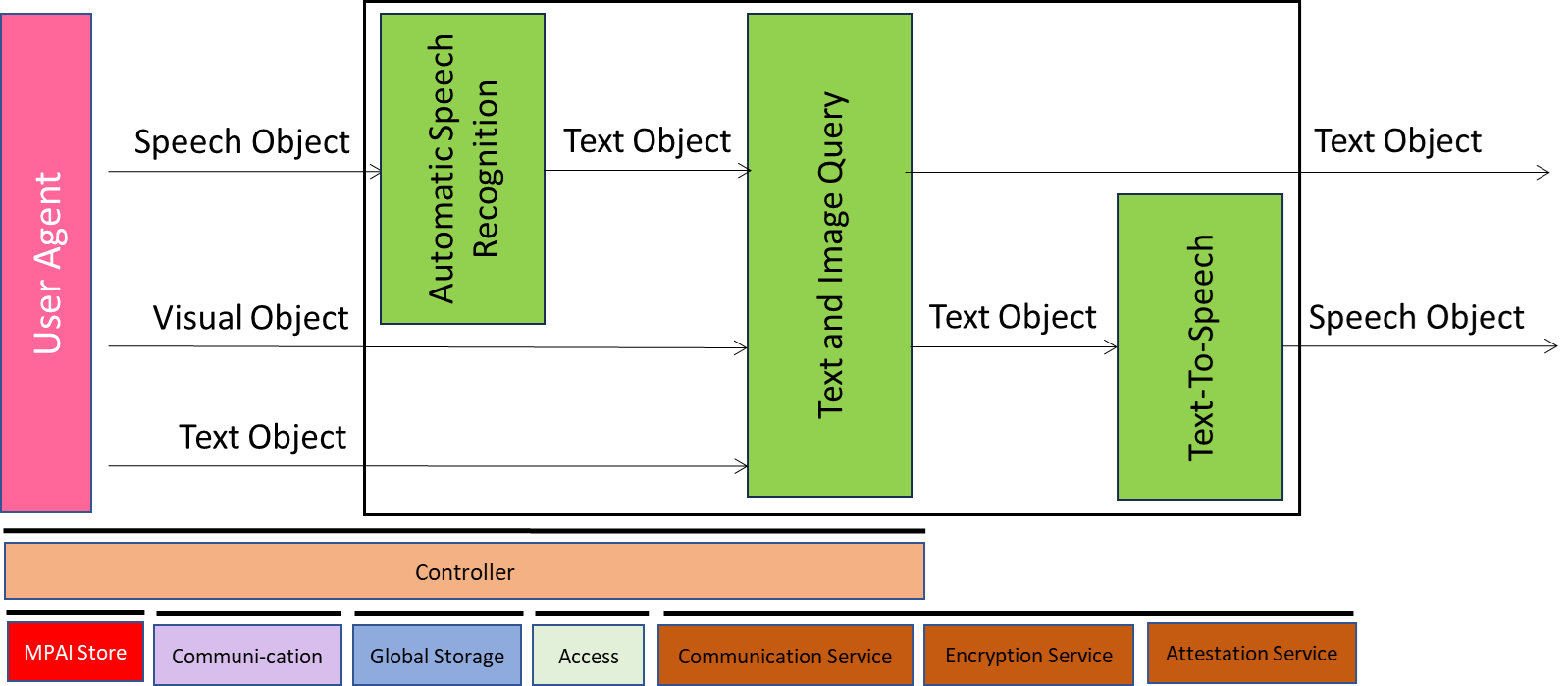

Figure 1 specifies the Answer to Multimodal Question (MMC-AMQ) Reference Model including the input/output data, the AIMs, and the data exchanged between and among the AIMs.

Figure 1 – Reference Model of Answer to Multimodal Question (MMC-AMQ)

The operation of Answer to Multimodal Question (MMC-AMQ) develops in the following way:

- A user provides

- Text Object or Speech Object

- An Image

- The machine provides the answer expressed as Text Object and/or Speech Object.

3 I/O Data

The input and output data of the Answer to Multimodal Question (MMC-AMQ) Use Case are:

Table 1 – I/O Data of Multimodal Question Answering

| Input | Descriptions |

| Text Object | Text typed by the human as a replacement for Input Speech. |

| Image | Image about which a question is asked. |

| Speech Object | Speech question to the Machine. |

| Output | Descriptions |

| Machine Text | The Text generated by Machine in response to human input. |

| Machine Speech | The Speech generated by Machine in response to human input. |

4 Functions of AI Modules

Table 2 provides the functions of the Answer to Multimodal Question (MMC-AMQ) Use Case.

Table 2 – Functions of AI Modules of Multimodal Question Answering (MMC-AMQ)

| AIM | Function |

| Automatic Speech Recognition | Recognises Speech. |

| Text and Image Query | Produces Text response to the query. |

| Text-to-Speech | Synthesises Speech from Text. |

5 I/O Data of AI Modules

The AI Modules of Multimodal Question Answering (MMC-AMQ) are given in Table 3.

Table 3 – AI Modules of Multimodal Question Answering (MMC-AMQ)

| AIM | Receives | Produces |

| Automatic Speech Recognition | Speech Object | Recognised Text |

| Text and Image Query | 1. Input or Recognised Text 2. Image |

Machine Text |

| Text-to-Speech | Machine Text | Machine Speech |

6 AIW, AIMs, and JSON Metadata

Table 4 provides the links to the AIW and AIM specifications and to the JSON syntaxes. AIMs/1 indicates that the column contains Composite AIMs and AIMs/2 indicates that the column contains their Basic AIMs.

Table 4 – AIW, AIMs, and JSON Metadata

| AIM | AIMs | Name | JSON |

| MMC-AMQ | Answer to Multimodal Question | X | |

| MMC-ASR | Automatic Speech Recognition | X | |

| MMC-TIQ | Text and Image Query | X | |

| MMC-TTS | Text-to-Speech | X |

7 Reference Software

7.1 Disclaimers

- This MMM-AMQ Reference Software Implementation is released with the BSD-3-Clause licence.

- The purpose of this Reference Software is to demonstrate a working Implementation of MMC-AMQ, not to provide a ready-to-use product.

- MPAI disclaims the suitability of the Software for any other purposes and does not guarantee that it is secure.

- Use of this Reference Software may require acceptance of licences from the respective repositories. Users shall verify that they have the right to use any third-party software required by this Reference Software.

7.2 Guide to the AMQ code

Use of this AI Workflow is for developers who are familiar with Python and downloading models from HuggingFace,

A wrapper for three model is provided the Whisper (ASR), BLIP (TIQ), and speech5 (TTS):

- Manages input files and parameters: Speech Object, Visual Object, Text Object

- Executes the AIW to perform the Answer to Multimodal Question on each individual pair of Speech/Text and Visual Object.

- Outputs the answer as Speech Object and Text Object.

The OSD-AQM Reference Software is found at the NNW gitlab site. It contains:

- The python code implementing the AIW.

- The required libraries are: pytorch, transformers (HuggingFace), datasets (HuggingFace), soundfile, and pillow

7.3 Acknowledgements

This version of the MMC-AMQ Reference Software has been developed by the MPAI Neural Network Watermarking Development Committee (NNW-DC).

8 Conformance Testing

| Input Data | Data Type | Input Conformance Testing Data |

| Text Object | Unicode | All input Text files to be drawn from Text Files. |

| Speech Object | .wav | All input Speech files to be drawn from Audio Files. |

| Input Image | JPEG | All input Image files to be drawn from Images. |

| Output Data | Data Type | Input Conformance Testing Data |

| Machine Text | Unicode | All Text files produced shall conform with Text files. |

| Machine Speech | .wav | All Speech files produced shall conform with Speech files. |

9 Performance Assessment