What are the dimensions targeted by the new MPAI standard? Its goal is to enable humans to experience virtual replicas of a real-world audio scene from different perspectives while moving through it and turning their heads. The standard will be called Six Degrees of Freedom Audio, with the acronym CAE-6DF.

As a rule, before developing a new standard, MPAI publishes a Call for Technologies that describes the purpose of the Call and what respondents should do to have their responses accepted for consideration. The Call is complemented by two documents: one specifying the functional requirements and one specifying the commercial requirements that the planned standard should satisfy.

The 44th MPAI General Assembly has published three Calls for Technologies, one of which is for Six Degrees of Freedom Audio. The standard will be developed by the Context-based Audio Enhancement Development Committee (CAE-DC). An online presentation of this Call will be held on 2024/05/28 (Tuesday) at 16 UTC. If you wish to attend the presentation (recommended if you intend to respond), please register.

State-of-the-art VR headsets provide high-quality realistic visual content by tracking both the user’s orientation and position in 3D space. This capability opens new opportunities for enhancing the degree of immersion in VR experiences. VR games have become increasingly immersive over the years based on these developments.
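To make the "six degrees of freedom" concrete, the following minimal sketch models a tracked listener pose as three translational and three rotational values, and shows how both position and orientation change what a listener should hear from a fixed source. The class name `Pose6DoF`, its fields, and the axis convention (x forward at zero yaw, y to the left, counter-clockwise yaw positive) are illustrative assumptions and are not taken from the CAE-6DF Call.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Pose6DoF:
    """Hypothetical listener pose: 3 translational + 3 rotational degrees of freedom."""
    x: float      # position in metres
    y: float
    z: float
    yaw: float    # head rotation about the vertical axis, in radians
    pitch: float  # not used by the helpers below; kept to show all six values
    roll: float

    def distance_to(self, source_xyz):
        """Euclidean distance from the listener to a sound source."""
        sx, sy, sz = source_xyz
        return math.sqrt((sx - self.x) ** 2 + (sy - self.y) ** 2 + (sz - self.z) ** 2)

    def azimuth_to(self, source_xyz):
        """Horizontal angle of the source relative to the facing direction.

        Only yaw is taken into account (an assumed simplification).
        The result is wrapped to the range [-pi, pi].
        """
        sx, sy, _ = source_xyz
        world_angle = math.atan2(sy - self.y, sx - self.x)
        relative = world_angle - self.yaw
        return math.atan2(math.sin(relative), math.cos(relative))


# A source 2 m to the listener's left is at +90 degrees; after the listener
# turns the head 90 degrees to the left, the same source is straight ahead.
source = (0.0, 2.0, 0.0)
facing_forward = Pose6DoF(0.0, 0.0, 0.0, yaw=0.0, pitch=0.0, roll=0.0)
facing_source = Pose6DoF(0.0, 0.0, 0.0, yaw=math.pi / 2, pitch=0.0, roll=0.0)
```

A renderer would re-evaluate such quantities every time the tracker reports a new pose, which is why responsiveness to user movement matters for 6DoF audio.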

However, despite the success of synthetic virtual environments such as 3D first-person games, environments that feature content dynamically captured from the real world are yet to be widely deployed. Recent developments, such as dynamic Neural Radiance Fields (NeRFs) and 4D Gaussian splatting, promise to give users the ability to be fully immersed in visual scenes populated by both static and dynamic entities.

Capturing audiovisual scenes with both static and dynamic entities promises a full immersion experience, but visual immersion alone is not sufficient without an equally convincing auditory immersion. CAE-6DF should enable users to experience an immersive theatre production through a VR headset, for example walking around actors and getting closer to different conversations, or a concert where a user can choose different seats to experience the performance with a 360° video associated with those viewing positions. Additionally, CAE-6DF should enable experiencing the acoustics of the concert hall from different perspectives.

The CAE-6DF Call seeks innovative technologies that enable and support such experiences. Specifically, it looks for technologies that efficiently represent content in scene-based or object-based formats, or a mixture of these, process it with low latency, and respond quickly to user movements. It should be possible to render the audio scene over loudspeakers or headphones. These technologies should also consider audio-visual cross-modal effects to present a high level of auditory immersion that complements the visual immersion provided by state-of-the-art volumetric environments.

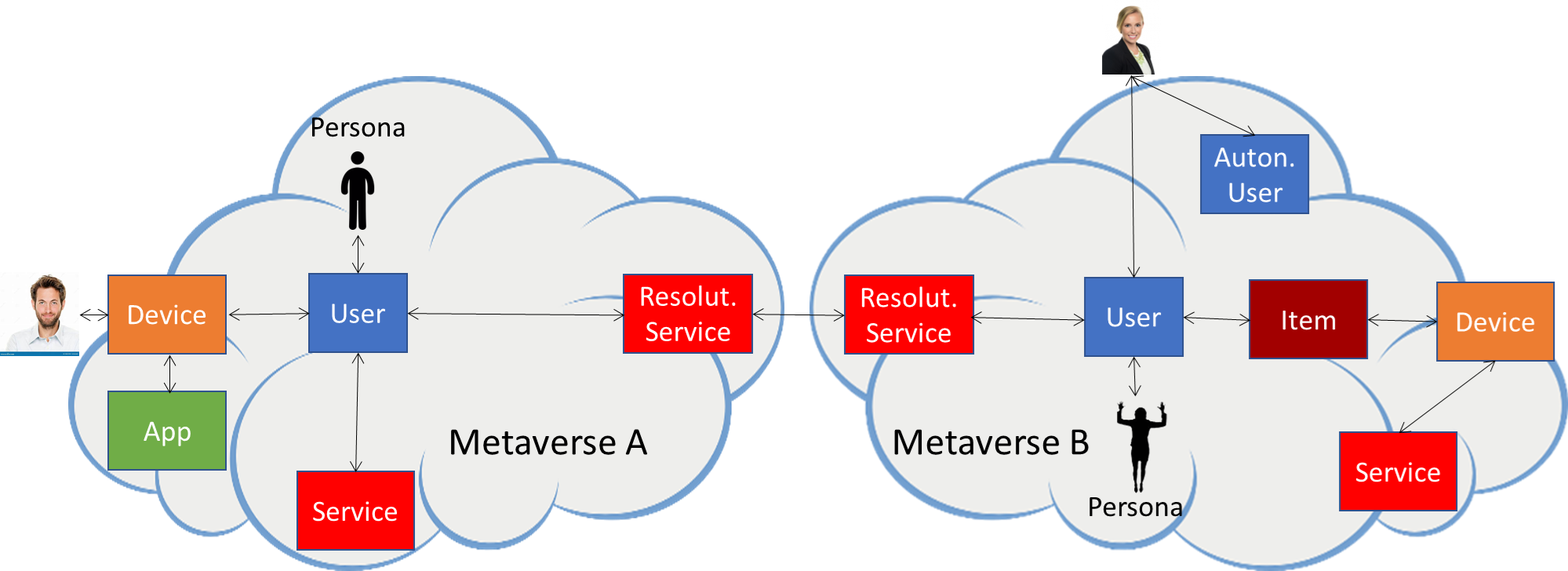

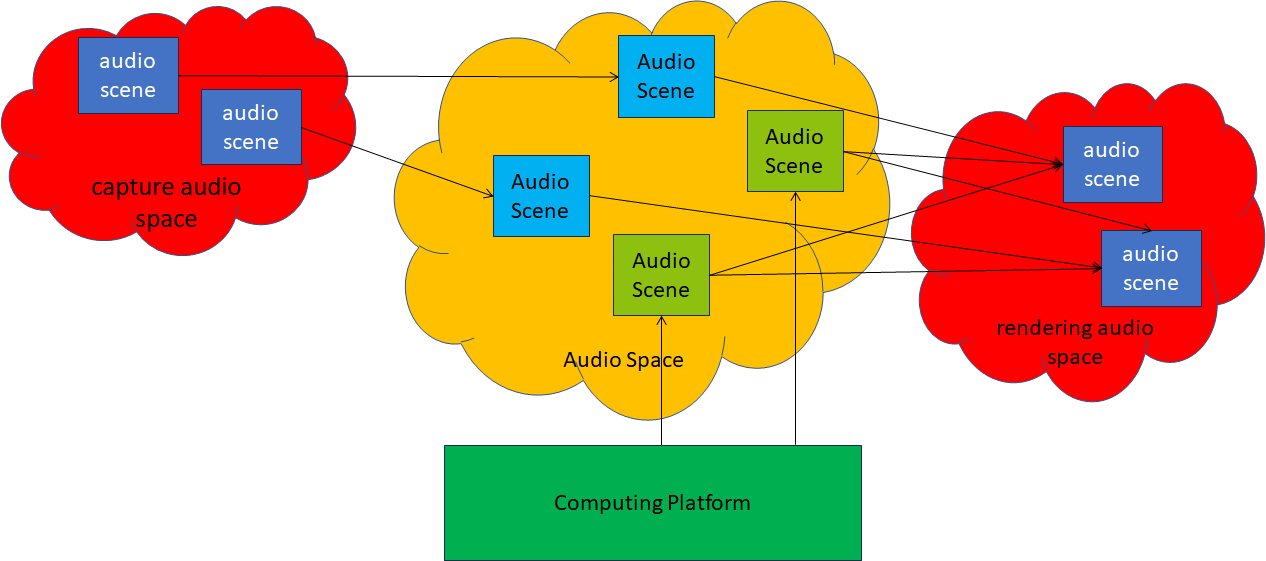

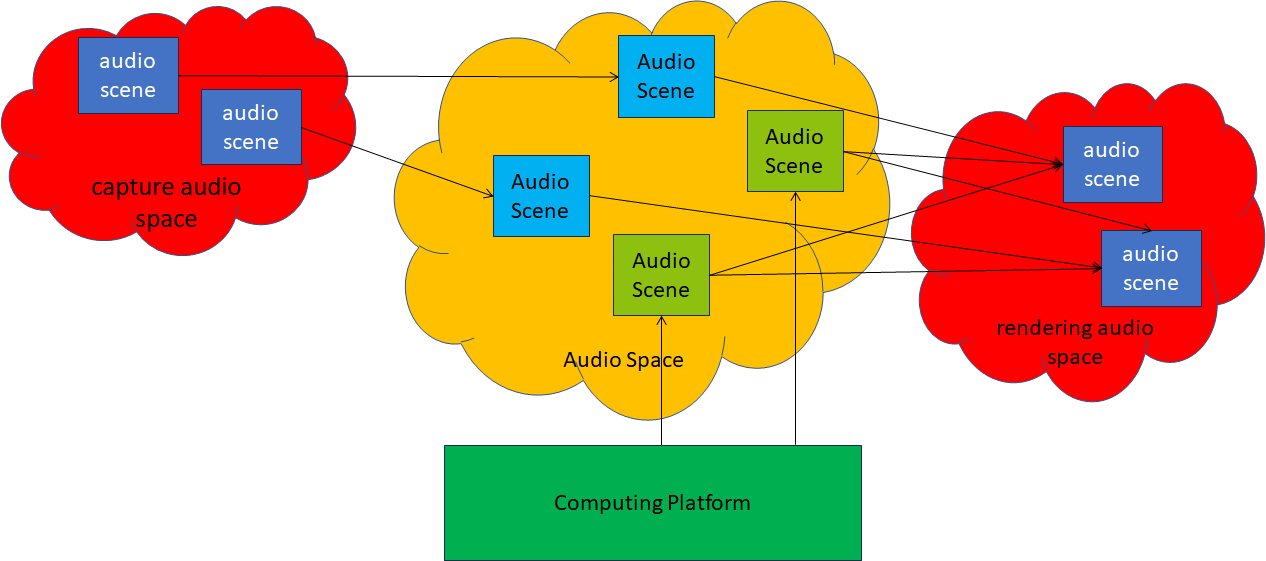

Figure 1 depicts a reference model of the planned CAE-6DF standard. In it, a term with a lowercase initial letter denotes an entity that is part of a real space, and a term with a capital initial letter denotes an entity that is part of a Virtual Space.

Figure 1 – real spaces and Virtual Spaces in CAE-6DF

On the left-hand side there are real audio spaces. In the middle there is a Virtual Space, generated by a computing platform, that hosts digital representations of acoustical scenes and synthetic Audio Objects generated by the platform. Arbitrary user-selected Points of View of the Audio Scene are rendered in the real space on the right-hand side in a perceptually veridical fashion.

The Use Cases and Functional Requirements document attached to the Call considers four use cases:

- Immersive Concert Experience (Music plus Video).

- Immersive Radio Drama (Speech plus Foley/Effects).

- Virtual lecture (Audio plus Video).

- Immersive Opera/Ballet/Dance/Theatre experience (Music, Drama with 360° Video/6DoF Visual).

From these, a set of Functional Requirements is derived:

- Audio experience and impact of visual conditions on the Audio experience:

  - Audio-Visual Contract, i.e., alignment of audio scenes with visual scenes.

  - Effects of locomotion on human audio-visual perception.

  - Orientation response, i.e., turning toward a sound source of interest.

  - Distance perception where visual and auditory experiences affect each other.

- Content profiles:

  - Scene-based: the captured Audio Scene, for example using Ambisonics, is accurately reconstructed with a high degree of correspondence to the audio scene’s acoustic ambient characteristics.

  - Object-based: the Audio Scene comprises Audio Objects and associated metadata to allow synthesising a perceptually veridical, but not necessarily physically accurate, representation of the captured Audio Scene.

  - Mixed: a combination of scene-based and object-based profiles where Audio Objects can be overlaid or mixed with Scene-based Content.

- Rendering modalities:

  - Loudspeaker-based, i.e., the content is rendered through at least two loudspeakers.

  - Headphone-based, i.e., the content is rendered through headphones.

- Characteristics of rendering space when content is rendered through loudspeakers:

  - Shape and dimensions: Not larger than the captured space.

  - Acoustic ambient characteristics:

    - Early decay time (EDT) lower than the captured space.

    - Frequency mode density lower than the captured space.

    - Echo density lower than the captured space.

    - Reverberation time (T60) lower than the captured space.

    - Energy decay curve characteristics same or lower than the captured space.

    - Background noise less than 50 dB(A) SPL.

- The rendering space, if the headphones block ambient acoustical characteristics of the rendering space, should have the following characteristics:

  - Shape and dimensions: Not larger than the captured space.

  - Acoustic ambient characteristics: No constraints on the ambient characteristics as defined in point 2.2

- User movement in the rendering space:

  - May be the result of actual locomotion/orientation of the User as tracked by sensors.

  - May be the result of virtual locomotion/orientation as actuated by controlling devices.

- The maximum responsive latency of the audio system to user movement should be 20 ms or less (some applications may have higher latency).
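The loudspeaker rendering-space constraints and the latency bound listed above can be read as a simple checklist. The sketch below is one possible way to encode it, not a conformance procedure defined by MPAI. The names `RoomAcoustics` and `loudspeaker_rendering_issues` are hypothetical, room volume is used as an assumed stand-in for "shape and dimensions", each acoustic parameter is reduced to a single number, and the energy decay curve comparison is left out because it does not reduce to one scalar.

```python
from dataclasses import dataclass

MAX_BACKGROUND_NOISE_DBA = 50.0  # background noise must be below this value
MAX_LATENCY_MS = 20.0            # response to user movement: 20 ms or less


@dataclass
class RoomAcoustics:
    """Hypothetical scalar summary of a space (field names are illustrative)."""
    volume_m3: float             # assumed proxy for "shape and dimensions"
    edt_s: float                 # early decay time
    t60_s: float                 # reverberation time
    mode_density: float          # frequency mode density
    echo_density: float
    background_noise_dba: float = 0.0


def loudspeaker_rendering_issues(captured, rendering, latency_ms):
    """Return a list of violated constraints (empty if none are violated)."""
    issues = []
    if rendering.volume_m3 > captured.volume_m3:
        issues.append("rendering space is larger than the captured space")
    # These parameters must be strictly lower in the rendering space.
    for field, label in [
        ("edt_s", "early decay time"),
        ("mode_density", "frequency mode density"),
        ("echo_density", "echo density"),
        ("t60_s", "reverberation time"),
    ]:
        if getattr(rendering, field) >= getattr(captured, field):
            issues.append(f"{label} is not lower than in the captured space")
    if rendering.background_noise_dba >= MAX_BACKGROUND_NOISE_DBA:
        issues.append("background noise is not below 50 dB(A) SPL")
    if latency_ms > MAX_LATENCY_MS:
        issues.append("latency to user movement exceeds 20 ms")
    return issues


# Made-up example values: a concert hall rendered in a small listening room.
hall = RoomAcoustics(12000.0, 1.8, 2.0, 5.0, 3000.0)
good_room = RoomAcoustics(60.0, 0.4, 0.5, 1.0, 500.0, background_noise_dba=35.0)
bad_room = RoomAcoustics(60.0, 0.4, 2.5, 1.0, 500.0, background_noise_dba=55.0)
```

Calling `loudspeaker_rendering_issues(hall, good_room, latency_ms=15.0)` yields an empty list, while the second room fails on reverberation time and background noise. All numbers in the example are invented for illustration.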

A note on the “Commercial Requirements” mentioned above: the term is a misnomer, because MPAI is not “selling” anything. Even the MPAI standards are freely downloadable from the MPAI web site. The formal name used by MPAI is Framework Licence, a document containing a set of guidelines that the submitter of a proposal commits to adopt once the standard is approved and a licence for the use of patented items is issued. The CAE-6DF Framework Licence is available.

Finally, to facilitate the work of those submitting a response, MPAI is providing a document called Template for Responses.

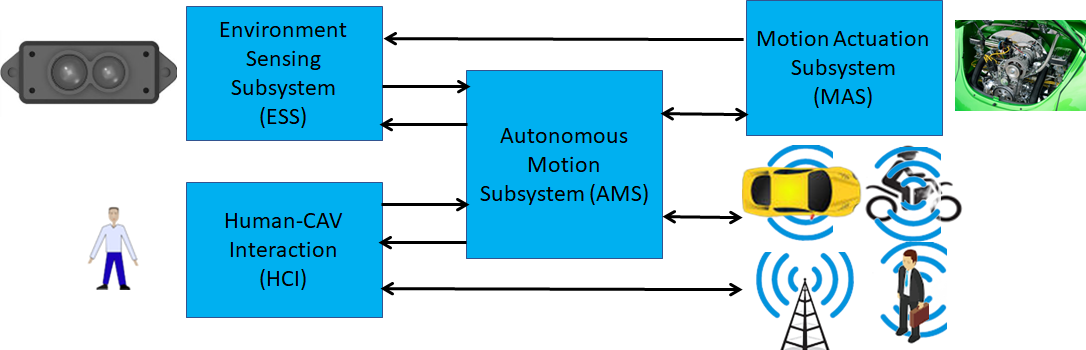

CAE-6DF will join the growing list of MPAI standards. MPAI has already published eleven standards (on application environment, audio, connected autonomous vehicle, company performance prediction, ecosystem governance, human and machine communication, object and scene description, and portable avatar format) and is about to publish a new one on AI Module Profiles. Reference software and conformance testing specifications are in the course of being published. The standards are revised and extended, and new versions are published when necessary. New standards are under development in areas such as online gaming, AI for health, and XR venues, and several projects in new areas, such as AI-based video coding, are being investigated.