| 1. Functions | 2. Reference Model | 3. Input/Output Data |

| 4. Functions of AI Modules | 5. Input/output Data of AI Modules | 6. AIW, AIMs, and JSON Metadata |

1. Functions

The sensors of an XRV-LTP capture data from both the Real and Virtual Environments, including audio, video, volumetric or motion capture (mocap) data from stage performers, signals from control surfaces, and more. This Input Data is processed and converted into the actionable commands required by the Real (the “stage”) and Virtual (the “metaverse”) Environments, according to their respective Real and Virtual Venue Specifications, to enable multisensory experiences (as defined by the Script) in both Environments.

2. Reference Model

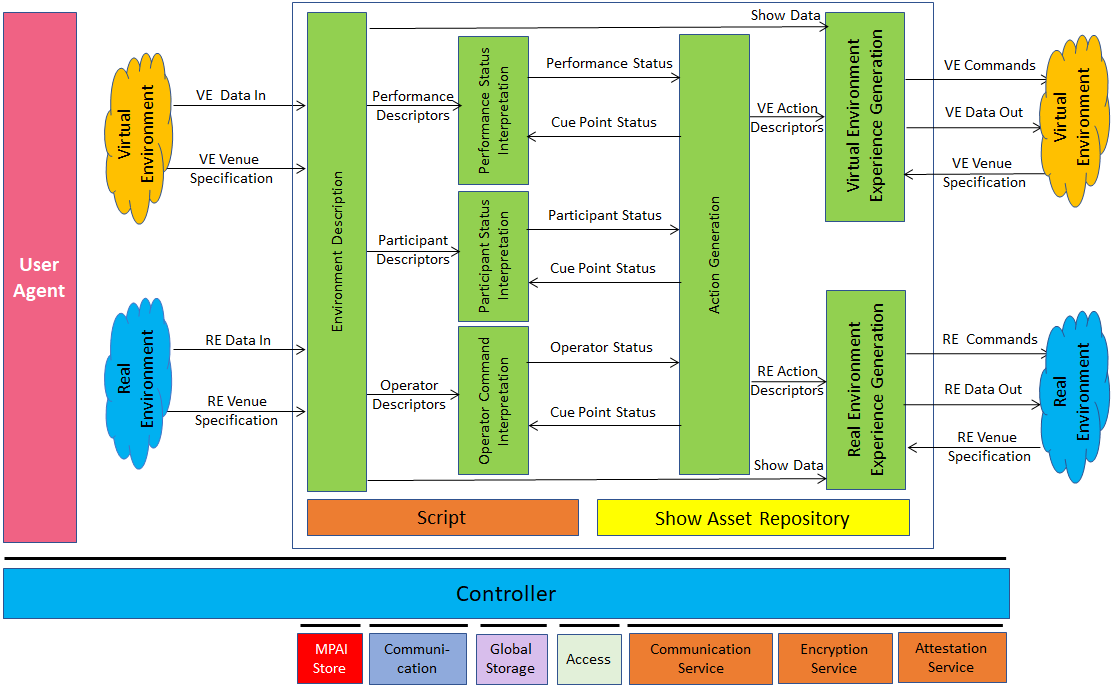

Figure 1 depicts the Reference Model of Live Theatrical Stage Performance.

Figure 1 – Reference Model of Live Theatrical Stage Performance

This is the flow of operation of the LTSP Reference Model:

- A range of sensors collect Data from both the Real and Virtual Environments including audio, video, volumetric or motion capture (mocap) data from stage performers, signals from control surfaces and more.

- Participant Description and Performance Description extract features from participants and performers and provide them as Participant Descriptors (in general, an assessment of the audience’s reactions) and Performance Descriptors (in general, a description of the position and orientation of objects on the stage).

- Participant Status Interpretation and Performance Status Interpretation interpret the Descriptors to determine the Participant Status and the Cue Point in the show (according to the Script).

- Operator Command Interpreter interprets, if needed, Data from the Show Control computer or control surface and from consoles for audio, DJ, VJ, lighting and FX (typically commanded by operators).

- Action Generation accepts Participant Status, Cue Point and Interpreted Operator Commands and uses them to direct action in both the Real and Virtual Environments via Scene and Action Descriptors.

- Virtual Environment Experience Generation and Real Environment Experience Generation convert Scene Descriptors and Action Descriptors into actionable commands required by the Real and Virtual Environments – according to their Venue Specifications – to enable multisensory Experience Generation in both the Real and Virtual Environments.
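The flow of operation above can be read as a simple data pipeline. The following is a minimal sketch of that flow, not an implementation of the standard: the function names mirror the modules in the list, but the data shapes (plain dictionaries), the `activity` and `marker` features, the threshold rule, the representation of the Script as a lookup table, and the reduction of a Venue Specification to a `command_prefix` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class ActionOutput:
    """Hypothetical stand-in for the Scene and Action Descriptors."""
    scene: Dict[str, Any]
    action: Dict[str, Any]


def participant_description(data: Dict[str, Any]) -> Dict[str, Any]:
    # Placeholder feature extraction from participant-related sensor data.
    return {"activity": float(data.get("participants", {}).get("activity", 0.0))}


def performance_description(data: Dict[str, Any]) -> Dict[str, Any]:
    # Placeholder feature extraction from performer-related sensor data.
    return {"marker": data.get("performers", {}).get("marker")}


def participant_status_interpretation(descriptors: Dict[str, Any]) -> str:
    # Assumed rule: a simple threshold on the hypothetical "activity" feature.
    return "engaged" if descriptors["activity"] >= 0.5 else "calm"


def performance_status_interpretation(
    descriptors: Dict[str, Any], script: Dict[str, str]
) -> Optional[str]:
    # Assumed Script representation: a mapping from marker to Cue Point name.
    return script.get(descriptors["marker"])


def operator_command_interpreter(raw_commands: List[str]) -> List[str]:
    # Normalise operator input and drop empty entries.
    return [c.strip().lower() for c in raw_commands if c.strip()]


def action_generation(
    status: str, cue_point: Optional[str], commands: List[str]
) -> ActionOutput:
    # Combine the three interpreted inputs into Scene and Action Descriptors.
    return ActionOutput(
        scene={"cue_point": cue_point},
        action={"participant_status": status, "operator_commands": commands},
    )


def experience_generation(out: ActionOutput, venue_spec: Dict[str, str]) -> List[str]:
    # The Venue Specification is reduced here to a command prefix (assumption).
    prefix = venue_spec["command_prefix"]
    result = [
        f"{prefix}:cue:{out.scene['cue_point']}",
        f"{prefix}:status:{out.action['participant_status']}",
    ]
    result += [f"{prefix}:op:{c}" for c in out.action["operator_commands"]]
    return result


def run_pipeline(
    data: Dict[str, Any],
    raw_commands: List[str],
    script: Dict[str, str],
    real_spec: Dict[str, str],
    virtual_spec: Dict[str, str],
) -> Dict[str, List[str]]:
    status = participant_status_interpretation(participant_description(data))
    cue_point = performance_status_interpretation(performance_description(data), script)
    commands = operator_command_interpreter(raw_commands)
    out = action_generation(status, cue_point, commands)
    # The same Descriptors feed both Experience Generation modules.
    return {
        "real": experience_generation(out, real_spec),
        "virtual": experience_generation(out, virtual_spec),
    }
```

The point of the sketch is the shape of the flow: Action Generation produces one set of Descriptors, and each Experience Generation module renders them into commands for its own venue according to its own specification.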

3. Input/Output Data

Table 1 specifies the Input and Output Data.

Table 1 – I/O Data of MPAI-XRV – Live Theatrical Stage Performance

| Input | Description |
| --- | --- |
| Volumetric | A set of samples representing the value of visual 3D data represented as textured meshes, point clouds, or UV + depth. |
| Input Audio-Visual | Audio and Video collected from Real and Virtual Environments. |
| Controller | Data from a manual control interface for participants, performers, or operators, consisting of spatial tracking, interactive button pushes (e.g., actuators), and optic flow data from cameras looking at the audience. |
| App Data | Data generated by participants through mobile apps, web interfaces, and mobile or VR interfaces in the Real and Virtual Environments, including messaging text, selections, and preferences. |
| Lidar | Spatial depth and intensity map generated by a device able to detect the contour of objects and the intensity value of each point. |
| MoCap | Data captured from sensors attached to performers or set pieces conveying information on the absolute position and motion of the point where the sensor is attached. |
| Biometric Data | Numeric data representing heart rate, an electromyographic (EMG) signal, or skin conductance, plus metadata specifying the individual performer and/or participant. |
| Sensor Data | Additional data from sensors such as positional feedback from rigging or props, floor sensors, or accelerometers placed on performers or objects. |
| Venue Data | Other miscellaneous data from the Real or Virtual Environment. |
| Audio/VJ/DJ | Data from control surfaces including audio mixing consoles, video mixing consoles, and DJ consoles for live audio performances. |
| Show Control | Data from Show Control consoles for overall show automation and performance. May include control of rigging, props, and master control of audio, lighting and FX. |
| Lighting/FX | Data from lighting control console and FX controllers such as pyro, 4D FX, fog, etc. |
| Skeleton/Mesh | Data from the Virtual Environment representing 3D objects including characters, and environmental elements. |

| Output | Description |
| --- | --- |
| Virtual Environment Descriptors | A variety of controls for 3D geometry, shading, lighting, materials, cameras, physics, etc. The actual format used may depend on the Virtual Environment Venue Specification. |
| Output Audio-Visual | Data and commands for all A/V experiential elements, including audio, video, and capture cameras/microphones. |
| Virtual Environment Venue Specification | An input to the Real Experience Generation AIM defining protocols, data, and command structures for the specific Real Environment Venue. This could include number, type, and placement of lighting fixtures, special effects, sound and video reproduction resources. |
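As an illustration of how the Input rows of the table above might be modelled in software, the sketch below enumerates the input kinds and defines a record for one of them, Biometric Data. This is a hedged example only: the class names, field names, and units (`heart_rate_bpm`, `emg_mv`, `skin_conductance_us`) are assumptions and are not defined by the table, which only states that the data is numeric and carries metadata identifying the performer or participant.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional


class InputKind(Enum):
    """Input kinds, named after the rows of the Input table."""
    VOLUMETRIC = "Volumetric"
    INPUT_AUDIO_VISUAL = "Input Audio-Visual"
    CONTROLLER = "Controller"
    APP_DATA = "App Data"
    LIDAR = "Lidar"
    MOCAP = "MoCap"
    BIOMETRIC_DATA = "Biometric Data"
    SENSOR_DATA = "Sensor Data"
    VENUE_DATA = "Venue Data"
    AUDIO_VJ_DJ = "Audio/VJ/DJ"
    SHOW_CONTROL = "Show Control"
    LIGHTING_FX = "Lighting/FX"
    SKELETON_MESH = "Skeleton/Mesh"


@dataclass(frozen=True)
class BiometricSample:
    """Hypothetical record for one Biometric Data reading."""
    subject_id: str                 # metadata: which individual
    subject_role: str               # metadata: "performer" or "participant"
    heart_rate_bpm: Optional[float] = None       # assumed unit: beats per minute
    emg_mv: Optional[float] = None               # assumed unit: millivolts
    skin_conductance_us: Optional[float] = None  # assumed unit: microsiemens

    kind = InputKind.BIOMETRIC_DATA  # class-level tag, not a dataclass field

    def __post_init__(self) -> None:
        if self.subject_role not in ("performer", "participant"):
            raise ValueError(f"unknown subject_role: {self.subject_role!r}")

    def measurements(self) -> Dict[str, float]:
        # Return only the readings that are actually present.
        readings = {
            "heart_rate_bpm": self.heart_rate_bpm,
            "emg_mv": self.emg_mv,
            "skin_conductance_us": self.skin_conductance_us,
        }
        return {name: value for name, value in readings.items() if value is not None}
```

Keeping the identifying metadata separate from the optional numeric readings reflects the wording of the Biometric Data row, where any one of the three signals may be present.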

4. Functions of AI Modules

Table 2 specifies the Functions of the AI Modules.

Table 2 – Functions of AI Modules

5. Input/output Data of AI Modules