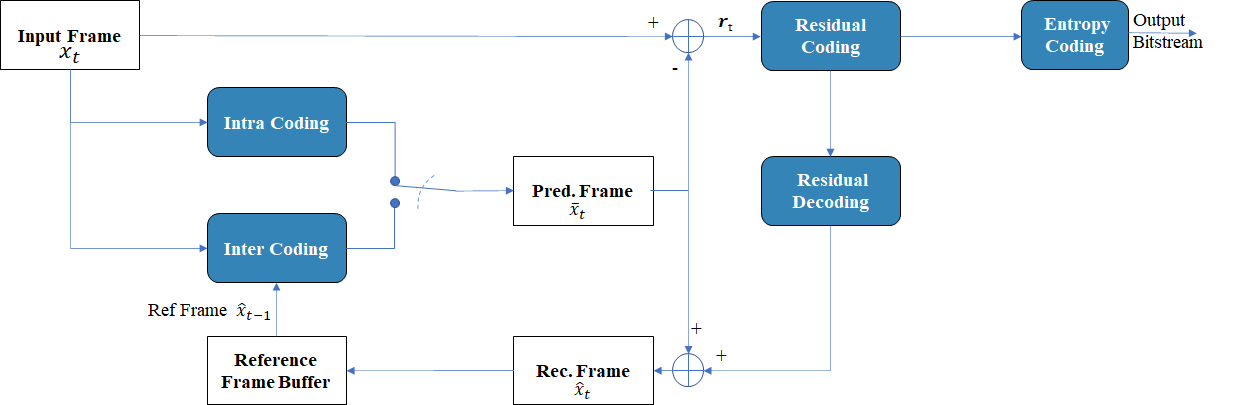

The last 30 years of video coding have been productive: compression has improved steadily, enabling digital video to take over all existing video applications and extend to new ones. The coding algorithm has been tweaked over and over, but it is still based on the same original scheme.

In the last decade, the “social contract” that allowed inventors to innovate, innovation to be brought into standards, standards to be used and inventors to be remunerated has stalled. The HEVC standard is used but the entire IP landscape is clouded by uncertainty.

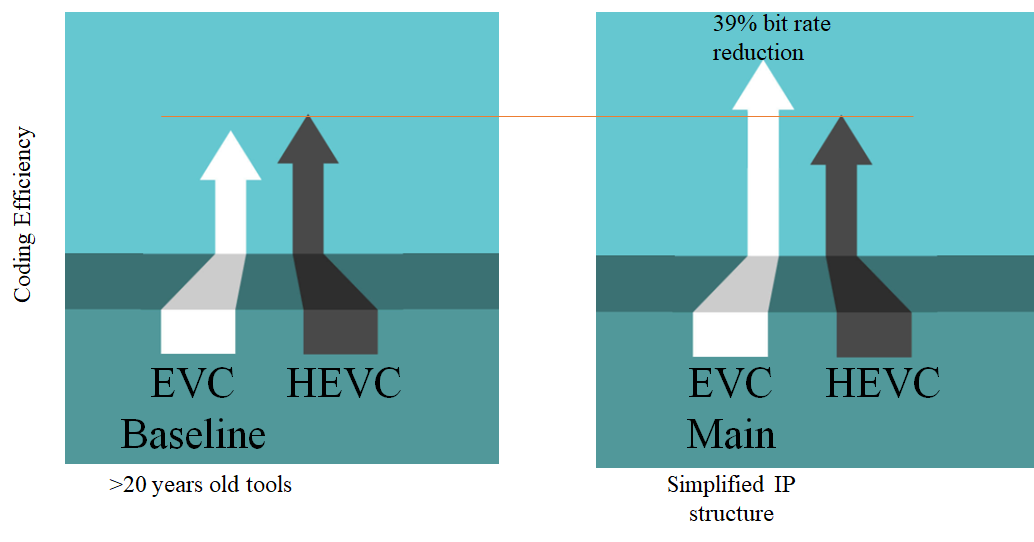

The EVC standard, approved in 2020, 7 years after HEVC was approved, has shown that even an inflow of technologies from a reduced number of sources can provide outstanding results, as shown in the figure:

The EVC baseline, a profile that uses technologies more than 20 years old, reaches the performance of HEVC. The main profile offers a bitrate reduction of 39% over HEVC, a whisker away from the performance of the latest VVC standard.

In 1997 the match between IBM Deep Blue and the (human) chess champion of the time made headlines: IBM Deep Blue beat Garry Kasparov. It was easy to herald the age when machines would overtake humans not just in keeping accounts, but also in one of the noblest intellectual activities: chess.

This was achieved by writing a computer program that explored more alternatives than a human could reasonably do, although a human’s intuition can look far into the future. In that sense, Deep Blue operated much like MPEG video coding.

Google DeepMind’s AlphaGo did the same in 2016 by beating the Go champion Sedol Lee. The Go rules are simpler than chess rules, but the alternatives in Go are far more numerous. There is only one type of piece (the stone) instead of six (king, queen, bishop, knight, rook and pawn), but the Go board has 19×19 positions instead of the 8×8 of chess. While Deep Blue made a chess move by brute-force exploration of future moves, AlphaGo made Go moves by relying on neural networks that had learned moves.

That victory signalled a renewed interest in a three-quarters-of-a-century-old technology – neural networks.

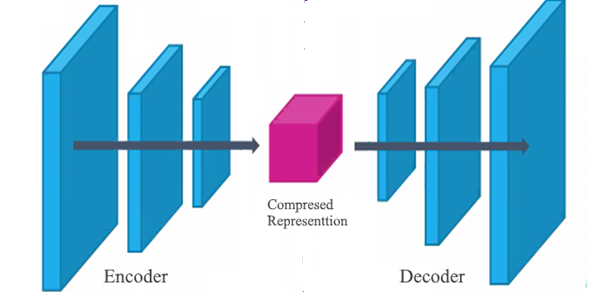

In neural networks data are processed by different layers that extract essential information until a compressed representation of the input data is achieved (left-hand side). At the decoder, the inverse process takes place.
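The layered encoder and decoder described above can be sketched in a few lines. This is only an illustration of the data flow, not an MPAI design: the layer sizes (64, 16, 4), the `tanh` activation and the helper names `make_layer` and `forward` are assumptions, and the weights are random rather than trained, so the reconstruction is not expected to resemble the input.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_layer(n_in, n_out):
    """Return random (untrained) weights and zero biases for one dense layer."""
    return rng.standard_normal((n_in, n_out)) / np.sqrt(n_in), np.zeros(n_out)

def forward(x, layers):
    """Pass x through each layer in turn, applying tanh after every layer."""
    for weights, bias in layers:
        x = np.tanh(x @ weights + bias)
    return x

# Encoder: 64 input samples (for example an 8x8 block) -> 16 -> 4 latent values.
encoder = [make_layer(64, 16), make_layer(16, 4)]
# Decoder: mirrors the encoder, 4 -> 16 -> 64.
decoder = [make_layer(4, 16), make_layer(16, 64)]

block = rng.uniform(-1.0, 1.0, size=64)      # stand-in for an 8x8 pixel block
latent = forward(block, encoder)             # compressed representation
reconstruction = forward(latent, decoder)    # decoder output, same size as input

print(latent.shape, reconstruction.shape)
```

In a real codec the weights would be learned so that the reconstruction approximates the input while the latent values stay cheap to transmit.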

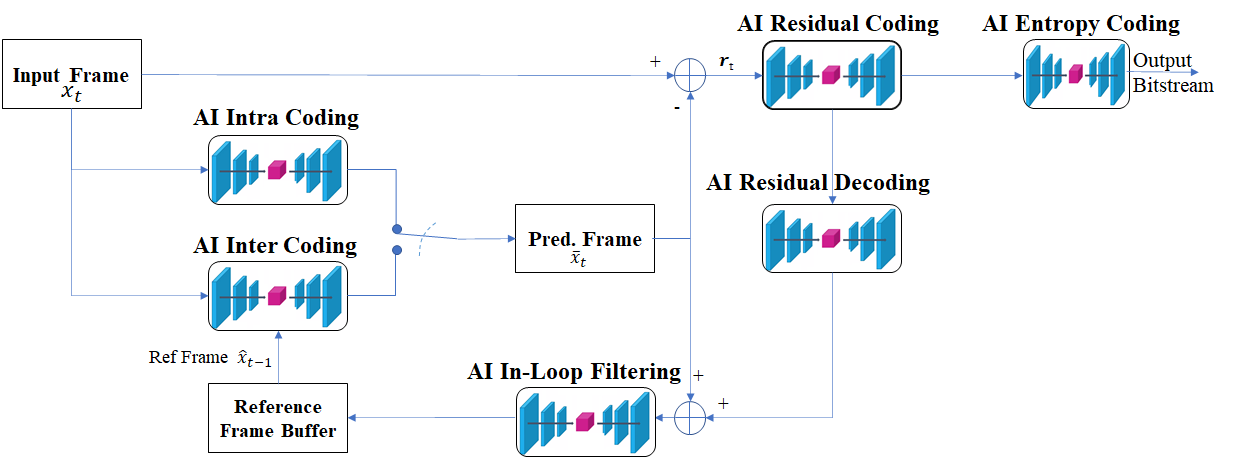

MPAI has established the AI-Enhanced Video Coding (MPAI-EVC) standard project. This is based on an MPAI study collecting published results where individual HEVC coding tools have been replaced by neural networks (in the following we call them AI tools). By summing up all the published gains, an improvement of 29% is obtained.

This is an interesting result, but far from a scientifically acceptable one, because the different tools were trained in different ways. Therefore, MPAI is currently engaged in the MPAI-EVC Evidence Project that can be exemplified by the following figure:

Here all coding tools have been replaced by AI tools. We intend to train these new tools with the same source material (a lot of it) and assess the improvement obtained.

We expect to obtain an objectively measured improvement of at least 25%.

After this, MPAI will engage in the actual development of MPAI-EVC. We expect to obtain an objectively measured improvement of at least 35%. Our experience suggests that the subjectively measured improvement will be around 50%.

As in Deep Blue, the old tools rely on a priori statistical knowledge that is modelled and hardwired into them, whereas in AI tools knowledge is acquired by learning the statistics.
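The difference between hardwired and learned knowledge can be illustrated with a toy transform. The sketch below is an assumption-laden example and does not come from the MPAI study: it takes the DCT as a stand-in for a hardwired tool, uses synthetic correlated signals as hypothetical training data, and "learns" a transform from the eigenvectors of the data's second-moment matrix. Both transforms are evaluated on the same signals used for learning, which is the case where the learned transform cannot do worse.

```python
import numpy as np

rng = np.random.default_rng(0)
N, K = 8, 2            # block length, number of coefficients kept

# Hypothetical training signals: strongly correlated neighbouring samples
# (first-order autoregressive process), 5000 blocks of 8 samples each.
rho = 0.9
noise = rng.standard_normal((5000, N))
signals = np.empty_like(noise)
signals[:, 0] = noise[:, 0]
for i in range(1, N):
    signals[:, i] = rho * signals[:, i - 1] + np.sqrt(1 - rho**2) * noise[:, i]

# Hardwired transform: orthonormal DCT-II basis, fixed before seeing any data.
n = np.arange(N)
dct = np.cos(np.pi * (n[None, :] + 0.5) * n[:, None] / N) * np.sqrt(2.0 / N)
dct[0, :] /= np.sqrt(2.0)

# Learned transform: eigenvectors of the second-moment matrix of the data.
moments = signals.T @ signals / len(signals)
eigenvalues, eigenvectors = np.linalg.eigh(moments)   # ascending eigenvalues
learned = eigenvectors[:, ::-1].T                     # rows sorted by energy

def energy_kept(basis, data, k):
    """Fraction of signal energy captured by the first k basis rows."""
    coefficients = data @ basis[:k].T
    return np.sum(coefficients**2) / np.sum(data**2)

dct_energy = energy_kept(dct, signals, K)
learned_energy = energy_kept(learned, signals, K)
print(f"hardwired DCT: {dct_energy:.3f}  learned: {learned_energy:.3f}")
```

The fixed basis encodes an assumption about the signal statistics made once and for all, while the learned basis adapts to whatever statistics the training material exhibits.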

This is the reason why AI tools are more promising than traditional data processing tools.

For a new age you need new tools and a new organisation tuned to use those new tools.