1 Introduction

MPAI develops standards using a rigorous process described below. For an overview of MPAI, see An introduction to Moving Picture, Audio, and Data Coding by Artificial Intelligence.

MPAI’s standards development is based on projects evolving through a workflow of 7 + 1 stages. The 7th stage is split into 4: Technical Specification, Reference Software Specification, Conformance Testing Specification, and Performance Assessment Specification. Technical Reports can also be developed to investigate new fields.

| # | Acronym | Name | Description |
|---|---|---|---|
| 0 | IC | Interest Collection | Collection and harmonisation of use cases proposed. |
| 1 | UC | Use Cases | Proposals of use cases, their description and merger of compatible use cases. |
| 2 | FR | Functional Requirements | Identification of the functional requirements that the standard including the Use Case should satisfy. |
| 3 | CR | Commercial Requirements | Development and approval of the framework licence of the standard. |
| 4 | CfT | Call for Technologies | Preparation and publication of a document calling for technologies supporting the functional and commercial requirements. |
| 5 | SD | Standard Development | Development of the standard in a specific Development Committee (DC). |
| 6 | CC | Community Comments | When the standard has achieved sufficient maturity, it is published with a request for comments. |
| 7 | MS | MPAI Standard | The standard, comprising 4 documents, is approved by the General Assembly. |
| 7.1 | TS | Technical Specification | The normative specification to make a conforming implementation. |
| 7.2 | RS | Reference Software | The descriptive text and the software implementing the Technical Specification. |
| 7.3 | CT | Conformance Testing | The Specification of the steps to be executed to test an implementation for conformance. |
| 7.4 | PA | Performance Assessment | The Specification of the steps to be executed to assess an implementation for performance. |

A project progresses from one stage to the next by resolution of the General Assembly.

The stages of the MPAI projects currently active (MPAI-56) are represented in Table 1.

Legend: TS: Technical Specification, RS: Reference Software, CT: Conformance Testing, PA: Performance Assessment; TR: Technical Report; V2: Version 2.

Table 1 – Snapshot of the MPAI work plan (MPAI-59)

| # | Work area | IC | UC | FR | CR | CfT | SD | CC | TS | RS | CT | PA | TR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AIF | AI Framework | 2.1 | 1.1 | | | | | | | | | | |
| AIH | AI Health Data | | | | | | | | | | | | |
| -HSP | – Health Secure Platform | 1.0 | | | | | | | | | | | |
| CAE | Context-based Audio Enhancement | | | | | | | | | | | | |
| -USC | – Use Cases | 2.4 | 1.4 | 2.3 | | | | | | | | | |
| -6DF | – Six Degrees of Freedom | 1.0 | | | | | | | | | | | |
| CAV | Connected Autonomous Vehicles | | | | | | | | | | | | |
| -ARC | – Architecture | 1.1 | | | | | | | | | | | |
| -TEC | – Technologies | 1.1 | | | | | | | | | | | |
| CUI | Compression & Understanding of Industrial Data | | | | | | | | | | | | |
| -CPP | – Company Performance Prediction | 2.0 | 1.1 | 1.1 | 1.1 | 1.1 | | | | | | | |
| EEV | AI-based End-to-End Video Coding | X | | | | | | | | | | | |
| EVC | AI-Enhanced Video Coding | | | | | | | | | | | | |
| -UFV | – Up-sampling Filter for Video applications | 1.0 | | | | | | | | | | | |
| GME | Governance of the MPAI Ecosystem | 2.0 | | | | | | | | | | | |
| GSA | Integrated Genomic/Sensor Analysis | X | | | | | | | | | | | |
| HMC | Human and Machine Communication | 2.1 | | | | | | | | | | | |
| MMC | Multimodal Conversation | 2.4 | 2.3 | | | | | | | | | | |
| MMM | MPAI Metaverse Model | 2.1 | | | | | | | | | | | |
| -FNC | – Functionalities | 1.0 | | | | | | | | | | | |
| -FPR | – Functionality Profiles | 1.0 | | | | | | | | | | | |
| -ARC | – Architecture | 1.2 | | | | | | | | | | | |
| -TEC | – Technologies | 2.1 | | | | | | | | | | | |
| NNW | Neural Network Watermarking | | | | | | | | | | | | |
| -NNT | – Neural Network Traceability | 1.1 | 1.2 | | | | | | | | | | |
| -TEC | – Technologies | 1.0 | | | | | | | | | | | |
| OSD | Object and Scene Description | 1.4 | | | | | | | | | | | |
| PAF | Avatar Representation & Animation | 1.5 | | | | | | | | | | | |
| PRF | Profiles | 1.0 | | | | | | | | | | | |
| | – AI Modules | 1.1 | | | | | | | | | | | |
| | – AI Workflows | 1.0 | | | | | | | | | | | |
| SPG | Server-based Predictive Multiplayer Gaming | | | | | | | | | | | | |
| -MDL | – Mitigation of Data Loss Effects | 1.0 | 1.0 | | | | | | | | | | |
| XRV | XR Venues | | | | | | | | | | | | |
| -LTP | – Live Theatrical Performance | 1.0 | | | | | | | | | | | |
| -CIL | – Collaborative Immersive Laboratories | X | | | | | | | | | | | |

2 MPAI-AIF

The MPAI approach to AI standards is based on the belief that breaking up large AI applications into smaller elements called AI Modules (AIM), combined in workflows called AI Workflows (AIW) and exchanging processed data with known semantics to the extent possible, improves the explainability of AI applications and promotes a competitive market of components with standard interfaces.
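To make the AIM/AIW idea concrete, the following Python sketch models an AIM as a function with named, typed input and output ports and an AIW as an ordered list of AIMs whose port types are checked before execution. All class names, port names, and data type strings are hypothetical illustrations, not the MPAI-AIF APIs, and the sketch assumes a simple linear execution order rather than an arbitrary topology.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical model of AI Modules (AIMs) and AI Workflows (AIWs); not the MPAI-AIF API.


@dataclass
class AIM:
    name: str
    inputs: Dict[str, str]   # port name -> data type name
    outputs: Dict[str, str]  # port name -> data type name
    process: Callable[[Dict[str, object]], Dict[str, object]]


class AIW:
    """Runs AIMs in the given order, passing data between them by port name."""

    def __init__(self, inputs: Dict[str, str], aims: List[AIM]):
        self.inputs = inputs
        self.aims = aims
        self._check_interfaces()

    def _check_interfaces(self) -> None:
        # Each AIM input must already be available, with the same data type,
        # either as an AIW input or as the output of an earlier AIM.
        available = dict(self.inputs)
        for aim in self.aims:
            for port, dtype in aim.inputs.items():
                if available.get(port) != dtype:
                    raise TypeError(f"{aim.name}: no '{port}' of type {dtype} available")
            available.update(aim.outputs)

    def run(self, data: Dict[str, object]) -> Dict[str, object]:
        store = dict(data)
        for aim in self.aims:
            store.update(aim.process({port: store[port] for port in aim.inputs}))
        return store


# Usage: two toy AIMs exchanging data whose semantics is declared by its type name.
workflow = AIW(
    inputs={"text": "Text"},
    aims=[
        AIM("Tokeniser", {"text": "Text"}, {"tokens": "TokenList"},
            lambda d: {"tokens": d["text"].split()}),
        AIM("Counter", {"tokens": "TokenList"}, {"count": "Integer"},
            lambda d: {"count": len(d["tokens"])}),
    ],
)
print(workflow.run({"text": "AI modules with standard interfaces"})["count"])  # 5
```

Checking port types when the AIW is assembled, rather than when data arrive, reflects the idea that a component with a standard interface can be replaced by any compatible one; a real AI Framework would also handle initialisation, control, and non-linear topologies.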

Technical Specification: AI Framework (MPAI-AIF) V2.0 enables dynamic configuration, initialisation, and control of mixed Artificial Intelligence – Machine Learning – Data Processing workflows in a standard environment called AI Framework (AIF).

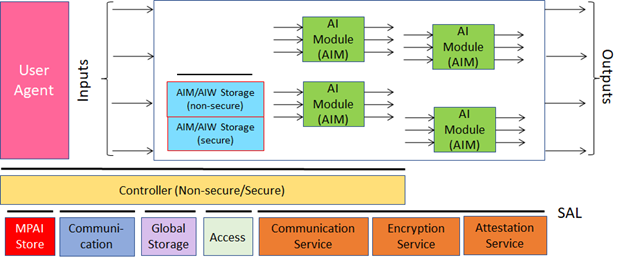

MPAI-AIF V1 assumed that the AI Framework was secure but did not provide support to developers wishing to execute an AI application in a secure environment. MPAI-AIF V2 responds to this requirement. As shown in Figure 2, the standard defines a Security Abstraction Layer (SAL). By accessing the SAL APIs, a developer can create the required level of security with the desired functionalities.

Figure 2 – Reference model of the MPAI AI Framework (MPAI-AIF) V2

MPAI-AIF V2.1 includes V1 as Basic Profile. The Security Profile is a superset of the Basic Profile.

The MPAI-AIF Technical Specification V2.0 is available.

3 MPAI-AIH

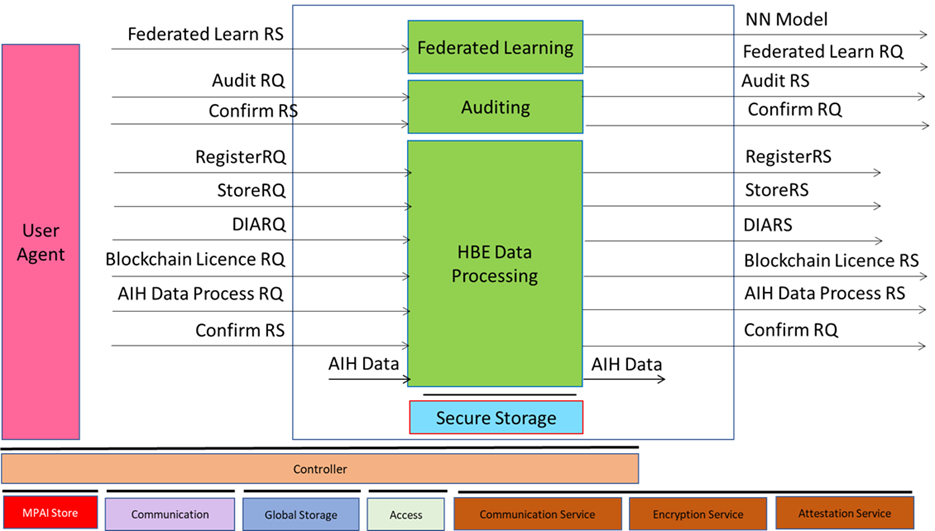

Artificial Intelligence for Health data (MPAI-AIH) is an MPAI project aiming to specify the interfaces and the relevant data formats of a system called AI Health Platform (AIH Platform) where:

- End Users use handsets with an MPAI AI Framework (AI Health Frontends) to acquire and process health data.

- An AIH Backend collects processed health data delivered by AIH Frontends with associated Smart Contracts specifying the rights granted by End Users.

- Smart Contracts are stored on a blockchain.

- Third Party Users can process their own and End User-provided data based on the relevant smart contracts.

- The AIH Backend periodically collects the AI Models trained by the AIH Frontends while processing the health data, updates its AI Model and distributes it to AI Health Platform Frontends (Federated Learning).
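The Federated Learning step in the last bullet can be illustrated with federated averaging (FedAvg), one common aggregation rule. The source does not say which rule the AIH Backend uses, so this choice, the flat-vector model representation, and the function name are assumptions made for the sketch.

```python
from typing import List, Sequence

# FedAvg-style aggregation: an assumed rule, not one stated by MPAI-AIH.


def federated_average(models: Sequence[Sequence[float]],
                      sample_counts: Sequence[int]) -> List[float]:
    """Weighted mean of Frontend model parameters, weighted by local sample counts.

    models: one flat parameter vector per AIH Frontend (hypothetical representation).
    sample_counts: number of local training samples behind each vector.
    """
    if not models or len(models) != len(sample_counts):
        raise ValueError("need one sample count per model")
    total = sum(sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    size = len(models[0])
    if any(len(m) != size for m in models):
        raise ValueError("all models must have the same number of parameters")
    return [sum(m[i] * n for m, n in zip(models, sample_counts)) / total
            for i in range(size)]


# Usage: the Backend aggregates two Frontend updates; the Frontend with 3x more data
# pulls the result towards its parameters. The result would then be redistributed.
backend_model = federated_average([[1.0, 0.0], [3.0, 4.0]], [1, 3])
print(backend_model)  # [2.5, 3.0]
```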

The AIH Backend is depicted in Figure 3.

Figure 3 – AIH-HBE Reference Model

MPAI-AIH is at the Standard Development stage. The collection of public documents is available here.

4 MPAI-CAE

Context-based Audio Enhancement (MPAI-CAE) uses AI and context information to act on the input audio content, improving the user experience of several audio-related applications, including entertainment, communication, teleconferencing, gaming, post-production, and restoration, in a variety of contexts such as the home, the car, on the go, and the studio.

4.1 CAE-USC

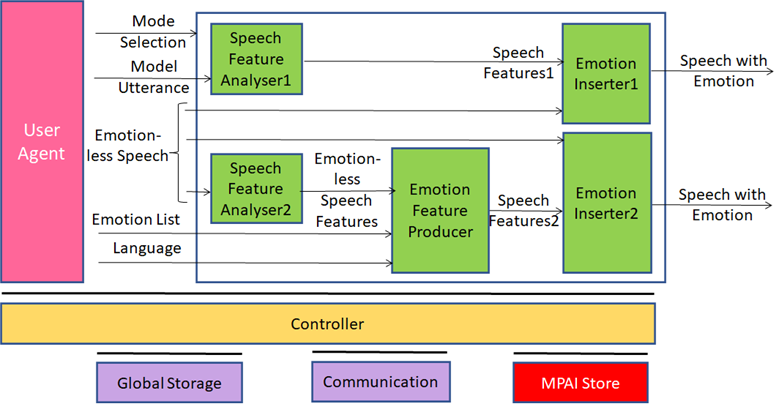

MPAI-CAE is subdivided into two parts: Use Cases (USC) and 6 Degrees of Freedom (6DF). Figure 4 is the reference model of Emotion-Enhanced Speech.

Figure 4 – A CAE-USC V2.3 Use Case: Emotion-Enhanced Speech

The CAE-USC Technical Specification V2.3, MPAI-CAE Reference Software V1.4, and MPAI-CAE Conformance Testing V1.4 have been approved and are available.

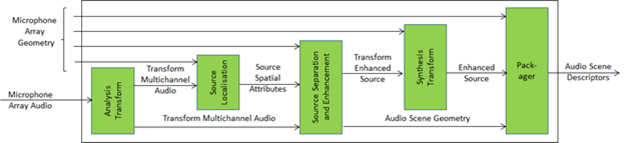

MPAI has developed the specification of the Audio Scene Description Composite AIM as part of the CAE-USC V2.3 standard.

Figure 5 – Audio Scene Description Composite AIM

The MPAI-CAE Technical Specification V2.3 has been approved and is available.

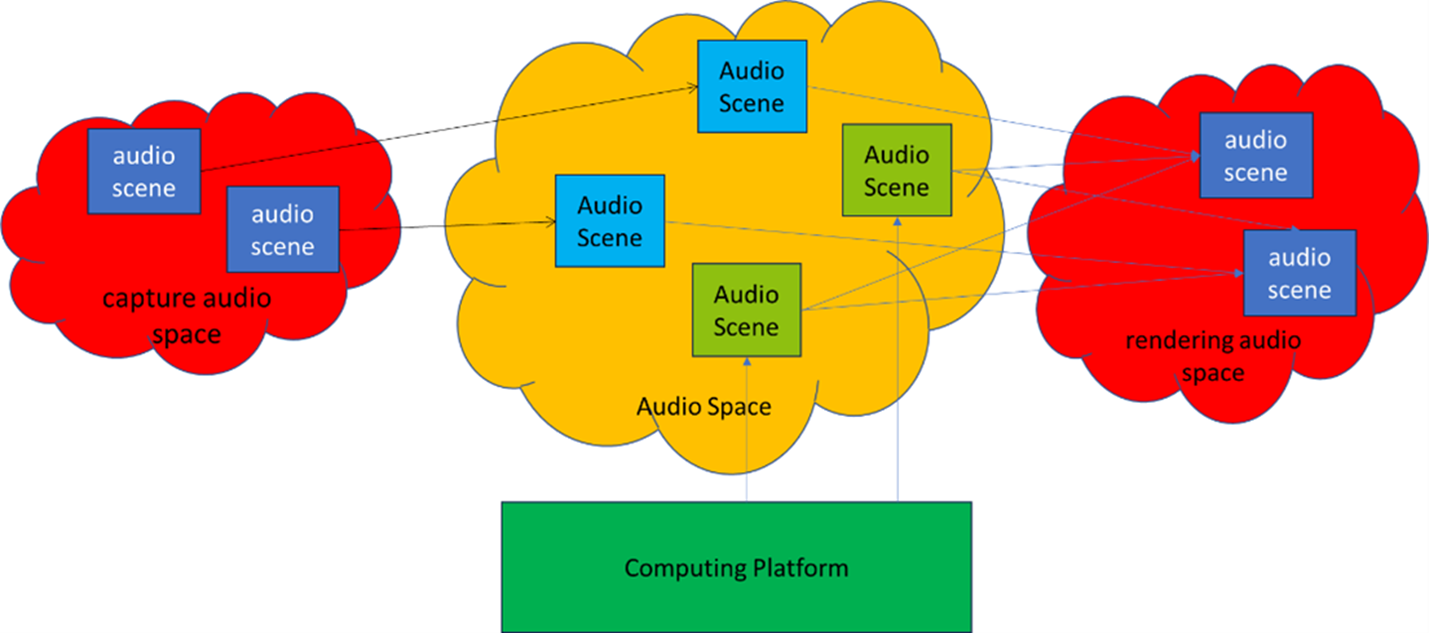

4.2 6 Degrees of Freedom

This standard will support Audio Scenes composed of digital representations of acoustical scenes and other synthetic Audio Objects that can be rendered in a perceptually veridical fashion at arbitrary user-selected points of the Audio Scene. This is depicted in Figure 6 (words in lowercase refer to the real space, words in uppercase to the Virtual Space).

Figure 6 – real spaces and Virtual Spaces in CAE-6DF

5 MPAI-CAV

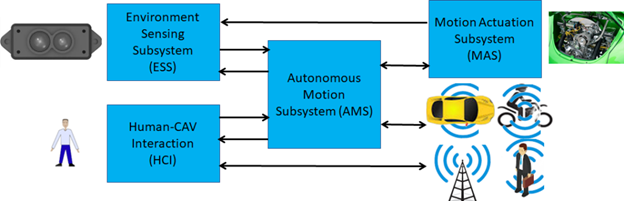

Connected Autonomous Vehicles (CAV) is an MPAI project developing Technical Reports and Specifications targeting the Architecture of a Connected Autonomous Vehicle (CAV) based on Subsystems and Components with standard functions and interfaces. The high-level model is in Figure 7:

Figure 7 – The CAV subsystems

The latest standard published is Technical Specification: Connected Autonomous Vehicle (MPAI-CAV) – Technologies V1.0, which specifies the Architecture of a Connected Autonomous Vehicle (CAV) based on a Reference Model comprising:

- A CAV broken down into Subsystems, for each of which the following is specified:

  - The Functions

  - The input/output Data

  - The Topology of Components

- Each Subsystem broken down into Components, for each of which the following is specified:

  - The Functions

  - The input/output Data.
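The Reference Model structure in the list above maps naturally onto a small data model. The following Python sketch is a hypothetical illustration (class names, fields, and the two component names are not taken from MPAI-CAV): a Subsystem records its Functions, input/output Data, and Topology of Components, and a consistency check verifies that every connection carries Data that the source Component outputs and the destination Component accepts.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

# Hypothetical data model mirroring the CAV Reference Model structure.


@dataclass
class Component:
    name: str
    functions: str
    inputs: Set[str]   # names of input Data
    outputs: Set[str]  # names of output Data


@dataclass
class Subsystem:
    name: str
    functions: str
    inputs: Set[str]
    outputs: Set[str]
    components: List[Component]
    # Topology of Components: (source Component, Data name, destination Component)
    topology: List[Tuple[str, str, str]] = field(default_factory=list)

    def check_topology(self) -> List[str]:
        """Return a list of inconsistencies; an empty list means the Topology is consistent."""
        by_name = {c.name: c for c in self.components}
        problems = []
        for src, data, dst in self.topology:
            if src not in by_name or dst not in by_name:
                problems.append(f"unknown Component in {src} -> {dst}")
                continue
            if data not in by_name[src].outputs:
                problems.append(f"{src} does not output {data}")
            if data not in by_name[dst].inputs:
                problems.append(f"{dst} does not accept {data}")
        return problems


# Usage with two illustrative Components of a human-interaction Subsystem.
asr = Component("Speech Recognition", "Converts speech to text", {"Speech"}, {"Text"})
nlu = Component("Language Understanding", "Extracts meaning from text", {"Text"}, {"Meaning"})
hci = Subsystem("Human-CAV Interaction", "Handles human-CAV dialogue",
                {"Speech"}, {"Meaning"}, [asr, nlu],
                topology=[("Speech Recognition", "Text", "Language Understanding")])
print(hci.check_topology())  # []
```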

Figure 8 depicts the Human-CAV Interaction (HCI) Subsystem Reference Model.

Figure 8 – Reference Model of the Human-CAV Interaction Subsystem

Technical Specification: Connected Autonomous Vehicle (MPAI-CAV) – Technologies V1.0 is available.

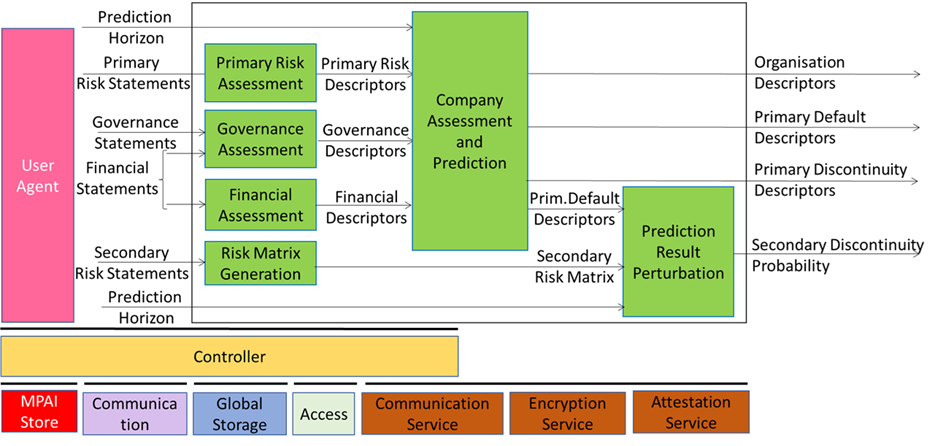

6 MPAI-CUI

Compression and understanding of industrial data (MPAI-CUI) aims to enable AI-based filtering and extraction of key information to predict company performance by applying Artificial Intelligence to governance, financial and risk data. This is depicted in Figure 9.

Figure 9 – The MPAI-CUI Use Case

The MPAI-CUI Technical Specification V1.1 has been published and is available. V2.0 is being developed.

7 MPAI-EEV

There is consensus in the video coding research community that so-called End-to-End (E2E) video coding schemes can yield significantly higher performance than those targeted, e.g., by MPAI-EVC. AI-based End-to-End Video Coding (MPAI-EEV) intends to address this promising area.

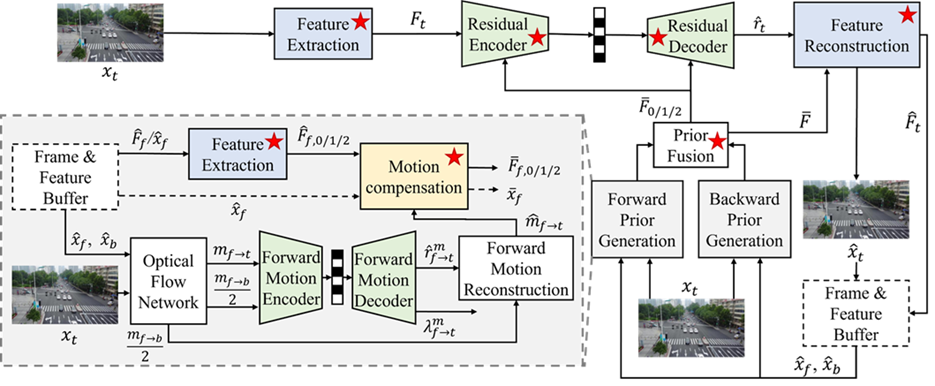

MPAI has extended the OpenDVC model, producing five versions of the EEV Reference Model, and is currently developing version 0.6 (Figure 10). The latest, EEV0.5, exceeds the performance of the MPEG-EVC standard.

Figure 10 – MPAI-EEV Reference Model

EEV0.6 has significantly higher performance than VVC.

The collection of public documents is available here.

8 MPAI-EVC

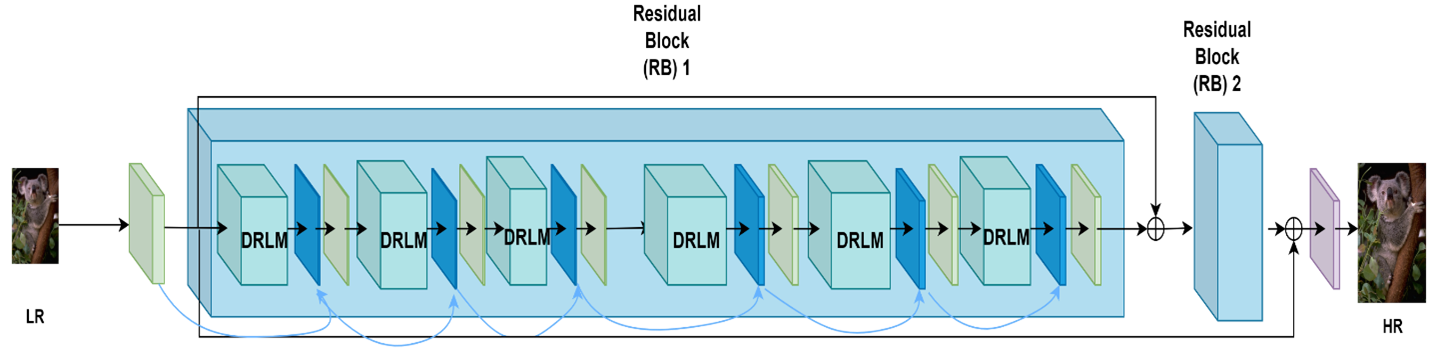

AI-Enhanced Video Coding (MPAI-EVC) is currently developing the Up-sampling Filter for Video applications standard (EVC-UFV) V1.0. The standard will specify a general procedure to design up-sampling filters for video applications based on super-resolution techniques, the parameters of two specific filters (standard definition to high definition, and high definition to ultra high definition), and methodologies to reduce the complexity of the designed filters without significant loss in performance.

Figure 11 – Up-sampling filter

The collection of public documents is available here.

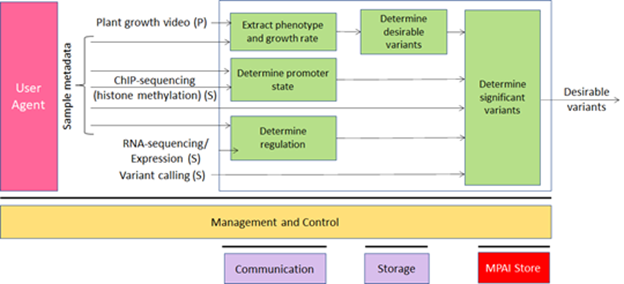

9 MPAI-GSA

Integrative Genomic/Sensor Analysis (MPAI-GSA) uses AI to understand and compress the result of high-throughput experiments combining genomic/proteomic and other data, e.g., from video, motion, location, weather, medical sensors.

Figure 12 depicts the Smart Farming Use Case.

Figure 12 – An MPAI-GSA Use Case: Smart Farming

The collection of public documents is available here.

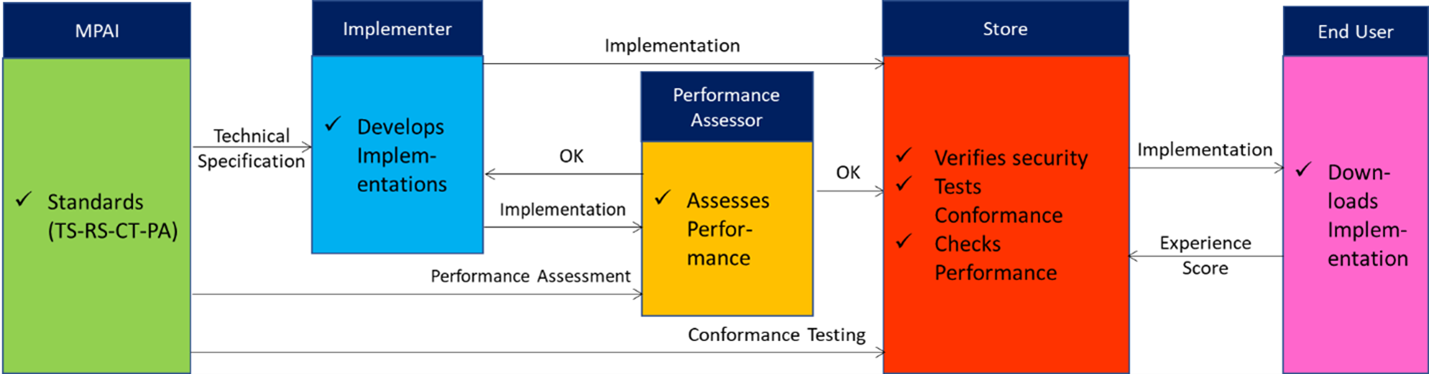

10 MPAI-GME

Technical Specification: Governance of the MPAI Ecosystem lays down the foundations of the MPAI Ecosystem. MPAI develops and maintains the following technical documents:

- Technical Specification.

- Reference Software Specification.

- Conformance Testing.

- Performance Assessment.

- Technical Report.

An MPAI Standard is a collection of a variable number of the 5 document types.

Figure 13 depicts the operation of the MPAI ecosystem generated by MPAI Standards.

Figure 13 – The MPAI ecosystem operation

The MPAI-GME Technical Specification V2.0 has been approved and is available.

11 MPAI-HMC

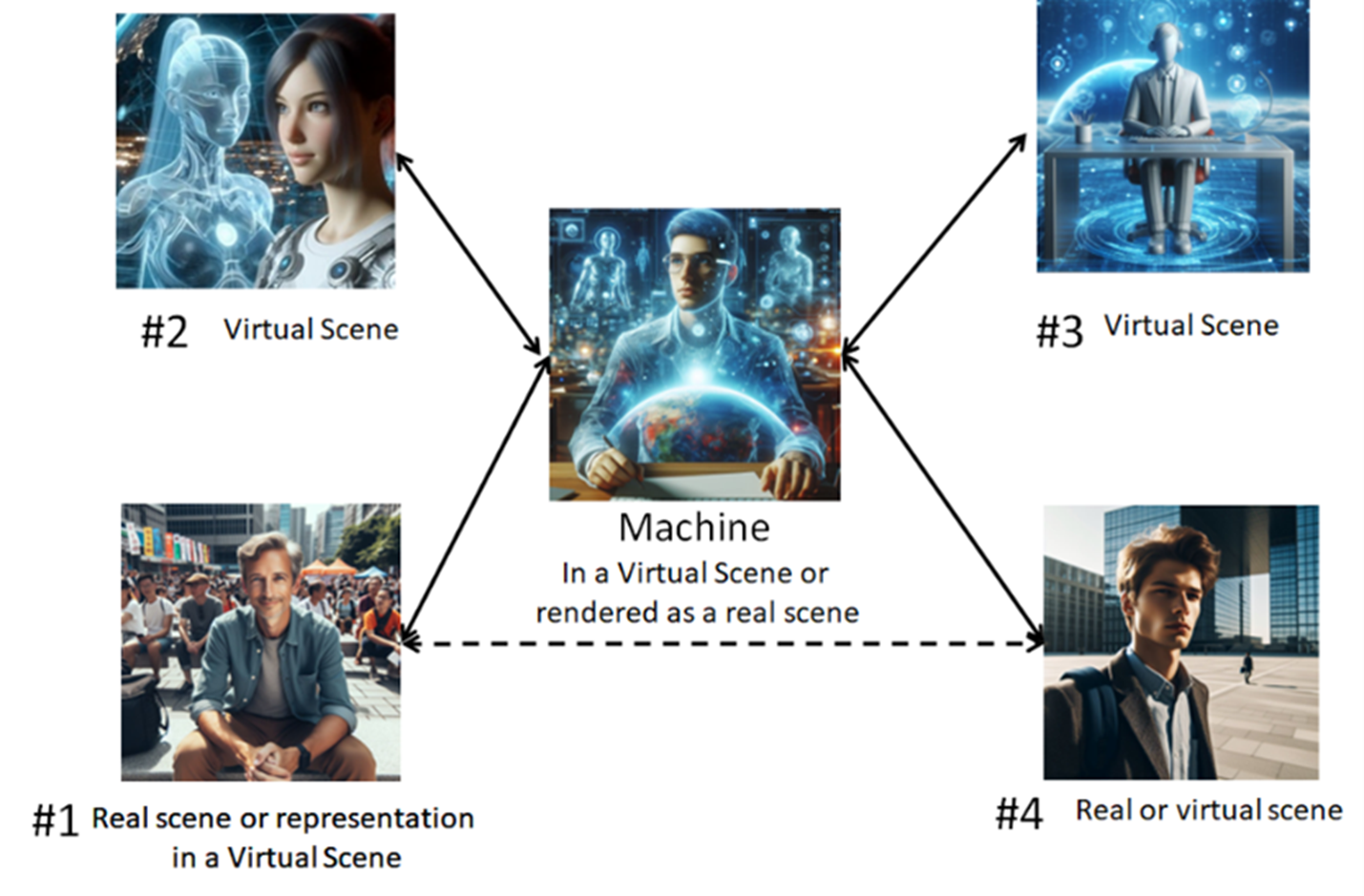

Human and Machine Communication (MPAI-HMC) leverages Technical Specifications including Multimodal Conversation, Context-based Audio Enhancement, Portable Avatar Format, and MPAI Metaverse Model. All of them deal with real and digital humans communicating in real or virtual environments, and MPAI-HMC specifies the resulting Human and Machine Communication Use Case in a single document. MPAI-HMC is designed to enable multi-faceted or general-purpose applications, such as information kiosks, virtual assistants, chatbots, information services, and metaverse applications, in multilingual contexts. There, Entities (humans present in a real space or represented in a virtual space as speaking avatars, and machines represented in a virtual space as speaking avatars, all acting in a context) communicate using text, speech, face, gesture, and the audio-visual scene in which they are embedded.

Figure 14 illustrates the Human and Machine Communication settings targeted by MPAI-HMC. The term Machine followed by a number indicates an MPAI-HMC instance.

Figure 14 – Examples of MPAI-HMC communication

The MPAI-HMC Technical Specification V2.0 has been approved and is available.

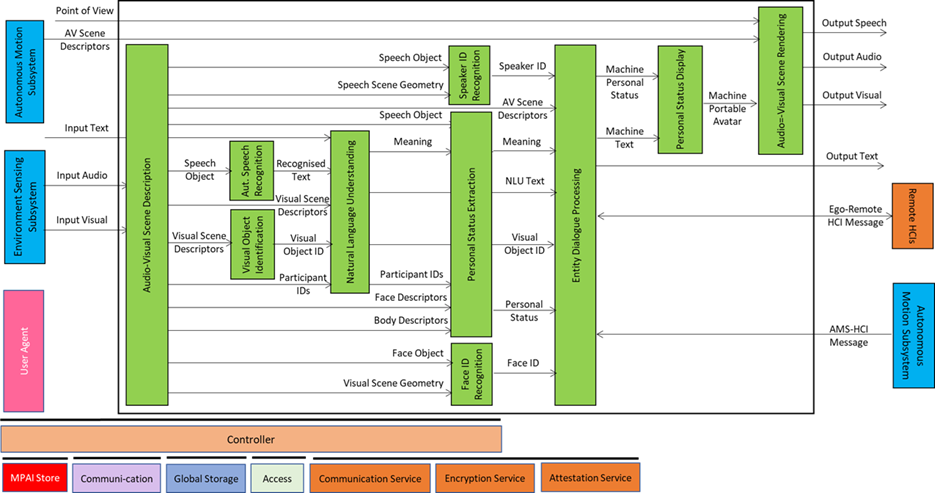

12 MPAI-MMC

Multi-modal conversation (MPAI-MMC) aims to enable human-machine conversation that emulates human-human conversation in completeness and intensity by using AI.

The MPAI mission is to develop AI-enabled data coding standards. MPAI believes that its standards should enable humans to select machines whose internal operation they understand to some degree, rather than machines that are “black boxes” resulting from unknown training with unknown data. Thus, an implemented MPAI standard breaks up monolithic AI applications, yielding a set of interacting components with identified data whose semantics is known, as far as possible.

MPAI-MMC introduces Personal Status, an internal status of humans that a machine needs to estimate and that it artificially creates for itself with the goal of improving its conversation with the human, or even with another machine. Personal Status is applied to specific MPAI-MMC Use Cases, such as Conversation about a Scene, Virtual Secretary for Videoconference, and Human-Connected Autonomous Vehicle Interaction.

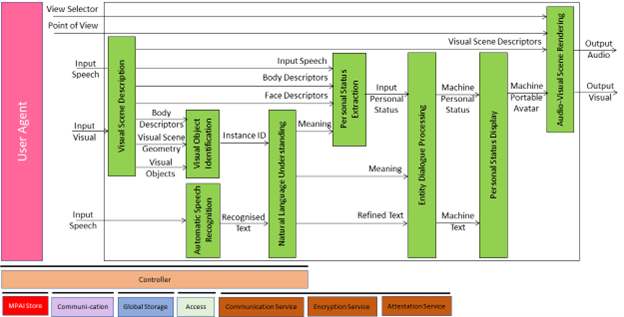

Several new Use Cases have been specified for Technical Specification: Multi-modal conversation (MPAI-MMC) V2.2. One of them is Conversation About a Scene (CAS), whose reference model is given in Figure 15 (Human-CAV Interaction is also an MMC standard).

Figure 15 – An MPAI-MMC V2 Use Case: Conversation with Personal Status

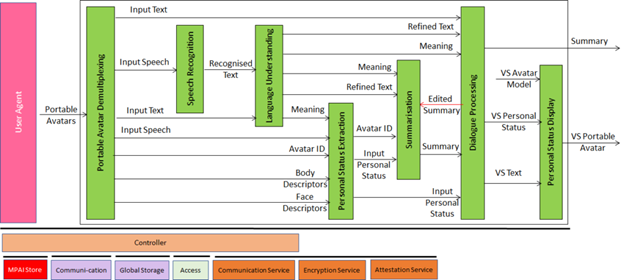

Figure 16 gives the Reference Model of a second Use Case: Virtual Secretary (used by the Avatar-Based Videoconference Use Case).

Figure 16 – Reference Model of Virtual Meeting Secretary

The MPAI-MMC Technical Specification V2.3 has been published and is available.

13 MPAI-MMM

The MPAI Metaverse Model represents a system that captures data from the real world, processes it, and combines it with internally generated data to create virtual environments that users can interact with.

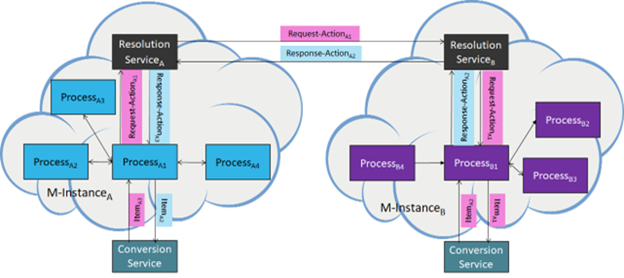

The MPAI Metaverse Model (MMM) is an MPAI project targeting a series of deliverables for Metaverse Interoperability. Two MPAI Technical Reports, Functionalities and Functionality Profiles, have laid the groundwork. Technical Specification: MPAI Metaverse Model (MPAI-MMM) – Architecture V1.1 provides initial tools by specifying the Functional Requirements of Processes, Items, Actions, and Data Types that allow two or more metaverse instances to Interoperate via a Conversion Service if they implement the Operation Model and produce Data whose Format complies with the Specification’s Functional Requirements.

Figure 17 depicts one aspect of the Specification, where a Process in an M-Instance requests a Process in another M-Instance to perform an Action by relying on their Resolution Services.

Figure 17 – Resolution and Conversion Services

Technical Specification MPAI-MMM – Technologies V2.0 and Technical Specification MPAI-MMM – Technologies V1.0 have been approved and are available.

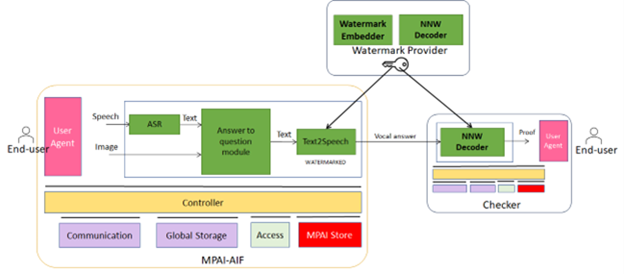

14 MPAI-NNW

Neural Network Watermarking is an MPAI project developing Technical Reports and Specifications on the application of Watermarking and other content management technologies to Neural Networks.

The current MPAI-NNW Technical Specification enables watermarking technology providers to qualify their products by providing the means to measure, for a given size of the watermarking payload, the ability of:

- The watermark inserter to inject a payload without deteriorating the NN performance.

- The watermark detector to recognise the presence of the inserted watermark when applied to:

  - A watermarked network that has been modified (e.g., by transfer learning or pruning).

  - An inference of the modified model.

- The watermark decoder to successfully retrieve the payload when applied to:

  - A watermarked network that has been modified (e.g., by transfer learning or pruning).

  - An inference of the modified model.

- The watermark inserter to inject a payload at a measured computational cost on a given processing environment.

- The watermark detector/decoder to detect/decode a payload from a watermarked model or from any of its inferences at a low computational cost, e.g., execution time on a given processing environment.
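As an illustration of the kind of measurements listed above, the following Python sketch computes two plausible figures of merit, the bit error rate of a decoded payload and the relative drop of a task metric after watermark insertion, plus a timer for computational cost. The metric choices and function names are assumptions for illustration; the source does not state which measures MPAI-NNW specifies.

```python
import time
from typing import Callable, Sequence, Tuple

# Illustrative figures of merit; not the normative MPAI-NNW measures.


def bit_error_rate(inserted: Sequence[int], retrieved: Sequence[int]) -> float:
    """Fraction of payload bits the decoder retrieved incorrectly (0.0 = perfect)."""
    if not inserted or len(inserted) != len(retrieved):
        raise ValueError("payloads must be non-empty and of equal length")
    return sum(a != b for a, b in zip(inserted, retrieved)) / len(inserted)


def relative_performance_drop(original: float, watermarked: float) -> float:
    """Relative loss of a task metric (e.g. accuracy) caused by inserting the watermark."""
    if original <= 0:
        raise ValueError("original metric must be positive")
    return (original - watermarked) / original


def timed(fn: Callable[..., object], *args: object) -> Tuple[object, float]:
    """Run fn(*args) and return its result with the elapsed wall-clock time in seconds."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


# Usage: an 8-bit payload decoded from a pruned model with two bit errors.
payload = [1, 0, 1, 1, 0, 0, 1, 0]
decoded = [1, 0, 1, 0, 0, 0, 1, 1]
ber, seconds = timed(bit_error_rate, payload, decoded)
print(ber)  # 0.25
```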

Figure 18 – MPAI-NNW implemented in MPAI-AIF

Technical Specification: Neural Network Watermarking (MPAI-NNW) V1.0 and Reference Software Specification MPAI-NNW V1.2 have been published and are available. The NNW project has also developed Technical Specification: Neural Network Traceability (NNW-NNT) V1.0, which adds support for fingerprinting, and has published a Call for Technologies for NNW-Technologies.

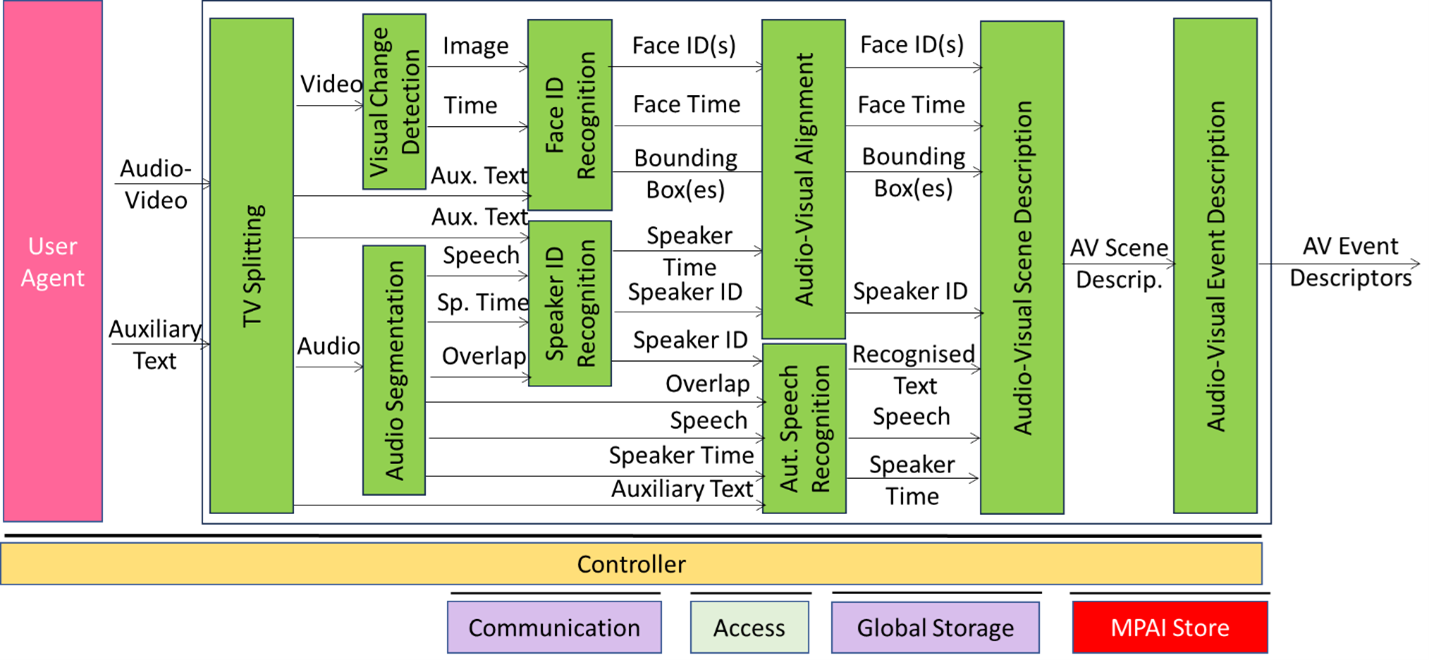

15 MPAI-OSD

Visual Object and Scene Description (MPAI-OSD) is an MPAI project developing Technical Reports and Specifications targeting the digital representation of spatial information of Audio and Visual Objects and Scenes for use across MPAI Technical Specifications. Examples are Spatial Attitude and Point of View, Objects, Scene Descriptors, and Event Descriptors.

MPAI-OSD is a cornerstone for many MPAI standards.
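As a hypothetical illustration of how Spatial Attitude and Point of View relate, the following Python sketch reduces a Spatial Attitude to a 2-D position plus a heading (yaw) and expresses an Object's scene position in a viewer's frame. The 2-D simplification, the field names, and the axis conventions are assumptions made for the sketch and are not taken from MPAI-OSD.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class SpatialAttitude:
    """Hypothetical 2-D Spatial Attitude: scene position plus heading (yaw)."""
    x: float
    y: float
    yaw_rad: float  # 0 = facing +x; counter-clockwise is positive


def to_point_of_view(viewer: SpatialAttitude, obj_x: float, obj_y: float) -> Tuple[float, float]:
    """Express an Object's scene position in the viewer's frame as (forward, left)."""
    dx, dy = obj_x - viewer.x, obj_y - viewer.y
    # Rotate the offset by -yaw so that the viewer's heading becomes the forward axis.
    c, s = math.cos(-viewer.yaw_rad), math.sin(-viewer.yaw_rad)
    return (c * dx - s * dy, s * dx + c * dy)


# Usage: a viewer at (1, 0) facing +y sees an Object at (1, 2) two units straight ahead.
viewer = SpatialAttitude(x=1.0, y=0.0, yaw_rad=math.pi / 2)
forward, left = to_point_of_view(viewer, 1.0, 2.0)
print(round(forward, 6), round(left, 6))  # 2.0 0.0
```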

MPAI-OSD specifies, and provides a reference software implementation of, the Television Media Analysis Use Case depicted in Figure 19.

Figure 19 – The Television Media Analysis Use Case

Technical Specification: Object and Scene Descriptors (MPAI-OSD) V1.3 has been published and is available.

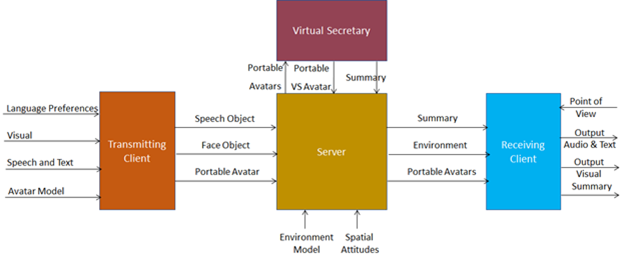

16 MPAI-PAF

There is a long history of computer-created objects called “digital humans”, i.e., digital objects that can be rendered to show a human appearance. In most cases, the underlying assumption of these objects has been that creation, animation, and rendering are done in a closed environment. Such digital humans had little or no need for standards. However, in a communication and even more in a metaverse context, there are many cases where a digital human is not constrained within a closed environment. For instance, a client may send data to a remote client that should be able to unambiguously interpret and use the data to reproduce a digital human as intended by the transmitting client.

These new usage scenarios require forms of standardisation. Technical Specification: Portable Avatar Format (MPAI-PAF) is a first response to the need of a user wishing to enable their transmitting client to send data that a remote client can interpret to render a digital human, having the body movement and the facial expression representing their own movements and expression.

Figure 20 is the system diagram of the Avatar-Based Videoconference Use Case enabled by MPAI-PAF.

Figure 20 – End-to-End block diagram of Avatar-Based Videoconference

Technical Specification: Portable Avatar Format (MPAI-PAF) V1.4 has been published and is available.

17 MPAI-PRF

Some AIMs receive more/less input and produce more/less output data than an AIM with the same name that is used in other AIWs even though they nominally perform the same functions. Since it is not realistic to require that all AIMs be equipped with the additional logic required to exploit features that are not required, Technical Specification: AI Module Profiles (MPAI-PRF) V1.0 provides a mechanism that unambiguously signals which characteristics of an AIM – called Attributes in the following – are supported by the AIM.

For instance, the Profile of a Natural Language Understanding (MMC-NLU) AIM that does not handle spatial information can be labelled in two ways, which allows the more compact form to be chosen according to the number of Attributes the AIM supports:

| Method | Profile label |
|---|---|
| Removing unsupported Attributes | MMC-NLU-V2.1(ALL-AVG-OII) |
| Adding supported Attributes | MMC-NLU-V2.1(NUL+TXO+TXR) |

Attributes, however, are not always sufficient to identify the capabilities of an AIM instance. For instance, an AIM instance of Personal Status Display (PAF-PSD) may support Personal Status, but only the Speech (PS-Speech) and Face (PS-Face) Personal Status Factors. This is illustrated by the following two examples:

| Method | Profile label |
|---|---|
| Removing unsupported Attributes | PAF-PSD-V1.1(ALL@IPS#SPE#FCE) |
| Adding supported Attributes | PAF-PSD-V1.1(NUL+TXT+AVM@IPS#FCE#GST) |

Here, @ prefixed to IPS signals that the AIM supports Personal Status, but only of Speech and Face in the first example and of Face and Gesture in the second. These are represented by SPE, FCE, and GST, the codes of the PS-Speech, PS-Face, and PS-Gesture Sub-Attributes, respectively (the full list of Personal Status Sub-Attributes is provided in MPAI-PRF). The second case may apply to a sign-language capable AIM.
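The labelling rules above lend themselves to a small parser. The following Python sketch assumes a grammar inferred only from the examples in this section: a label `STD-AIM-Vx.y(...)` whose body starts with ALL or NUL, followed by `-CODE` (remove an Attribute), `+CODE` (add an Attribute), or `@CODE#SUB#SUB` (support an Attribute only for the listed Sub-Attributes). The grammar, the three-letter code length, and the `supported_attributes` helper with its Attribute list are hypothetical, not the normative MPAI-PRF syntax.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List

# Grammar inferred from the examples; not the normative MPAI-PRF syntax.
LABEL = re.compile(r"([A-Z]{3})-([A-Z]{3})-V(\d+(?:\.\d+)*)\((.*)\)")
BODY = re.compile(r"(ALL|NUL)((?:[+\-@][A-Z]{3}(?:#[A-Z]{3})*)*)")
TOKEN = re.compile(r"([+\-@])([A-Z]{3})((?:#[A-Z]{3})*)")


@dataclass
class Profile:
    standard: str
    aim: str
    version: str
    base: str  # "ALL": start from every Attribute; "NUL": start from none
    added: List[str] = field(default_factory=list)
    removed: List[str] = field(default_factory=list)
    partial: Dict[str, List[str]] = field(default_factory=dict)  # Attribute -> Sub-Attributes


def parse_profile(label: str) -> Profile:
    m = LABEL.fullmatch(label)
    if m is None:
        raise ValueError(f"not a Profile label: {label!r}")
    standard, aim, version, body = m.groups()
    b = BODY.fullmatch(body)
    if b is None:
        raise ValueError(f"malformed Attribute list: {body!r}")
    profile = Profile(standard, aim, version, b.group(1))
    for op, attr, subs in TOKEN.findall(b.group(2)):
        sub_attrs = [s for s in subs.split("#") if s]
        if op == "@":
            profile.partial[attr] = sub_attrs
        elif sub_attrs:
            raise ValueError(f"Sub-Attributes only allowed after '@': {attr}")
        elif op == "+":
            profile.added.append(attr)
        else:
            profile.removed.append(attr)
    return profile


def supported_attributes(profile: Profile, all_attributes: List[str]) -> List[str]:
    """Resolve supported Attributes given the AIM's full Attribute list (hypothetical input)."""
    start = set(all_attributes) if profile.base == "ALL" else set()
    return sorted((start - set(profile.removed)) | set(profile.added) | set(profile.partial))


# Usage: with a hypothetical full Attribute list, both NLU labels resolve to the same set.
nlu_attributes = ["AVG", "OII", "TXO", "TXR"]
print(supported_attributes(parse_profile("MMC-NLU-V2.1(ALL-AVG-OII)"), nlu_attributes))  # ['TXO', 'TXR']
print(supported_attributes(parse_profile("MMC-NLU-V2.1(NUL+TXO+TXR)"), nlu_attributes))  # ['TXO', 'TXR']
print(parse_profile("PAF-PSD-V1.1(ALL@IPS#SPE#FCE)").partial)  # {'IPS': ['SPE', 'FCE']}
```

The usage shows why the two forms are interchangeable: which one is shorter depends only on how many of the AIM's Attributes are supported.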

Technical Specification: AI Module Profiles (MPAI-PRF) V1.0 has been published and is available.

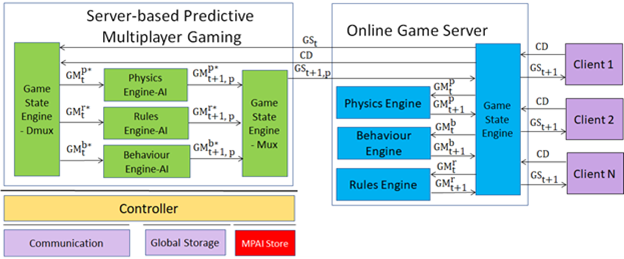

18 MPAI-SPG

Server-based Predictive Multiplayer Gaming (MPAI-SPG) aims to minimise the audio-visual and gameplay discontinuities caused by high latency or packet losses during an online real-time game. In case information from a client is missing, the data collected from the clients involved in a particular game are fed to an AI-based system that predicts the moves of the client whose data are missing. The same technologies provide a response to the need to detect who amongst the players is cheating.
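The source specifies an AI-based predictor. Purely to show where such a predictor sits in the server loop, the following Python sketch substitutes constant-velocity extrapolation (classic dead reckoning) for the AI model. The function names, the 2-D position state, and the one-update-per-tick assumption are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Position = Tuple[float, float]


def predict_next(history: List[Position]) -> Position:
    """Constant-velocity extrapolation; a stand-in for the AI-based predictor."""
    if not history:
        raise ValueError("no history for this client")
    if len(history) == 1:
        return history[-1]
    (x0, y0), (x1, y1) = history[-2], history[-1]
    return (2 * x1 - x0, 2 * y1 - y0)


def server_tick(updates: Dict[str, Optional[Position]],
                histories: Dict[str, List[Position]]) -> Dict[str, Position]:
    """Merge one tick of client updates, predicting the state of clients whose data are missing."""
    state: Dict[str, Position] = {}
    for client, update in updates.items():
        position = update if update is not None else predict_next(histories[client])
        histories[client].append(position)
        state[client] = position
    return state


# Usage: alice's packet is lost this tick, so her move is predicted from her last two positions.
histories = {"alice": [(0.0, 0.0), (1.0, 0.0)], "bob": [(5.0, 5.0)]}
print(server_tick({"alice": None, "bob": (5.0, 6.0)}, histories))
# {'alice': (2.0, 0.0), 'bob': (5.0, 6.0)}
```

Predicted states are appended to the history like received ones, so consecutive losses keep extrapolating; a real system would also reconcile the prediction once the client's actual data arrive.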

Figure 21 depicts the MPAI-SPG reference model, including the cloud gaming model.

Figure 21 – The SPG-MDL Reference Model

Technical Report: Guidelines for data loss mitigation in online multiplayer gaming (SPG-MDL) V1.0 has been developed. The collection of public documents is available here.

19 MPAI-TFA

Data Types, Formats, and Attributes (MPAI-TFA) specifies the Sub-Types, Formats, and Attributes of a Data Type that provide information related to a Data Type instance, such as “media” Data Types (e.g., Speech, Audio, and Visual) and Metaverse Data Types (e.g., Contract and Rules). This information facilitates, or even enables, the operation of an AI Module receiving that Data Type instance.

MPAI-TFA classifies that additional information as:

- Sub-Types, information related to the different forms that can be taken by a Data Type instance, for example, Colour Space is a Sub-Type of the Visual Data Type.

- Formats, the different ways in which a Data Type can be digitally represented or transported, for example, AAC is a Format of the Speech Data Type.

- Attributes, the different types of information providing details on a Data Type instance, for example, the ID of an Object in a picture.
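The three kinds of information can be pictured as fields attached to a Data Type instance. In the following Python sketch, a hypothetical illustration rather than the MPAI-TFA syntax, an AIM input declares the Data Type, Formats, and Sub-Type values it can handle, and a check decides whether an incoming instance is usable. Class names, field names, and the example values (RGB, YUV, JPEG, PNG, ObjectID) are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

# Hypothetical representation; not the MPAI-TFA syntax.


@dataclass
class DataTypeInfo:
    """Information accompanying a Data Type instance."""
    data_type: str                                            # e.g. "Visual"
    sub_types: Dict[str, str] = field(default_factory=dict)   # e.g. {"Colour Space": "RGB"}
    format: str = ""                                          # e.g. "JPEG"
    attributes: Dict[str, str] = field(default_factory=dict)  # e.g. {"ObjectID": "42"}


@dataclass
class AIMInput:
    """What an AIM input port can handle."""
    data_type: str
    formats: Set[str]
    sub_types: Dict[str, Set[str]] = field(default_factory=dict)  # Sub-Type -> accepted values


def can_process(port: AIMInput, info: DataTypeInfo) -> bool:
    """True if the AIM can operate on an instance described by `info`."""
    if info.data_type != port.data_type or info.format not in port.formats:
        return False
    return all(info.sub_types.get(name) in accepted for name, accepted in port.sub_types.items())


# Usage: a Visual input that only handles RGB pictures in JPEG or PNG.
port = AIMInput("Visual", {"JPEG", "PNG"}, {"Colour Space": {"RGB"}})
picture = DataTypeInfo("Visual", {"Colour Space": "RGB"}, "JPEG", {"ObjectID": "42"})
print(can_process(port, picture))  # True
print(can_process(port, DataTypeInfo("Visual", {"Colour Space": "YUV"}, "JPEG")))  # False
```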

Technical Specification: Data Types, Formats and Attributes (MPAI-TFA) V1.3 has been published and is available.

20 MPAI-XRV

XR Venues (MPAI-XRV) – Live Theatrical Performance addresses Broadway theatres, musicals, dramas, operas, and other performing arts, which increasingly use video scrims, backdrops, and projection mapping to create digital sets rather than constructing physical stage sets. This allows animated backdrops and reduces the cost of mounting shows. The use of immersion domes, especially LED volumes, promises to surround audiences with virtual environments that the live performers can inhabit and interact with.

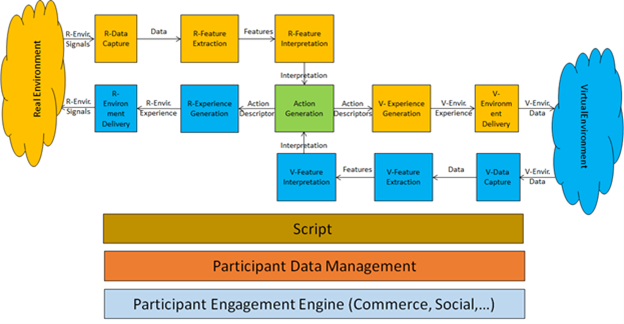

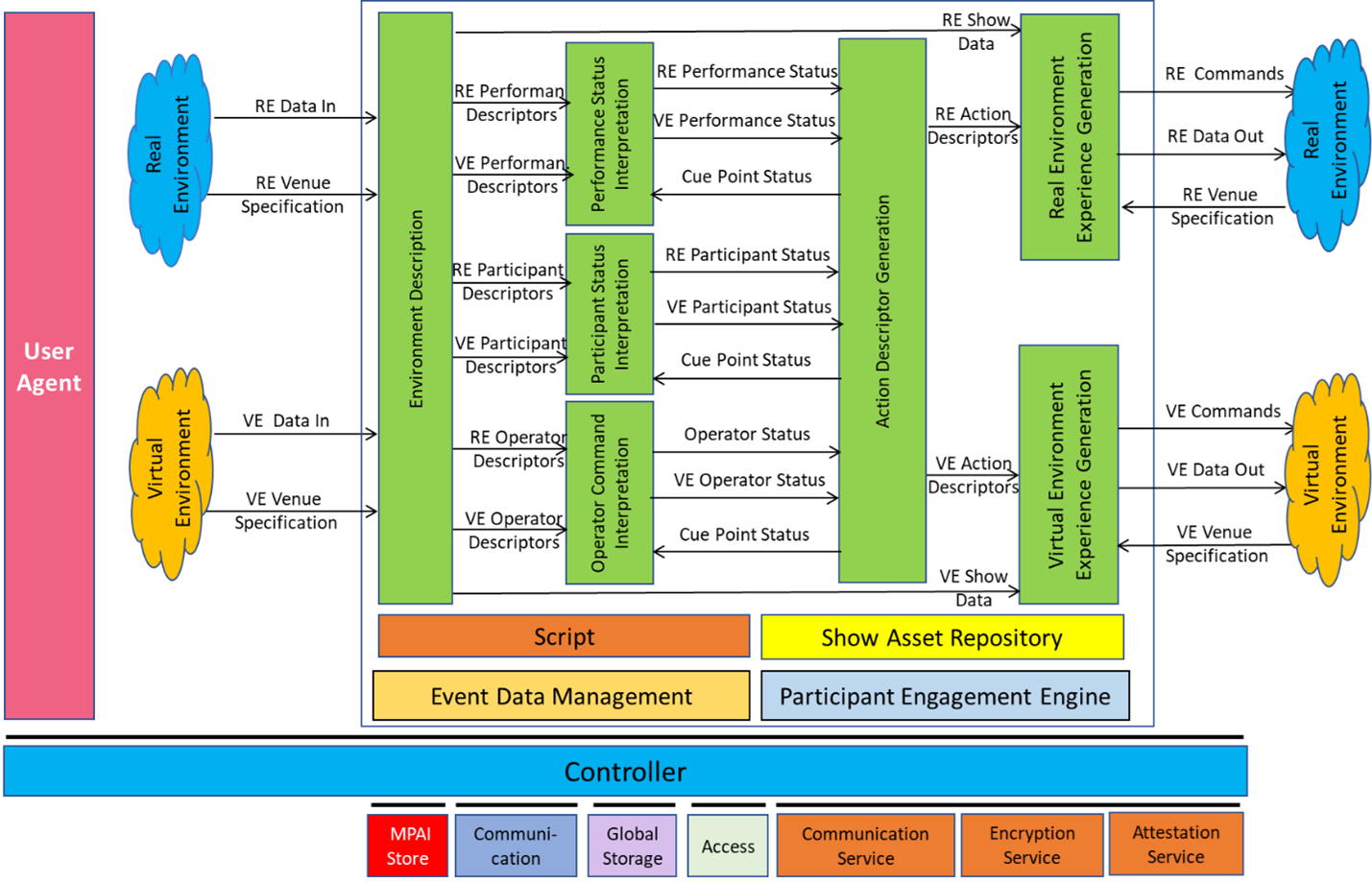

MPAI-XRV has developed a reference model that describes the components of the Real-to-Virtual-to-Real scenario depicted in Figure 22.

Figure 22 – General Reference Model of the Real-to-Virtual-to-Real Interaction

The MPAI XR Venues (XRV) – Live Theatrical Stage Performance (XRV-LTP) V1.0 project, a use case of MPAI-XRV, intends to define AI Modules that facilitate setting up live multisensory immersive stage performances, which ordinarily require extensive on-site show control staff to operate. With XRV, it will be possible to implement and control shows in a more direct, precise, yet spontaneous way that achieves the show director’s vision while freeing staff from repetitive and technical tasks, letting them amplify their artistic and creative skills.

An XRV Live Theatrical Stage Performance can extend into the metaverse as a digital twin. In this case, elements of the Virtual Environment experience can be projected in the Real Environment and elements of the Real Environment experience can be rendered in the Virtual Environment (metaverse).

Figure 23 shows how the XRV system captures the Real (stage and audience) and Virtual (metaverse) Environments, AI-processes the captured data, and injects new components into the Real and Virtual Environments.

Figure 23 – Reference Model of MPAI-XRV – Live Theatrical Stage Performance

MPAI-XRV – Live Theatrical Stage Performance is at the Standard Development stage.

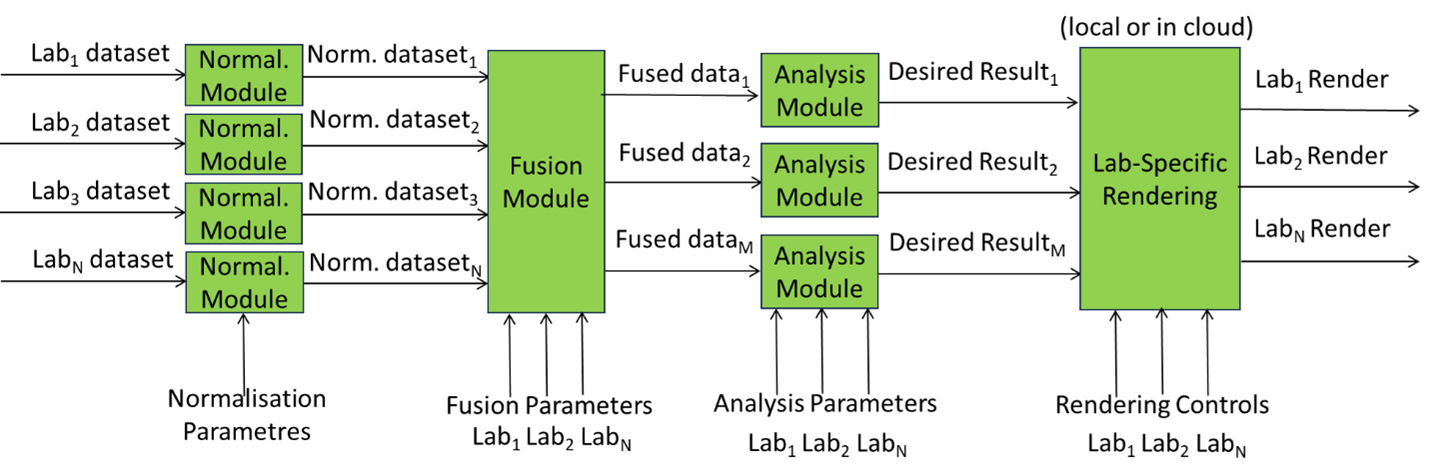

MPAI is in the initial phases of the Collaborative Immersive Laboratory project. In Figure 24, there are N geographically distributed Labs providing datasets acquired with different technologies related to a particular application domain (a Lab may provide more than one dataset). Technology-specific AIMs normalise the datasets. A Fusion AIM/AIW controlled by Fusion Parameters from different Labs provides M Fused Data (M is independent of the number of input datasets). Fused Data are processed by Analysis AIMs driven by Analysis Parameters possibly coming from different Labs. Analysis AIMs produce Desired Results possibly used by different Labs. Desired Results are rendered and viewed by different Labs.
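The data flow just described can be sketched as a pipeline. In the following Python sketch, min-max normalisation stands in for the technology-specific AIMs, each of the M Fused Data is a weighted sum of the N normalised datasets (the weights playing the role of Fusion Parameters), and the Analysis step counts values at or above a threshold (playing the role of an Analysis Parameter). All of these operations, the technology names, and the function names are assumptions chosen for illustration; the source does not specify them.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Vector = List[float]


@dataclass
class Dataset:
    lab: str
    technology: str
    values: Vector


def min_max(values: Vector) -> Vector:
    """Stand-in technology-specific normaliser: rescale values to [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def fuse(normalised: List[Vector], fusion_parameters: List[List[float]]) -> List[Vector]:
    """Produce M Fused Data, one per row of N weights; M need not equal N."""
    fused = []
    for weights in fusion_parameters:
        if len(weights) != len(normalised):
            raise ValueError("need one weight per input dataset")
        fused.append([sum(w * d[i] for w, d in zip(weights, normalised))
                      for i in range(len(normalised[0]))])
    return fused


def run_lab_pipeline(datasets: List[Dataset],
                     normalisers: Dict[str, Callable[[Vector], Vector]],
                     fusion_parameters: List[List[float]],
                     threshold: float) -> List[int]:
    """Normalise, fuse, analyse; each Desired Result counts values >= threshold."""
    normalised = [normalisers[d.technology](d.values) for d in datasets]
    fused = fuse(normalised, fusion_parameters)
    return [sum(v >= threshold for v in f) for f in fused]


# Usage: N = 2 datasets from two Labs, M = 3 Fused Data.
datasets = [Dataset("Lab A", "imaging-1", [0.0, 5.0, 10.0]),
            Dataset("Lab B", "imaging-2", [1.0, 3.0, 2.0])]
normalisers = {"imaging-1": min_max, "imaging-2": min_max}
print(run_lab_pipeline(datasets, normalisers, [[1, 0], [0, 1], [0.5, 0.5]], threshold=0.75))
# [1, 1, 2]
```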

Figure 24 – Collaborative Immersive Laboratory

The collection of public documents is available here.

21 MPAI-WMG

MPAI-WMG considers all AI Workflows and a subset of relevant AI Modules specified by MPAI in the following Technical Specifications:

- Connected Autonomous Vehicle (MPAI-CAV) – Technologies (CAV-TEC) V1.0

- Context-based Audio Enhancement (MPAI-CAE) – Use Cases (CAE-USC) V2.3

- Human and Machine Communication (MPAI-HMC) V2.0

- Multimodal Conversation (MPAI-MMC) V2.3

- Object and Scene Description (MPAI-OSD) V1.3

- Portable Avatar Format (MPAI-PAF) V1.4

for the following purposes:

- To clarify the text of the AIW and AIM specification where appropriate.

- To analyse issues regarding the implementation of all AIWs and a subset of their AIMs.

- To study the applicability of the Perceptible and Agentive AI (PAAI) model to all AIWs and a subset of their AIMs.