The MPAI Store has been established

The MPAI Store has been established by a group of MPAI participants who are now the MPAI Store members. But what is the MPAI Store and why do we need it?

Standards specify interfaces between systems. You understand what a reporter’s article says because there is a standard (e.g., the Latin or another alphabet) defining the graphical signs. But understanding the signs may not be sufficient to understand the article if the language used is not yours. Indeed, language is a very sophisticated standard because it intertwines with the lives of millions of people.

MPAI standards are sophisticated in a different way. They refer to basic processing elements called AI Modules (AIM) that can be combined in AI Workflows (AIW) implementing an AI application and executed in an environment called AI Framework (AIF). Users of an MPAI application standard expect that an AIF acquired from one party correctly executes an AIW acquired from another party, even when the AIMs required by that AIW are all acquired from different parties.

This can be done in practice if the ecosystem composed of MPAI, implementers and users is properly governed. The Governance of the MPAI Ecosystem standard developed by MPAI (MPAI-GME) introduces two more ecosystem players. The first is Performance Assessors, potentially for-profit entities appointed by MPAI to assess the performance of implementations, i.e., their Reliability, Robustness, Replicability and Fairness. The second is the MPAI Store, a not-for-profit commercial entity tasked with verifying security, testing conformance, collecting the results of performance assessment, and guaranteeing that Consumers have high-availability access to implementations.

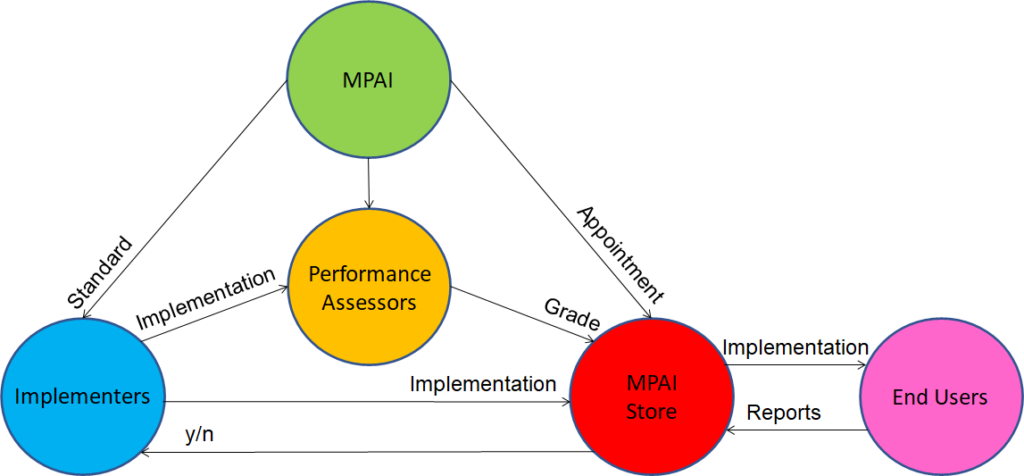

The MPAI Ecosystem is depicted in Figure 1.

Figure 1 – Actors and relations of the MPAI Ecosystem

This is how the MPAI Ecosystem operates:

- MPAI produces standards, i.e., sets of four documents: technical specification, reference software, conformance testing and performance assessment.

- An implementer uses a standard to develop an implementation and submits it to the MPAI Store.

- The MPAI Store verifies security and tests conformance: with MPAI-AIF if the implementation’s interoperability level is 1, and with the claimed application standard if the interoperability level is 2.

- If security and conformance tests are passed, the MPAI Store publishes the AIM or AIW declaring the interoperability level.

- If the implementer so desires, the implementation can also be submitted to a performance assessor who will carry out the assessment using MPAI’s performance assessment specification and report the grade of performance to the MPAI Store. If the assessment is positive, the MPAI Store will upgrade the interoperability level of the implementation to Level 3.

- A User who likes an implementation will download and use it, and may send their review of the implementation to the MPAI Store.

- The MPAI Store will publish users’ reviews.
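The publication rules above can be summarised in a few lines of Python. This is only an illustrative sketch: the function name `published_level` and its parameters are hypothetical and not part of any MPAI specification. It also assumes that any published implementation with a positive performance assessment is upgraded to Level 3, since the text does not restrict which implementations are eligible.

```python
from typing import Optional


def published_level(
    claimed_level: int,
    security_ok: bool,
    conformance_ok: bool,
    assessment_positive: bool = False,
) -> Optional[int]:
    """Return the interoperability level the Store would declare, or None.

    Hypothetical helper, not an MPAI API.
    claimed_level: 1 (conformance tested against MPAI-AIF) or
                   2 (conformance tested against the claimed application standard).
    conformance_ok: result of the conformance test matching the claimed level.
    assessment_positive: outcome reported by a Performance Assessor, if any.
    """
    if claimed_level not in (1, 2):
        raise ValueError("an implementer can only claim Level 1 or Level 2")
    # Nothing is published unless both security and conformance checks pass.
    if not (security_ok and conformance_ok):
        return None
    # Assumption: a positive assessment upgrades any published implementation.
    if assessment_positive:
        return 3
    return claimed_level
```

For example, `published_level(2, True, True)` yields 2, while the same submission with `assessment_positive=True` yields 3.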

MPAI Store members are implementing the legal, technical, and business details that derive from the establishment of the MPAI Store.

Personal Status

Multimodal Conversation is one of the standards for which MPAI has published a Call for Technologies. With it, MPAI gives more substance to its claim to enable forms of human-machine conversation that emulate human-human conversation in completeness and intensity.

The Conversation with Emotion Use Case of the MPAI-MMC V1 standard relied on Emotion to enhance the human-machine conversation. Emotion was extracted from Speech, from the recognised text and from the face of the human. The machine the human converses with produces the text of its response with a synthetic emotion that the machine finds to be suitable for its response.

In MPAI-MMC V2, MPAI has introduced a new notion with a significantly wider scope than Emotion: Personal Status, the set of internal characteristics of a person or avatar. MPAI currently considers three such characteristics: Emotion, Cognitive State, and Attitude. Personal Status can be conveyed by Modalities, currently Text, Speech, Face, and Gesture.

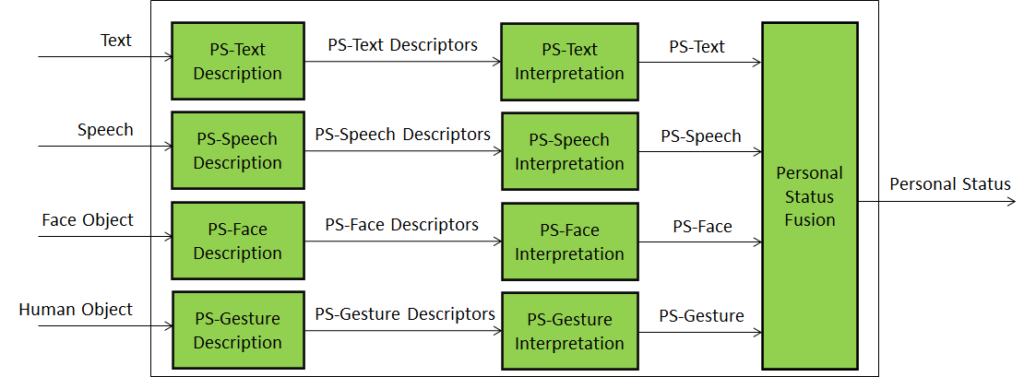

Personal Status Extraction (PSE) is a composite AIM that analyses the Manifestation of a Personal Status conveyed by any of the Text, Speech, Face, and Gesture Modalities and estimates the Personal Status. Note that Personal Status applies to both humans and avatars. This Composite AIM is used in the MPAI-MMC Use Cases as a replacement for the combination of the AIMs depicted in Figure 2. It is assumed that in general a Personal Status can be extracted from a Modality in two steps: Feature Extraction and Feature Interpretation. An implementation, however, can combine the two steps into one.

Figure 2 – Reference Model of Personal Status Extraction

The estimate of the Personal Status of a human or an avatar is the result of an analysis carried out on each available Modality, as depicted in Figure 2, in two steps:

Feature Extraction. Features (e.g., the pitch and intonation of a speech segment, a raised thumb, a winking right eye) are extracted from Text, Speech, Face Objects, and Human Objects and expressed as Descriptors.

Feature Interpretation. Descriptors are interpreted and the specific Manifestations of the Personal Status are derived from the Text, Speech, Face, and Gesture Modalities.

The different estimates of the Personal Status in the different Modalities are then combined into the Personal Status.
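As a rough illustration of this two-step-then-combine structure, the Python sketch below runs a hypothetical extractor and interpreter for each Modality and then fuses the per-Modality estimates. All names are invented for the example, and the fusion rule (keep the most confident label for each characteristic) is an assumption: the text above does not say how the estimates are combined.

```python
from typing import Any, Callable, Dict, Tuple

# One per-Modality estimate: characteristic -> (label, confidence in [0, 1]).
Estimate = Dict[str, Tuple[str, float]]


def fuse(per_modality: Dict[str, Estimate]) -> Dict[str, str]:
    """Assumed fusion rule: keep the most confident label per characteristic."""
    best: Dict[str, Tuple[str, float]] = {}
    for estimate in per_modality.values():
        for characteristic, (label, confidence) in estimate.items():
            if characteristic not in best or confidence > best[characteristic][1]:
                best[characteristic] = (label, confidence)
    return {name: label for name, (label, _) in best.items()}


def extract_personal_status(
    inputs: Dict[str, Any],
    extractors: Dict[str, Callable[[Any], Dict[str, Any]]],
    interpreters: Dict[str, Callable[[Dict[str, Any]], Estimate]],
) -> Dict[str, str]:
    """Run Feature Extraction, then Feature Interpretation, per available Modality."""
    per_modality: Dict[str, Estimate] = {}
    for modality, data in inputs.items():
        descriptors = extractors[modality](data)                     # step 1
        per_modality[modality] = interpreters[modality](descriptors)  # step 2
    return fuse(per_modality)


# Toy stand-ins for real AIMs (purely hypothetical logic).
extractors = {
    "Text": lambda text: {"exclamations": text.count("!")},
    "Speech": lambda pitch_hz: {"pitch_hz": pitch_hz},
}
interpreters = {
    "Text": lambda d: {
        "Emotion": ("excited", 0.6) if d["exclamations"] else ("neutral", 0.5)
    },
    "Speech": lambda d: {
        "Emotion": ("excited", 0.8) if d["pitch_hz"] > 200 else ("calm", 0.7)
    },
}

status = extract_personal_status(
    {"Text": "Great!", "Speech": 120.0}, extractors, interpreters
)
```

Only the Modalities present in `inputs` are analysed, which mirrors the idea that the estimate is built from each available Modality.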

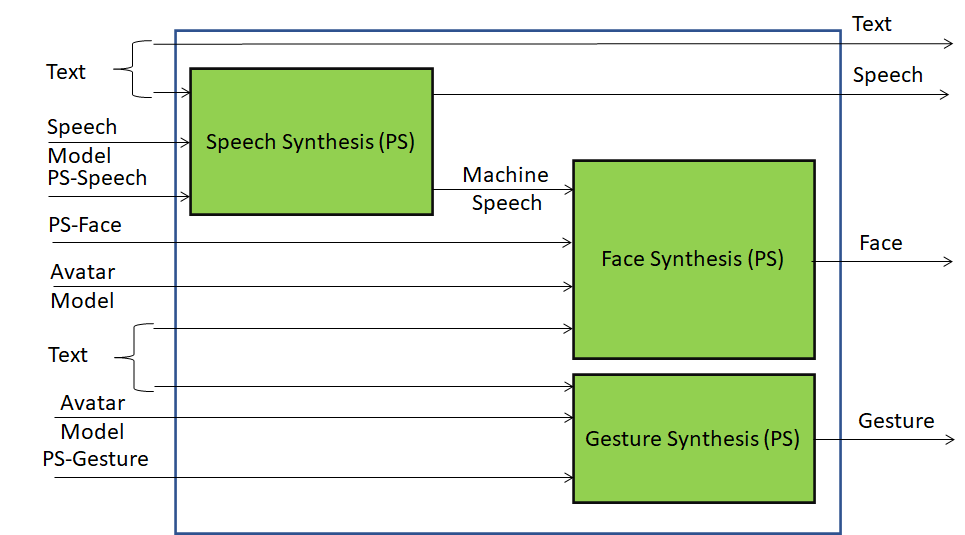

The Personal Status Display (PSD) is a Composite AIM depicted in Figure 5. It generates a speaking avatar animated by a machine’s Personal Status. PSD receives Text and Personal Status from Response Generation and generates an avatar producing:

- Synthesised Machine Speech using Text and PS (Speech).

- Avatar Face using Machine Speech, Text, and PS (Face).

- Avatar Gesture using PS (Gesture) and Text.

The avatar can optionally forward the input text to the PSD.

Figure 5 – Reference Model of Personal Status Display

MPAI and Health

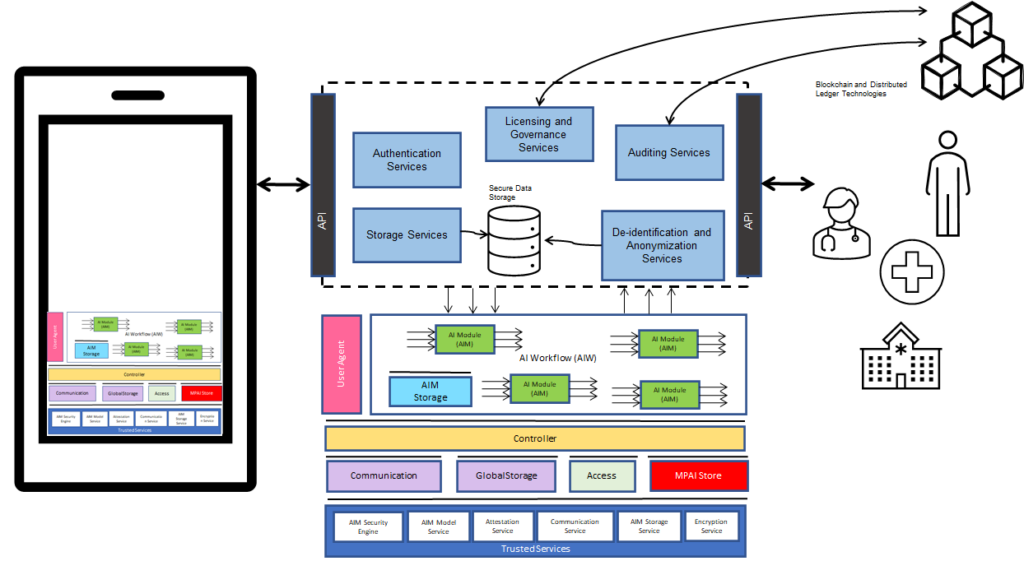

A few months ago, MPAI opened a new project called AI Health (MPAI-AIH). As depicted in Figure 4, the project assumes that an end-user (e.g., a patient) is equipped with a smartphone that has an embedded AI Framework and runs an AIH App (i.e., one or a set of AI Workflows) connected to different data sources, either external sensors or internal apps, and to the AIH System.

Figure 4 – Overview of the AI Health System.

The operation of the end-to-end system is described by the following steps.

- The user signs into the health system called AIH System.

- The AIH System initialises the user’s secure data vault.

- The user configures the AIH App to connect to different data sources.

- The AIH App starts collecting user data and securely stores it on the smartphone.

- The user may decide to give permission to contribute some or all of their data via a smart contract to the AIH System.

- The data is collected, de-identified and anonymised.

- Any registered and authenticated third party may request data access based on a smart contract, select specific tools to process the data, and extract intelligence from it.

The system is complemented by a federated learning process:

- Local machine learning (ML) models are trained on local heterogeneous datasets provided by individual users.

- The parameters of the models are periodically exchanged between AIH Apps, and between AIH Apps and the AIH System.

- A federated learning process builds a shared global model in a distributed fashion or through the AIH System.

- The model of each AIH App is updated via the MPAI Store.
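The aggregation step of such a process can be sketched in Python as follows. The text does not name the aggregation rule, so this example assumes a weighted average of client parameters in the style of federated averaging, with each client weighted by its number of local training samples. The function name and data layout are hypothetical.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Combine client parameter vectors into one global vector.

    updates: one (parameters, num_local_samples) pair per AIH App.
    Assumption: clients with more local samples get proportionally more weight.
    """
    total_samples = sum(num_samples for _, num_samples in updates)
    if total_samples <= 0:
        raise ValueError("need at least one client with local samples")
    dim = len(updates[0][0])
    global_params = [0.0] * dim
    for params, num_samples in updates:
        if len(params) != dim:
            raise ValueError("all clients must share the same model shape")
        weight = num_samples / total_samples
        for i, value in enumerate(params):
            global_params[i] += weight * value
    return global_params


# Two hypothetical clients: the second holds three times as much data.
new_global = federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)])
```

In a real deployment the parameters would be model tensors rather than short lists, and the exchange would also have to respect the permissions granted by each user.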

This is an example of a large-scale AI system relying on a large number of clients interacting among themselves and with a backend, carrying out AI-based data processing and improving their performance by means of federated learning.

Meetings in August-September

The meetings scheduled until 30 September, the date of the 24th General Assembly and of the 2nd anniversary of the establishment of MPAI, are listed below. Non-members may attend the meetings in italic.

| Group name | 29 Aug-2 Sep | 05-09 Sep | 12-16 Sep | 19-23 Sep | 26-30 Sep | Time (UTC) |
|---|---|---|---|---|---|---|
| AI Framework | 29 | 5 | 12 | 19 | 26 | 15 |
| Governance of MPAI Ecosystem | 29 | 5 | 12 | 19 | 26 | 16 |
| Multimodal Conversation | 30 | 6 | 13 | 20 | 27 | 14 |
| Neural Network Watermarking | 30 | 6 | | 20 | 27 | 15 |
| Context-based Audio Enhancement | 30 | 6 | 13 | 20 | 27 | 16 |
| XR Venues | 30 | 6 | 13 | 20 | 27 | 17 |
| Connected Autonomous Vehicles | 31 | 7 | 14 | 21 | 28 | 12 |
| AI-Enhanced Video Coding | | 7 | | 21 | | 14 |
| AI-based End-to-End Video Coding | 31 | | 14 | | 28 | 14 |
| Avatar Representation and Animation | 1 | 8 | 15 | 22 | 29 | 13:30 |
| Server-based Predictive Multiplayer Gaming | 1 | | 15 | 22 | | 14:30 |
| Communication | 1 | | | 22 | | 15 |
| Industry and Standards | 2 | | | 23 | | 16 |
| Artificial Intelligence for Health Data | | 9 | | | | 14 |
| MPAI-24 (General Assembly#24) | | | | | 30 | 15 |

This newsletter serves the purpose of keeping the expanding, diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.