MPAI’s raison d’être is developing standards. So, including the word in the title looks like a pleonasm. But there is a reason: the word standard can be used to mean several things. Let’s first explore which ones.

In my order of importance the first is “information representation”. If there were no standard saying that 65 (in 7 or 8 bits) means “A” and 97 means “a”, there would be no email and no WWW. Actually, many things that came before them would not have existed either. Similarly, if there were no standard saying that 0111 means a run of 2 white pixels and 11 a run of 2 black pixels, there would be no digital fax (not that it would make a lot of difference today, but even 10 years ago it would have been a disaster). Going into more sophisticated fields, without standards there would be no MP3, which is about the digital representation of a song.
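
The point about 65 and 97 can be checked in a few lines. This is only a small illustration: the choice of the EBCDIC code page `cp037` as the "wrong" standard is mine, picked to show what happens when sender and receiver do not share the same table.

```python
# The numbers 65 and 97 only mean "A" and "a" because sender and
# receiver both apply the same standard (ASCII).
raw = bytes([65, 97])

as_ascii = raw.decode("ascii")    # both sides agree on ASCII
as_ebcdic = raw.decode("cp037")   # receiver wrongly assumes an EBCDIC code page

print(repr(as_ascii))             # the text the sender intended
print(repr(as_ebcdic))            # same bits, different meaning
```

The bits on the wire are identical in both cases; only the agreed table turns them into information.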

A second, apparently different, shade of the word standard is found in the Encyclopaedia Britannica where it says that a standard “permits large production runs of component parts that are readily fitted to other parts without adjustment”, which I can label as “componentisation”. Today, no car manufacturer would internally develop the nuts and bolts used in its cars (or many more sophisticated components, for that matter). Manufacturers can buy these parts instead because there are standards for nuts and bolts, e.g., ISO 4014:2011 Hexagon head bolts – Product grades A and B, which specifies “the characteristics of hexagon head bolts with threads from M1,6 up to and including M64 etc.”.

MPAI is developing standards that fit the first definition, but it is also involved in standards that fit the second one. For sure, it does neither for hexagon head bolts. Actually, its first four standards, to be published shortly, cover both areas. Let’s see how.

MPAI develops its standards focusing on application domains. For instance, MPAI-CAE targets Context-based Audio Enhancement and MPAI-MMC targets Multimodal Communication. Within these broad areas MPAI identifies Use Cases that fall in the application area and are conducive to meaningful standards. An example of an MPAI-CAE Use Case is Emotion Enhanced Speech (EES): you pronounce a sentence without particular “colour”, you give a model utterance and you ask the machine to provide your sentence with the “colour” of the given utterance. An example of an MPAI-MMC Use Case is Unidirectional Speech Translation (UST): you pronounce a sentence in your language and with your colour and you ask the machine to interpret your sentence and pronounce it in another specified language with your own colour.

If the role of MPAI stopped there its standards would be easy to write. In the case of CAE-EES, you specify the input signals – plain speech, model utterance – and the output signal – speech with colour. In the case of MMC-UST, you specify the input signals – speech in your language and the target language – and the output signal – speech in the target language.

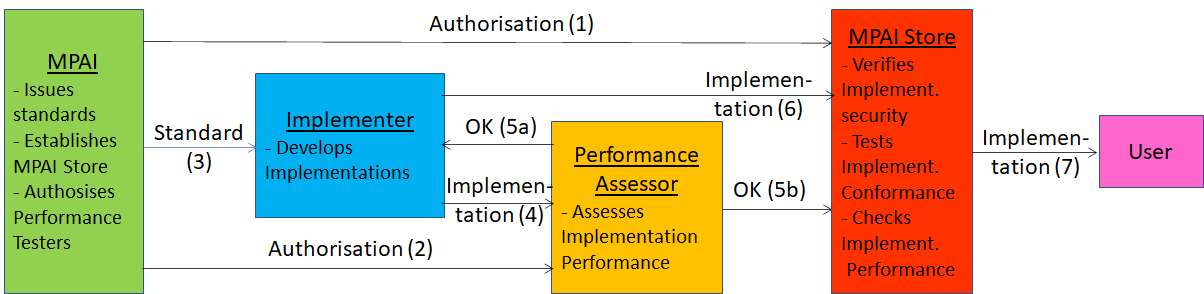

As such standards would be of limited use, MPAI tackles the problem from a different direction. AI-based products and services typically require training. What guarantee does a user have that the box has been properly trained? What if the box has major – intentional or unintentional – performance holes? Reverse engineering an AI-based box is a dauntingly complex problem.

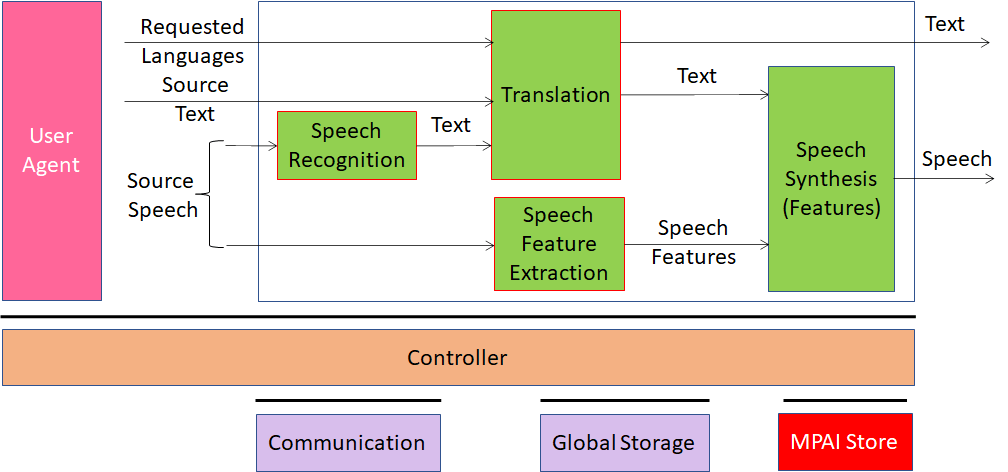

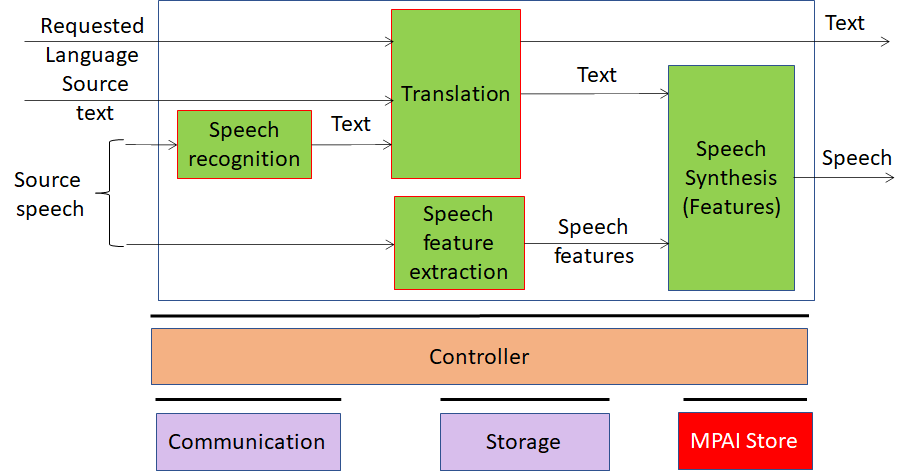

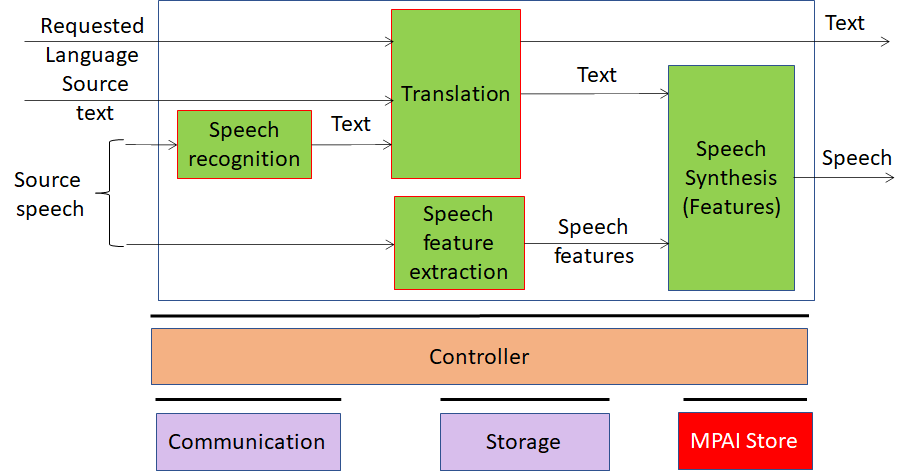

To decrease the complexity of the problem, MPAI splits a complex box into components. Let’s see how MPAI has modelled its Unidirectional Speech Translation (UST) Use Case. Looking at Figure 1, we can see that the UST box contains 4 sub-boxes:

- A Speech Recognition box receiving speech as input and providing text as output.

- A Translation box receiving text either from a user or from the output of Speech Recognition, in addition to a signal telling which is the desired output language.

- A Speech Feature Extraction box able to extract what is specific to the input speech in terms of intonation, emotion, etc.

- A Speech Synthesis box using not only text as input but also the features of the speech input to the UST box.
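
The way the four sub-boxes connect can be sketched in code. This is a minimal illustration and not an MPAI-defined interface: the function signatures, the `SpeechFeatures` fields and the `stub_*` stand-ins are all assumptions made so that the example runs, and real sub-boxes would be trained models working on audio rather than on text disguised as bytes.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SpeechFeatures:
    """Hypothetical container for what is specific to a speaker's utterance."""
    intonation: str
    emotion: str


def unidirectional_speech_translation(
    speech: bytes,
    target_language: str,
    recognise: Callable[[bytes], str],
    translate: Callable[[str, str], str],
    extract_features: Callable[[bytes], SpeechFeatures],
    synthesise: Callable[[str, SpeechFeatures], bytes],
    text: Optional[str] = None,
) -> bytes:
    """Wire the four sub-boxes together in the order described above."""
    # Translation takes text from the user if given, else from Speech Recognition.
    source_text = text if text is not None else recognise(speech)
    translated = translate(source_text, target_language)
    # Features always come from the speech that entered the UST box.
    features = extract_features(speech)
    return synthesise(translated, features)


# Toy stand-ins (assumptions) so that the sketch is runnable.
def stub_recognise(speech: bytes) -> str:
    return speech.decode("utf-8")  # pretend the "audio" is UTF-8 text


def stub_translate(text: str, language: str) -> str:
    return {("hello", "it"): "ciao"}.get((text, language), text)


def stub_extract_features(speech: bytes) -> SpeechFeatures:
    return SpeechFeatures(intonation="flat", emotion="neutral")


def stub_synthesise(text: str, features: SpeechFeatures) -> bytes:
    return f"{text} [{features.emotion}]".encode("utf-8")


result = unidirectional_speech_translation(
    b"hello", "it",
    stub_recognise, stub_translate, stub_extract_features, stub_synthesise,
)
print(result)
```

Passing the sub-boxes in as arguments keeps the wiring separate from any particular implementation of each box.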

MPAI standards are defined at two levels:

- The UST box level, by defining the input and output signals, and the function of the UST box

- The UST sub-box level (MPAI calls the sub-boxes AI Modules – AIMs), by defining the input and output signals, and the function of each AIM.

Figure 1 – The MPAI Unidirectional Speech Translation (UST) Use Case

There are at least two advantages to the MPAI approach:

- It is possible to trace back a specific UST box output to the UST box input that generated it. This is the “information representation” part of MPAI standards.

- It is possible to replace individual AIMs in a UST box because the functions of the AIMs are normatively defined and so is the syntax and semantics of each AIM input and output data. This is the “componentisation” part of MPAI standards.
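
Both advantages can be illustrated with a toy chain of AIMs. This is a sketch under simplifying assumptions: the chain is linear (the Speech Feature Extraction branch is left out), the AIMs are trivial stand-ins, and `run_traced` is a hypothetical helper, not part of any MPAI specification.

```python
from typing import Any, Callable, List, Tuple

Aim = Tuple[str, Callable[[Any], Any]]


def run_traced(aims: List[Aim], data: Any) -> Tuple[Any, List[Tuple[str, Any]]]:
    """Run the AIMs in order and record what each one produced."""
    trace = [("input", data)]
    for name, aim in aims:
        data = aim(data)
        trace.append((name, data))
    return data, trace


# Toy AIMs (assumptions): the "audio" is just UTF-8 text.
def recognise(speech: bytes) -> str:
    return speech.decode("utf-8")


def translate_to_italian(text: str) -> str:
    return {"hello": "ciao"}.get(text, text)


def translate_to_french(text: str) -> str:
    return {"hello": "bonjour"}.get(text, text)


def synthesise(text: str) -> bytes:
    return text.encode("utf-8")


chain = [
    ("SpeechRecognition", recognise),
    ("Translation", translate_to_italian),
    ("SpeechSynthesis", synthesise),
]
output, trace = run_traced(chain, b"hello")

# Replace one AIM with another that has the same input and output types.
swapped = [
    (name, translate_to_french if name == "Translation" else aim)
    for name, aim in chain
]
output_swapped, trace_swapped = run_traced(swapped, b"hello")

for step in trace:
    print(step)
```

The trace shows which AIM produced which intermediate data, and the swap works without touching the other AIMs because the replacement respects the same input and output definitions.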

What guarantee do you have that, by replacing an AIM in an implementation, you still get a working system? The answer is “Conformance Testing”. Each MPAI Technical Specification has a corresponding Conformance Testing specification that you can run to make sure that an implementation of a Use Case or of an AIM is technically correct.
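
To give a flavour of what such a check might look like, here is a minimal sketch. The actual MPAI Conformance Testing specifications define their own procedures and test data; the rules below (the output must be a non-empty string and the AIM must not raise) and the function `check_translation_conformance` are invented for illustration only.

```python
from typing import Callable, Iterable, List, Tuple


def check_translation_conformance(
    aim: Callable[[str, str], object],
    cases: Iterable[Tuple[str, str]],
) -> List[str]:
    """Return a list of failures; an empty list means all checks passed."""
    failures = []
    for text, language in cases:
        try:
            out = aim(text, language)
        except Exception as exc:
            failures.append(f"{text!r}/{language}: raised {type(exc).__name__}")
            continue
        if not isinstance(out, str):
            failures.append(
                f"{text!r}/{language}: got {type(out).__name__}, expected str"
            )
        elif not out:
            failures.append(f"{text!r}/{language}: empty output")
    return failures


# Two toy Translation AIMs (assumptions): one returns text, one returns bytes.
def good_aim(text: str, language: str) -> str:
    return {("hello", "it"): "ciao"}.get((text, language), text)


def bad_aim(text: str, language: str) -> bytes:
    return text.encode("utf-8")  # wrong output type


test_cases = [("hello", "it"), ("hello", "fr")]
good_report = check_translation_conformance(good_aim, test_cases)
bad_report = check_translation_conformance(bad_aim, test_cases)
print(good_report)
print(bad_report)
```

A check of this kind says that the AIM speaks the agreed format; it says nothing about how well the AIM does its job.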

That may not be enough if you also want to know whether the AIMs do a proper job. What if the Speech Feature Extraction AIM has been poorly trained and your interpreted voice does not really sound like your voice?

The MPAI answer to this question is called “Performance Assessment”. Each MPAI Technical Specification has a corresponding Performance Assessment specification that you can run to make sure that an implementation of a Use Case or of an AIM has an acceptable grade of performance.
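
The difference from a format check can be sketched as scoring an AIM against reference data. Everything here is an assumption made for illustration: the exact-match metric, the 0.8 threshold, the tiny dataset and the function `assess_performance` are not taken from any MPAI specification.

```python
from typing import Callable, List, Tuple


def assess_performance(
    aim: Callable[[bytes], str],
    dataset: List[Tuple[bytes, str]],
    threshold: float = 0.8,  # hypothetical acceptance level
) -> Tuple[float, bool]:
    """Score an AIM as the fraction of reference outputs it reproduces exactly."""
    correct = sum(1 for speech, expected in dataset if aim(speech) == expected)
    score = correct / len(dataset)
    return score, score >= threshold


# Toy Speech Recognition AIMs (assumptions): the "audio" is UTF-8 text.
def well_trained(speech: bytes) -> str:
    return speech.decode("utf-8")


def poorly_trained(speech: bytes) -> str:
    return speech.decode("utf-8").upper()  # loses the original casing


reference = [
    (b"hello", "hello"),
    (b"world", "world"),
    (b"Ciao", "Ciao"),
    (b"OK", "OK"),
]
good_score, good_ok = assess_performance(well_trained, reference)
poor_score, poor_ok = assess_performance(poorly_trained, reference)
print(good_score, good_ok)
print(poor_score, poor_ok)
```

Both toy AIMs return well-formed strings, so a format check would accept them both; only the score against reference data separates the well-trained one from the poorly trained one.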

All this is interesting, but when will MPAI standards actually be available? The first standard (planned to be approved by the 12th MPAI General Assembly on 30 September) will support AI-based Company Performance Prediction. You feed the relevant data of a company into a box and you are told the organisation adequacy and the default probability of the company.

There is no better example than this first planned standard to understand that AI boxes cannot be treated like the Oracle of Delphi.