MPAI concludes 2021 by approving new Context-based Audio Enhancement standard

Geneva, Switzerland – 22 December 2021. Today the Moving Picture, Audio and Data Coding by Artificial Intelligence (MPAI) standards developing organisation concluded 2021, its first full year of operation, by approving its fifth standard for publication.

The standards developed and published by MPAI so far are:

- Context-based Audio Enhancement (MPAI-CAE) – approved today – supports four use cases: adding a desired emotion to an emotionless speech segment, preserving old audio tapes, restoring audio segments and improving the audio conference experience.

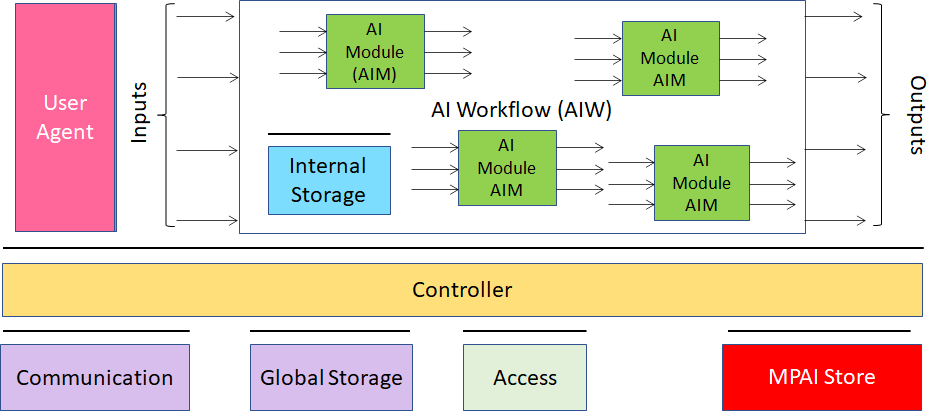

- AI Framework (MPAI-AIF) enables creation and automation of mixed Machine Learning, Artificial Intelligence, Data Processing and inference workflows. The Framework can be implemented as software, hardware, or hybrid software and hardware.

- Compression and Understanding of Industrial Data (MPAI-CUI) gives the financial risk assessment industry new, powerful and extensible means to predict the performance of a company several years into the future.

- Multimodal Conversation (MPAI-MMC) enables advanced forms of human-machine conversation, such as holding an audio-visual conversation with a machine impersonated by a synthetic voice and an animated face; requesting and receiving information via speech about a displayed object; and interpreting speech into one, two or many languages using a synthetic voice that preserves the features of the human speech.

- Governance of the MPAI Ecosystem (MPAI-GME) lays down the rules governing an ecosystem of implementers and users of secure MPAI standard implementations whose Conformance and Performance are guaranteed and which are accessible through the not-for-profit MPAI Store.

The book “Towards Pervasive and Trustworthy Artificial Intelligence” illustrates the results MPAI has achieved in its first 15 months of operation and its plans for the next 12 months.

MPAI is currently working on several other standards, some of which are:

- Server-based Predictive Multiplayer Gaming (MPAI-SPG) uses AI to train a network that compensates for data losses and detects false data in online multiplayer gaming.

- AI-Enhanced Video Coding (MPAI-EVC) is a candidate MPAI standard that improves existing video coding tools with AI and targets short-to-medium-term applications.

- End-to-End Video Coding (MPAI-EEV) is a recently launched MPAI exploration that promises a fuller exploitation of the potential of AI in a longer-term time frame than MPAI-EVC.

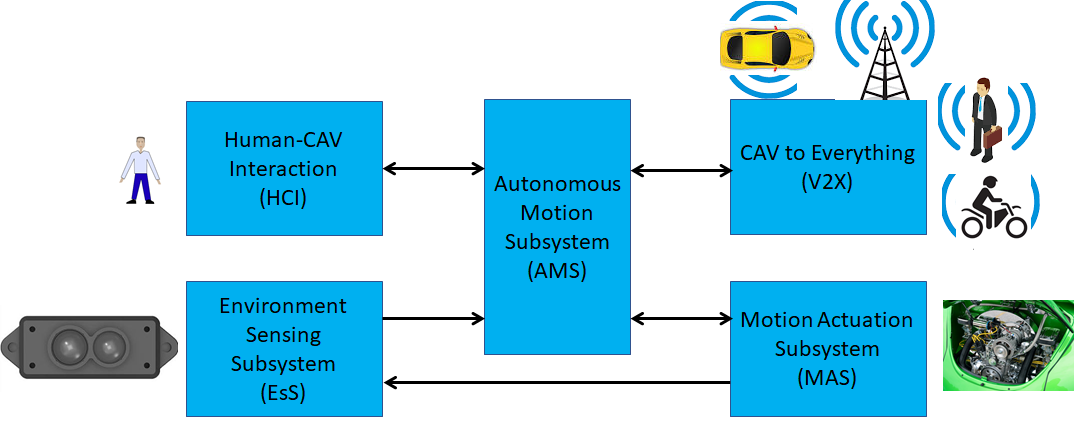

- Connected Autonomous Vehicles (MPAI-CAV) uses AI in key features: Human-CAV Interaction, Environment Sensing, Autonomous Motion, CAV to Everything and Motion Actuation.

- Mixed Reality Collaborative Spaces (MPAI-MCS) creates AI-enabled mixed-reality spaces populated by streamed objects such as avatars, other objects and sensor data, and their descriptors for use in meetings, education, biomedicine, science, gaming and manufacturing.

MPAI develops data coding standards for applications that have AI as the core enabling technology. Any legal entity supporting the MPAI mission may join MPAI if able to contribute to the development of standards for the efficient use of data.

Visit the MPAI website and contact the MPAI secretariat for specific information.