2022/07/25

MPAI calls for technologies supporting three new standards

By issuing three new Calls for Technologies at its 22nd General Assembly (2022/07/19), on AI Framework Version 2, Multimodal Conversation Version 2, and Neural Network Watermarking, MPAI is starting a new round of standards.

Read here how we got to this point and what will happen next by reviewing how MPAI processes ideas for a standard.

What is AI Framework Version 2?

MPAI’s AI Framework (MPAI-AIF) specifies architecture, interfaces, protocols, and Application Programming Interfaces (API) of the MPAI AI Framework (AIF), an environment specially designed for execution of AI-based implementations, but also suitable for mixed AI and traditional data processing workflows.

The main features of MPAI-AIF V1 are:

- Operating System independence.

- Modular component-based architecture with specified interfaces.

- Abstraction from the development environment by encapsulating component interfaces.

- Interface with the MPAI Store enabling access to validated components.

- Component implementable as software, hardware or mixed hardware-software.

- Component execution in local and distributed Zero-Trust architectures, interacting with other implementations operating in proximity.

- Support of Machine Learning functionalities.

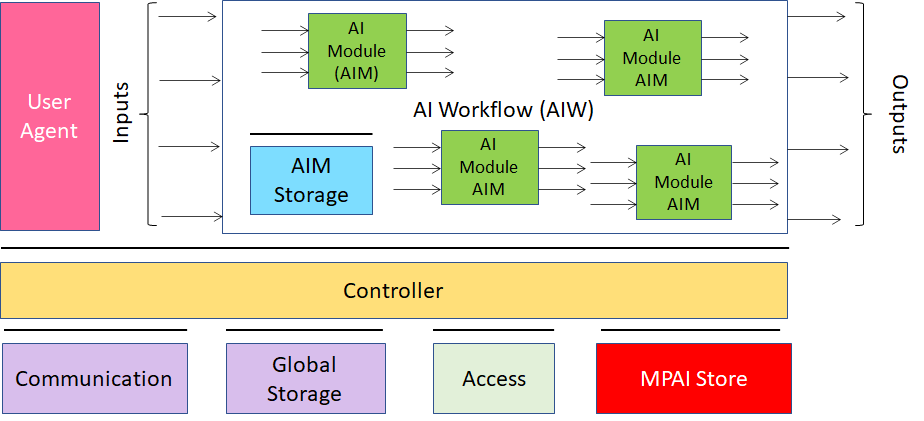

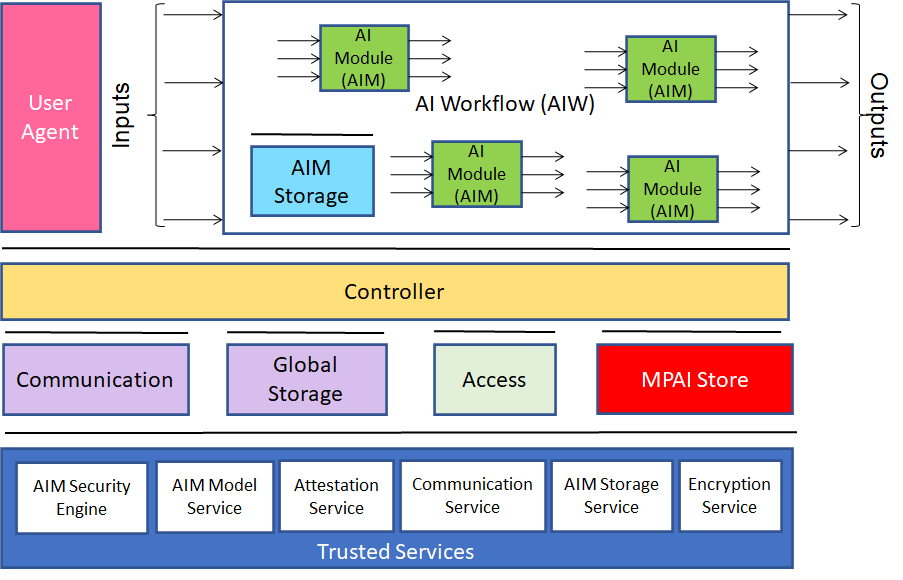

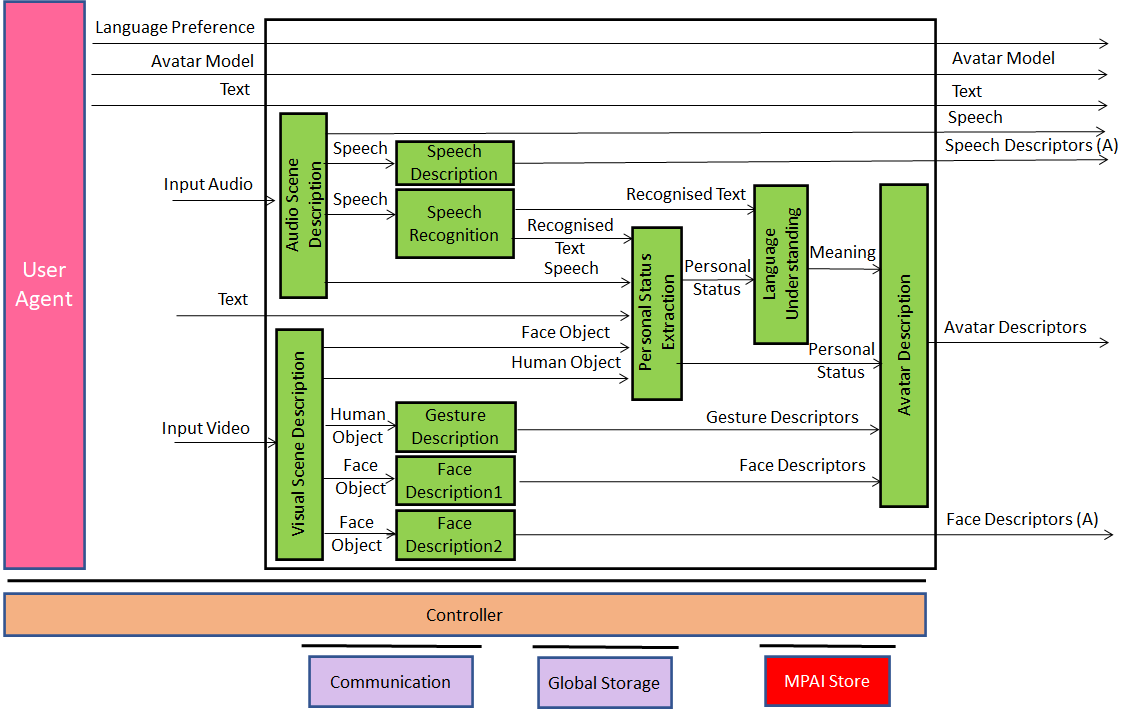

Figure 1 represents the Reference Models of MPAI-AIF V1 and V2.


Figure 1 – Reference Models of MPAI-AIF V1 and V2

As MPAI-AIF V1 does not provide the trusted services needed to implement a Trusted Zone, the AIF-V2 Call identifies a set of functional requirements enabling AIF Components to access trusted services via APIs:

- AIM Security Engine.

- Trusted AIM Model Services.

- Attestation Service.

- Trusted Communication Service.

- Trusted AIM Storage Service.

- Encryption Service.

The AIF Components shall be able to call Trusted Services APIs after establishing the developer-specified security regime based on the following requirements:

1. The AIF Components shall access high-level implementation-independent Trusted Services APIs to handle:

   - Encryption Service.
   - Attestation Service.
   - Trusted Communication Service.
   - Trusted AIM Storage Service including the following functionalities:
     - AIM Storage Initialisation (secure and non-secure flash and RAM).
     - AIM Storage Read/Write.
     - AIM Storage Release.
   - Trusted AIM Model Services including the following functionalities:
     - Secure and non-secure Machine Learning Model Storage.
     - Machine Learning Model Update (i.e., full or partial update of the weights of the Model).
     - Machine Learning Model Validation (i.e., verification that the model is the one that is expected to be used and that the appropriate rights have been acquired).
   - AIM Security Engine including the following functionalities:
     - Machine Learning Model Encryption.
     - Machine Learning Model Signature.
     - Machine Learning Model Watermarking.

2. The AIF Components shall be easily integrated with the above Services.

3. The AIF Trusted Services shall be able to use hardware and OS security features already existing in the hardware and software of the environment in which the AIF is implemented.

4. Application developers shall be able to select the application’s security by either or both of:

   - Security level including a defined set of security features for each level, i.e., APIs available either to select individual security services or to select a standard security level available in the implementation.
   - Developer-defined security, i.e., a combination of a developer-defined set of security features.

5. The specification of the AIF V2 Metadata shall extend the AIF V1 Metadata to support security with standardised levels, a developer-defined combination of security features, or both.

6. MPAI welcomes the submission of use cases and their respective threat models.
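The requirement that developers select security by standard level, by a developer-defined feature set, or by both can be illustrated with a minimal Python sketch. Everything named here is an assumption made for illustration: the `Feature` flags simply mirror the six Trusted Services listed above, and the level names and their contents (`basic`, `attested`, `full`) are hypothetical, since the Call does not define them.

```python
from dataclasses import dataclass
from enum import Flag, auto
from typing import Optional


class Feature(Flag):
    """Hypothetical flags, one per Trusted Service named in the Call."""
    ENCRYPTION = auto()
    ATTESTATION = auto()
    TRUSTED_COMMUNICATION = auto()
    TRUSTED_AIM_STORAGE = auto()
    TRUSTED_AIM_MODEL = auto()
    AIM_SECURITY_ENGINE = auto()


ALL_FEATURES = (
    Feature.ENCRYPTION
    | Feature.ATTESTATION
    | Feature.TRUSTED_COMMUNICATION
    | Feature.TRUSTED_AIM_STORAGE
    | Feature.TRUSTED_AIM_MODEL
    | Feature.AIM_SECURITY_ENGINE
)

# Hypothetical standard levels; their names and contents are assumptions.
STANDARD_LEVELS = {
    "basic": Feature.ENCRYPTION,
    "attested": Feature.ENCRYPTION | Feature.ATTESTATION,
    "full": ALL_FEATURES,
}


@dataclass(frozen=True)
class SecurityRegime:
    """Security chosen by level, by developer-defined features, or both."""
    level: Optional[str] = None
    custom: Feature = Feature(0)

    def enabled(self) -> Feature:
        # Union of the standard level (if any) and the developer-defined set.
        base = STANDARD_LEVELS[self.level] if self.level is not None else Feature(0)
        return base | self.custom

    def allows(self, feature: Feature) -> bool:
        return feature in self.enabled()


# A standard level extended with one developer-defined feature.
regime = SecurityRegime(level="basic", custom=Feature.TRUSTED_AIM_STORAGE)
print(regime.allows(Feature.ENCRYPTION))   # True
print(regime.allows(Feature.ATTESTATION))  # False
```

A component could consult such a regime before calling a Trusted Service; how the actual AIF V2 Metadata will express this is for the responses to the Call to determine.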

Those wishing to get additional information on the MPAI-AIF V2 Call for Technologies should read/view:

- The “About MPAI-AIF” web page provides some general information about MPAI-AIF.

- The 1 min 20 sec video (YouTube) and video (non-YouTube) give a concise illustration of the MPAI-AIF V2 Call for Technologies.

- The slides and the video recording (YouTube, non-YouTube) of the 11 July online presentation give a complete overview of MPAI-AIF V2.

- The 3 documents Call for Technologies, Use Cases and Functional Requirements, and Framework Licence are a “must read” for those who intend to respond to the MPAI-AIF V2 Call for Technologies.

Responses to the Call for Technologies shall be received by the MPAI secretariat by 10 October 2022 at 23:39 UTC.

MPAI-MMC V2 for better human-machine conversation

Human-machine conversation is an exciting and promising area of research. Indeed, a smart conversation can get more from a machine just like a smart conversation can get more from a human.

Multimodal Conversation Version 1 (MPAI-MMC V1) provided a set of standard technologies based on some major simplifying assumptions:

- The machine has available the face and the speech of just one human, possibly holding an object and speaking their language.

- The machine extracts emotional information from the text, face, and speech of the human to better tune its response.

- The machine manifests itself as a speaking avatar capable of displaying emotion in its face and speech.

In MPAI-MMC V2 the assumptions are significantly more demanding:

- More than one human may be talking with the machine.

- The machine must separate and locate humans (“human objects”) in the real world.

- The machine extracts speech, face, and gesture from human objects.

- The machine ascertains the identity of the humans with whom it is conversing.

- The machine extracts Personal Status, information internal to a user that comprises Emotion, Cognitive State, and Attitude, from text, speech, face, and gesture.

- The machine interacts with humans and avatars.

- The machine manifests itself as a speaking avatar capable of animating face and gesture.

- The avatar can be an accurate replica of a human and may operate in a virtual environment.
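To make the notion of Personal Status concrete, here is a minimal Python sketch of a record holding its three factors (Emotion, Cognitive State, Attitude), with one estimate per modality (text, speech, face, gesture). The label values and the majority-vote fusion rule are assumptions made for illustration only; the Call asks respondents to propose the actual representation and extraction technologies.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict

MODALITIES = ("text", "speech", "face", "gesture")
FACTORS = ("emotion", "cognitive_state", "attitude")


@dataclass(frozen=True)
class PersonalStatus:
    """The three factors of Personal Status; label values are hypothetical."""
    emotion: str
    cognitive_state: str
    attitude: str


def fuse(estimates: Dict[str, PersonalStatus]) -> PersonalStatus:
    """Combine per-modality estimates by majority vote on each factor.

    Majority voting is an illustrative choice, not a method taken from MPAI.
    """
    if not estimates:
        raise ValueError("at least one modality estimate is required")
    unknown = set(estimates) - set(MODALITIES)
    if unknown:
        raise ValueError(f"unknown modalities: {sorted(unknown)}")

    def vote(factor: str) -> str:
        labels = Counter(getattr(e, factor) for e in estimates.values())
        return labels.most_common(1)[0][0]

    return PersonalStatus(*(vote(factor) for factor in FACTORS))


estimates = {
    "text": PersonalStatus("happy", "confident", "polite"),
    "speech": PersonalStatus("happy", "confused", "polite"),
    "face": PersonalStatus("sad", "confident", "polite"),
}
print(fuse(estimates))
```

Keeping the per-modality estimates separate until a final fusion step lets a machine still respond when one modality (for example, gesture) is unavailable.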

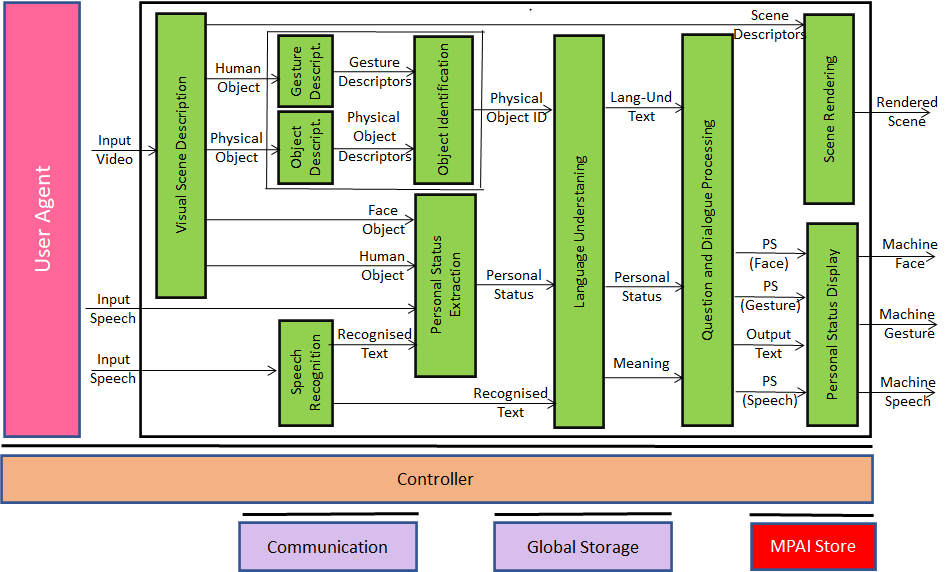

The functional requirements of the technologies enabling MPAI-MMC V2 have been driven by three use cases. In the first, Conversation About a Scene (CAS), the machine talks to a human who is part of a scene composed of several objects and who uses gesture to indicate the object s/he refers to. The machine creates a scene representation with separated and located humans and objects, uses the human’s extracted personal status to understand how satisfied s/he is with the conversation, and produces textual responses with associated personal statuses that are used to synthesise and animate a speaking avatar.


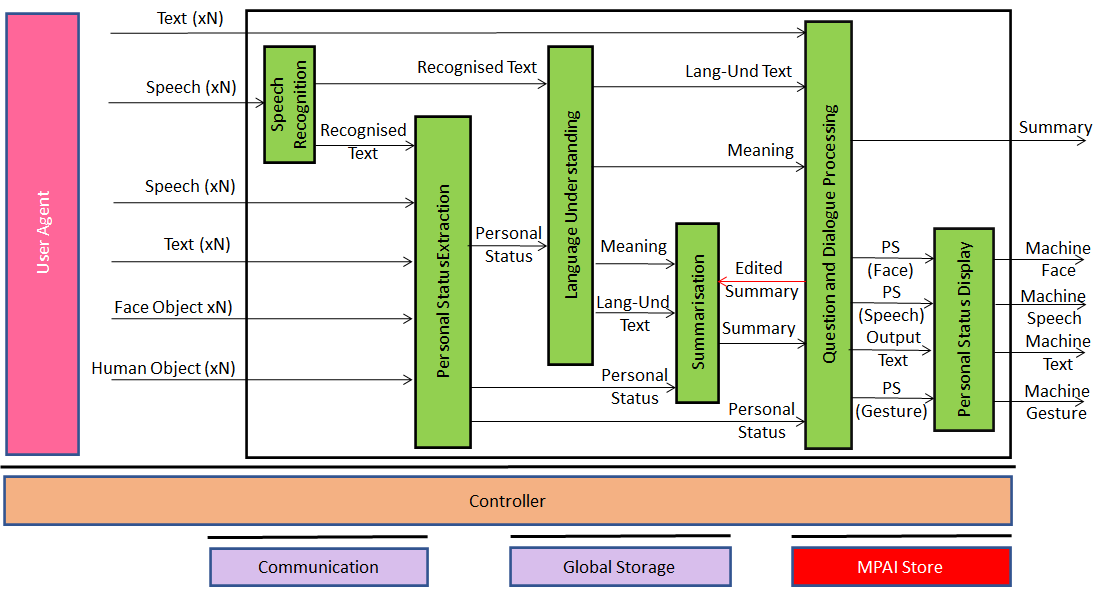

Figure 2 – Conversation About a Scene and Human-CAV Interaction use case

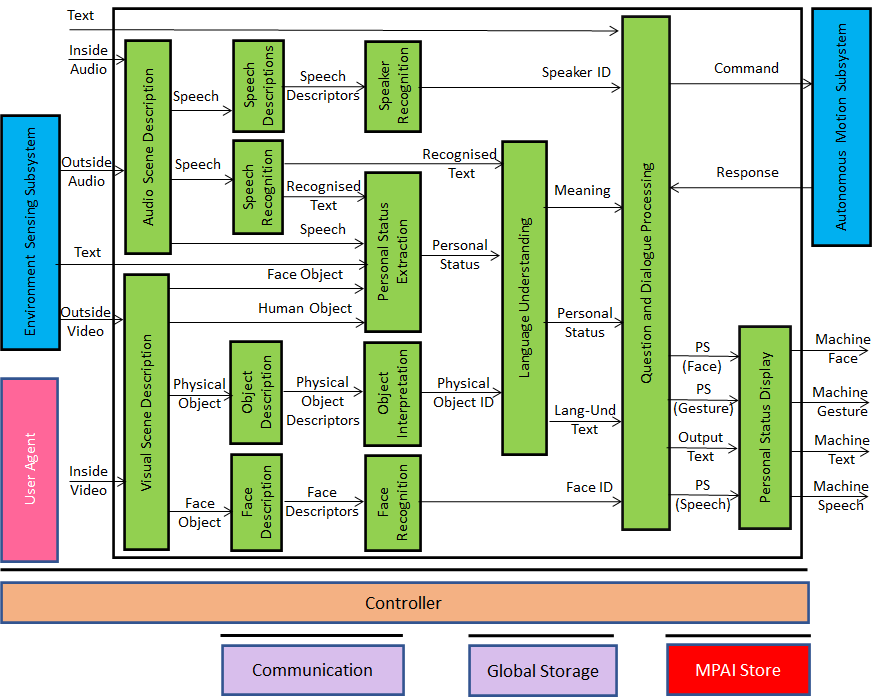

In the second Human-CAV Interaction use case (CAV stands for Connected Autonomous Vehicle), the machine (CAV) deals with a group of humans wishing to be taken somewhere. The CAV individually separates the humans, removes unnecessary background, separates and locates the individual speech sources, and recognises humans by extracting individual speech and face components. When the humans are inside the cabin, they converse among themselves and with the CAV. For an effective conversation, the CAV extracts the personal statuses of the humans and manifests itself as an avatar also displaying personal status.

In the third Avatar-Based Videoconference use case, groups of geographically dispersed humans participate in a virtual videoconference through avatars that accurately represent their appearance and movements. A virtual secretary summarises what the avatars are saying using their speech and personal status, and manifests itself as a speaking avatar. Each human participant has access to the individual human objects and places them around a virtual table with the appropriate speech. MPAI has already secured the technology to locate speech objects in an audioconference from its Context-based Audio Enhancement (MPAI-CAE) standard. However, it now needs technologies that enable a party to reproduce environments, avatar models and their animations without having access to additional information from the party that generated the data. In other words, MPAI needs technology allowing the import of an environment/avatar from metaverse1 into metaverse2 without the need to access additional information from the party in metaverse1.


Figure 3 – Avatar-Based Videoconference use cases: transmitting client and virtual secretary

There are many more technologies identified and characterised in the MPAI-MMC V2 Call for Technologies. Those wishing to get additional details should read/view:

- The “About MPAI-MMC” web page gives general information about MPAI-MMC V1 and V2.

- The 2 min video (YouTube) and video (non-YouTube) give a concise illustration of MPAI-MMC V2.

- The slides and video recording (YouTube, non-YouTube) of the 12 July online presentation give a complete overview of MPAI-MMC V2.

- The 3 documents Call for Technologies, Use Cases and Functional Requirements, and Framework Licence are a “must read” for those who intend to respond to the MPAI-MMC V2 Call for Technologies.

Responses to the Call for Technologies shall be received by the MPAI secretariat by 10 October 2022 at 23:39 UTC.

Neural Network Watermarking

Watermarking is an established technique used to mark an asset, such as a banknote, e.g., to guarantee its authenticity. Digital media has made good use of watermarking, e.g., by injecting information conveying the identity of the owner and the identifier of the digital asset. Neural Networks are digital assets that often result from a training process that may have involved millions of records, with investments sometimes exceeding tens or even hundreds of thousands of dollars. Watermarking can thus be a useful technique to achieve some of the goals already achieved with digital media, and possibly others.

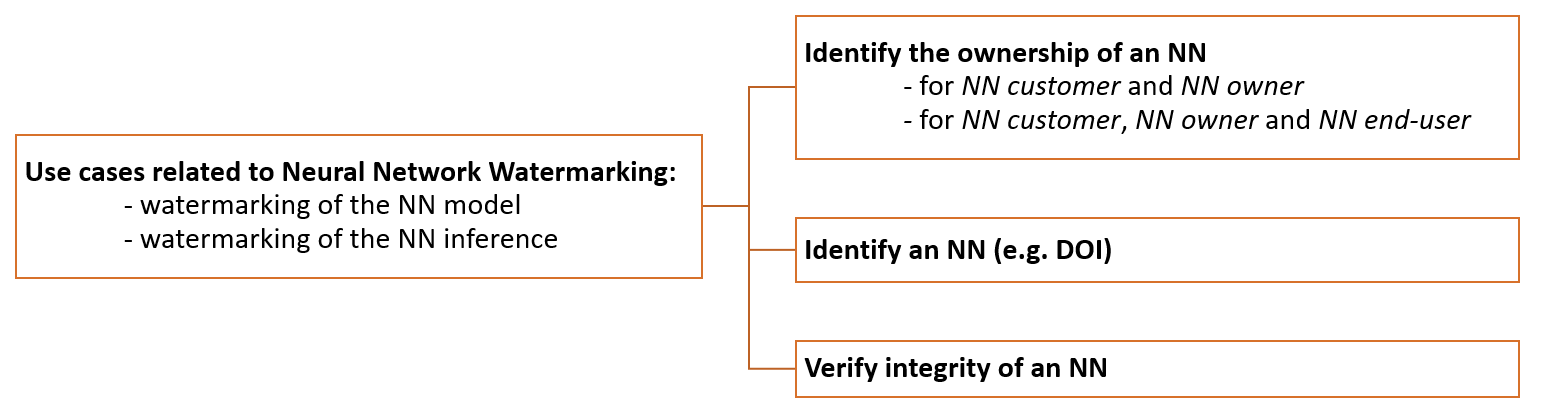

Figure 4 classifies the possible uses of watermarking in a neural network context.

Figure 4 – Use cases of neural network watermarking

MPAI has something to say about the use of watermarking in a neural network. For sure, it is not about providing a “standard” watermarking technology, but then what is it?

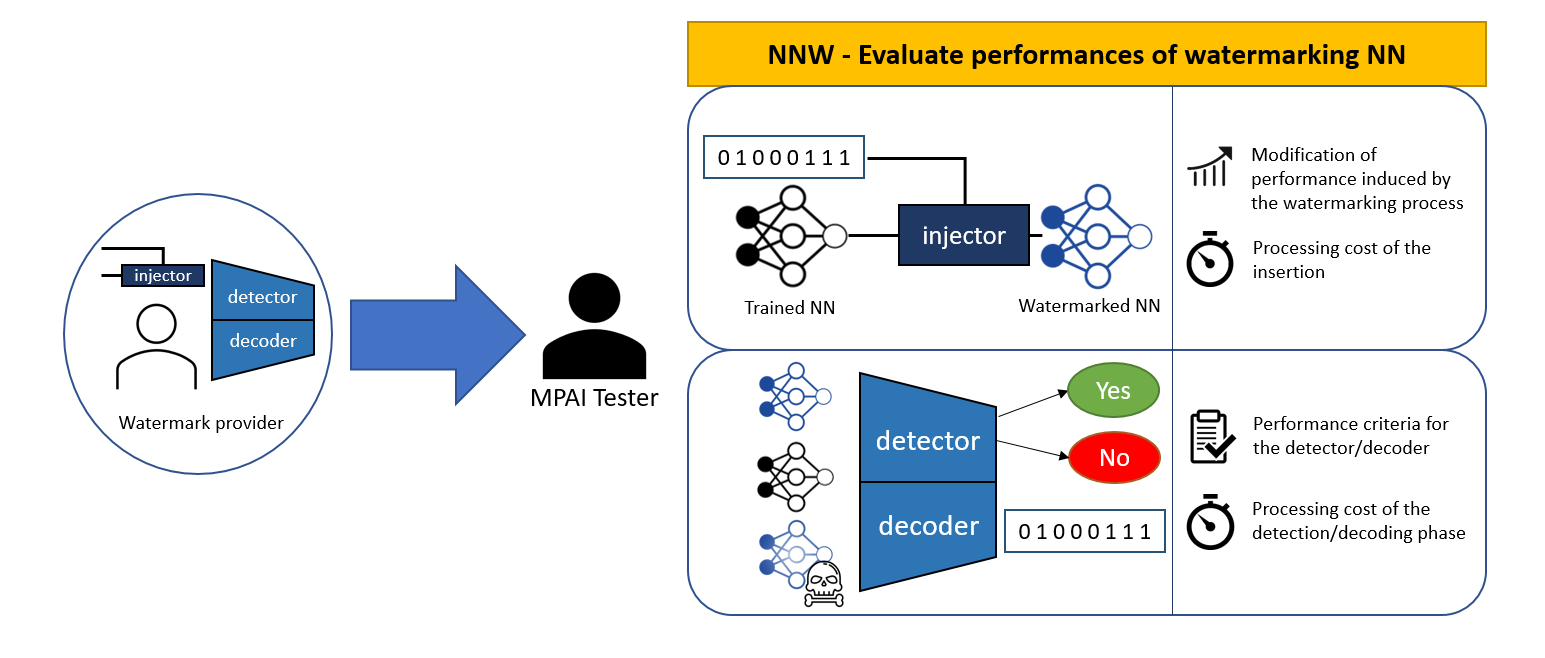

The purpose of the MPAI Neural Network Watermarking (MPAI-NNW) standard is to enable a watermarking technology user to qualify the technology. Thus, MPAI-NNW will provide the means to measure, for a given size of the watermarking payload, the ability of:

- The watermark inserter to inject a payload without deteriorating the performance of the Neural Network. This requires, for a given application domain:
  - A testing dataset for the watermarked and unwatermarked NN.
  - A methodology to assess any performance change caused by the watermark.

- The watermark detector to ascertain the presence of, and the watermark decoder to retrieve, the watermarking payload applied to:
  - A watermarked network that has been subsequently modified (e.g., by transfer learning or pruning).
  - An inference of the modified model.

  This item requires, for a given application domain:
  - Performance criteria for the watermark detector or decoder, e.g., relative numbers of missed detections and false alarms, or percentage of the retrieved payload.
  - A list of potential modification types expected to be applied to the watermarked NN, as well as their ranges (e.g., random pruning at 25%).

- The watermark inserter to inject a payload at a quantifiable computational cost, e.g., the execution time for a given processing environment.

- The watermark detector/decoder to detect/decode a payload from a watermarked model or any of its inferences, at a quantifiable computational cost, e.g., the execution time for a given processing environment.

Figure 5 – Some MPAI-NNW technologies
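Two of the measurements listed above, the percentage of payload retrieved after a modification and the execution time of inserter and decoder, can be sketched in a few lines of Python. The watermarking scheme here (encoding each payload bit in the sign of a key-selected weight) is a toy stand-in chosen only so that the example runs; it is not an MPAI technology, and MPAI-NNW does not standardise a watermarking method. The function names, key, and seeds are likewise assumptions. The sketch does not cover the assessment of performance change on a testing dataset.

```python
import random
import time
from typing import Callable, List, Sequence, Tuple


def _positions(n_weights: int, n_bits: int, key: int) -> List[int]:
    # The secret key seeds the choice of which weights carry the payload.
    return random.Random(key).sample(range(n_weights), n_bits)


def embed(weights: Sequence[float], payload: Sequence[int], key: int) -> List[float]:
    """Toy inserter: a positive sign encodes 1, a negative sign encodes 0."""
    marked = list(weights)
    for pos, bit in zip(_positions(len(weights), len(payload), key), payload):
        magnitude = abs(marked[pos]) or 1e-3  # avoid an unreadable zero weight
        marked[pos] = magnitude if bit else -magnitude
    return marked


def decode(weights: Sequence[float], n_bits: int, key: int) -> List[int]:
    """Toy decoder: read the sign back; a zeroed weight decodes as 0."""
    return [1 if weights[pos] > 0 else 0
            for pos in _positions(len(weights), n_bits, key)]


def random_prune(weights: Sequence[float], ratio: float, seed: int) -> List[float]:
    """Set a random fraction `ratio` of the weights to zero."""
    pruned = list(weights)
    count = int(ratio * len(pruned))
    for pos in random.Random(seed).sample(range(len(pruned)), count):
        pruned[pos] = 0.0
    return pruned


def retrieved_fraction(sent: Sequence[int], received: Sequence[int]) -> float:
    return sum(s == r for s, r in zip(sent, received)) / len(sent)


def timed(func: Callable, *args) -> Tuple[object, float]:
    """Return the function result and its wall-clock execution time."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start


rng = random.Random(0)
weights = [rng.gauss(0.0, 1.0) for _ in range(1000)]  # stand-in for a model
payload = [rng.randint(0, 1) for _ in range(64)]

marked, embed_seconds = timed(embed, weights, payload, 42)
pruned = random_prune(marked, 0.25, 7)  # the "random pruning at 25%" case
decoded, decode_seconds = timed(decode, pruned, len(payload), 42)

print(f"retrieved after pruning: {retrieved_fraction(payload, decoded):.0%}")
print(f"embed {embed_seconds:.6f} s, decode {decode_seconds:.6f} s")
```

Repeating the run over several modification types and ranges, for a fixed payload size, would give the kind of table a user could use to compare watermarking technologies.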

The MPAI-NNW Use Cases and Functional Requirements identify what the MPAI-NNW standard should do to enable users to qualify a neural network watermarking technology.

The requested technologies are identified and characterised in the MPAI-NNW Use Cases and Functional Requirements. Those wishing to get additional information should read/view:

- The “About MPAI-NNW” web page gives general information about MPAI-NNW.

- The 1 min 30 sec video (YouTube) and video (non-YouTube) give a concise illustration of MPAI-NNW.

- The slides and video recording (YouTube, non-YouTube) of the 12 July online presentation give a complete overview of MPAI-NNW.

- The 3 documents Call for Technologies, Use Cases and Functional Requirements, and Framework Licence are a “must read” for those who intend to respond to the MPAI-NNW Call for Technologies.

Responses to the Call for Technologies shall be received by the MPAI secretariat by 10 October 2022 at 23:39 UTC.

The MPAI standards development process

If an idea of a standard is accepted, MPAI seeks individuals willing to work on the proposed standard, identifies use cases where the standard could play a role and develops functional requirements for the required technologies.

In this phase, non-members can participate in MPAI online meetings, contribute use cases, and propose functional requirements.

When the Use Cases and Functional Requirements document is stable, MPAI principal members develop the framework licence, i.e., a licence without critical data such as prices, percentages, dates, etc., and MPAI issues a Call for Technologies with attached Functional and Commercial Requirements.

Of the 3 Calls for Technologies approved by MPAI-22, AI Framework and Multimodal Conversation seek to extend already approved standards, while Neural Network Watermarking concerns a new area.

Respondents to the Calls shall submit their proposals by the 10th of October 2022 and present them at a time decided by the 25th General Assembly. A respondent who is not an MPAI member and has (a part of) their submission accepted for inclusion in the standard shall join MPAI or the proposal will be rejected and the technology not included in the standard.

Accepted responses will be used to develop the standard. Approval and publication are expected to happen in spring 2023.

Meetings in the coming July-August meeting cycle

Non-MPAI members may join the meetings in italics. If interested, please contact the MPAI secretariat.

| Group name | 25-29 July | 1-5 August | 8-12 August | 15-19 August | 22-26 August | Time (UTC) |
|---|---|---|---|---|---|---|
| AI Framework | 25 | 1 | 8 | 15 | 22 | 15 |
| Governance of MPAI Ecosystem | | | | | | 14 |
| | 25 | 1 | 8 | 15 | 22 | 16 |
| Multimodal Conversation | 26 | 2 | 9 | 16 | 23 | 14 |
| Neural Network Watermarking | | | | | | 13 |
| | 26 | | | 16 | 23 | 15 |
| Context-based Audio Enhancement | | | 9 | 16 | 23 | 16 |
| XR Venues | 26 | 2 | 9 | 16 | 23 | 17 |
| Connected Autonomous Vehicles | 27 | 3 | 10 | 17 | 24 | 12 |
| AI-Enhanced Video Coding | | | | | | 13 |
| | | 3 | | 17 | | 14 |
| AI-based End-to-End Video Coding | 27 | | 10 | | 24 | 14 |
| Avatar Representation and Animation | 28 | 4 | 11 | 18 | 25 | 13:30 |
| Server-based Predictive Multiplayer Gaming | 28 | | 11 | 18 | | 14:30 |
| Communication | 28 | 4 | 11 | 18 | | 15 |
| Artificial Intelligence for Health Data | | 5 | | | 26 | 14 |
| Industry and Standards | | 5 | | 19 | | 16 |

This newsletter serves the purpose of keeping the expanding, diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.