Three MPAI standards now available from IEEE

Meetings in the coming May-June meeting cycle

MPAI announces the publication of three MPAI standards adopted by IEEE without modification

MPAI is carrying out many activities. This news item briefly describes those leading to a standard; other activities will be reported in the next issue of this newsletter.

The AI Framework Development Committee (AIF-DC) has already produced the MPAI-AIF Technical Specification for an environment called AI Framework (AIF) that executes chains of AI Modules (AIMs) organised in AI Workflows (AIWs). The reference software, written in C for the Zephyr OS, is available online. MPAI-AIF has been adopted without modification by IEEE as IEEE 3301-2022.

Currently, the group meets every Monday at 15 UTC to develop version 2 of the MPAI-AIF Technical Specification, which is intended to support developers who need strong security in their execution environments. The reference software is being developed together with the Technical Specification. In the future, the group will complete the development of the MPAI-AIF Conformance Testing specification.

The Multimodal Conversation Development Committee (MMC-DC) has already produced the MPAI-MMC Technical Specification. It includes five use cases: “Conversation with Emotion”, where a human converses with a machine that estimates the human’s emotion and is impersonated by an avatar with a synthetic voice and an animated face; “Multimodal Question Answering”, where a human requests information about a displayed object; and three different arrangements of speech translation that preserve the emotion of the original speech in the translation. MPAI-MMC has been adopted without modification by IEEE as IEEE 3300-2022.

Currently, the group meets every Tuesday at 14 UTC to develop version 2 of the Technical Specification. This will include the following use cases: “Conversation About a Scene”, where a human pointing at objects scattered in a room converses with a machine that displays its Personal Status in speech, face, and gesture; “Human-Connected Autonomous Vehicle Interaction”, where humans, after being identified by the machine through their speech and face in outdoor and indoor conditions, converse with a machine that displays its Personal Status in speech, face, and gesture; and “Virtual Secretary for Videoconference”, where an avatar in a virtual conference of virtual humans makes and displays a summary of what the other avatars say, receives and interprets comments using the avatars’ utterances and Personal Statuses, and displays the edited summary.

MPAI-MMC version 2 also includes the specification of three composite AIMs. Personal Status Extraction analyses the Personal Status conveyed by the Text, Speech, Face, and Gesture of a human or an avatar and provides an estimate of that Personal Status. Personal Status Display receives Text and Personal Status and generates an avatar that produces the Text and utters Speech with the intended Personal Status, while the avatar’s Face and Gesture also show the intended Personal Status. Spatial Object Identification provides the Identifier of a Physical Object in an Environment that a human indicates by pointing with a finger.

In the future, the group will complete the development of Reference Software, Conformance Testing, and Performance Assessment.

The Neural Network Watermarking Development Committee (NNW-DC) has already produced the MPAI-NNW Technical Specification, which specifies methodologies to evaluate the following aspects of a neural network watermarking technology: the impact on the performance of a watermarked neural network and/or on its inference; the ability of a neural network watermarking detector/decoder to detect/decode a payload when the watermarked neural network has been modified; and the computational cost of injecting, detecting, or decoding a payload in the watermarked neural network. The Reference Software has already been developed and is available online.

MPAI-NNW is being processed by IEEE for adoption without modification.

Currently, the group meets every Tuesday at 15 UTC to develop a version of the Reference Software that can be executed in the AIF.

The Context-based Audio Enhancement Development Committee (CAE-DC) has already produced two versions of the MPAI-CAE Technical Specification. The specification supports the following use cases: Emotion Enhanced Speech (EES), Audio Recording Preservation (ARP), Speech Restoration System (SRS), and Enhanced Audioconference Experience (EAE). EES allows a user to obtain a version of an emotionless speech segment pronounced as in a model sentence or with an emotion selected from a list of standard emotions; ARP facilitates the preservation of audio tape reels; SRS allows the replacement of a missing speech segment in a corpus when its text is known; and EAE improves the experience of audioconference participants by providing a description of the audio components in a room.

CAE-DC has recently completed version 2 of MPAI-CAE. It specifies a composite AIM that creates an audio scene description of an indoor or outdoor scenario and applies it to the interface between humans and a connected autonomous vehicle.

Currently, the group meets every Tuesday at 16 UTC to develop the Reference Software and Conformance Testing specifications and, in the future, the Performance Assessment specification. The group is also starting a new activity, in which participation will be open to interested people.

The Avatar Representation and Animation (ARA) group of the Requirements Standing Committee meets every Thursday at 13:30 to develop the Avatar Representation and Animation Technical Specification (MPAI-ARA). The goal is to specify the representation of body and face (model and descriptors), the format for transmitting the descriptors, the synthesis of body and face from descriptors, and the interpretation of body and face.

The MPAI Metaverse Model (MMM) group of the Requirements Standing Committee has produced two Technical Reports: MMM Functionalities and MMM Functionality Profiles. The former identifies the functionalities that an M-Instance may be requested to provide to its users; the latter develops several foundational elements of metaverse standardisation, namely the functional operation model; Actions, Items, and Data Types; Use Cases; and Functionality Profiles.

Currently, the group meets every Friday at 15:00 to develop the Metaverse API and Architecture. A Call for Technologies will be published soon.

Three MPAI standards now available from IEEE

Multimodal Conversation (MPAI-MMC), AI Framework (MPAI-AIF), and Context-based Audio Enhancement (MPAI-CAE) are now available as IEEE standards IEEE 3300, 3301, and 3302, respectively.

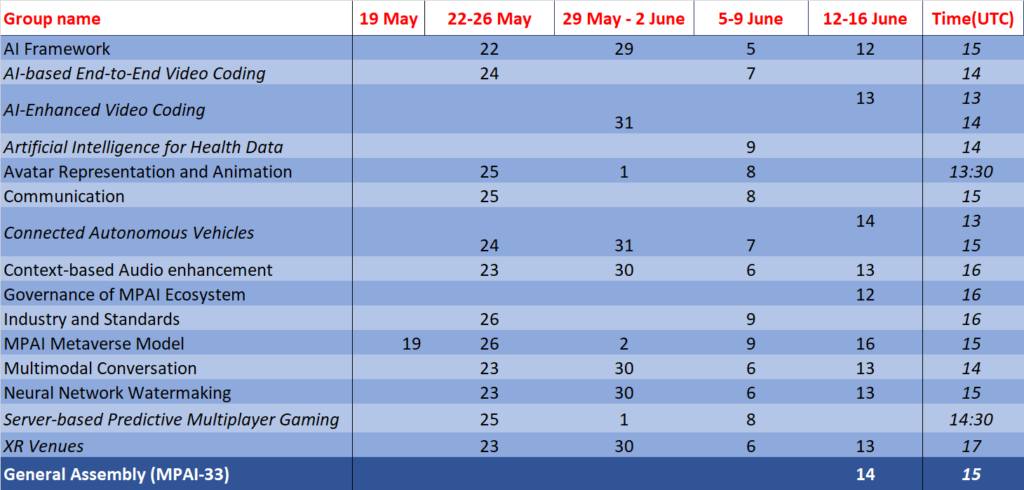

Meetings in the coming May-June meeting cycle

Non-MPAI members may join the meetings given in italics in the table below. If interested, please contact the MPAI secretariat.