In this issue:

MPAI-NNW ready for integration with your AI-based processing workflows

A standard to facilitate live multisensory immersive performances

More choices for MPAI standard users

Meetings in the coming October meeting cycle

MPAI-NNW ready for integration with your AI-based processing workflows

The MPAI-NNW standard was released early this year to provide the industry with a common methodology to evaluate the impact of watermarking in neural networks.

With its second version, MPAI-NNW leverages the MPAI-AIF standard to upgrade conventional AI-based processing workflows with the traceability and integrity-checking functions that NN watermarking provides.

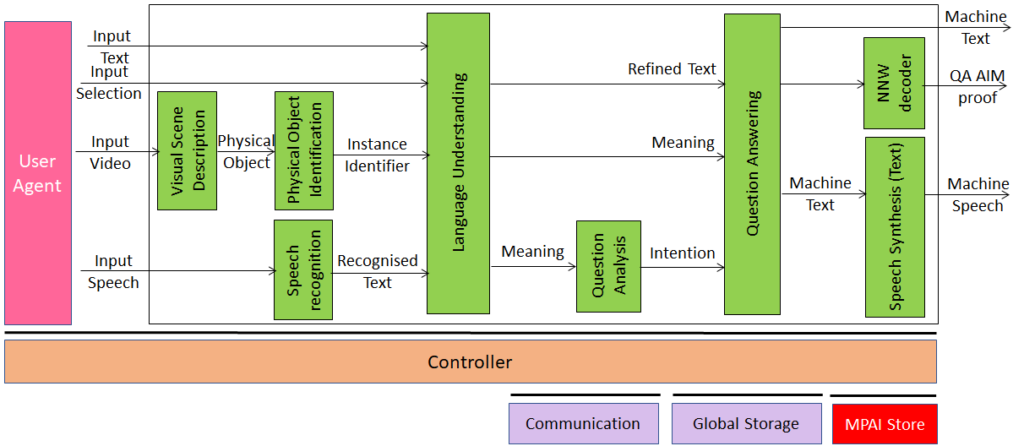

Figure 1 shows the Multimodal Question-Answering use case where the “QA AIM proof” output (QA AIM stands for Question-Answering AIM) produced by NNW decoder can detect whether a particular machine text generated by a Question Answering module was indeed produced by the expected service or device.

Join the online presentation on 2023:11:14, 15:00 UTC

figure 1

Join MPAI, Moving Picture, Audio and Data Coding by Artificial Intelligence (http://mpai.community/)

A standard to facilitate live multisensory immersive performances

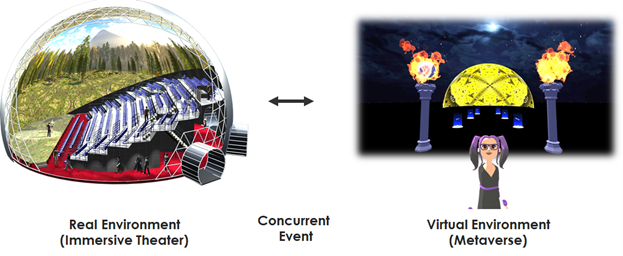

The MPAI community is now focused on developing standards for XR Venues – particularly venues supporting live theatrical performances where the user experience spans both real and virtual environments.

The purpose of the planned MPAI-XRV – Live Theatrical Stage Performance standard is to address AI functions that facilitate live multisensory immersive performances. Broadway theatres, musicals, dramas, operas, and other performing arts are increasingly using video scrims, backdrops, and projection mapping to create digital sets. Such shows ordinarily require extensive digital set design and on-site show control staff to operate. The use of AI will allow shows to be mounted faster and will make implementation and control more direct and precise, yet still spontaneous, in achieving the show director’s vision. It will also free staff from repetitive and technical tasks, allowing them to concentrate on their artistic and creative skills.

Ultimately, the MPAI-XRV standard will allow the entire performance stage to become an immersive digital virtual environment which, when merged with a metaverse environment, creates a “digital twin” representation of live performers within the virtual world. Major metaverse concert events can therefore originate as a live performance with an in-person audience while simultaneously being enjoyed by millions in virtual reality. Emerging immersive venues such as MSG Sphere and COSM, as well as various immersive art galleries, are already well suited to such an approach.

MPAI recently issued a Call for Technologies, inviting industry participation in the MPAI-XRV Live Theatrical Stage Performance standards effort. Participating companies are encouraged to respond to the call at https://mpai.community/standards/mpai-xrv/. Individuals wishing to participate may also join MPAI by contacting secretariat@mpai.community.

More choices for MPAI standard users

With the five new standards approved by the 36th General Assembly, MPAI has substantially increased the range of areas supported by MPAI standards:

1 – AI Framework (MPAI-AIF) V2 specifies the architecture, interfaces, protocols, and API of a secure environment specially designed for execution of AI-based applications implemented as AI workflows (AIW) of AI modules (AIM).

2 – Context-based Audio Enhancement (MPAI-CAE) V2 specifies technologies that use context information to improve the user experience for audio-related applications such as entertainment, teleconferencing, and restoration for use in contexts such as in the home and in the studio.

3 – Connected Autonomous Vehicle (MPAI-CAV) – Architecture specifies the Architecture of a CAV based on an AI Framework-based Reference Model, in which a CAV is broken down into Subsystems (AIW) whose functions, I/O data, and topology are specified, and Subsystems are broken down into Components (AIM) for which only functions and I/O data are specified.

4 – Compression and Understanding of Industrial Data (MPAI-CUI) specifies the data formats, the function and interface of the AIMs, and the function, topology, and interface of an AIW providing the default probability, the organisational model index, and the business discontinuity probability of a company within a given prediction horizon using its governance, financial and risk data.

5 – Governance of the MPAI Ecosystem sets the rules governing the MPAI Ecosystem composed of MPAI, Implementers, the MPAI Store, Performance Assessors, and Users.

6 – Multimodal Conversation (MPAI-MMC) specifies data formats for analysis of text, speech, and other non-verbal components, used in human-machine and machine-machine conversation, and AI Framework-based use cases providing recognised applications through the use of data formats from MPAI-MMC and other MPAI standards.

7 – MPAI Metaverse Model (MPAI-MMM) – Architecture specifies Terms; Operation Model; Functional Requirements of Processes, Actions, Items, and Data Types; and Functional Profiles enabling Interoperability of two or more metaverse instances (M-Instances) if they rely on the same Operation Model, and use the same Profile specified by MPAI-MMM – Architecture, and either use the same technologies or else independent technologies while accessing conversion services that losslessly transform data of an M-InstanceA to data of an M-InstanceB.

8 – Neural Network Watermarking (MPAI-NNW) specifies methodologies to evaluate aspects of a neural network watermarking technology: the impact on the performance of a watermarked neural network and its inference; the ability of a neural network watermarking detector/decoder to detect/decode a payload when the watermarked neural network has been modified; and the computational cost of injecting, detecting, or decoding a payload in the watermarked neural network.

9 – Portable Avatar Format (MPAI-PAF) specifies the Portable Avatar Format and related data formats, allowing a sender to enable a receiver to render an Avatar as intended by the sender, and AI Framework-conforming AIWs and AIMs required by the Avatar-Based Videoconference use case also using data types from other MPAI standards.
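As a rough illustration of the second aspect listed under Neural Network Watermarking (whether a payload can still be decoded after the watermarked network has been modified), the sketch below compares an embedded payload with a decoded one and reports the fraction of mismatched bits. The function name `bit_error_rate` and the sample payloads are hypothetical and chosen only for illustration; this newsletter does not state which metric MPAI-NNW actually prescribes.

```python
from typing import Sequence


def bit_error_rate(embedded: Sequence[int], decoded: Sequence[int]) -> float:
    """Fraction of payload bits that differ between the embedded and decoded payloads."""
    if len(embedded) != len(decoded):
        raise ValueError("payloads must have the same length")
    if not embedded:
        raise ValueError("payloads must not be empty")
    mismatches = sum(1 for a, b in zip(embedded, decoded) if a != b)
    return mismatches / len(embedded)


# Hypothetical payloads: what was injected, and what a decoder recovered
# after the watermarked network was modified (for example, by pruning).
embedded_payload = [1, 0, 1, 1, 0, 0, 1, 0]
decoded_payload = [1, 0, 1, 0, 0, 0, 1, 1]

ber = bit_error_rate(embedded_payload, decoded_payload)
print(f"bit error rate: {ber:.3f}")
```

A lower value means the payload survived the modification better; a value of 0 means every bit was recovered.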

These are some of the results MPAI has achieved in the first three years since its foundation.

MPAI is continuing its work plan that involves the following development areas:

- AI Framework (MPAI-AIF): having completed MPAI-AIF V2, development of reference software and conformance testing, and study of application areas benefitting from the standard.

- AI Health (MPAI-AIH): while waiting for responses to the Call for Technologies, preparing for the development of the standard.

- Context-based Audio Enhancement (MPAI-CAE): having completed the original work, now investigating several new projects.

- Connected Autonomous Vehicle (MPAI-CAV): moving ahead to Functional Requirements of CAV architecture.

- Compression and Understanding of Industrial Data (MPAI-CUI): preparation for extension of the current standard.

- End-to-End Video Coding (MPAI-EEV): developing AI-based End-to-End Video coding.

- AI-Enhanced Video Coding (MPAI-EVC): developing video coding with AI tools added to existing tools in the MPEG EVC standard.

- Multimodal Conversation (MPAI-MMC): having completed MPAI-MMC V2, developing reference software, drafting conformance testing, and exploring new areas.

- MPAI Metaverse Model (MPAI-MMM): identifying the full set of metaverse technologies requiring standards.

- Neural Network Watermarking (MPAI-NNW): developing reference software for enhanced applications.

- Object and Scene Description (MPAI-OSD): completing the development of the standard.

- Avatar Representation and Animation (MPAI-PAF): having completed Portable Avatar Format (MPAI-PAF), developing reference software, conformance testing and new standardisation areas.

- Server-based Predictive Multiplayer Gaming (MPAI-SPG): developing a technical report on mitigation of data loss and cheating in online gaming.

- XR Venues (MPAI-XRV): while waiting for responses to the Call for Technologies, preparing for the development of the standard.

Meetings in the coming October meeting cycle

Meetings in normal font are open to MPAI members. Legal entities and representatives of academic departments supporting the MPAI mission and able to contribute to the development of standards for the efficient use of data can become MPAI members.

Meetings in italic are open to non-members. If you wish to attend, send an email to secretariat@mpai.community.

| Group name | 02-06 Oct | 09-13 Oct | 16-20 Oct | 23-27 Oct | Time (UTC) |
|---|---|---|---|---|---|
| AI Framework | 2 | 9 | 16 | 23 | 15 |
| AI-based End-to-End Video Coding | | | | 24 | 13 |
| | | 11 | | | 14 |
| AI-Enhanced Video Coding | 4 | | 18 | | 14 |
| Artificial Intelligence for Health Data | | | 20 | | 14 |
| Avatar Representation and Animation | 5 | 12 | 19 | | 13:30 |
| Communication | | | 19 | | 15 |
| Connected Autonomous Vehicles | | | | 25 | 14 |
| | 4 | 11 | 18 | | 15 |
| Context-based Audio enhancement | 3 | 10 | 17 | 24 | 16 |
| Governance of MPAI Ecosystem | | | | 23 | 16 |
| Industry and Standards | 6 | | 20 | | 16 |
| MPAI Metaverse Model | 6 | 13 | 20 | 27 | 15 |
| Multimodal Conversation | 3 | 10 | 17 | 24 | 14 |
| Neural Network Watermarking | 3 | 10 | 17 | 24 | 15 |
| Server-based Predictive Multiplayer Gaming | 5 | 12 | 19 | | 14:30 |
| XR Venues | 3 | 10 | 17 | 24 | 17 |
| General Assembly (MPAI-37) | | | | 25 | 15 |

This newsletter aims to keep the expanding and diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.