Highlights

- Introducing the AI Module Profile standard

- Some results from the MPAI-EVC (AI-Enhanced Video Coding) project

- Meetings in the coming April-May meeting cycle

Introducing the AI Module Profile standard

So far, MPAI has published eleven standards and is actively working on five projects. Six standards and three projects rely on the AI Framework (MPAI-AIF) standard, which is based on a Lego-like notion: basic processing elements, called AI Modules (AIM), can be combined to produce Composite AI Modules or AI Workflows (AIW). AIWs can be executed in the AI Framework (AIF) to provide applications. MPAI has already specified about 70 AIMs and about 20 AIWs, and quite a few are already implemented as Reference Software.

MPAI is discovering that the more it develops specifications in new domains, the more often it can reuse already specified AIMs, be they Basic or Composite. However, MPAI also sees that the function of an AIM may not stay exactly the same from one use case to another, because more or fewer “features” may be required.
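The Lego-like idea can be illustrated with a minimal Python sketch. It is not the MPAI-AIF API: the `compose` helper, the two toy AIMs, and the dictionary-based data passing are all assumptions made only for illustration.

```python
from typing import Callable, Dict, List

# Assumption: an AIM is modelled as a function from named inputs to named outputs.
Data = Dict[str, str]
AIM = Callable[[Data], Data]

def compose(aims: List[AIM]) -> AIM:
    """Chain AIMs into a workflow; each AIM adds its outputs to the shared data."""
    def workflow(data: Data) -> Data:
        for aim in aims:
            data = {**data, **aim(data)}
        return data
    return workflow

# Two hypothetical AIMs (toy stand-ins, not specified by MPAI).
def speech_recognition(data: Data) -> Data:
    return {"text": data["speech"].lower()}

def dialogue_processing(data: Data) -> Data:
    return {"reply": "You said: " + data["text"]}

# The composed workflow plays the role of an AIW built from two AIMs.
aiw = compose([speech_recognition, dialogue_processing])
result = aiw({"speech": "HELLO"})
```

In this sketch a workflow has the same shape as an AIM, so it can itself be reused as a building block inside a larger workflow, which mirrors the notion of a Composite AIM.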

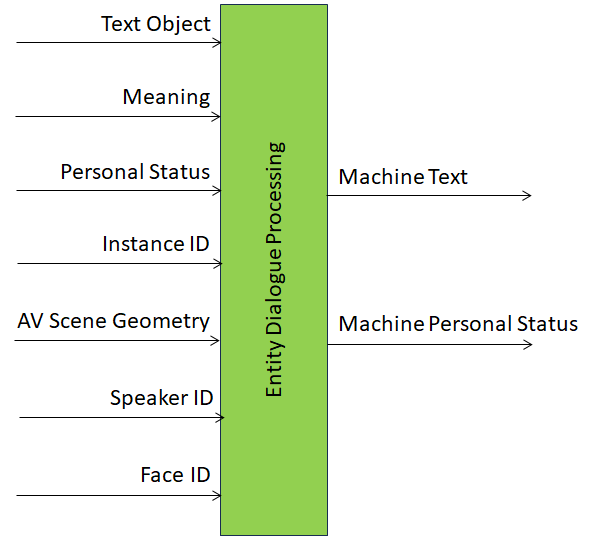

Let’s look at the Entity Dialogue Processing AIM. An AIM is defined by a standard – in this case, Multimodal Conversation (MPAI-MMC) – and identified by three letters – in this case, EDP. Thus, the AIM is identified as MMC-EDP. Actually, this identification is not unambiguous, because a standard has versions (MMC is at version 2.1). Therefore, the full identification can be MMC-EDP-V2.1.

Entity is a generic word used by MPAI to indicate the partner of a machine in an interaction (conversation, communication, etc.). Today, the partner is likely to be a human, but more and more it can be a machine. It can also be that the Entity does not intend to talk with the machine itself but uses it as a bridge – an interpreter – between the Entity on one side and a human that is served by the machine. This is the scope of the recently approved Human and Machine Communication (MPAI-HMC) standard: to enable unfettered communication between Entities.

Let’s now address the different features that the MMC-EDP AIM can take and that are highly relevant for Entity-to-Entity communication. The basic function of an EDP AIM is to receive Text from the upstream AIMs that have processed the data issued by the Entity and to produce the Text that the machine thinks is the appropriate reaction. This “minimal” but still highly sophisticated step can be improved by also providing EDP with the Meaning, a Data Type representing information about an input text, such as syntactic and semantic information (so far, MPAI has specified some 60 Data Types). This helps the machine generate a response that is more attuned to the context.

But we can do more. Humans typically express themselves not only in words, but also with their face and body gestures. MPAI has already standardised a Data Type called Personal Status, composed of three Factors: Emotion (such as “angry” or “sad”), Cognitive State (such as “surprised” or “interested”), and Social Attitude (such as “polite” or “arrogant”). The EDP AIM could also receive the Personal Status of the Entity (of course, an estimate of it) and use it to provide an even more attuned response. But the EDP AIM could do more, namely produce a “simulated” Personal Status of its own that could be used by other parts (AIMs) of the Machine for appropriate purposes, e.g., to change characteristics of its synthesised voice or to change the expression of its synthesised face.

Our story has not come to an end yet. The HMC-CEC (Communicating Entities in Context) assumes that the machine can generate – if the relevant information sources are available – a spatial description of the Entity’s audio-visual environment (called Scene). The EDP AIM can receive “spatial information” about the Audio and/or Visual Objects in terms of their position and orientation in the Scene. Figure 1 depicts an EDP AIM with all its input and output data.

Figure 1 – The input/output data of MMC-EDP

So far, we have been talking about the features of the EDP AIM in terms of input and output data. But there can be features that are internal to the AIM. For instance, the EDP AIM must be able to respond to a set of input data, but what if the AIM were able to reuse data received and produced in previous phases of the interaction?

It is clear that the value of an Entity-to-Entity interaction machine depends on many elements – certainly the ability to process the input data and to display the response. But the EDP AIM is the brain of the machine, and it will evolve fast in the future. Today, some of the features outlined above are very sophisticated and difficult to implement in portable devices, and we cannot expect that they will all be supported in the short or even in the medium term.

Here is where the notion of Profiles, originally developed by MPEG in the summer of 1992 for the MPEG-2 standard and universally adopted in the media domain, comes in handy. An AIM Profile is a label that uniquely identifies the set of AIM Attributes of an AIM instance. Attribute is a name formally defined as “input data, output data, or functionality that uniquely characterises an AIM instance”. Attribute corresponds to the name “feature” that was loosely introduced and used above.

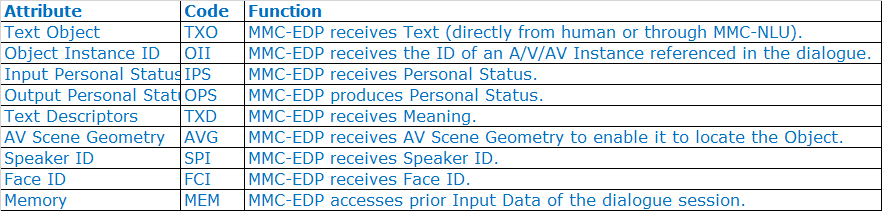

Table 1 lists the MMC-EDP Attributes with their 3-letter codes and definitions.

Table 1 – MMC-EDP Attributes

How do we unambiguously identify an MMC-EDP instance? MPAI thinks that at this point in time it is too early to define specific Profiles. It is preferable to provide a standard method to signal the Attributes of an AIM.

Draft Technical Specification: AI Module Profiles (MPAI-PRF) offers two ways to signal the Attributes of an AIM: listing those that are supported or listing those that are not supported. A user can use either of the two, possibly choosing the list of supported Attributes when it is shorter than the list of unsupported ones.

Therefore, an MMC-EDP AIM instance that only supports, say, Text and Meaning (called Text Descriptors) as input and Text as Output will be represented as MMC-EDP-V2.1(+TXO+TSD). An MMC-EDP AIM instance that only misses the Memory Attribute will be represented as MMC-EDP-V2.1(-MEM).
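The two signalling options can be sketched in a few lines of Python. This is only an illustration, not the MPAI-PRF syntax definition: the `profile_label` function is hypothetical, and the attribute list below is a placeholder assembled from codes that appear in this newsletter (the full list is the one in Table 1).

```python
from typing import Iterable, Sequence

# Placeholder attribute list (assumption): Table 1 holds the actual codes.
EDP_ATTRIBUTES = ("TXO", "TSD", "ISP", "OSP", "MEM")

def profile_label(standard: str, aim: str, version: str,
                  supported: Iterable[str],
                  all_attributes: Sequence[str] = EDP_ATTRIBUTES) -> str:
    """Build a label using whichever list (supported or unsupported) is shorter."""
    supported_set = set(supported)
    unknown = supported_set - set(all_attributes)
    if unknown:
        raise ValueError(f"unknown attribute codes: {sorted(unknown)}")
    present = [a for a in all_attributes if a in supported_set]
    absent = [a for a in all_attributes if a not in supported_set]
    if len(present) <= len(absent):
        body = "".join("+" + a for a in present)   # list what is supported
    else:
        body = "".join("-" + a for a in absent)    # list what is missing
    # Note: the case where every attribute is supported is not covered by the
    # examples in the text, so this sketch does not treat it specially.
    return f"{standard}-{aim}-V{version}({body})"

text_only = profile_label("MMC", "EDP", "2.1", ["TXO", "TSD"])
no_memory = profile_label("MMC", "EDP", "2.1", ["TXO", "TSD", "ISP", "OSP"])
```

With the placeholder list, `text_only` and `no_memory` reproduce the two labels given above.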

The media context (MPEG) soon discovered that the quantisation of the functionality (“tools” in MPEG lingo) dimension was not sufficient. We also needed to specify how important the media dimension was. For instance, a Profile could be applied to a video with standard, high, and possibly higher definition. This new dimension was called “Level”. Not unexpectedly, the notion of Level also comes in handy for MPAI AIMs.

One case is that of Personal Status. As we said above, it has three Factors (Cognitive State, Emotion, and Social Attitude), and each Factor has four Modalities (Text, Speech, Face, and Gesture). If we have an EDP AIM instance that handles Text, Meaning, and Personal Status as input and produces Text and Personal Status as output, we may have the following case:

MMC-EDP-V2.1(NUL+TXO+TXD+ISP+OSP#PST#PSS)

This means that the Input Personal Status includes all Factors and Modalities, but the output Personal Status only produces Personal Status of Text and Personal Status of Speech.
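A label of this kind can be split into its parts with a short parser. The sketch below infers the grammar from the examples in this newsletter only (`+` separates Attributes, `#` introduces sub-Attributes); it handles only the “supported” form, treats every token, including `NUL`, as an ordinary code, and the name `parse_profile` is hypothetical.

```python
import re
from typing import Dict, List, Tuple

# Assumed shape: <standard>-<AIM>-V<version>(<attribute list>)
LABEL = re.compile(r"^([A-Z]{3})-([A-Z]{3})-V(\d+(?:\.\d+)*)\((.*)\)$")

def parse_profile(label: str) -> Tuple[str, str, str, Dict[str, List[str]]]:
    """Return (standard, AIM, version, {attribute: [sub-attributes]})."""
    match = LABEL.match(label)
    if match is None:
        raise ValueError(f"not a profile label: {label!r}")
    standard, aim, version, body = match.groups()
    attributes: Dict[str, List[str]] = {}
    for token in body.split("+"):
        if not token:              # a leading "+" yields an empty first token
            continue
        code, *sub = token.split("#")
        attributes[code] = sub     # empty list: no restriction is signalled
    return standard, aim, version, attributes

parsed = parse_profile("MMC-EDP-V2.1(NUL+TXO+TXD+ISP+OSP#PST#PSS)")
```

Here `parsed[3]["OSP"]` is `["PST", "PSS"]`, while `parsed[3]["ISP"]` is an empty list, matching the reading that the input Personal Status is unrestricted and the output one is limited to Text and Speech.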

A human can understand the representation of an AIM Profile described above. A machine can understand another representation expressed by JSON syntax and semantics.

Technical Specification: AI Module Profiles (MPAI-PRF) is published for Community Comments until 2024/05/08T23:59. Anybody is welcome to submit comments on the draft by sending an email to the MPAI secretariat by that date.

Some results from the MPAI-EVC (AI-Enhanced Video Coding) project

The EVC group has developed a data set by aggregating 350 4K video sequences from different sources to provide sufficient content diversity for its explorations:

1) BVI-DVC Part 1 (public)

2) The Ultravideo

3) The SVT Open Content

4) The Tencent Video

Sixty-four frames of each sequence have been used, for a total of 22,400 frames (350 × 64). This data set is important for testing the results of applying AI technologies.

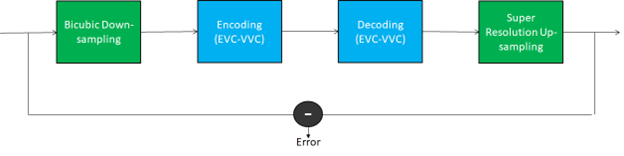

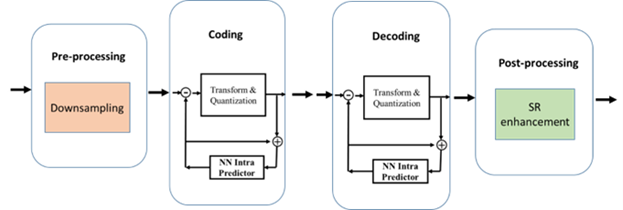

In the super-resolution (SR) exploration, pictures are downscaled with a standard method before encoding and then upscaled using super-resolution to the native resolution after decoding.

The goal is to:

- Devise a novel approach for enhancing the training process of state-of-the-art SR networks.

- Improve the performance of the trained SR network when dealing with images encoded with different codecs.

Figure 2 – Applying Super-resolution to coded video

The method enhances the performance of an SR network that up-samples images of different resolutions. The exploration also evaluates the improvement obtained by using SR networks instead of traditional upscaling filters.
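The evaluation loop described here (downscale, encode and decode, upscale, compare with the original) can be sketched in plain Python. Everything below is an assumption made for illustration and not the EVC group's setup: 2×2 block averaging stands in for the standard downscaling method, nearest-neighbour upscaling stands in for a traditional filter, the codec is an identity placeholder, and quality is measured as PSNR on a single luminance plane with even dimensions.

```python
import math
from typing import Callable, List

Image = List[List[float]]  # one luminance plane, even width and height

def downscale2x(img: Image) -> Image:
    """Average each 2x2 block (stand-in for the standard downscaling method)."""
    h, w = len(img), len(img[0])
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
             for x in range(0, w, 2)] for y in range(0, h, 2)]

def upscale2x_nearest(img: Image) -> Image:
    """Repeat each pixel 2x2 (stand-in for a traditional upscaling filter)."""
    out: Image = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def codec_roundtrip(img: Image) -> Image:
    """Placeholder for encoding and decoding; a real codec would add distortion."""
    return img

def psnr(ref: Image, test: Image, peak: float = 255.0) -> float:
    n = len(ref) * len(ref[0])
    mse = sum((a - b) ** 2 for r, t in zip(ref, test) for a, b in zip(r, t)) / n
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)

def evaluate(img: Image, upscaler: Callable[[Image], Image]) -> float:
    """PSNR of the full pipeline output against the native-resolution picture."""
    return psnr(img, upscaler(codec_roundtrip(downscale2x(img))))

ramp = [[float(x * 10) for x in range(4)] for _ in range(4)]
baseline_psnr = evaluate(ramp, upscale2x_nearest)
```

An SR network would be passed to `evaluate` in place of `upscale2x_nearest`, so that both upscalers are scored on the same decoded pictures.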

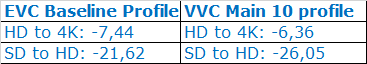

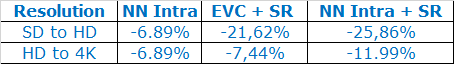

The BD-rate gain results (BD-PSNR, luminance) are:

In the Super Resolution with Intra Prediction exploration, we have investigated the performance improvement of the Essential Video Coding (EVC) codec modified by enhancing or replacing existing video coding tools with AI-based tools, while keeping the complexity increase acceptable.

We investigated the impact of replacing two main video coding tools, namely the directional mode and super resolution, with artificial intelligence-based tools to improve the performance of the EVC coding standard. The performance of each proposed AI-based tool and the overall performance of the two tools combined were analysed, showing a significant improvement in encoding performance compared to the baseline EVC video standard (Figure 3).

Figure 3 – Reference scheme of the MPEG-5 EVC encoder

We used the “Intra” of the 5 prediction modes of the MPEG-5 Baseline EVC codec for a learnable intra-prediction module realised as a Neural Network Auto-Encoder. We conducted an ablation study to evaluate the effectiveness of combining the EVC intraframe coding capabilities enhanced by a neural network with SR. As a point of reference, we included a baseline experiment that employed the MPEG-5 codec with the classical bicubic interpolation algorithm.

The results of these experiments were then compared and analysed to gauge the impact of our integrated approach, particularly in contrast to the baseline method. In Table 2, column 1 is the resolution, column 2 is the improvement from adding the NN Intra, column 3 is column 1 of Table 1, and column 4 is the combination of NN Intra and SR.

Table 2 – Results of Intra-enhancement, Super-Resolution, and combined techniques (EVC).

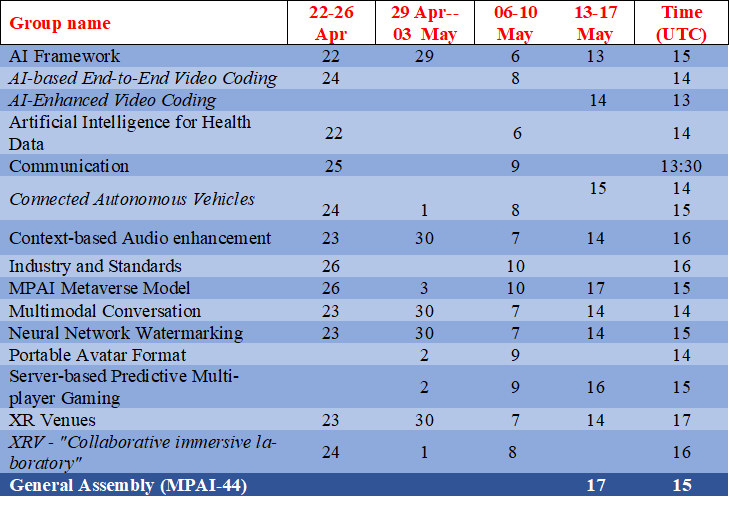

Meetings in the coming April-May meeting cycle

Participation in meetings indicated in normal font is open to MPAI members only. Legal entities and representatives of academic departments supporting the MPAI mission and able to contribute to the development of standards for the efficient use of data can become MPAI members.

Meetings in italic are open to non-members. If you wish to attend, send an email to secretariat@mpai.community.

This newsletter serves the purpose of keeping the expanding and diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.