Highlights

- The MPAI-44 Press Release

- MPAI holds online presentations of three new Calls for Technologies

- Navigating the world of MPAI Standards

- Three Calls for Technologies

- Meetings in the coming May-June meeting cycle

The MPAI-44 Press Release

This newsletter is particularly rich in news. A very concise summary is available in the MPAI-44 Press Release.

MPAI holds online presentations of three new Calls for Technologies

As reported in the press release and in the next news item, MPAI-44 has approved three new Calls for Technologies. They will be presented online as described below:

- Context-based Audio Enhancement (MPAI-CAE) – Six Degrees of Freedom (CAE-6DF). Register here to attend the online presentation on 2024/05/28T16

- Connected Autonomous Vehicle (MPAI-CAV) – Technologies (CAV-TEC). Register here to attend the online presentation on 2024/06/06T16

- MPAI Metaverse Model (MPAI-MMM) – Technologies (MMM-TEC). Register here to attend the online presentation on 2024/05/15T16

Navigating the world of MPAI Standards

At its last General Assembly (MPAI-44), MPAI launched three new projects to extend some existing standards with more functionalities.

The first project is part of Context-based Audio Enhancement (MPAI-CAE); the extension is called Six Degrees of Freedom Audio (CAE-6DF). The goal is to let users experience a remote audio environment spatially by “walking into it”. Based on the MPAI Statutes, MPAI has issued a Call for Technologies with associated Use Cases and Functional Requirements (the features that the new standard should support), a Framework Licence (a set of guidelines that the eventual licence issued by patent holders should follow), and a Template for Responses to facilitate the drafting of a response.

The second project is part of Connected Autonomous Vehicle (MPAI-CAV); the extension is called Technologies (CAV-TEC). It will extend the current Architecture standard (CAV-ARC) with a specification of the technologies needed to make a full-fledged component-based connected autonomous vehicle standard. Here, too, MPAI has issued a Call for Technologies with associated Use Cases and Functional Requirements, a Framework Licence, and a Template for Responses.

The third project has a similar goal, even though the field is quite different: MPAI Metaverse Model (MPAI-MMM). The extension is also called Technologies (MMM-TEC). Building on the current Architecture standard (MMM-ARC), the goal is to specify the technologies needed to make a metaverse instance that follows the MMM-TEC standard interoperable with another. Again, there is a Call for Technologies with associated Use Cases and Functional Requirements, a Framework Licence, and a Template for Responses.

Both CAV-TEC and MMM-TEC are a substantial step in moving the respective projects from the state of Functional Requirements specification to Technology Specifications.

Those wishing to respond to any of the three Calls should join one of the following online presentations (times are UTC):

- Context-based Audio Enhancement (MPAI-CAE) – Six Degrees of Freedom (CAE-6DF). Register here to attend the online presentation on 2024/05/28T16, send intention by 2024/06/04 and submit response by 2024/09/16.

- Connected Autonomous Vehicle (MPAI-CAV) – Technologies (CAV-TEC). Register here to attend the online presentation on 2024/06/06T16, send intention by 2024/06/07 and submit response by 2024/07/05.

- MPAI Metaverse Model (MPAI-MMM) – Technologies (MMM-TEC). Register here to attend the online presentation on 2024/06/06T16, send intention by 2024/06/07 and submit response by 2024/07/06.

The intention to submit a response and an actual response should be delivered to the MPAI Secretariat by 23:59 UTC of the relevant day. All relevant documents are accessible from the links above.
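The date notation used in this newsletter can be read mechanically. The following is a minimal sketch, assuming that a stamp such as `2024/05/28T16` means 16:00 UTC on that day and that a bare date deadline means 23:59 UTC of that day, as stated above. The function names are illustrative only and are not part of any MPAI tool.

```python
from datetime import datetime, timezone


def parse_presentation(stamp: str) -> datetime:
    """Parse a 'YYYY/MM/DDTHH' stamp as a full hour in UTC."""
    return datetime.strptime(stamp, "%Y/%m/%dT%H").replace(tzinfo=timezone.utc)


def deadline(day: str) -> datetime:
    """Return 23:59 UTC on a 'YYYY/MM/DD' day (assumed reading of the rule)."""
    d = datetime.strptime(day, "%Y/%m/%d")
    return d.replace(hour=23, minute=59, tzinfo=timezone.utc)


def is_on_time(sent: datetime, day: str) -> bool:
    """True if a timezone-aware send time is no later than 23:59 UTC of `day`."""
    return sent <= deadline(day)


# CAE-6DF dates taken from the list above.
presentation = parse_presentation("2024/05/28T16")
response_due = deadline("2024/09/16")
```

Because the comparison uses timezone-aware values, a send time expressed in a local time zone is compared correctly against the UTC deadline.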

The mail bag from MPAI-44 has more to offer. Four existing standards have been republished with significant new material that extends their current functionalities and supports the needs of the three new projects. They are:

- Object and Scene Description (MPAI-OSD) V1.1 adds a new Use Case for automatic audio-visual analysis of television programs and new functionalities for Visual and Audio-Visual Objects and Scenes.

- Use Cases of Context-based Audio Enhancement (MPAI-CAE) V2.2 standard (CAE-USC) supports new functionalities for Audio Object and Scene Descriptors.

- Multimodal Conversation (MPAI-MMC) V2.2 standard introduces new AI Modules and new Data Formats to support the new MPAI-OSD Television Media Analysis Use Case.

- Portable Avatar Format (MPAI-PAF) V1.2 standard extends the specification of the Portable Avatar to support new functionality requested by the CAV-TEC and MMM-TEC Calls.

The documents are published on the MPAI website at the links above. Everybody is welcome to review the draft standards (new versions of existing standards) and send comments to the MPAI Secretariat by 23:59 UTC of the relevant day.

| Standard | Deadline for comments |
| --- | --- |
| Context-based Audio Enhancement (MPAI-CAE) V2.2 | 2024/06/11 |
| Multimodal Conversation (MPAI-MMC) V2.2 | 2024/06/11 |
| Object and Scene Description (MPAI-OSD) V1.1 | 2024/07/05 |
| Portable Avatar Format (MPAI-PAF) V1.2 | 2024/07/05 |

The comments received will be considered when drafting the final published versions.

One last news item: MPAI-44 has approved Context-based Audio Enhancement (MPAI-CAE) Use Cases (CAE-USC) V1.1. You can try the CAE-USC reference software by registering at the MPAI GitLab.

The standards mentioned above cover a significant share of the MPAI portfolio, but your navigation in the MPAI standards world need not stop here. If you wish to delve into the other MPAI standards, you can go to their appropriate web page where you can read an overview of the standard and find many links to relevant web pages. Each page contains a web version and other support material such as PowerPoint presentations and video recordings.

More about three Calls for Technologies

Call for Technologies: Context-based Audio Enhancement (MPAI-CAE) – Six Degrees of Freedom Audio (CAE-6DF)

Humans experience the world by moving, and movement supports our immersion in the real world. This involves not only orienting our heads but also changing our location in an environment to observe scenes from different perspectives.

State-of-the-art VR headsets provide high-quality photorealistic visual content and track both the user’s orientation and position in 3D space. This capability opens new opportunities for enhancing the degree of immersion in VR experiences. VR games have significantly benefited from these developments, becoming increasingly immersive over the years.

Despite the success of synthetic virtual environments such as 3D first-person games, those that include content captured from the real world have not yet become mainstream. Recent developments, such as dynamic Neural Radiance Fields (NERFs) and 4D Gaussian splatting, allow the capture of visual scenes with both static and dynamic entities, promising full visual immersion.

Capturing audio-visual scenes with both static and dynamic entities promises a fully immersive experience. Visual immersion alone, however, is not sufficient without equally convincing auditory immersion. Consider an immersive theatre production experienced through a VR headset, where the user can walk around the actors and move closer to different conversations. The experience of “being there” requires both visual and audio elements. Another example is a concert where a user can choose different seats and experience the performance through 360° videos associated with those viewing positions. The auditory experience should likewise convey the acoustics of the concert hall from each of those positions.

The CAE-6DF Call is seeking innovative technologies that enable and support such experiences. The parent MPAI-CAE project is specifically looking for technologies that efficiently represent content in scene-based or object-based formats, or a mixture of the two, process it with low latency and high responsiveness to user movements, and render the audio scene over loudspeakers or headphones. These technologies should also take audio-visual cross-modal effects into account, so that the level of auditory immersion complements the visual immersion provided by state-of-the-art volumetric environments.

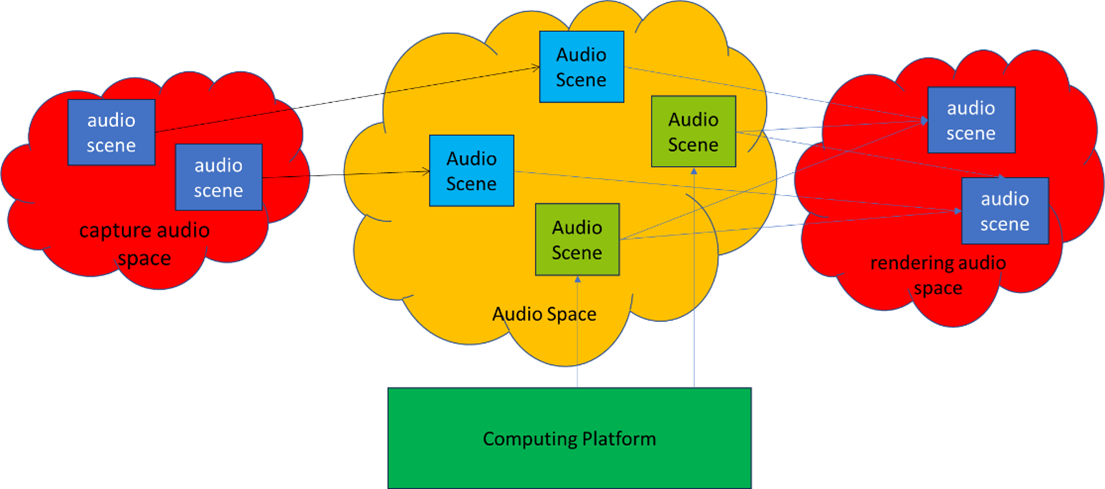

Figure 1 depicts real audio spaces and a Virtual Audio Space that includes Audio Scenes composed of digital representations of acoustical scenes and other synthetic Audio Objects. The audio scene is rendered in a perceptually veridical fashion at arbitrary user-selected points. Terms with lowercase initials refer to the real space, those with uppercase initials to the Virtual Space.

Figure 1 – real spaces and Virtual Spaces in CAE-6DF
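To make "responsiveness to user movements" concrete, here is a minimal sketch of how rendering parameters might change with the listener's pose. It is not a technology requested or proposed by the Call: it assumes a simple inverse-distance gain law and a horizontal-plane azimuth, and it omits pitch and roll for brevity. All class and function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Listener pose: position in metres, yaw in radians (pitch and roll omitted)."""
    x: float
    y: float
    z: float
    yaw: float  # 0 means facing +x; counter-clockwise is positive


@dataclass
class AudioObject:
    """Position of a sound source in the same coordinate system."""
    x: float
    y: float
    z: float


def render_params(listener: Pose, obj: AudioObject, ref_dist: float = 1.0):
    """Return (gain, azimuth) of obj as heard from the listener pose."""
    dx, dy, dz = obj.x - listener.x, obj.y - listener.y, obj.z - listener.z
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    # Assumed inverse-distance law, clamped to 1.0 inside the reference distance.
    gain = ref_dist / max(dist, ref_dist)
    # Direction of the source relative to where the listener is facing.
    azimuth = math.atan2(dy, dx) - listener.yaw
    # Wrap the angle into [-pi, pi].
    azimuth = math.atan2(math.sin(azimuth), math.cos(azimuth))
    return gain, azimuth
```

Walking towards a source raises its gain, and turning the head changes its azimuth. A real 6DoF renderer would also have to account for room acoustics, occlusion, and source directivity.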

Moving to the other two Calls: Connected Autonomous Vehicle (MPAI-CAV) and MPAI Metaverse Model (MPAI-MMM) are two major MPAI projects. Each has already delivered one standard, and MPAI-MMM has also delivered two Technical Reports.

Call for Technologies: Connected Autonomous Vehicle (MPAI-CAV) – Technologies (CAV-TEC)

The previously published MPAI-CAV V1.0 – Architecture (CAV-ARC) standard partitions a CAV into four Subsystems that are further partitioned into Components. Both Subsystems and Components are specified by their functions and interfaces; Subsystems are additionally specified by the topology of their Components. The CAV-ARC Subsystems are expected to be implemented as AI Workflows (AIW) and their Components as AI Modules (AIM), as specified by the MPAI AI Framework (MPAI-AIF) standard.

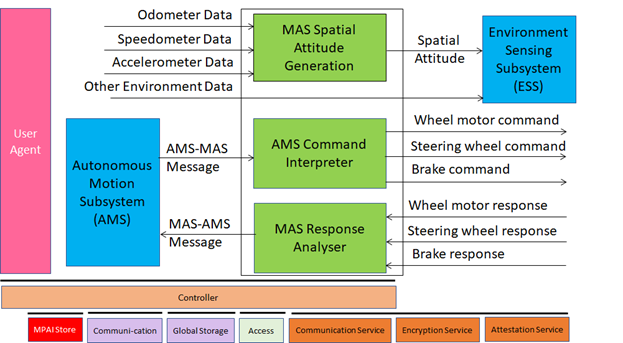

The CAV-ARC standard has provided the Functional Requirements of the MPAI-CAV AIMs and AIWs. However, the Data Types have only been identified by their connections, not by what they do and how. This should be clear from Figure 2, which depicts the reference model of the Motion Actuation Subsystem (MAS). The MAS performs several functions:

- It provides various environment-related data streams to the Environment Sensing Subsystem in charge of producing the best description of the environment travelled by the CAV using a variety of CAV sensors.

- It receives and implements the instructions of the Autonomous Motion Subsystem (AMS) to move the CAV. The AMS is the “brain” of the CAV.

- It produces reports to the AMS on how the instructions were executed.

Figure 2 – Reference Model of Motion Actuation Subsystem

The AMS-MAS instructions and MAS-AMS reports convey vital information. Therefore, we need standard formats for them just as we need standard formats for the MAS to communicate with its Brake, Motors, and Steering subsystems.
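The instruction and report exchange described above can be pictured with a small sketch. The message fields below are purely hypothetical: defining the actual formats is exactly what the Call asks respondents to propose. The speed limit and the clamping behaviour are likewise assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AmsMasCommand:
    """Hypothetical AMS-to-MAS instruction; field names are illustrative only."""
    command_id: int
    target_speed_mps: float
    steering_angle_deg: float


@dataclass(frozen=True)
class MasAmsReport:
    """Hypothetical MAS-to-AMS report on how an instruction was executed."""
    command_id: int
    executed: bool
    achieved_speed_mps: float


class MotionActuationSubsystem:
    """Toy MAS that clamps the requested speed to an assumed actuator limit."""

    def __init__(self, max_speed_mps: float = 30.0):
        self.max_speed_mps = max_speed_mps

    def execute(self, cmd: AmsMasCommand) -> MasAmsReport:
        # Clamp the request to the range the (assumed) actuators can deliver.
        achieved = min(max(cmd.target_speed_mps, 0.0), self.max_speed_mps)
        # Report whether the instruction was carried out exactly as requested.
        fully_executed = achieved == cmd.target_speed_mps
        return MasAmsReport(cmd.command_id, fully_executed, achieved)


mas = MotionActuationSubsystem(max_speed_mps=30.0)
report = mas.execute(AmsMasCommand(command_id=1, target_speed_mps=50.0, steering_angle_deg=0.0))
```

With agreed formats for both message types, an AMS from one supplier could drive a MAS from another, which is the component-based model the Call aims at.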

The purpose of the Call for Technologies: Connected Autonomous Vehicle (MPAI-CAV) – Technologies is to fill in the elements that are still missing to define a component-based CAV. Moving from today’s vertically integrated automotive companies to companies that manufacture CAVs using components provided by a constellation of specialised companies need not take decades, and the CAV-TEC standard is expected to facilitate that process. A net result is expected to be a drastic reduction of CAV prices.

Call for Technologies: MPAI Metaverse Model (MPAI-MMM) – Technologies (MMM-TEC)

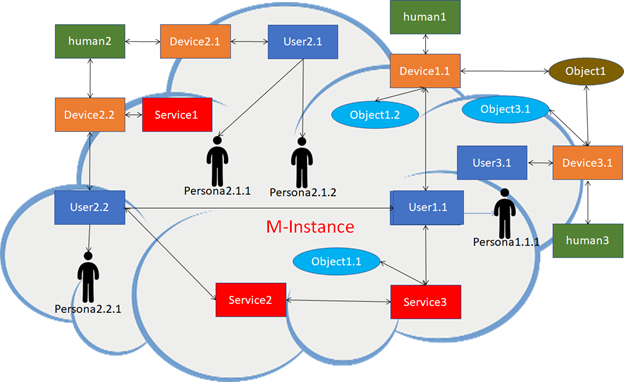

The MPAI Metaverse Model (MPAI-MMM) kicked off some 30 months ago. It has already produced two Technical Reports exploring the field as well as the MMM-Architecture Technical Specification (MMM-ARC). This has specified the MPAI Metaverse Model (MMM) Operation Model, composed of interacting Processes (Devices, Services, and Users representing humans), exchanging Items (Data Types of the MMM), and performing Actions. MMM-ARC enables interoperability between different metaverse instances if they adopt the MMM Operation Model and exchange Items with the same Functional Requirements so that Conversion Services can accommodate possibly incompatible technologies.

This is depicted in Figure 3 where there are three humans, the first and third represented by one User each and the second by two. The first User of the second human is rendered as two Personae (Avatars) and the User of the third human is not rendered (i.e., it is just a Process performing Actions in the MMM).

Figure 3 – MPAI-MMM Operation Model

The many arrows appearing in the Figure require standards for humans’ Processes to interact with other Processes. The MMM-TEC Call for Technologies is addressing this issue. It offers an initial JSON Syntax and Semantics of all Items (Data Types) containing suggestions of Data Formats and Attributes for which respondents to the Call may wish to make proposals.
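As an illustration of what exchanging Items as JSON could look like, here is a minimal sketch. The field names are hypothetical and are not taken from the JSON Syntax offered in the Call. The `convert` function is only a stand-in for a Conversion Service: it relabels the Data Format and does not transform any payload.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Item:
    """Hypothetical Item; field names are illustrative, not taken from the Call."""
    item_type: str
    item_id: str
    data_format: str
    attributes: dict = field(default_factory=dict)


def to_json(item: Item) -> str:
    """Serialise an Item so that one Process can send it to another."""
    return json.dumps(asdict(item), sort_keys=True)


def from_json(text: str) -> Item:
    """Rebuild an Item received from another Process."""
    return Item(**json.loads(text))


def convert(item: Item, target_format: str) -> Item:
    """Stand-in for a Conversion Service: relabels the format, keeps the rest."""
    return Item(item.item_type, item.item_id, target_format, dict(item.attributes))


sent = Item("Persona", "persona-001", "format-A", {"owner": "user-2a"})
received = from_json(to_json(sent))
converted = convert(received, "format-B")
```

The round trip leaves the Item unchanged, and conversion changes only the Data Format label. That matches the idea that two metaverse instances agree on what an Item means even when they use different technologies to represent it.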

It is interesting to note that MPAI assumes that a CAV generates a “private” metaverse to plan its next move. CAVs may decide to share part of their private metaverses to facilitate their understanding of the common real space(s) they traverse. Investigations carried out by MPAI have shown that CAVs’ private metaverses can be represented _and_ shared by the MPAI-MMM metaverse technologies.

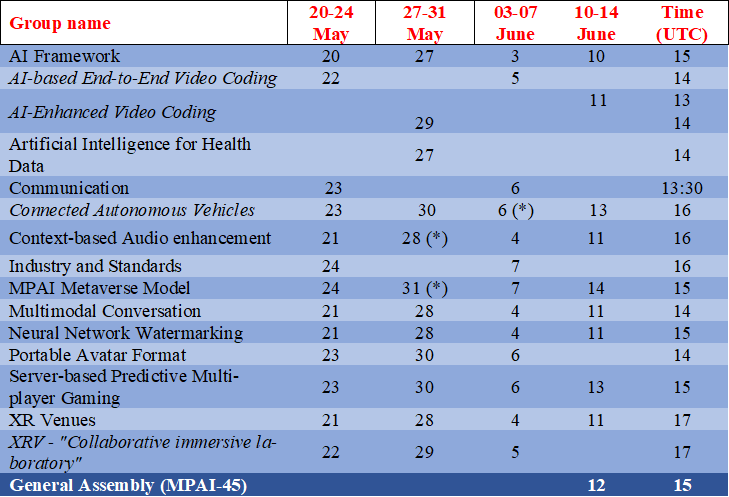

Meetings in the coming May-June meeting cycle

Meetings indicated in normal font are open to MPAI members only. Legal entities and representatives of academic departments that support the MPAI mission and are able to contribute to the development of standards for the efficient use of data can become MPAI members.

Meetings in italic are open to non-members. If you wish to attend, send an email to secretariat@mpai.community.

(*) Online public presentation

This newsletter serves the purpose of keeping the expanding and diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.