Highlights

Short report of the 45th MPAI General Assembly (MPAI-45)

Results and plans for AI-based End-to-End Video (MPAI-EEV)

Results and prospects of Neural Network Watermarking (MPAI-NNW)

Meetings in the coming June-July meeting cycle

Short report of the 45th MPAI General Assembly (MPAI-45)

At its 45th General Assembly, MPAI approved three new documents for publication: AI Module Profiles, Neural Network Watermarking Reference Software, and Multimodal Conversation Reference Software.

Technical Specification: AI Module Profiles (MPAI-PRF) V1.0 extends the MPAI architecture by enabling AI Modules to signal their capabilities in terms of input and output data and specific functionalities. The signaling is also easily understandable by humans.

Reference Software Specification: Neural Network Watermarking (MPAI-NNW) V1.2 makes available to the community software that implements the functionalities of the Neural Network Watermarking Standard in an AI Framework, with the possibility of adding and removing modules and of using limited-capability Microcontroller Units (MCUs).

Conformance Testing Specification: Multimodal Conversation (MPAI-MMC) V2.1 publishes methods and data sets that enable developers or users to verify an implementation's claims of conformance with the specification of the following AI Workflows: Conversation with Emotion, Multimodal Question Answering, and Unidirectional Speech Translation.

At its previous meeting, MPAI published three Calls for Technologies on Six Degrees of Freedom Audio, Connected Autonomous Vehicle – Technologies, and MPAI Metaverse Model – Technologies. Interested parties should check the mentioned links for updates, as there is still time to respond to the Calls.

MPAI is also happy to announce that the Institute of Electrical and Electronics Engineers (IEEE) has adopted the Connected Autonomous Vehicle – Architecture (a companion standard to the one targeted by the Call for Technologies above) as an IEEE standard identified as 3307-2024.

Results and plans for AI-based End-to-End Video (MPAI-EEV)

Project goals – Neural video coding methods are currently gaining popularity, and academia and industry are devoting significant efforts to exploring their scientific and technological challenges. Industry and standardization groups are also providing artificial intelligence (AI) model-driven enablers for content production, coding, transmission, and consumption of visual media and for enhanced user experiences. End-to-end optimised video coding can play an important role in providing visual entertainment, enhancing collaboration, and transforming industries in the coming years.

In the last five years, increasing attention from both theoretical and implementation perspectives has accelerated the evolution of visual-understanding-aware compression technology, triggering a paradigm shift from traditional prediction-plus-transform-based hybrid frameworks to end-to-end neural approaches. Therefore, the functional requirements of video compression need to be updated considering AI technology. New types of formats and content are driving advancements in video coding standards.

The MPAI-EEV project kicked off in December 2021 with the goal of meeting the demand and requirements analyzed above. After studying the latest research literature, the group developed a reference model to investigate the possibility of video codecs with capabilities beyond those of existing standards. The ultimate goal of EEV is to find the near-optimal NN structure benefitting both video and network coding.

Status of Exploration – As of June 2024, MPAI-EEV has released four versions of the verification model tagged as EEV-0.1 to EEV-0.4, with EEV-0.5 coming soon. The technical improvements made by EEV software are uni-directional enhanced neural prediction, bi-directional motion compensation prediction, advanced motion representation, and enhanced in-loop restoration.

The current EEV-0.4 software has much better coding efficiency than VVC/H.266 VTM software in the low-delay-P configuration. The performance of the EEV-0.5 software under development is very close to that of the VTM software in the random-access configuration.

EEV Plans – MPAI-EEV expects to reduce the bitrate by 20% compared to the VTM reference model in the Random Access configuration in the next few months. Once this goal is reached, MPAI plans to issue a call for technologies this year and further increase the performance of the reference model. Experts will be invited to join the world’s first end-to-end neural video codec standardization project. In the meantime, the group is also developing use cases and functional requirements to clarify the road ahead and minimize unexplored and unexpected problems.
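As a rough illustration of what a 20% bitrate reduction target means, the sketch below computes the relative bitrate change of a candidate codec against a reference at a single matched quality point. The function names and the bitrate values are hypothetical and not taken from MPAI-EEV results. The newsletter does not state how the target will be measured; codec comparisons commonly use an average over several rate points (for example BD-rate), so this single-point check is only a simplification.

```python
def bitrate_change_percent(reference_kbps: float, candidate_kbps: float) -> float:
    """Relative bitrate change of the candidate versus the reference, in percent.

    Both bitrates are assumed to be measured at the same visual quality.
    Negative values mean the candidate needs fewer bits than the reference.
    """
    if reference_kbps <= 0:
        raise ValueError("reference bitrate must be positive")
    return 100.0 * (candidate_kbps - reference_kbps) / reference_kbps


def meets_target(reference_kbps: float, candidate_kbps: float,
                 target_reduction_percent: float = 20.0) -> bool:
    """True if the candidate saves at least the target percentage of bitrate."""
    change = bitrate_change_percent(reference_kbps, candidate_kbps)
    return change <= -target_reduction_percent


if __name__ == "__main__":
    # Hypothetical bitrates at equal quality (illustrative only).
    reference_kbps = 1000.0  # e.g. the reference codec
    candidate_kbps = 800.0   # e.g. the codec under test
    change = bitrate_change_percent(reference_kbps, candidate_kbps)
    print(f"bitrate change: {change:.1f}%")
    print("target met:", meets_target(reference_kbps, candidate_kbps))
```

With these illustrative numbers the candidate uses 800 kbps where the reference uses 1000 kbps, which is exactly a 20% reduction.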

To know more, visit the MPAI-EEV web page and join the online meetings of the group by sending an email to the MPAI Secretariat.

Results and prospects of Neural Network Watermarking (MPAI-NNW)


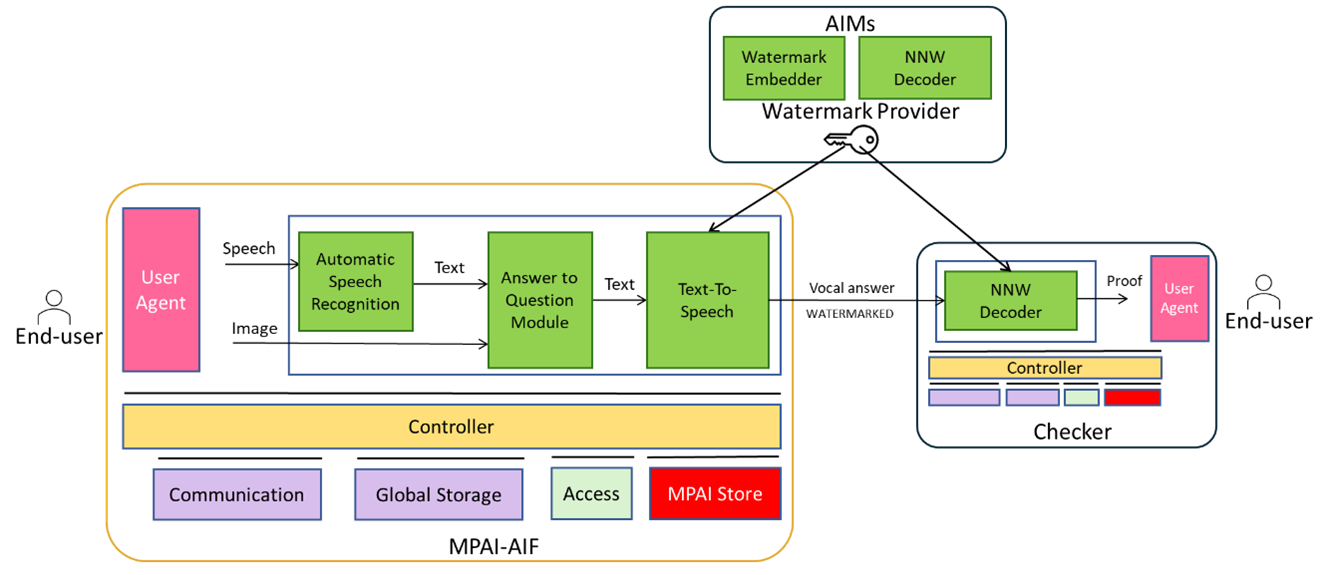

Figure 1. Workflow of the multimodal query with watermarking use case

Resource-constrained (MCU) AI deployment with watermarking functionality is illustrated in Figure 2. In this workflow, a specific set of inputs is fed to the MCU, the inferences are computed, and the watermark is retrieved. The model considered is a tiny ResNet8 available here, and the board is an ST STM32H743ZI Nucleo MCU board (1 MB of RAM; 2 MB of ROM; 1 core; 480 MHz clock frequency).

Figure 2. Workflow of the resource-constrained deployment use case

MPAI is now ready to review and extend the scope of NNW-DC to cover other types of security solutions, such as fingerprinting and cryptography. Join us with your use cases.

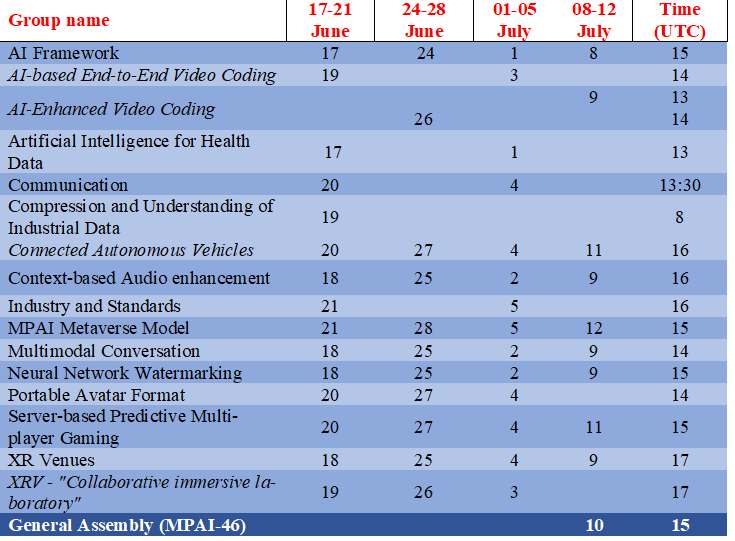

Meetings in the coming June-July meeting cycle

Meetings in normal font are open to MPAI members only. MPAI membership is open to legal entities and representatives of academic departments supporting the MPAI mission and able to contribute to the development of standards for the efficient use of data.

Meetings in italic are open to non-members. If you wish to attend, send an email to secretariat@mpai.community.

This newsletter serves to keep the expanding, diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.