Highlights

- A brief report of the 46th MPAI General Assembly (MPAI-46)

- MPAI approves Human and Machine Communication (MPAI-HMC) V1.1

- Meetings in the coming July-August meeting cycle

A brief report of the 46th MPAI General Assembly (MPAI-46)

Non-MPAI members may wonder what happens at an MPAI General Assembly. This article answers that question with a brief report on the most recent one, the 46th General Assembly (MPAI-46).

How often are General Assemblies (GA) held? As MPAI meetings are, so far, held only online, decision-oriented GAs can be held as often as needed. As a rule, GAs are held every 4 weeks. For members, they are an opportunity to learn about the progress of all working groups and to approve standards in a timely manner without setting up a costly and onerous secretarial infrastructure. As an example, MPAI-45 approved one standard, one reference software and one conformance testing specification, and MPAI-46 approved one standard.

Are meetings every four weeks not too many? For some activities, this may be true, but in general four weeks is a long time in MPAI as working groups typically meet every week for one hour.

Who may participate in a GA? MPAI member representatives may attend all GAs, but only Principal Members’ representatives may vote.

GAs handle the usual topics (GA meeting report, Secretariat report, Board report etc.), then the bulk of the meeting unfolds with reports made by those in charge of groups and Development Committees (in charge of developing standards). At MPAI-46, these were the topics of the various reports:

- Development state of the implementation of the Television Media Analysis (OSD-TMA). This activity is about developing a system able to describe a TV program in terms of Audio-Visual Event Descriptors composed of Audio-Visual Scene Descriptors, which are in turn composed of the Speech Objects of the speakers and Visual Objects representing the speakers’ faces. Speech and Visual Objects include Qualifiers providing additional information on the Objects.

- Progress report on the development of the AI for Health (MPAI-AIH) standard. This standard will specify the interfaces of a system collecting licensed user health data, processing and enabling third parties to process the data based on licence terms, collecting AI models, and redistributing updated models to system users.

- Progress reports on the development of Context-based Audio Enhancement (MPAI-CAE) V2.2, Multimodal Conversation (MPAI-MMC) V2.2, Object and Scene Description (MPAI-OSD) V1.1, and Portable Avatar Format (MPAI-PAF) V1.2. These standards address different application domains, but the AI Workflows, AI Modules and data types they specify all contribute to the main MPAI asset: a shared pool of AI Workflows, AI Modules and data types used by these and other standards.

- Report on the start of the Compression and Understanding of Industrial Data (MPAI-CUI) V2.0 standard. The previous version, V1.1, which includes the Company Performance Prediction Use Case, will be extended with the capability to handle additional risks.

- Report on and approval of Human and Machine Communication (MPAI-HMC) V1.1. More on this in the next article, but it is important to stress that HMC specifies one AI Workflow, two AI Modules, and no data types because all the rest comes from the mentioned pool of AI Workflows, AI Modules, and data types.

- Report on the start of the development of MPAI Metaverse Model – Technologies (MMM-TEC) V1.0 after the closure of the MMM-TEC Call for Technologies. This standard will develop and make reference to specifications of the set of data types required to achieve interoperability between clients and Metaverse Instances (M-Instance) and between M-Instances.

- Progress report on the development of “Guidelines for data loss mitigation in online multiplayer gaming” (MPAI-SPG), a Technical Report that will describe a procedure to design neural network-based systems that mitigate the impact of game clients’ data loss.

- Progress report on the development of XR-Venues – Theatrical Stage Performance (XRV-TSP). This will specify methods to design AI Workflows and Modules to automate complex processes in application domains harnessed by XR and AI technologies.

- Progress report on the development of a Call for Technologies for the End-to-End Video Coding (MPAI-EEV) project. The future MPAI-EEV standard will apply AI technologies to compress video at bitrates significantly reduced compared to what is possible today.

- Progress report on the development of a Call for Technologies for Up-sampling Filter for Video applications (MPAI-UFV). The future MPAI-UFV standard will apply AI technologies to up-sample video to higher resolution in a context where down-sampling has been applied to a video before compression.

- Reports on Industry and Standards, IPR Support, and Communication. Five MPAI standards have been adopted by IEEE and four more are in the pipeline. IPR Support caters to the development of Framework Licences, and Communication manages the Communication activities.

The reader will see how much valuable information about standards being prepared is shared in a General Assembly.

MPAI approves Human and Machine Communication (MPAI-HMC) V1.1

The “V1.1” attribute of MPAI-HMC means that this is an extension of the previously approved V1.0 standard, not a new standard. But what is MPAI-HMC and what is new in it?

Human and Machine Communication is clearly a vast area where new technologies are constantly introduced – more so today than before, thanks to the rapid progress of Artificial Intelligence. It is also a vast business area where new products, services, and applications are launched by the day, but many of them are conceived and deployed to operate on their own rather than to interoperate.

Standards enable interoperable services and devices but MPAI standards, by breaking down monolithic systems into smaller components with known functions and interfaces, also enable the development of independent components powered by well-studied and refined technologies.

MPAI-HMC specifies one subsystem as “AI Workflow (AIW)” composed of interconnected “AI Modules (AIM)” exchanging data of a specific data type. AIM is the acronym that MPAI gives to components that can be integrated into a complete system implemented by the AIW.
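The relationship between an AI Workflow and its AI Modules can be illustrated with a minimal Python sketch. It is not the MPAI AI Framework API: the class names, the port model, and the example module and data type names (`SpeechRecognition`, `Translation`, `SpeechObject`, `Text`) are all hypothetical and chosen only to show modules being connected through matching data types.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AIModule:
    """A component with named, typed inputs and outputs (hypothetical model)."""
    name: str
    inputs: dict[str, str]   # port name -> data type name
    outputs: dict[str, str]  # port name -> data type name


@dataclass
class AIWorkflow:
    """A set of AI Modules plus the connections between their ports."""
    name: str
    modules: dict[str, AIModule] = field(default_factory=dict)
    links: list[tuple[str, str, str, str]] = field(default_factory=list)

    def add(self, module: AIModule) -> None:
        self.modules[module.name] = module

    def connect(self, src: str, out_port: str, dst: str, in_port: str) -> None:
        """Link an output port to an input port; the data types must match."""
        out_type = self.modules[src].outputs[out_port]
        in_type = self.modules[dst].inputs[in_port]
        if out_type != in_type:
            raise TypeError(
                f"{src}.{out_port} is {out_type}, but {dst}.{in_port} expects {in_type}"
            )
        self.links.append((src, out_port, dst, in_port))


# Hypothetical two-module workflow: recognised text feeds a translator.
workflow = AIWorkflow("ExampleWorkflow")
workflow.add(AIModule("SpeechRecognition", {"speech": "SpeechObject"}, {"text": "Text"}))
workflow.add(AIModule("Translation", {"text": "Text"}, {"translated": "Text"}))
workflow.connect("SpeechRecognition", "text", "Translation", "text")
```

Because each connection is checked against the declared data types, a module can be replaced by another implementation with the same ports without touching the rest of the workflow, which is the point of breaking a system into components with known interfaces.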

The Communicating Entities in Context (HMC-CEC) AI Workflow enables Machines to communicate with Entities in different Contexts where:

- Machine is software embedded in a device that implements the HMC-CEC specification.

- Entity refers to one of:

  - A human in a real audio-visual scene.

  - A human in a real scene represented as a Digitised Human in an Audio-Visual Scene.

  - A Machine represented as a Virtual Human in an Audio-Visual Scene.

  - A Digital Human in an Audio-Visual Scene rendered as a real audio-visual scene.

- Context is information describing attributes of an Entity, such as language, culture etc.

- Digital Human is either a Digitised Human, i.e., the digital representation of a human, or a Virtual Human, i.e., a Machine. Digital Humans can be rendered for human perception as humanoids.

- A word beginning with a small letter represents an object in the real world. A word beginning with a capital letter represents an Object in the Virtual World.

Entities communicate in one of the following ways when communicating with:

- humans:

  - Using the Entities’ body, speech, Context, and the audio-visual scene that the Entities are immersed in.

  - Using HMC-CEC-enabled Machines emitting Communication Items.

- Machines:

  - Rendering Entities as speaking humanoids in audio-visual scenes, as appropriate.

  - Emitting Communication Items.

Communication Items are implementations of the MPAI-standardised Portable Avatar Format. This is a data type providing information on an Avatar and its context to enable a receiver to render an Avatar as intended by the sender.

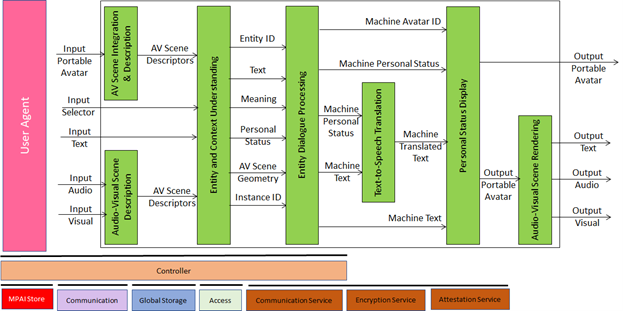

The following figure represents the reference model of HMC-CEC.

The components of the figure that are not in green belong to the infrastructure that enables dynamic configuration, initialisation, and control of AI Workflows in a standard AI Framework. The operation of HMC-CEC is as follows:

1. HMC-CEC may receive a Communication Item/Portable Avatar. AV Scene Integration and Description converts the Portable Avatar to AV Scene Descriptors.

2. HMC-CEC may also receive a real scene and has the capability to digitally represent it as AV Scene Descriptors.

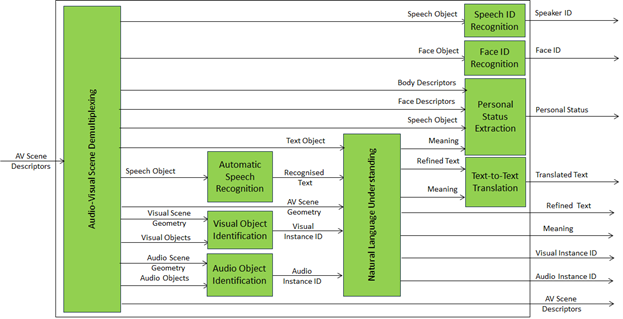

3. Therefore, Entity and Context Understanding always deals with AV Scene Descriptors independently of the source – real space or Virtual Space – of the information. The HMC-ECU is a Composite AIM demultiplexing the information streams inside the AV Scene Descriptors. Speech and Face are identified. Audio and Visual Objects are identified. Speech is recognised, understood and possibly translated. The Personal Status conveyed by Text, Speech, Face, and Gesture is extracted.

4. The Entity Dialogue Processing AIM has the necessary information to generate a (textual) response but can also generate the Personal Status to be embedded in the response.

5. The Text-to-Text Translation AIM translates Machine Text to Machine Translated Text.

6. Text, Speech, Face, and Gesture – each possibly conveying the Machine’s Personal Status – are the modalities that the Machine can use in its response. The response is represented as a Portable Avatar that can be transmitted as is to the party the Machine communicates with or rendered in an audible/visible form.
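The six steps above can be sketched as a small Python pipeline. This is only an illustration of the data flow under simplifying assumptions: all class and function names are hypothetical, the scene is reduced to a single text utterance, and the understanding, dialogue, and translation steps are trivial placeholders rather than the AI Modules of the specification.

```python
from dataclasses import dataclass


@dataclass
class AVSceneDescriptors:
    speech_text: str  # stand-in for the speech content of the scene
    source: str       # "real" or "virtual"


@dataclass
class PortableAvatar:
    text: str
    personal_status: str
    language: str


def scene_from_portable_avatar(item: PortableAvatar) -> AVSceneDescriptors:
    """Step 1: convert a received Portable Avatar to AV Scene Descriptors."""
    return AVSceneDescriptors(speech_text=item.text, source="virtual")


def scene_from_real_capture(utterance: str) -> AVSceneDescriptors:
    """Step 2: digitally represent a real scene as AV Scene Descriptors."""
    return AVSceneDescriptors(speech_text=utterance, source="real")


def understand(scene: AVSceneDescriptors) -> tuple[str, str]:
    """Step 3: return (understood text, Personal Status); placeholder logic."""
    status = "excited" if scene.speech_text.endswith("!") else "neutral"
    return scene.speech_text.strip(), status


def dialogue(text: str, status: str) -> tuple[str, str]:
    """Step 4: produce a textual response and the Machine's Personal Status."""
    return f"You said: {text}", status


def translate(text: str, target_language: str) -> str:
    """Step 5: placeholder translation that only tags the target language."""
    return f"[{target_language}] {text}"


def respond(scene: AVSceneDescriptors, target_language: str) -> PortableAvatar:
    """Step 6: package the response as a Portable Avatar."""
    text, status = understand(scene)
    reply, machine_status = dialogue(text, status)
    return PortableAvatar(translate(reply, target_language), machine_status, target_language)


# Both entry points produce the same descriptor type, so the rest is shared.
from_real = respond(scene_from_real_capture("Hello!"), "it")
from_virtual = respond(
    scene_from_portable_avatar(PortableAvatar("Good morning", "neutral", "en")), "it"
)
```

The design point the sketch tries to capture is that both inputs are normalised to AV Scene Descriptors first, so every later stage is independent of whether the information came from real space or Virtual Space.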

HMC-CEC is a complex machine, and the full implementation may not always be needed. MPAI-HMC V1.1 includes a mechanism to signal the capabilities – called Profiles – of an HMC-CEC implementation.

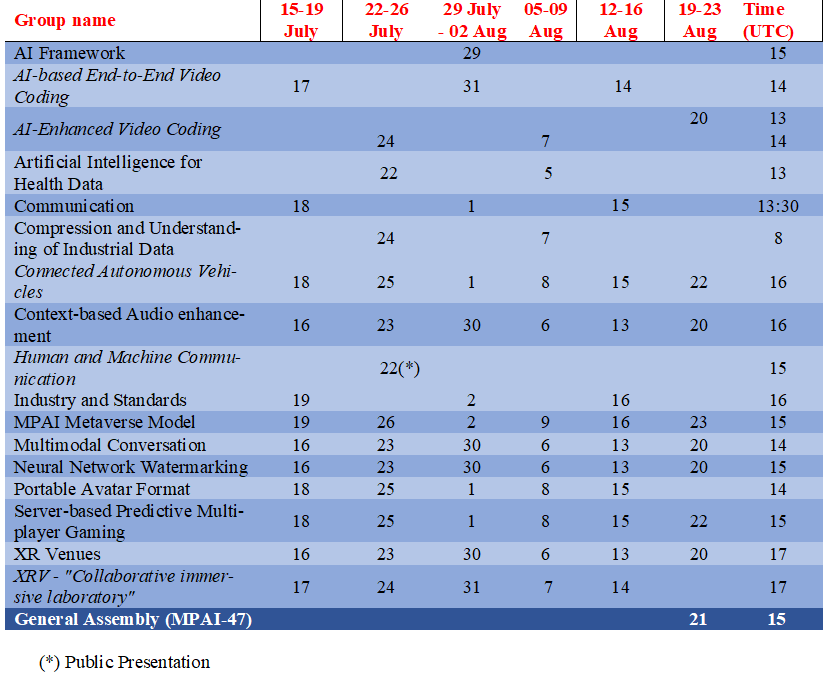
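One way to picture capability signalling is a set of flags that a receiver can test before relying on a feature. The sketch below is an assumption-laden illustration, not the Profile syntax of MPAI-HMC V1.1: the capability names and the `supports` helper are hypothetical.

```python
from enum import Flag, auto


class Capability(Flag):
    """Hypothetical capability flags; not the names used by the standard."""
    AV_SCENE = auto()
    PERSONAL_STATUS = auto()
    TRANSLATION = auto()
    PORTABLE_AVATAR = auto()


def supports(profile: Capability, required: Capability) -> bool:
    """Return True when every required capability is present in the profile."""
    return (profile & required) == required


# A reduced implementation that exchanges Portable Avatars and translates text.
reduced_profile = Capability.PORTABLE_AVATAR | Capability.TRANSLATION

can_translate = supports(reduced_profile, Capability.TRANSLATION)
can_read_status = supports(reduced_profile, Capability.PERSONAL_STATUS)
```

Here `can_translate` is true and `can_read_status` is false, so a peer would know not to expect Personal Status from this implementation.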

An online presentation of MPAI-HMC V1.1 will be held on 22 July at 15 UTC. To attend, register at:

https://us06web.zoom.us/meeting/register/tZEtde-orTwqE9x9sSkauN9CxKsLvbJrIeSF

Meetings in the coming July-August meeting cycle

This newsletter serves the purpose of keeping the expanding and diverse MPAI community connected.

We are keen to hear from you, so don’t hesitate to give us your feedback.