Highlights

Online presentations of the MPAI-UFV Call for Technologies and new/revised standards

The Up-sampling Filter for Video application (MPAI-UFV) V1.0 Call for Technologies

The Neural Network Traceability (MPAI-NNT) V1.0 standard

The Human and Machine Communication (MPAI-HMC) V2.0 standard

What makes an MPAI standard

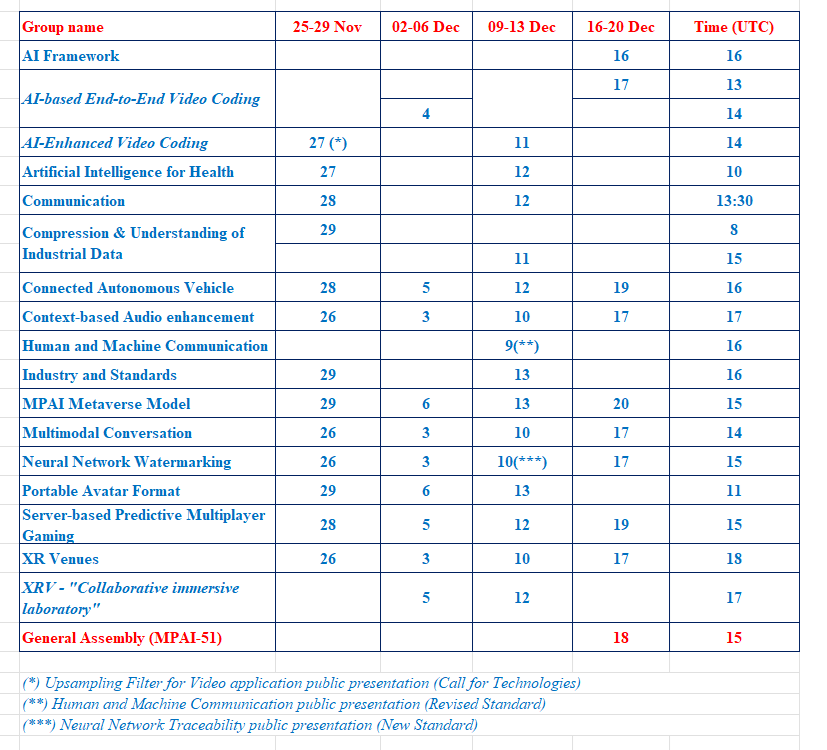

Meetings in the coming November/December meeting cycle

Online presentations of the MPAI-UFV Call for Technologies and new/revised standards

MPAI-50 has approved a Call for Technologies, a new standard, and a new version of a standard that integrates technologies from five other standards. They will be presented online according to the schedule below (the links are for registration).

- Call for Technologies: Up-sampling Filter for Video application (UFV), 2024/11/27T14UTC

- New Standard: Neural Network Traceability (NNT), 2024/12/10T15UTC

- Revised Standard: Human and Machine Communication (HMC), 2024/12/09T16UTC

The Up-sampling Filter for Video application (MPAI-UFV) V1.0 Call for Technologies

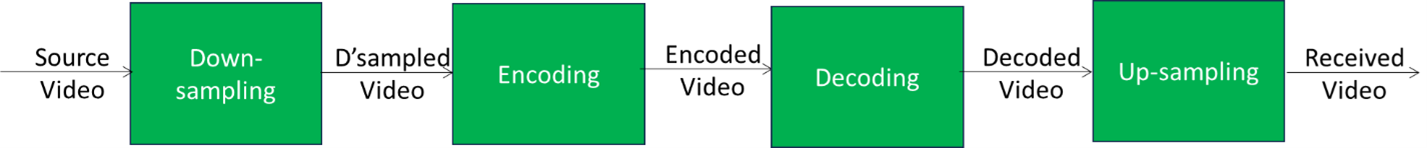

Up-sampling filters are commonly used in TV sets to bring the resolution of a received video up to the resolution of the TV set. They are also used in the setup shown in Figure 1, where a video frame is down-sampled and encoded for transmission, then decoded and up-sampled for display on the receiving device.

Figure 1 – Reference model of a video coding system with an up-sampling filter
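The chain of Figure 1 can be sketched as a toy pipeline. This is a minimal sketch under loudly stated assumptions: frames are small lists of 8-bit luminance values with even dimensions, the encode/decode pair is replaced by a hypothetical uniform quantizer (`toy_codec`) rather than EVC or VVC, and up-sampling uses nearest-neighbour pixel replication as the simplest possible baseline, not the bicubic filter or the SR networks used in the MPAI experiments. PSNR against the original frame is the quality measure.

```python
import math

Frame = list[list[float]]


def downsample2(frame: Frame) -> Frame:
    """Halve width and height by averaging each 2x2 block (assumes even dimensions)."""
    return [
        [(frame[y][x] + frame[y][x + 1] + frame[y + 1][x] + frame[y + 1][x + 1]) / 4
         for x in range(0, len(frame[0]) - 1, 2)]
        for y in range(0, len(frame) - 1, 2)
    ]


def toy_codec(frame: Frame, step: float) -> Frame:
    """Hypothetical stand-in for encode + decode: lossy uniform quantization."""
    return [[step * round(v / step) for v in row] for row in frame]


def upsample2_nearest(frame: Frame) -> Frame:
    """Baseline up-sampling filter: double width and height by pixel replication."""
    out: Frame = []
    for row in frame:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def psnr(ref: Frame, test: Frame, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two frames of equal size."""
    sq = [(a - b) ** 2 for ra, rb in zip(ref, test) for a, b in zip(ra, rb)]
    mse = sum(sq) / len(sq)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


def reference_model(frame: Frame, step: float = 8.0) -> Frame:
    """Figure 1 chain: down-sample, (toy) encode/decode, up-sample for display."""
    return upsample2_nearest(toy_codec(downsample2(frame), step))


original = [[float((16 * x + 8 * y) % 256) for x in range(8)] for y in range(8)]
print(f"PSNR: {psnr(original, reference_model(original)):.2f} dB")
```

A proposed up-sampling filter would take the place of `upsample2_nearest` while the rest of the chain stays fixed, so that PSNR differences can be attributed to the filter.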

Industry uses Super-Resolution (SR) to increase the resolution of images in several products. MPAI has conducted experiments to assess the performance of SR-based SD-to-HD and HD-to-4K up-sampling of EVC- and VVC-encoded video sequences in four combinations of training and testing codecs:

- Training: EVC, Testing: EVC

- Training: VVC, Testing: VVC

- Training: EVC, Testing: VVC

- Training: VVC, Testing: EVC

It was found that:

- The PSNR of the decoded and SR-up-sampled sequences vs the original sequences improves by ~20% compared to bicubic up-sampling.

- SR networks trained with sequences encoded with the same codec as used in testing perform only ~1% better than networks trained with the other codec.

The experiments were primarily conducted with luminance-only sequences, but the results were also confirmed on colour sequences with objective and subjective (expert viewing) methods. The results have led MPAI to conclude that a standard up-sampling filter with optimal performance, applied to a video to generate a video with a higher number of lines and pixels, would benefit many applications. Collaborative development based on the responses to a Call for Technologies is expected to improve significantly on the results obtained in the MPAI experiments.

The MPAI-UFV Call for Technologies invites parties having rights to technologies satisfying the Use Cases and Functional Requirements and the Framework Licence to respond, preferably using the Template for Responses, to enable MPAI to develop the planned Technical Specification: Up-sampling Filter for Video Applications (MPAI-UFV) V1.0.

Respondents are required to provide a complete description of the proposed up-sampling filter, with a level of detail allowing an expert in the field to develop and implement it, and to upload the following software elements to the MPAI server:

- Docker image that contains the encoding and decoding environments, encoder, decoder, and bitstreams.

- Up-sampling filter in source code (preferred) or executable form (proponents of accepted proposals will be requested to provide the source code of their up-sampling filters).

- Python scripts to enable the evaluation team (composed of MPAI members and respondents) to replicate the following submitted results:

  - Tables of objective quality results obtained by the submitter with the proposed solution.

  - The decoded Test Sequences.

  - The up-sampling results for SD to HD and HD to 4K obtained with the proposed solution.

  - The VMAF BD-Rate assessment, provided with a graph for each QP and a table with the minimum, maximum, and average value for each sequence.

  - A complexity assessment using MAC/pixel and the number of parameters of the submitted up-sampling filter. Use of SADL (6) is recommended.
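In general terms, a VMAF BD-Rate is a Bjøntegaard-delta rate computed with VMAF as the quality axis. The sketch below shows the classic Bjøntegaard procedure under stated assumptions: four (bitrate, VMAF) points per curve (one per QP), a cubic fit of log-bitrate as a function of quality, and averaging over the overlapping quality interval. The exact procedure, QPs, and tooling required by MPAI-UFV are defined in the Call documents, not here. With exactly four points the cubic fit is an interpolation, and Simpson's rule integrates a cubic exactly, so no numerical library is needed.

```python
import math
from statistics import mean


def _cubic_through(xs: list[float], ys: list[float]):
    """Lagrange polynomial through four points, i.e. the exact cubic fit."""
    def poly(x: float) -> float:
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return poly


def _integrate_cubic(f, a: float, b: float) -> float:
    """Simpson's rule, exact for polynomials of degree <= 3."""
    return (b - a) / 6.0 * (f(a) + 4.0 * f((a + b) / 2.0) + f(b))


def bd_rate(ref_rates, ref_vmaf, test_rates, test_vmaf) -> float:
    """Average bitrate change (%) of test vs reference at equal VMAF; negative = savings."""
    for points in (ref_rates, ref_vmaf, test_rates, test_vmaf):
        if len(points) != 4:
            raise ValueError("expected four rate/quality points per curve")
    ref_fit = _cubic_through(ref_vmaf, [math.log(r) for r in ref_rates])
    test_fit = _cubic_through(test_vmaf, [math.log(r) for r in test_rates])
    lo = max(min(ref_vmaf), min(test_vmaf))
    hi = min(max(ref_vmaf), max(test_vmaf))
    if hi <= lo:
        raise ValueError("quality ranges do not overlap")
    avg_log_diff = (_integrate_cubic(test_fit, lo, hi) - _integrate_cubic(ref_fit, lo, hi)) / (hi - lo)
    return (math.exp(avg_log_diff) - 1.0) * 100.0


def summarize(per_sequence: dict[str, float]) -> tuple[float, float, float]:
    """Minimum, maximum, and average BD-Rate over sequences."""
    values = list(per_sequence.values())
    return min(values), max(values), mean(values)


ref_kbps, vmaf = [1000.0, 2000.0, 4000.0, 8000.0], [70.0, 80.0, 88.0, 94.0]
test_kbps = [0.9 * r for r in ref_kbps]  # hypothetical: same VMAF at 10% less bitrate
print(f"BD-Rate: {bd_rate(ref_kbps, vmaf, test_kbps, vmaf):.2f}%")  # prints BD-Rate: -10.00%
```

The per-sequence values returned by `bd_rate` can then be collected in a dictionary and passed to `summarize` to obtain the minimum, maximum, and average figures.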
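The complexity figures can be estimated analytically for a convolutional up-sampling filter. The sketch below assumes a hypothetical network whose convolutions all run at the input resolution and whose last layer is followed by a pixel shuffle by the scale factor. It counts MACs per output pixel, ignores activations and the (MAC-free) pixel shuffle, and counts biases as parameters. These conventions are assumptions; the official counting is determined by the Call documents and by SADL, which the Call recommends.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conv2d:
    """A square-kernel 2-D convolution layer (hypothetical description, not a framework API)."""
    in_channels: int
    out_channels: int
    kernel_size: int
    bias: bool = True

    def macs_per_position(self) -> int:
        # One MAC per weight for each spatial position the kernel visits.
        return self.in_channels * self.out_channels * self.kernel_size ** 2

    def parameters(self) -> int:
        # Weights (one per MAC at a position) plus optional biases.
        return self.macs_per_position() + (self.out_channels if self.bias else 0)


def complexity(layers: list[Conv2d], scale: int) -> tuple[float, int]:
    """(MAC per output pixel, number of parameters) for layers run at input resolution."""
    macs_per_input_pixel = sum(layer.macs_per_position() for layer in layers)
    # Each input pixel yields scale * scale output pixels after the pixel shuffle.
    return macs_per_input_pixel / scale ** 2, sum(layer.parameters() for layer in layers)


# Hypothetical x2 luma network: 1 -> 32 -> 32 -> 4 channels, then pixel shuffle (4 = 2 * 2).
net = [Conv2d(1, 32, 3), Conv2d(32, 32, 3), Conv2d(32, 4, 3)]
print(complexity(net, scale=2))  # prints (2664.0, 10724)
```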

Those intending to respond should consult the four documents of the Call here.

The MPAI-UFV V1.0 Call will be presented online on 2024/11/27T14UTC. Register here to attend.

The Neural Network Traceability (MPAI-NNT) V1.0 standard

Since 2021, MPAI has been developing standard methods to assess the performance of Watermarking techniques applied to Neural Networks, producing Technical Specification: Neural Network Watermarking (MPAI-NNW) V1.0 and three versions of its (open-source) Reference Software. MPAI-NNW V1.0 has been adopted by the IEEE Standards Association as IEEE Standard 3304-2023.

Recently, MPAI has extended MPAI-NNW with methods to assess the performance of fingerprinting technologies applied to Neural Networks. The result of this effort is the new Technical Specification: Neural Network Traceability (MPAI-NNT) V1.0, approved by MPAI-50 for publication with a request for Community Comments.

What is Traceability? It is a technology enabling the identification of the origin, or the verification of the integrity, of data. Neural Network Traceability is essential for understanding and tracking the development, use, and evolution of deployed Neural Network models. MPAI-NNT includes methods to assess the performance of two types of traceability technologies: Watermarking, an active method that alters the parameters of the NN to insert Traceability data, and Fingerprinting, a passive method that does not alter the parameters of the NN.

MPAI-NNT specifies methodologies to evaluate the following aspects of active and passive neural network traceability technologies:

- Ability of a neural network traceability detector/decoder to detect/decode/match neural network traceability data when the traced neural network has been modified.

- Computational cost of injecting, extracting, detecting, decoding, or matching neural network traceability data.

- Specifically for active tracing technologies, the impact of the inserted traceability data on the performance of the neural network and on its output (inference).

The Technical Specification assumes that:

- The neural network tracing technology to be evaluated is publicly available.

- The performance of the neural network tracing technology does not depend on secret keys.

The methods are specified in three chapters dedicated to methods for assessing Imperceptibility, Robustness, and Computational cost.
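To make the Robustness criterion concrete, the sketch below evaluates a deliberately simple, hypothetical active tracing scheme that is not taken from MPAI-NNT: each traceability bit is written into the sign of one selected weight (with a minimum magnitude), the traced weights are then modified by additive Gaussian noise of increasing strength, and the decoder's bit error rate (BER) is reported for each strength. Weights are a flat list of floats; the scheme, the noise model, and all names are assumptions for illustration. In line with the assumptions above, nothing is secret: the embedding positions are passed openly to the decoder.

```python
import random


def embed(weights: list[float], bits: list[int], positions: list[int],
          strength: float = 0.05) -> list[float]:
    """Toy active tracing: encode each bit in the sign of one weight (1 -> positive)."""
    marked = list(weights)
    for bit, pos in zip(bits, positions):
        magnitude = max(abs(marked[pos]), strength)
        marked[pos] = magnitude if bit else -magnitude
    return marked


def decode(weights: list[float], positions: list[int]) -> list[int]:
    return [1 if weights[pos] > 0 else 0 for pos in positions]


def add_noise(weights: list[float], sigma: float, rng: random.Random) -> list[float]:
    """Modification applied to the traced network: additive Gaussian noise."""
    return [w + rng.gauss(0.0, sigma) for w in weights]


def bit_error_rate(sent: list[int], received: list[int]) -> float:
    return sum(s != r for s, r in zip(sent, received)) / len(sent)


def robustness(weights, bits, positions, sigmas, seed: int = 0) -> dict[float, float]:
    """BER of the decoder for each noise strength sigma."""
    rng = random.Random(seed)
    marked = embed(weights, bits, positions)
    return {s: bit_error_rate(bits, decode(add_noise(marked, s, rng), positions)) for s in sigmas}


rng = random.Random(42)
weights = [rng.gauss(0.0, 0.1) for _ in range(1000)]
bits = [rng.randint(0, 1) for _ in range(64)]
positions = rng.sample(range(len(weights)), len(bits))
curve = robustness(weights, bits, positions, sigmas=[0.0, 0.01, 0.1, 1.0])
for sigma, ber in curve.items():
    print(f"sigma={sigma}: BER={ber:.3f}")
```

With this toy scheme the BER is expected to drift towards 0.5 (chance level) as sigma grows; an actual MPAI-NNT evaluation would use the modifications and metrics specified in the standard.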

The MPAI-NNT V1.0 standard will be presented online on 2024/12/10T15UTC. Register here to attend.

The Human and Machine Communication (MPAI-HMC) V2.0 standard

MPAI has recently undertaken the task of developing the Conformance Testing of a group of its standards: Context-based Audio Enhancement (MPAI-CAE) V2.3, Multimodal Conversation (MPAI-MMC) V2.3, Object and Scene Description (MPAI-OSD) V1.2, Portable Avatar Format (MPAI-PAF) V1.3, and Human and Machine Communication (MPAI-HMC) V2.0, the last of which integrates many technologies from the four other Technical Specifications.

MPAI-HMC includes one AI Workflow named Communicating Entities in Context (HMC-CEC). This enables Entities to communicate with other Entities possibly in different Contexts where:

- Entity refers to one of:

  - a human in an audio-visual scene, or represented as a Digitised Human in an Audio-Visual Scene.

  - a Digital Human, representing a human (Digitised Human) or a Machine (Virtual Human), in an Audio-Visual Scene rendered as an audio-visual scene.

- Context is information describing Attributes of an Entity, such as language, culture, etc.

- Note that the same word, non-capitalised and capitalised, represents an object in the real world and its digital representation in the Virtual World, respectively.

Depending on its real or virtual nature, an Entity communicates with another Entity by:

- Using the human’s body, speech, Context, and the audio-visual scene the human is immersed in, or

- Rendering the Virtual Entity as a speaking humanoid in an audio-visual scene,

and by emitting Communication Items.

A Communication Item is a Portable Avatar, a Data Type including Data related to an Avatar and its Context enabling a receiver to render an Avatar as intended by the sender.

HMC-CEC V2.0 assumes that:

- Input/Output Audio and Visual are Audio Object and Visual Object, respectively.

- The real space is digitally represented as an Audio-Visual Scene that includes the communicating human and may include other humans and generic objects.

- The Virtual Space contains a Digital Human and/or its Speech components and may include other Digital Humans and generic Objects in an Audio-Visual Scene.

- The Machine can:

  - Understand the semantics of the Communication Item at different layers of depth.

  - Produce a multimodal response expected to be congruent with the received information.

  - Render the response as a speaking Virtual Human in an Audio-Visual Scene.

  - Convert the Data produced by an Entity to Data whose semantics is compatible with the Context of another Entity.

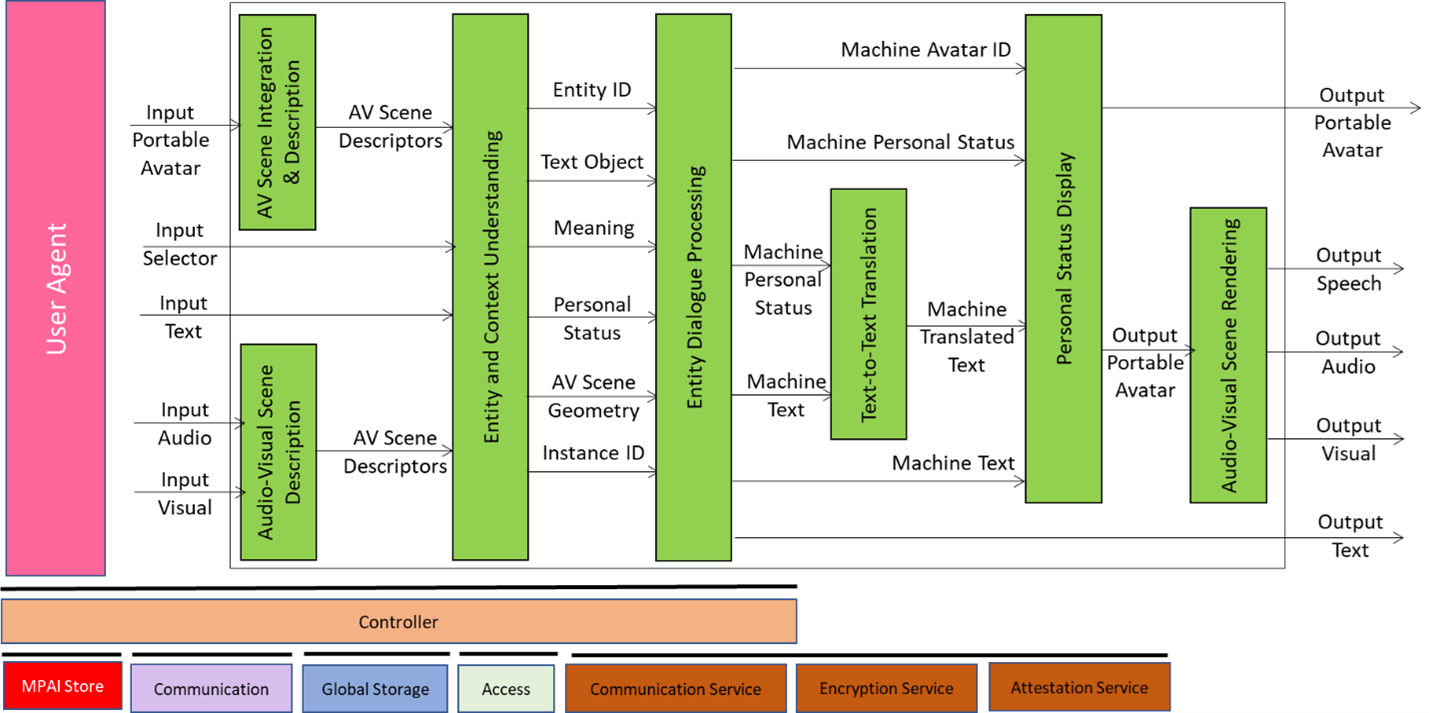

Figure 2 gives the reference model of MPAI-HMC V2.0.

Figure 2 – Reference Model of Communicating Entities in Context (HMC-CEC) V2.0 AIW

HMC-CEC can receive Portable Avatars or real scenes, which are converted into Audio-Visual Scenes. The Entity and Context Understanding AIM processes and understands either or both Scenes irrespective of their origin. The Entity Dialogue Processing AIM produces the Machine's response to the input Scenes as Text and a (fictitious) Personal Status of the Machine, which are converted to a Portable Avatar and displayed.
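The data flow just described can be sketched as a chain of functions. Everything in the sketch is hypothetical and heavily simplified: the data types carry only a few illustrative fields (the Portable Avatar Format defines its own), the two AIM functions merely borrow the names of the AIMs above, and the dialogue step is a placeholder that echoes the input instead of running a dialogue model.

```python
from dataclasses import dataclass, field
from typing import Union


@dataclass
class AudioVisualScene:
    """Hypothetical, simplified scene: recognised speech plus Context attributes."""
    speech_text: str
    context: dict[str, str] = field(default_factory=dict)


@dataclass
class PortableAvatar:
    """Hypothetical subset of a Portable Avatar's data."""
    text: str
    personal_status: str
    language: str


def to_scene(item: Union[AudioVisualScene, PortableAvatar]) -> AudioVisualScene:
    """Real scenes are assumed to arrive already captured as scenes; avatars are converted."""
    if isinstance(item, PortableAvatar):
        return AudioVisualScene(item.text, {"language": item.language})
    return item


def entity_and_context_understanding(scene: AudioVisualScene) -> dict[str, str]:
    return {"utterance": scene.speech_text, "language": scene.context.get("language", "en")}


def entity_dialogue_processing(understanding: dict[str, str]) -> tuple[str, str]:
    # Placeholder for a dialogue model: returns (response Text, Machine Personal Status).
    return f"You said: {understanding['utterance']}", "neutral"


def hmc_cec(item: Union[AudioVisualScene, PortableAvatar]) -> PortableAvatar:
    understanding = entity_and_context_understanding(to_scene(item))
    text, status = entity_dialogue_processing(understanding)
    # The Machine's response is packaged as a Portable Avatar for rendering.
    return PortableAvatar(text, status, understanding["language"])


print(hmc_cec(AudioVisualScene("Hello", {"language": "it"})))
```

Keeping both input paths behind `to_scene` mirrors the point made above: understanding works on Scenes irrespective of whether they came from a real space or from a Portable Avatar.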

The MPAI-HMC V2.0 standard will be presented online on 2024/12/09T16UTC. Register here to attend.

What makes an MPAI standard

Standards are tools to build a special kind of society. Some standard-enabled societies are monocratic: this happens when an authority, a state or a company, sets a standard, as was the case with the Roman mile and the metre. A standard-enabled society can be democratic when interested parties (or their representatives) gather to agree on a standard. The latter type of society can be based on an economy, when the use of a standard is paid for by one or more of the parties involved in its use, or the standard can be offered for free use.

A society is generally based on rules. In a standard-enabled society, the rule book is the standard itself. The standard specifies, hopefully unambiguously, how a “thing” should be made so that society members can use it seamlessly. Another rule book (sometimes included in the first) specifies how “disputes” on the correct use of the standard can be resolved.

So far, MPAI has produced 14 standards (the latest is Neural Network Traceability, approved by MPAI-50 and briefly introduced in this newsletter). The formal name of an MPAI standard is Technical Specification (rule book #1). Some standards also include rule book #2, called Conformance Testing Specification.

What is Conformance Testing of an MPAI AI Module (AIM) or AI Workflow (AIW) when the technology used to implement them is Artificial Intelligence? In general, the only possible answer is that the AIM/AIW should perform the function specified in the Technical Specification and produce syntactically correct data when fed with syntactically correct data. This guarantees that an AIW continues to operate if one of its AIMs is replaced with a new Conformance-Tested AIM.

Of course, this does not guarantee that the AIW will continue its operation with the same level of performance, but only that the AIW “does not crash”.
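A minimal sketch of this notion of conformance, under stated assumptions: data are represented as dictionaries, syntax is reduced to a hypothetical schema mapping each field name to a Python type, and an AIM is any function from input data to output data. The test checks syntax only, so passing it says nothing about how well the AIM performs; the `dummy_asr` AIM below passes while being useless.

```python
from typing import Any, Callable

Schema = dict[str, type]
AIM = Callable[[dict[str, Any]], dict[str, Any]]


def is_valid(data: Any, schema: Schema) -> bool:
    """Syntactic check: exactly the schema's fields, each of the expected type."""
    return (isinstance(data, dict) and set(data) == set(schema)
            and all(isinstance(data[name], kind) for name, kind in schema.items()))


def conformance_test(aim: AIM, test_inputs: list[dict[str, Any]],
                     input_schema: Schema, output_schema: Schema) -> bool:
    """True if the AIM produces syntactically valid output for every valid test input."""
    for item in test_inputs:
        if not is_valid(item, input_schema):
            raise ValueError(f"test input is not syntactically correct: {item!r}")
        if not is_valid(aim(item), output_schema):
            return False
    return True


# Hypothetical speech-recognition-like AIM interface.
ASR_IN: Schema = {"speech": list, "language": str}
ASR_OUT: Schema = {"text": str}
inputs = [{"speech": [0.0, 0.1, -0.2], "language": "en"}]


def dummy_asr(data: dict[str, Any]) -> dict[str, Any]:
    return {"text": ""}  # conformant even though useless: only syntax is tested


def broken_asr(data: dict[str, Any]) -> dict[str, Any]:
    return {"text": 42}  # wrong type: not conformant


print(conformance_test(dummy_asr, inputs, ASR_IN, ASR_OUT))   # prints True
print(conformance_test(broken_asr, inputs, ASR_IN, ASR_OUT))  # prints False
```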

To help users select a “good” AIM (or AIW) for their needs, MPAI has introduced “rule book #3”, called Performance Assessment Specification, which provides methods to assess the performance of an AIM or AIW.

But Performance is multidimensional because it can have various connotations. A Performance Assessment Specification should provide methods to measure how well an AIM/AIW performs its function, using a metric that depends on the nature of the function, such as:

- Quality: the Performance of an Automatic Speech Recognition (ASR) AIM can be the word error rate (WER).

- Bias: the Performance of an Automatic Speech Recognition (ASR) AIM can measure how much higher or lower its WER is when the speaker is from a particular geographic area.

- Legal compliance: the Performance of an AIM can measure the compliance of an AIM/AIW with a regulation, e.g., the European AI Act.

- Ethical compliance: the Performance Assessment can measure the compliance of an AIM/AIW with a target ethical standard.
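The first two metrics can be made concrete. The sketch below computes WER as the word-level edit distance (substitutions, deletions, insertions) divided by the number of reference words, aggregated per group of speakers, and expresses bias as the gap between the highest and lowest group WER. The group labels and the max-minus-min gap are illustrative assumptions, not metrics taken from an MPAI Performance Assessment Specification.

```python
def _word_edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Levenshtein distance over words (substitution, deletion, insertion cost 1)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, 1):
            cur[j] = min(prev[j] + 1,             # deletion
                         cur[j - 1] + 1,          # insertion
                         prev[j - 1] + (r != h))  # substitution or match
        prev = cur
    return prev[-1]


def word_error_rate(reference: str, hypothesis: str) -> float:
    ref = reference.split()
    if not ref:
        raise ValueError("reference transcript is empty")
    return _word_edit_distance(ref, hypothesis.split()) / len(ref)


def wer_by_group(samples: list[tuple[str, str, str]]) -> dict[str, float]:
    """Corpus WER per group from (group, reference, hypothesis) triples."""
    errors: dict[str, int] = {}
    words: dict[str, int] = {}
    for group, reference, hypothesis in samples:
        ref = reference.split()
        errors[group] = errors.get(group, 0) + _word_edit_distance(ref, hypothesis.split())
        words[group] = words.get(group, 0) + len(ref)
    return {group: errors[group] / words[group] for group in errors}


def bias_gap(group_wer: dict[str, float]) -> float:
    """Illustrative bias measure: highest minus lowest group WER."""
    return max(group_wer.values()) - min(group_wer.values())


samples = [  # hypothetical speakers from two geographic areas
    ("area A", "turn left at the station", "turn left at the station"),
    ("area B", "turn left at the station", "turn lift at this station"),
]
wers = wer_by_group(samples)
print(wers, f"gap={bias_gap(wers):.2f}")  # prints {'area A': 0.0, 'area B': 0.4} gap=0.40
```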

The scope of Performance Assessment is simply enormous. Publishing Performance Assessment Specifications can be the role of MPAI, but how is the Performance of an AIM/AIW actually assessed? MPAI envisages that there will be individuals and institutions who have the technical capabilities to carry out such assessments. They will be identified and appointed by MPAI as “Performance Assessors”. They will issue their Assessments and users will make their decisions based on them.

There is one last aspect of a standard-enabled society that needs to be considered: multilingualism. By this word we do not refer to natural languages (English is good enough for technical purposes) but to computer languages. MPAI develops Reference Software Implementations of its Technical Specifications, so that the rule book also exists in a computer language.

We are now ready to introduce the notion of MPAI Standard. An MPAI Standard is a selection of the four “rule books”: Technical Specification, Reference Software, Conformance Testing, and Performance Assessment.

Meetings in the coming November/December meeting cycle