Highlights

- An overview of the Connected Autonomous Vehicle – Technologies standard

- The AIW and AIM implementation Guidelines Technical Report

- Meetings in the coming May/June 2025 meeting cycle

An overview of the Connected Autonomous Vehicle – Technologies standard

- Introduction

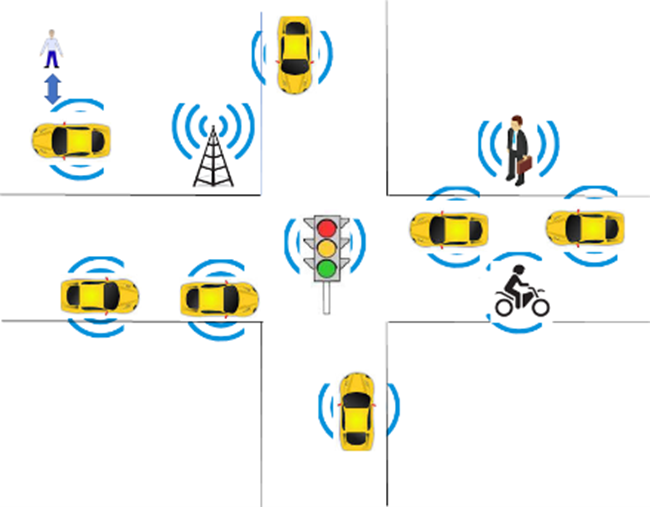

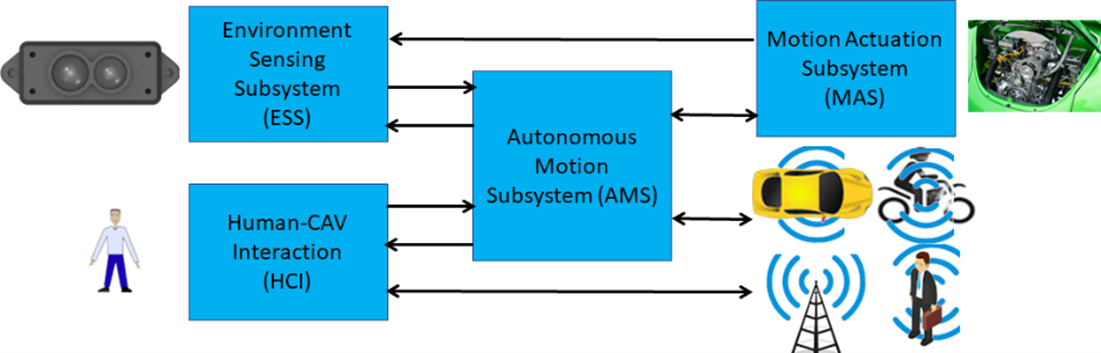

Technical Specification: Connected Autonomous Vehicle (MPAI-CAV) – Technologies (CAV-TEC) V1.0 specifies CAV as a system that instructs a vehicle with at least three wheels to reach a Destination from a current Pose at the request of a human or a process respecting the local traffic law, exploiting information that is captured and processed by the CAV and communicated by other CAVs. Figure 1 represents an example of the type of environment that a CAV is requested to traverse and Figure 2 depicts the four subsystems of which a CAV is composed, although this partitioning is not a functional requirement as components of a subsystem may be located in another subsystem, provided the interfaces specified by CAV-TEC are preserved.

Figure 1 – An example of an environment traversed by a CAV

In Figure 2, a human approaches a CAV and requests the Human-CAV Interaction Subsystem (HCI) to be taken to a destination using a combination of four media – Text, Speech, Face, and Gesture. Alternatively, a remote process may make a similar request to the CAV.

Figure 2 – The subsystems of a CAV

Either request is passed to the Autonomous Motion Subsystem (AMS), which requests the Environment Sensing Subsystem (ESS) to provide the current CAV Pose. With this information from ESS (current Pose), the Destination, and access to Offline Maps, the AMS can propose one or more Routes, one of which the human or process can select.

With the human aboard, the AMS continues to receive environment information from the ESS – possibly complemented with information received from other CAVs in range – and instructs the Motion Actuation Subsystem to make appropriate motions.

- Human-CAV Interaction

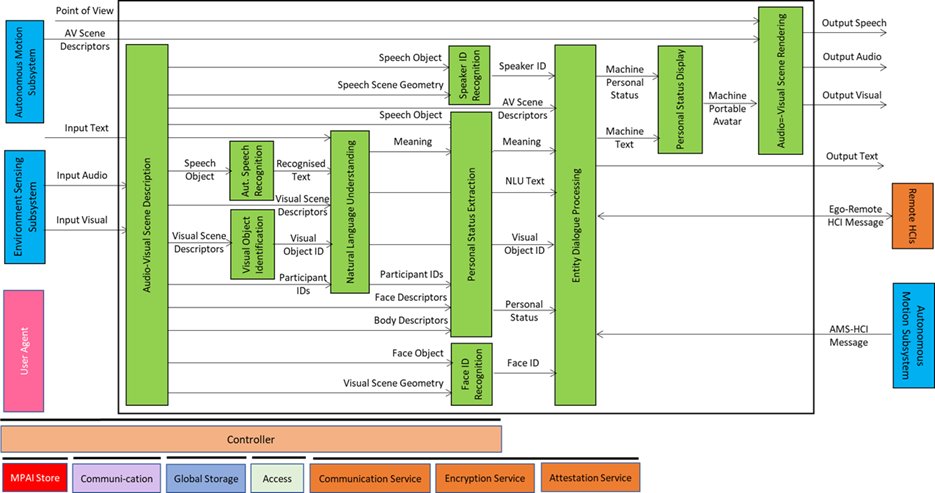

The operation of the Human-CAV Interaction (HCI) in its interaction with humans is best explained using the CAV-HCI Reference Model of Figure 3.

Figure 3 – Reference Model of CAV-HCI

The Audio-Visual Scene Description (AVS) monitors the environment and produces Audio-Visual Scene Descriptors from which it extracts Speech Scene Descriptors and from these, Speech Objects corresponding to any speaking humans in the environment surrounding the CAV. Visual Scene Descriptors may also provide the Face and Body Descriptors of all humans present.

The CAV activates Automatic Speech Recognition (ASR) to get the speech of each human recognised and converted into Recognised Text. Each Speech Object is identified according to their position in space. The CAV also activates the Visual Object Identification (VOI) that is able to produce the Instance IDs of Visual Objects as indicated by humans.

Natural Language Understanding (NLU) processes the Speech Objects, produces Refined Text, and extracts Meaning from the Text of each input Speech. This process is facilitated by the use of the IDs of the Visual Objects provided by VOI.

Speaker Identity Recognition (SIR) and Face Identity Recognition (FIR) help the CAV to reliably obtain the Identifiers of the humans the HCI is interacting with. If the Face ID(s) provided by FIR correspond to the ID(s) provided by SIR, the CAV may proceed to attend to further requests. Especially with humans aboard, Personal Status Extraction (PSE) provides useful information regarding the humans’ state of mind by extracting their Personal Status.

The CAV interacts with humans through Entity Dialogue Processing (EDP). When a human requests to be taken to a Destination, the EDP interprets and communicates the request to the Autonomous Motion Subsystem (AMS). A dialogue may then ensue where the AMS may offer different choices to satisfy potentially different human needs (e.g., a long but comfortable Route or short but less predictable).

While the CAV moves to the Destination, the HCI may have a conversation with the humans, show the Full Environment Descriptors developed by the AMS to the passengers, and may communicate information about the CAV from the Ego AMS or even from the HCIs of remote CAVs.

The HCI responds using the two main outputs of the EDP: Text and Personal Status. These are used by the Personal Status Display (PSD) to produce the Portable Avatar of the HCI conveying Speech, Face, and Gesture synthesised to render the HCI Text and Personal Status. Audio-Visual Scene Rendering (AVR) renders Audio, Speech, and Visual information using the HCI Portable Avatar. Alternatively, it can display the AMS’s Full Environment Descriptors from the Point of View selected by the human.

The HCI interacts with passengers in several ways:

- By responding to commands/queries from one or more humans at the same time, e.g.:

- Commands to go to a waypoint, park at a place, etc.

- Commands with an effect in the cabin, e.g., turn off air conditioning, turn on the radio, call a person, open a window or door, search for information, etc.

- By conversing with and responding to questions from one or more humans at the same time about travel-related issues, e.g.:

- Humans request information, e.g., time to destination, route conditions, weather at destination, etc.

- Humans ask questions about objects in the cabin.

- By following the conversation on travel matters held by humans in the cabin if

- The passengers allow the HCI to do so, and

- The processing is carried out privately inside the CAV.

3. Environment Sensing Subsystem

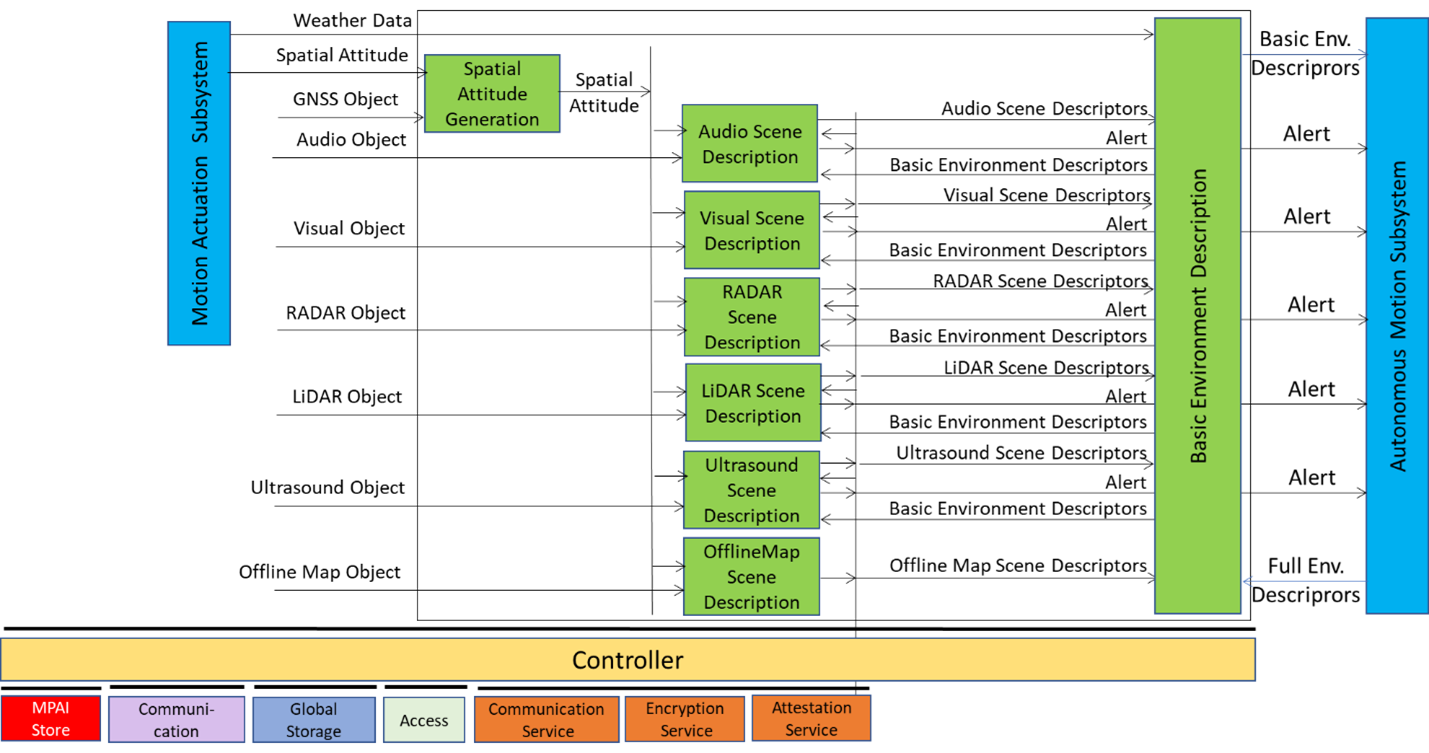

The operation of the Environment Sensing Subsystem (ESS) is best explained using the Reference Model of the CAV-ESS subsystem depicted in Figure 4.

Figure 4 – Reference Model of CAV-ESS

When the CAV is activated in response to a request by a human owner or renter or by a process, Spatial Attitude Generation continuously computes the CAV’s Spatial Attitude relying on the initial Motion Actuation Subsystem’s Spatial Attitude, and information from the Global Navigation Satellite Systems (GNSS), if available.

An ESS may be equipped with a variety of Environment Sensing Technologies (EST). CAV-TEC assumes they are (but not required to all be supported by an ESS implementation) Audio, LiDAR, RADAR, Ultrasound, and Visual. Offline Map is considered as an EST.

An EST-specific Scene Description receives EST-specific Data Objects, produces EST specific Scene Descriptors which are integrated into the Basic Environment Descriptors (BED) by the Basic Environment Description using all available sensing technologies, Weather Data, Road State, and possibly the Full Environment Descriptors of previous instants provided by the AMS. Note that, although in Figure 4 each sensing technology is processed by an individual EST, an implementation may combine two or more Scene Description AIMs to handle two or more ESTs, provided the relevant interfaces are preserved. An EST-specific Scene Description may need to access the BED of previous instants and may produce Alerts that are immediately communicated to AMS.

The Objects in the BEDs may carry Annotations specifically related to traffic signalling, e.g.: Position and Orientation of traffic signals in the environment, Traffic Policemen, Road signs (lanes, turn right/left on the road, one way, stop signs, words painted on the road), Traffic signs – vertical signalisation (signs above the road, signs on objects, poles with signs), Traffic lights, Walkways, and Traffic sounds (siren, whistle, horn).

4. Autonomous Motion Subsystem

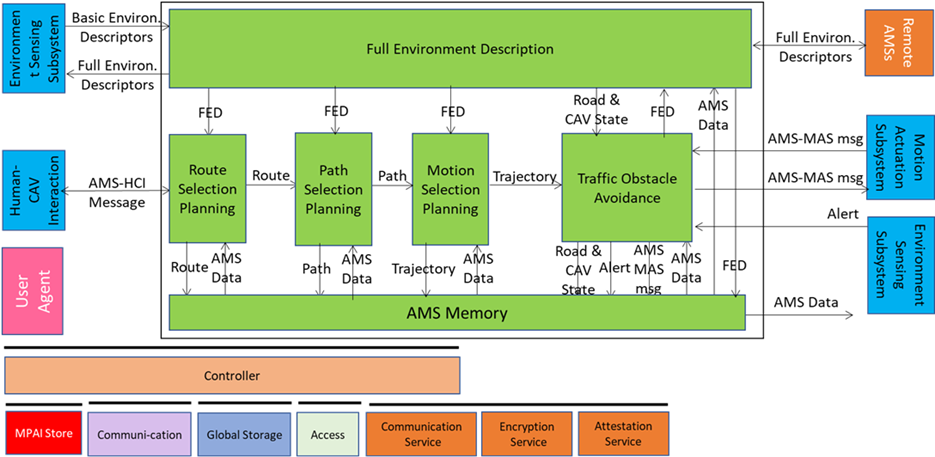

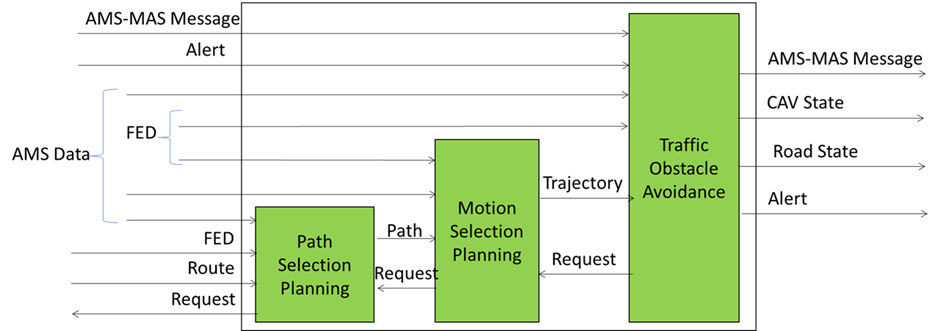

The operation of the Autonomous Motion Subsystem (AMS) is best explained using the Reference Model of the CAV-AMS subsystem depicted in Figure 5.

Figure 5 – Reference Model of CAV-AMS

When the HCI sends the AMS a request of a human or a process to move the CAV to a Destination, Route Planning uses the Basic Scene Descriptors from the ESS and produces a set of Waypoints starting from the current Pose up to the Destination.

When the CAV is in motion, Route Planning causes Path Selection Planning to generate a set of Poses to reach the next Waypoint. Full Environment Description may request the AMSs of Remote CAVs to send (subsets of) their Scene Descriptors and integrates all sources of Environment Descriptors into its Full Environment Descriptors (FED), and may also respond to similar requests from Remote CAVs.

Motion Selection Planning generates a Trajectory to reach the next Pose in each Path. Traffic Obstacle Avoidance receives the Trajectory and checks if any Alert was received that would cause a collision with the current Trajectory. If a potential collision is detected, Traffic Obstacle Avoidance requests a new Trajectory from Motion Planner, otherwise Traffic Obstacle Avoidance issues an AMS-MAS Message to Motion Actuation Subsystem (MAS).

The MAS sends an AMS-MAS Message to AMS informing it about the execution of the AMS-MAS Message received. The AMS, based on the received AMS-MAS Messages, may discontinue the execution of the earlier AMS-MAS Message, issue a new AMS-MAS Message, and inform Traffic Obstacle Avoidance. The decision of each element of the chain may be recorded in the AMS Memory (“black box”).

5. Motion Actuation Subsystem

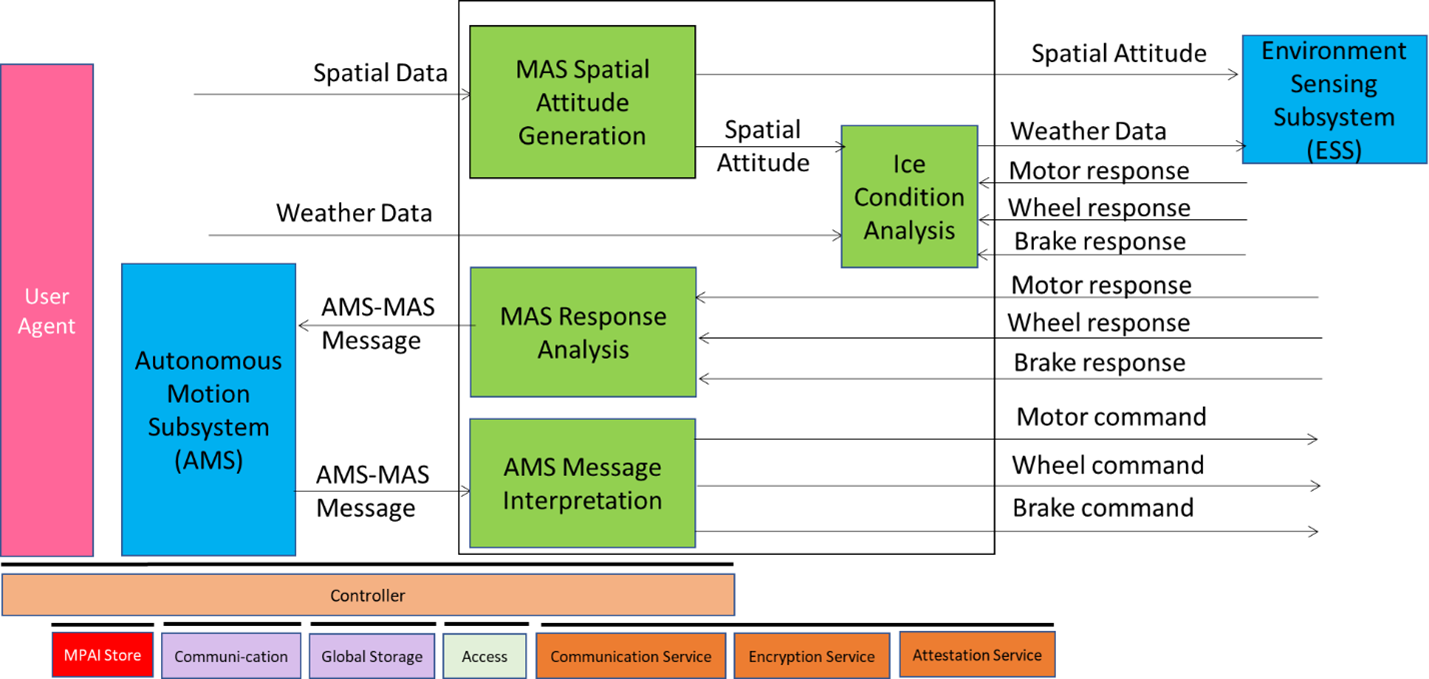

The operation of the Motion Actuation Subsystem (MAS) is best explained using the Reference Model of the CAV-MAS subsystem depicted in Figure 6.

Figure 6 – Reference Model of CAV-AMS

Figure 6 – Reference Model of CAV-AMS

When the AMS Message Interpretation receives the AMS-MAS Message from the AMS, it interprets the Messages, partitions it into commands, and sends them to the Brake, Motor, and Wheel mechanical subsystems. CAV-TEC is silent on how the three mechanical subsystems process the commands but specifies the format of the commands issued to AMS Message Interpretation. The result of the interpretation is sent as an AMS-MAS Message to AMS.

MAS includes two more AIMs. Spatial Attitude Generation computes the initial Ego CAV’s Spatial Attitude using the Spatial Data provided by Odometer, Speedometer, Accelerometer, and Inclinometer. This initial Spatial Attitude is sent to the ESS to integrate its GSNN-based Spatial Attitude. Ice Condition Analysis augments the Weather Data by analysing the Brake, Motor, and Wheel mechanical subsystems’ responses and sends the augmented Weather Data to the ESS.

The AIW and AIM Implementation Guidelines Technical Report

- Introduction

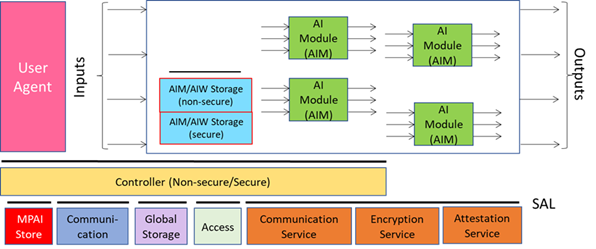

Technical Specification: AI Framework (MPAI-AIF) V2.1 is the standard that has enabled MPAI to implement the idea of exposing the internal structure of AI systems, enabling a competitive market of interchangeable components while making AI systems more user-friendly and “explainable”. An AI system is implemented as an AI Workflow (AIW) composed of AI Modules (AIM) executed in an AI Framework (AIF).

Figure 7 – The reference model of the MPAI-AIF standard

MPAI standards use the AI Framework but, as is obvious, they are silent on how AI Framework-based implementations can be made.

Technical Report: AIW and AIM implementation Guidelines (MPAI-WMG) V1.0 published for Community Comments by the 56th MPAI General Assembly (MPAI-56) tries to cover this gap. MPAI-WMG can do that because it is not a standard but a Technical Report (TR) and a Guideline.

The TR covers three related aspects:

- Clarification of the text of AIWs and some of their AIM specifications where appropriate.

- Analysis of issues regarding the implementation of all AIWs and a subset of their AIMs.

- Study the applicability of the Perceptible and Agentive AI (PAAI) model to AIWs and AIMs.

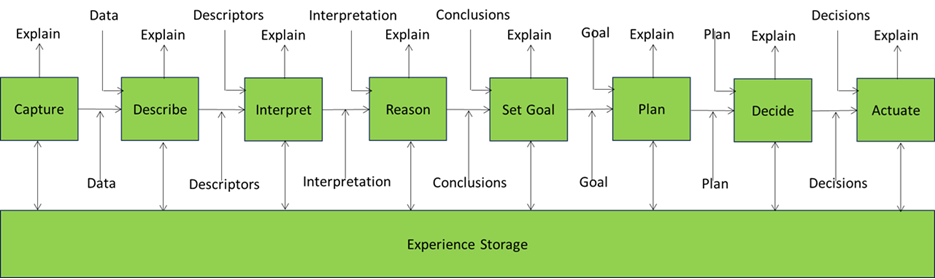

The last aspect is to be seen from the perspective of extending the capabilities of the MPAI-AIF standard using the MPAI-developed PAAI notion of an AI system that includes some or all the following elementary functionalities:

Table 1 – Functions of a PAAI

| Representation | To represent captured AV information as Data. |

| Description | To represent Data as Descriptors, e.g., AV Scene Descriptors. |

| Interpretation | To represent Descriptors as Interpretations, e.g., speech recognition. |

| Conclusion | To represent the result of reasoning about Interpretations as Conclusions. |

| Communication | To exchange Data with another PAAI. |

| Goal setting | To establish the goals to be reached. |

| Planning | To reach the goal through structured plans. |

| Decision | To decide how to implement a Conclusion. |

| Action | To actuate a Decision. |

| Explanation | To explain the path that led to a Decision. |

| Storage/Retrieval | To store/retrieve Experiences (relevant data from any of the stages above). |

| Learning | To improve the performance of a stage while experiencing. |

A PAAI Experience is defined as the relevant set of data produced by a PAAI during its operation.

Figure 8 depicts the flow of data in its different forms across the stages of a PAAI.

Figure 8 – Graphical representation of a Perceptible and Agentive AI (PAAI)

All AIWs and AIMs considered by MPAI-WMG are treated as PAAIs. However, in some cases the function of an AIM is limited to performing basic data processing functions that are required to enable the use of the MPAI-AIF “Lego” approach to building AI Systems.

The analysis is done for the following standards:

- Connected Autonomous Vehicle (MPAI-CAV) – Technologies (CAV-TEC) V1.0

- Context-based Audio Enhancement (MPAI-CAE) – Use Cases (CAE-USC) V2.3

- Human and Machine Communication (MPAI-HMC) V2.0

- Multimodal Conversation (MPAI-MMC) V2.3

- Object and Scene Description (MPAI-OSD) V1.3

- Portable Avatar Format (MPAI-PAF) V1.4

This article will consider two examples drawn from MPAI-WMG, the Entity Dialogue Processing (MMC-EDP) of MPAI-MMC and Trajectory Planning and Decision (CAV-TPD) of CAV-TEC.

- Entity Dialogue Processing

The basic function of the Entity Dialogue Processing (MMC-EDP) PAAI is to receive an input Text and produce an output Text. For reasons of completeness, we include the case of an EDP that is only able to respond to a finite set of questions and provide the corresponding answers, even though this case is not part of any Use Case considered so far. In general, however, this function is performed by a general-purpose or purpose-built Large Language Model (LLM) or Small Language Model (SLM). We can assume that an LLM or SLM includes the Reasoning function (PAAI).

In its use cases, MPAI has considered EDPs that also produce Personal Status as additional output. This is done when the EDP tries to infer, from the input Text or from other data types provided as input, the internal state of the Entity – which MPAI assumes can be either a human or a process rendering an avatar – that has generated the Text, because there are other AIMs in the chain that can produce or utilise this information. Note that the internal state that is inferred may have a good correspondence with a human’s internal state or can just be a simulated internal state of an avatar.

Other MPAI Use Cases assume that the EDP can handle more data types as input.

- Entity ID. An EDP may be provided with the ID of the Entity producing the Text. This information may be used to access previously stored PAAI data (Storage/Retrieval).

- Meaning. Some AIWs include an AIM called Natural Language Understanding (NLU) that produces Refined Text and Meaning from Recognised Text. The Meaning can help the EDP to produce a better response.

- Personal Status. Some AIWs include one or more AIMs able to produce the Personal Status of the Entity generating Text – directly or by using an Automatic Speech Recognition (ASR). Since it may include information extracted from Text, Speech, Face, and Gesture, the Personal Status can significantly improve the performance of an EDP.

- Audio-Visual Scene Geometry. Some AIWs are able to provide information about the spatial context in which the information-providing Entity operates.

- Audio-Visual Object IDs. Other AIWs can also provide information about the identity of one or more Audio or Visual or Audio-Visual Objects present in the spatial environment of the Entity.

In conclusion, the LLM implementing the EDP could be provided with the following prompt:

Please respond to Text provided by the Entity which is believed to hold the Personal Status and is located in a scene populated by the following identified objects and Entities located at the following coordinates of the scene.

The data referred to by the words in italics are provided by the data provided to the EDP.

The EDP AIM performs Interpretation and Reasoning Level Operations (see Table 1).

- Trajectory Planning and Decision

CAV-TPD is the PAAI that executes the Route that has been requested to a CAV. It does so by planning, deciding, and sending messages containing the Spatial Attitude that the CAV should reach in an assigned time frame.

CAV-TEC proposes three PAAIs – Path Selection Planning, Motion Selection Planning, and Traffic Obstacle Avoidance.

Figure 9 – Reference Model of Trajectory Planning and Decision

The Path Selection Planning PAAI receives the Route and breaks it down to a sequence of Paths. A Path is sent to the Motion Selection Planning PAAI which decides the Trajectory that should be used to execute the Path. The Trajectory is sent to the Traffic Obstacle Avoidance (CAV-TOA) PAAI which makes a final check on any Alert messages that CAV-TOA might have received. If this last check is successful, CAV-TOA sends an AMS-MAS Message to the CAV Motion Actuation Subsystem containing the request to move the CAV to a Pose with a specified Spatial Attitude. If the Alert makes the execution of the Trajectory impossible, a request for a new Trajectory is sent to CAV-MSP. If this is unable to find another Trajectory, it requests a new Path to CAV-PSP. If another Path is not possible, it requests a new Route.

CAV-TPD stores and retrieves relevant internal data from AMS Memory. It is “adaptable” because it can dynamically adjust its operation based on an Alert coming from a Scene Description AIM.

CAV-TPD performs Descriptors, Interpretation, Reasoning, Goal Setting, Planning, Conclusion, and Decision Level Operation as defined in Table 1.

Meetings in the coming May/June 2025 meeting cycle

| Group name | 19-23 May | 26-30 May | 02-06 June | 09-13 June | Time (UTC) |

| AI Framework | 19 | 26 | 2 | 9 | 16 |

| AI-based End-to-End Video Coding | 21 | 4 | 14 | ||

| AI-Enhanced Video Coding | 21 | 28 | 4 | 10 | 13 |

| Artificial Intelligence for Health | 11 | 14 | |||

| 26 | 15 | ||||

| Communication | 22 | 5 | 13:30 | ||

| Compression & Understanding of Industrial Data | 30 | 09 | |||

| 5 | 09:30 | ||||

| Connected Autonomous Vehicle | 22 | 29 | 5 | 12 | 15 |

| Context-based Audio enhancement | 20 | 27 | 3 | 10 | 16 |

| Industry and Standards | 23 | 6 | 16 | ||

| MPAI Metaverse Model | 23 | 30 | 6 | 13 | 15 |

| Multimodal Conversation | 20 | 27 | 3 | 10 | 14 |

| Neural Network Watermarking | 20 | 27 | 3 | 10 | 15 |

| Portable Avatar Format | 23 | 30 | 6 | 13 | 10 |

| XR Venues | 20 | 27 | 3 | 10 | 17 |

| General Assembly (MPAI-57) | 11 | 15 |