Highlights

- Online presentation of MPAI Metaverse Model – Technologies (MMM-TEC) V2.1 standard

- Exploring the innovations of the MMM-TEC V2.1 standard

- Soon MPAI will be five – part 3

- Meetings in the coming August/September 2025 meeting cycle

Online presentation of MPAI Metaverse Model – Technologies standard

Version 2.1 of the MPAI Metaverse Model – Technologies (MMM-TEC) standard adds major functionalities to the previous V2.0. These are your available options to learn about it:

- Read the online text of the standard here.

- Read a brief introduction to the standard here.

- Attend the online presentation on 12 September at 15 UTC by registering here.

- The MMM-TEC V2.1 standard on 12 September at 15 UTC (link).

- The MPAI-OSD V1.4 and MPAI-PAF V1.5 standards on 12 September at 10 UTC (link).

- The MPAI-TFA standard on Wednesday 17 September at 15 UTC (link).

- The MPAI-GME V2.0 standard on Friday 26 September at 14 UTC (link).

In any case, provide your comments to the MPAI Secretariat by 2025/09/27 T23:59 UTC.

Exploring the innovations of the MMM-TEC V2.1 standard

The MPAI Metaverse Model (MPAI-MMM) – Technologies (MMM-TEC) specification is based on an innovative approach. As in the real world (Universe) we have animate and inanimate things, in an MPAI Metaverse (M-Instance) we have Processes and Items. Processes can animate Items (things) in the metaverse but can also act as a bridge between metaverse and universe. For convenience, MMM-TEC defines four classes of Processes: Apps, Devices, Services, and Users.

Probably the most interesting one is the User, defined as the “representative” of a human, where representation means that the human is responsible for what their Users do in the metaverse. The representation function can be very strict, because the human drives everything one of their Users does, or very loose, because the User is a fully autonomous agent (still under the human’s responsibility). As the User is a Process, it cannot be “perceived” except through what it does, but it can render itself in a perceptible form, called a Persona, that may visually appear as a humanoid. A human can have more than one User, and a User can be rendered with more than one Persona.

Humans can do interesting things in the world, but what interesting things can they do in the metaverse? MMM-TEC answers this question by offering a range of 28 basic Actions, called Process Actions. An important one is Register. By Registering, a human gets the Rights to import (via the UM-Send Action) and deploy (via the Execute Action) Users and render (by e.g., MM-Adding) Personae. UM-Send means sending things from the universe to the metaverse and MM-Add means placing an Avatar and then possibly animating it (MM-Animate) with a stream or rendering it (MU-Actuate) in the universe.

Universe and metaverse are connected, but they should be mutually “protected”. One example of what this means is that data from the universe cannot simply be imported into the metaverse, but is first captured (UM-Capture), then identified (Identify) – i.e., converted into an Item – and finally acted upon, e.g., used to animate an avatar. Also, a User is not entitled to do just anything anywhere in the metaverse because its operation is governed by three basic notions: Rights, expressing the fact that a User (in general, a Process) may perform a certain Process Action; Rules, expressing the fact that a Process may, may not, or must perform a Process Action; and P-Capabilities, expressing the fact that the Process can perform certain Process Actions.

What if a Process wants to perform a Process Action, has the Rights to perform it, and its performance complies with the Rules, but it cannot, i.e., it does not know how to perform it? MMM-TEC makes use of a philosophy of language notion called Speech Act that is expressed by an individual and contains both information and action. For instance, User MU-Actuates Persona At M-Location At U-Location With Spatial Attitude will mean that the User renders at U-Location in the universe with a certain Position and Orientation the Persona that is placed at an M-Location in the Metaverse. If the User can – i.e., it has the P-Capabilities to – MU-Actuate the Persona, for instance because it is connected to the universe via an appropriate device, and may, i.e., it has the Rights to MU-Actuate, and the planned Process Action complies with the Rules, then the Process Action is performed. However, if the User does not have the necessary P-Capabilities or does not have the Rights to MU-Actuate the Persona, it can ask an Import-Export Service to do this on its behalf. Possibly, the Service will request that a Transaction be made in order to perform the requested Process Action.
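The decision described above can be pictured with a small Python sketch. It is only an illustration under stated assumptions: the `Process` class, the `resolve_performer` function, and the choice to treat an Action with no Rule as permitted are hypothetical and are not taken from the MMM-TEC specification. The Transaction that the Service may request is left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Rule(Enum):
    MAY = "May"
    MAY_NOT = "May not"
    MUST = "Must"


@dataclass
class Process:
    """Hypothetical model of a Process (User, Service, ...)."""
    name: str
    rights: set[str] = field(default_factory=set)          # Actions it may perform
    p_capabilities: set[str] = field(default_factory=set)  # Actions it can perform

    def is_able_and_entitled(self, action: str) -> bool:
        return action in self.rights and action in self.p_capabilities


def resolve_performer(user: Process, action: str,
                      rules: dict[str, Rule], service: Process) -> str | None:
    """Return the name of the Process that performs the action, or None."""
    # Assumption: an Action without an explicit Rule is treated as permitted.
    if rules.get(action) is Rule.MAY_NOT:
        return None
    if user.is_able_and_entitled(action):
        return user.name
    # The User lacks Rights or P-Capabilities: ask the Service instead.
    if service.is_able_and_entitled(action):
        return service.name
    return None


# A User with the Rights but without a connected device (no P-Capabilities).
user = Process("User", rights={"MU-Actuate"})
service = Process("Import-Export Service",
                  rights={"MU-Actuate"}, p_capabilities={"MU-Actuate"})
performer = resolve_performer(user, "MU-Actuate", {}, service)
print(performer)
```

In this sketch the request falls through to the Import-Export Service because the User holds the Rights but not the P-Capabilities.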

As a last point, we should describe how MMM-TEC represents Rights and Rules. MMM-TEC states that Rights are, in general, a collection of Process Actions that the Process can perform. Each of them is preceded by Internal, Acquired, or Granted to indicate if the Rights were obtained at the time of Registration, were acquired (e.g., by a Transaction), or are Granted (and then possibly withdrawn) by another Process. Similarly, Rules are expressed by Process Actions each of which is preceded by May, May not, or Must.
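As an illustration of this representation, the following Python sketch models Rights and Rules as Process Actions tagged with a prefix. The class names, the plain-string Actions, and the `withdraw_granted` helper are assumptions made for this example; the actual data formats are defined in the standard.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    INTERNAL = "Internal"   # obtained at Registration
    ACQUIRED = "Acquired"   # e.g., obtained by a Transaction
    GRANTED = "Granted"     # given, and possibly withdrawn, by another Process


class Modality(Enum):
    MAY = "May"
    MAY_NOT = "May not"
    MUST = "Must"


@dataclass(frozen=True)
class Right:
    origin: Origin
    action: str

    def __str__(self) -> str:
        return f"{self.origin.value} {self.action}"


@dataclass(frozen=True)
class RuleEntry:
    modality: Modality
    action: str

    def __str__(self) -> str:
        return f"{self.modality.value} {self.action}"


def withdraw_granted(rights: list[Right], action: str) -> list[Right]:
    """Hypothetical helper: drop only the Granted entries for an Action."""
    return [r for r in rights
            if not (r.origin is Origin.GRANTED and r.action == action)]


rights = [
    Right(Origin.INTERNAL, "MM-Add"),
    Right(Origin.ACQUIRED, "MM-Animate"),
    Right(Origin.GRANTED, "MU-Actuate"),
]
rules = [RuleEntry(Modality.MAY_NOT, "MU-Actuate")]

remaining = withdraw_granted(rights, "MU-Actuate")
print([str(r) for r in remaining])
print([str(r) for r in rules])
```

Keeping the prefix next to each Action makes it possible to withdraw a Granted Right without touching Rights that were obtained at Registration or acquired.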

We could add many more details to give a complete description of the MMM-TEC potential. You can directly access the standard here, but now we want to address some of the innovations introduced by MMM-TEC V2.1.

The first is the set of new capabilities provided by the Property Change Process Action. We said that we can MM-Add a Persona and then MM-Animate it. But what if we are preparing a theatre performance and we do not want “to be seen” while rehearsing? Property Change can set the Perceptibility Status of an Item but can also change:

- The properties of a visual Item in terms of its size, mass, material (i.e., to signal that the object is material or immaterial), gravity (is subject to gravity or not), and texture map.

- The audio characteristics of an object: Reflectivity, Reverberation, Diffusion, and Absorption.

- The properties of a light source: Type (Point, Directional, Spotlight, Area), colour, and intensity of the light source.

- The properties of an audio source: Diffuseness, Directional Patterns, Shape, and Size.

- The Personal Status (i.e., emotion) of an avatar.

Another important set of functionalities is provided by significant extensions of how a Process in the metaverse can affect the universe. MMM-TEC V2.1 allows a User to MU-Actuate at a U-Location an Item MM-Added at an M-Location. How can this Process Action be performed? We assume that the M-Instance is connected to a special Device that can perform the following in the universe:

- Pick an existing object.

- Drive a 3D printer that produces the analogue version of the Item.

- Render a 2D or a 3D media object.

MMM-TEC V2.1 calls R-Item any physical object in the universe, including the object produced by a 3D printer and the 2D or 3D media object produced. It also defines the following additional Process Actions:

- MU-Add an R-Item: to place an R-Item (a physical object) somewhere in the universe with a Spatial Attitude.

- MU-Animate an R-Item: to animate, e.g., a robot, with a stream.

- MU-Move an R-Item from a U-Location to another U-Location along a Trajectory.

MMM-TEC is rigorous in defining how Process Actions can be performed in an M-Instance, but what about the universe? Do we want Processes to perform actions in the universe in an uncontrolled way?

The answer is clear: the M-Instance does not control the Universe through some supernatural force but through Devices whose operation is conditional on the Rights and P-Capabilities held by the Device to perform the desired Process Actions in the universe. The Process Actions beginning with “MU-” include the Rights of a Device to act on the universe.

V2.1 adds several new use cases to the long list of V2.0. One of these is called “Emergency in Industrial Metaverse”:

- An M-Location includes the Digital Twin of a real factory (R-Factory) where the regular operation is separated from emergency operation described by the use case.

- An “emergency” User in the Digital Twin (V-Factory):

  - Has the Rights to actuate and animate an “emergency” robot in the R-Factory.

  - Can be rendered as a Persona having the appearance of the corresponding robot.

- In case of an emergency, the User:

  - Activates an alarm in the R-Factory.

  - Actuates its “emergency” robot (Analogue Twin) in the R-Factory.

  - Animates the robot to solve the problem.

  - Renders its Persona so that humans can see what is happening in the R-Factory.

- When the emergency is resolved, the robot is moved to its repository.

You are invited to register to attend the online presentation on 12 September at 15 UTC and provide your comments to the MPAI Secretariat by 2025/09/27 T23:59 UTC.

Soon MPAI will be five – Part 3

This is the third episode of a series of articles that revisit the driving force that allowed MPAI to develop 15 standards in five years in a variety of fields where AI can make the difference and to have half a score under development. The articles intend to pave the way for the next five years of MPAI.

3 The MPAI mission

According to Article 3 of the MPAI Statutes, the mission of MPAI is to develop standards for data coding using Artificial Intelligence (AI). However, to fully understand this mission, two fundamental questions must be addressed: What exactly is a data coding standard? And what role does AI play in data coding?

In today’s digital society, it is widely recognized that we are inundated with data. One estimate predicts that by 2025, approximately 180 zettabytes (1 zettabyte = 10²¹ bytes) of data will be generated – a 20% increase from the estimated 150 zettabytes produced in 2024. This raises critical questions: What does all this data represent? What are the costs associated with storing even a portion of it? And how expensive is it to transmit such vast amounts of information?

These concerns are not new. Since the advent of the digital era, it has been clear that applications often do not require all available data, or that data can be represented in more compact forms using fewer bits. This concept laid the foundation for data processing techniques that have been refined over time, ultimately enabling the widespread use of technologies like video. But new problems and needs keep surfacing. We still need to represent the same information with less data, but there is also a growing need to automatically “understand” the meaning of so much data, and we are using ever larger amounts of data to transfer human knowledge to machines to equip them with new capabilities – what we have become accustomed to calling Artificial Intelligence.

What does it mean to develop AI standards, then?

It is one thing to say that we need standards for AI and quite another to say what a standard for AI should specify. The first norm is that the standard should specify what flows through the interface of an AI System – data – but not what is in the AI System that processes input data and produces output data. What constitutes an AI System is left undefined. It can be a large system or a small one, as long as the system retains a practical value as an individual entity.

A standard that enables the building of larger AI Systems from smaller components has several advantages: it gives users a better idea of how the system operates, facilitates the integration of components from disparate fields of competence, enables the optimisation of individual components, promotes the availability of components from competing developers, and more.

Component systems are thus a target for AI-based data coding standards, but what is the process that can produce candidate components for standardisation? The answer to this question is in identifying application domains and, within these, representative use cases. For each use case, an analysis is made of what components might be needed and which data enter and exit systems and components. The process could be implemented because the MPAI membership comes indeed from a variety of domains: media, finance, human-machine interaction, online gaming, entertainment, and more.

Having components – the building blocks – is a necessity but how should components interconnect with other components to make a system? And, once there is a system, how is it going to be executed?

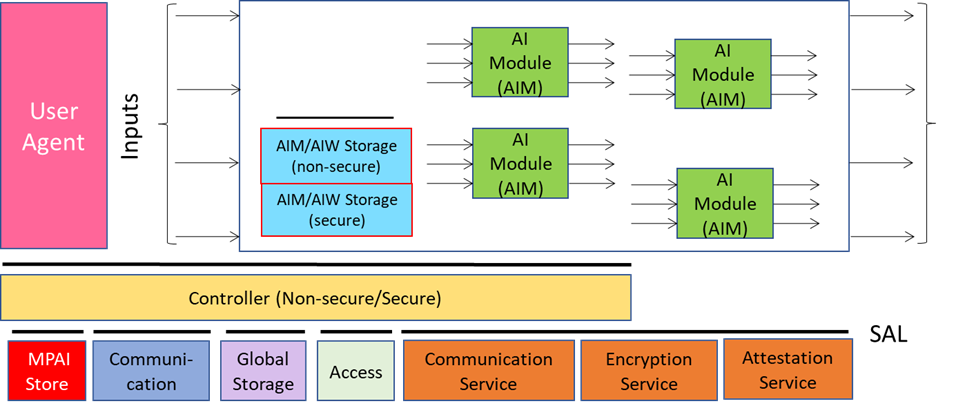

This was one of the first questions that MPAI had to address. The solution found was called AI Framework, an environment enabling initialisation, dynamic configuration, execution, and control of components called AI Modules (AIM) assembled in systems called AI Workflows (AIW).

The reference model of the standard – called AI Framework (MPAI-AIF), now at Version 2.1 – is depicted in Figure 2. The model assumes that there is:

- A User Agent so that users can act on the system.

- A Controller that:

  - Provides basic functionalities such as scheduling, communication between AIMs and other AIF Components such as AIM-specific and global storage.

  - Acts as a resource manager, according to instructions given by the User through the User Agent.

  - Exposes API for User Agent, AIMs, and Controller-to-Controller.

  - Downloads AIWs and AIMs from the MPAI Store.

- Communication connecting an output Port of an AIM with an input Port of another AIM.

Figure 2 – Reference Model of MPAI-AIF

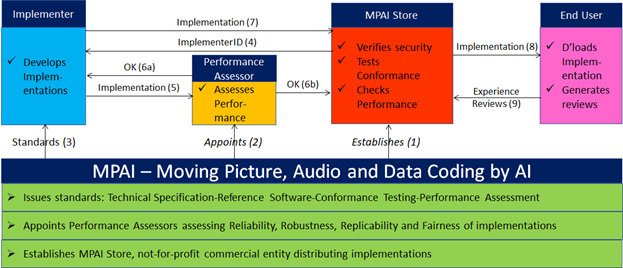
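To make the idea of AIMs connected through Ports more concrete, here is a toy Python sketch. It is not the MPAI-AIF API: the `AIM` class, the `Connection` tuple, the `execute` function, and the two example modules are all hypothetical, and the "controller" below only runs each AIM once its input Ports have received data.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AIM:
    """Hypothetical AI Module: named input Ports and a processing function."""
    name: str
    inputs: tuple[str, ...]
    run: Callable[..., dict]  # keyword args = input Ports, returns {output Port: value}


# (source AIM, output Port, destination AIM, input Port)
Connection = tuple[str, str, str, str]


def execute(aims: dict[str, AIM], connections: list[Connection],
            external_inputs: dict[tuple[str, str], object]) -> dict[str, dict]:
    """Toy controller: run every AIM of the workflow exactly once."""
    port_data: dict[str, dict] = {name: {} for name in aims}
    for (aim_name, port), value in external_inputs.items():
        port_data[aim_name][port] = value

    pending = dict(aims)
    outputs: dict[str, dict] = {}
    while pending:
        ready = [a for a in pending.values()
                 if all(p in port_data[a.name] for p in a.inputs)]
        if not ready:
            raise RuntimeError("unconnected input Port or cyclic workflow")
        for aim in ready:
            result = aim.run(**port_data[aim.name])
            outputs[aim.name] = result
            # Forward each output to the input Ports it is connected to.
            for src, out_port, dst, in_port in connections:
                if src == aim.name:
                    port_data[dst][in_port] = result[out_port]
            del pending[aim.name]
    return outputs


# A two-module workflow: split a sentence, then count the words.
aims = {
    "Splitter": AIM("Splitter", ("text",), lambda text: {"words": text.split()}),
    "Counter": AIM("Counter", ("words",), lambda words: {"count": len(words)}),
}
connections = [("Splitter", "words", "Counter", "words")]
results = execute(aims, connections, {("Splitter", "text"): "AI modules in a workflow"})
print(results["Counter"])
```

Because the modules only meet at their Ports, either one could be swapped for another implementation with the same Ports without changing the rest of the workflow.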

The MPAI Store is a foundational element of the AIF architecture. Assume that you are an AIM developer and that you want to make available your latest AIM for users to download and use in their systems, e.g., to replace an existing AIM with the same functionality in an app. Who certifies that the AIM is a correct implementation of an MPAI standard, and is also secure?

This is a new problem because, in the current context, the integration of components is done by a device, service, or application developer who has both the means and the technical expertise to do a proper job. Instead, MPAI is attempting to make possible a world where components – not full-fledged applications – can be downloaded by a user without particular expertise and integrated in an application developed by a third party.

This is an issue because any standard gives rise to an ecosystem whose actors are: the entity developing the standard, the implementer of the standard, the user of the standard, and the guarantor of the correct functioning of the ecosystem. Depending on the application domain, the guarantor can be the state, a certification authority, or even the manufacturer. Making the state the guarantor is not a solution for the current reality. In principle, the “manufacturer” of MPAI solutions (AIW) may very well not exist, but only the developer of basic components distributed as AIMs. So, we need a new entity – the MPAI Store.

The MPAI Store is incorporated in Scotland as a company limited by guarantee, a not-for-profit business set up to serve social, charitable, community-based or other non-commercial objectives. MPAI has signed an agreement with the MPAI Store giving it the exclusive rights to act as Implementer ID Registration Authority (IIDRA). Any entity wishing to develop and distribute AI Systems (AIW) and components (AIM) for use in an AI Framework (AIF) shall obtain an ID from the IIDRA.

How is an AIW or AIM implementation distributed? The registered implementer submits the implementation to the MPAI Store. The implementation is tested for conformance according to the Conformance Testing specification, a document developed, approved, and published by MPAI for each MPAI standard. If the implementation passes conformance testing, it is verified for security and posted on the MPAI Store website for users to access, download, and install.
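The order of the checks in this distribution process can be summarised in a short Python sketch. All names are hypothetical, the conformance and security checks are stand-in callables, and the rejection messages are assumptions, since the text above only describes what happens when every step succeeds.

```python
from typing import Callable


def process_submission(implementer_id: str,
                       implementation: str,
                       registered_ids: set[str],
                       passes_conformance: Callable[[str], bool],
                       passes_security: Callable[[str], bool],
                       store: list[tuple[str, str]]) -> str:
    """Sketch of the gating order: registration, conformance, security, posting."""
    if implementer_id not in registered_ids:
        return "rejected: implementer has no ID from the IIDRA"
    if not passes_conformance(implementation):
        return "rejected: conformance testing failed"
    if not passes_security(implementation):
        return "rejected: security verification failed"
    store.append((implementer_id, implementation))
    return "published"


store: list[tuple[str, str]] = []
status = process_submission(
    implementer_id="implementer-001",           # hypothetical ID
    implementation="example-aim-1.0",           # hypothetical AIM package name
    registered_ids={"implementer-001"},
    passes_conformance=lambda impl: True,       # stand-in for Conformance Testing
    passes_security=lambda impl: True,          # stand-in for security verification
    store=store,
)
print(status, store)
```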

All done, then? Well, in general at least, no. Conformance simply checks that an implementation receives data with the correct format, does what the reference standard specifies, and produces data with the correct format. But there is no guarantee that the AIM or AIW does a “good job”.

This is the consequence of an MPAI standard specifying input and output data and functionality but being silent on how the functionality is achieved. The Performance Assessment specification mentioned above addresses the need for a measure of how “good” an implementation is. As we have already mentioned, “good” has many dimensions, and it would make no sense for the MPAI Store to attempt to issue statements on how good an implementation is. MPAI can appoint entities as Performance Assessors for specific domains, and the MPAI Store can publish Performance Assessments of an implementation.

Figure 3 depicts the actors of the MPAI ecosystem and their functions.

Figure 3 – Management of the MPAI Ecosystem

Technical Report: Governance of the MPAI Ecosystem (MPAI-GME) V2.0 specifies the functions and responsibilities of the MPAI Ecosystem’s actors.

(to be continued)

Meetings in the coming August/September 2025 meeting cycle

| Group name | 25-29 Aug | 01-05 Sep | 08-12 Sep | 15-19 Sep | 22-26 Sep | 29 Sep-03 Oct | Time (UTC) |
|---|---|---|---|---|---|---|---|
| AI Framework | | 1 | 8 | 15 | 22 | 29 | 16 |
| AI-based End-to-End Video Coding | 27 | | 10 | | 24 | | 14 |
| AI-Enhanced Video Coding | 27 | 3 | 10 | 17 | 24 | 1 | 13 |
| Artificial Intelligence for Health | | | 8 | | | | 15 |
| Communication | 28 | | 11 | | 25 | | 13:30 |
| Compression & Understanding of Industrial Data | 28 | | | | | | 8:30 |
| | | | | 15 | | | 15 |
| Connected Autonomous Vehicle | 28 | 4 | 11 | 18 | 25 | 2 | 15 |
| Context-based Audio enhancement | 26 | 2 | 9 | 16 | 23 | | 16 |
| Data Types, Formats, and Attributes | | | | 17 (*) | | | 15 |
| Governance of the MPAI Ecosystem | | | | | 26 (*) | | 14 |
| Industry and Standards | 29 | | 12 | | 26 | | 16 |
| MPAI Metaverse Model | 29 | 5 | 12 (*) | 19 | 26 | 3 | 15 |
| Multimodal Conversation | 26 | 2 | 9 | 16 | 23 | | 14 |
| Neural Network Watermarking | 26 | 2 | 9 | 16 | 23 | | 15 |
| Portable Avatar Format | 29 | 5 | 12 (*) | 19 | 26 | 3 | 10 |
| XR Venues | 26 | 2 | 9 | 16 | 23 | | 17 |
| General Assembly (MPAI-60) | | | | | | 30 | 15 |

(*) public presentations