Highlights

- Test the performance of the Up-sampling Filter for Video applications online

- A web of intertwining standards

- More about the new project on Autonomous Users in an MMM metaverse instance

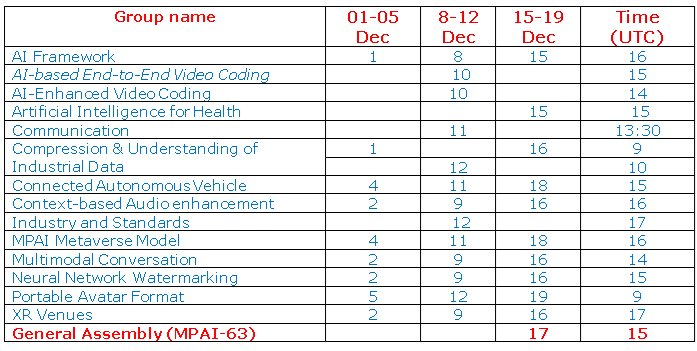

- Meetings in the December 2025 cycle

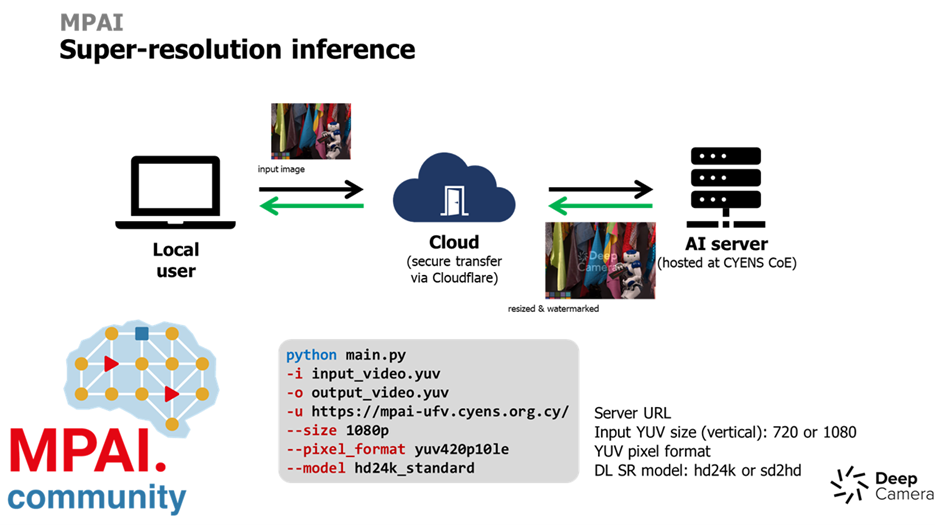

Try the Up-sampling Filter for Video applications standard online

The sixty-second MPAI General Assembly (MPAI-62) has published the latest MPAI standard: AI-Enhanced Video Coding (MPAI-EVC) – Up-sampling Filter for Video applications (EVC-UFV). The standard specifies two methods: 1) the design of AI-based super-resolution up-sampling filters for video applications, and 2) the reduction of the complexity of the designed filters without substantially affecting their performance.

The EVC-UFV standard includes the parameters of an example neural network for up-sampling standard-definition TV images to HDTV resolution and HDTV images to Ultra-High Definition (4K). You can use this network to test the filter performance. Alternatively, you can use the online application to submit an image and receive an up-sampled version of it.

Here is how to use the online application, with a command-line example:

python main.py -i input_video.yuv -o output_video.yuv -u https://mpai-ufv.cyens.org.cy/ --size 1080p --pixel_format yuv420p10le --model hd24k_standard

where:

| Option | Meaning |
|---|---|
| -i | the input file name |
| -o | the output file name |
| -u | the server URL (https://mpai-ufv.cyens.org.cy/) |
| --size | the number of lines of the input frame (e.g., 1080p) |
| --pixel_format | the pixel format of the input file (yuv420p10le) |
| --model | one of sd2hd (standard definition to HD) or hd24k (HD to 4K), followed by one of the two suffixes below |
| _full | suffix selecting the filter not reduced in complexity |
| _standard | suffix selecting the filter reduced in complexity |
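The .yuv files passed with -i and -o are raw, headerless video, so the frame geometry has to be known in advance to split a file into frames. As a hedged sketch, assuming that 1080p means 1920 x 1080 luma samples and that yuv420p10le follows the usual FFmpeg layout (planar Y, U, V; chroma subsampled by 2 in both directions; each 10-bit sample stored in a 16-bit little-endian word), the Python below computes the size of one frame, the number of frames in a file, and extracts the luma plane. The function names are hypothetical and are not part of main.py.

```python
import sys
from array import array


def frame_bytes_yuv420p10le(width: int, height: int) -> int:
    """Size in bytes of one raw yuv420p10le frame (hypothetical helper).

    Each 10-bit sample occupies a 16-bit little-endian word (2 bytes), and
    the U and V planes are subsampled by 2 horizontally and vertically.
    """
    if width % 2 or height % 2:
        raise ValueError("4:2:0 subsampling needs an even width and height")
    luma_samples = width * height
    chroma_samples = (width // 2) * (height // 2)  # per chroma plane
    return 2 * (luma_samples + 2 * chroma_samples)


def count_frames(file_size: int, width: int, height: int) -> int:
    """Number of frames in a headerless .yuv file of file_size bytes."""
    size = frame_bytes_yuv420p10le(width, height)
    if file_size % size:
        raise ValueError("file size is not a whole number of frames")
    return file_size // size


def luma_plane(frame: bytes, width: int, height: int) -> array:
    """Y samples of one frame as unsigned 16-bit integers."""
    y = array("H")  # "H" = unsigned short, 2 bytes on common platforms
    y.frombytes(frame[: 2 * width * height])  # Y plane comes first
    if sys.byteorder == "big":
        y.byteswap()  # samples are stored little-endian in the file
    return y


if __name__ == "__main__":
    # Assumption: "--size 1080p" means 1920 x 1080 luma samples.
    print(frame_bytes_yuv420p10le(1920, 1080))  # 6220800
```

For example, `count_frames(os.path.getsize("input_video.yuv"), 1920, 1080)` would give the number of 1080p frames in the input file, assuming it contains only whole frames.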

A web of intertwining standards

With the approval of Up-sampling Filter for Video applications, the number of approved MPAI standards has reached 15. MPAI implements its mission of developing standards for AI-based data coding in any application domain that needs efficient data representation, and two-thirds of its standards define components, called AI Modules (AIMs), that are often reused in other MPAI standards.

What are these 15 MPAI standards? Here is the list:

| Acronym | Title | Acronym | Title |
|---|---|---|---|
| MPAI-AIF | AI Framework | MPAI-MMM | MPAI Metaverse Model |
| MPAI-CUI | Compression and Understanding of Industrial Data | MPAI-MMC | Multimodal Conversation |
| MPAI-CAV | Connected Autonomous Vehicle | MPAI-NNW | Neural Network Traceability |
| MPAI-CAE | Context-based Audio Enhancement | MPAI-OSD | Object and Scene Description |
| MPAI-TFA | Data Types, Formats, & Attributes | MPAI-PAF | Portable Avatar Format |
| MPAI-GME | Governance of the MPAI Ecosystem | MPAI-PRF | AI Module Profiles |
| MPAI-HMC | Human and Machine Communication | MPAI-SPG | Server-based Predictive multiplayer Gaming |
| MPAI-EVC | AI-Enhanced Video Coding | | |

What does component reusability mean in practice? Let's first see which standards provide components to which other standards. The table below should be read by columns: the rows marked in a column indicate the standards that receive components from the standard heading that column. For instance, the AIF column shows that the AI Framework (AIF) provides technologies to CUI, CAV, CAE, HMC, MMC, NNT, OSD, PAF, and SPG.

| AIF | CUI | CAV | CAE | HMC | EVC | MMM | MMC | NNT | OSD | PAF | PRF | SPG | TFA | |

| AIF | X | |||||||||||||

| CUI | X | |||||||||||||

| CAV | X | X | X | X | X | X | ||||||||

| CAE | X | X | X | |||||||||||

| EVC | ||||||||||||||

| HMC | X | X | X | X | X | |||||||||

| MMM | X | X | X | X | X | |||||||||

| MMC | X | X | X | X | X | X | ||||||||

| NNT | X | |||||||||||||

| OSD | X | X | X | X | X | X | ||||||||

| PAF | X | X | X | X | X | |||||||||

| PRF | ||||||||||||||

| SPG | X | |||||||||||||

| TFA |

You can see that the AI Framework (AIF), which defines the environment where AI Workflows composed of AI Modules are executed, provides components to 9 of the other 14 standards, and TFA (Data Types, Formats, and Attributes) feeds 7 of 14 standards. OSD (Object and Scene Description) is another foundational standard, feeding 6 of 14 standards. Three more standards, CAE (Context-based Audio Enhancement), MMC (Multimodal Conversation), and PAF (Portable Avatar Format), feed five standards each. PRF (AI Module Profiles) is a standard at an early stage that defines profiles for AI Modules and, in the future, also for AI Workflows. So far, profiles have been defined for only three standards: MMC, OSD, and PAF.

What about the future? The trend is set to continue. Judging from the current state of the draft standards, AI for Health (AIH) will receive technologies from AIF and TFA, while Autonomous User Architecture (AUA) and Live Theatrical Performance (LTP) will both receive technologies from AIF, CAE, MMC, OSD, PAF, and TFA.
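Reading the dependency table by columns amounts to inverting a "receives from" relation into a "provides to" relation. As an illustration, the Python sketch that follows applies this inversion to the draft-standard relations listed in the previous paragraph; the dictionary simply transcribes that paragraph, and the function and variable names are hypothetical.

```python
# "Receiver -> providers" relations for the draft standards, transcribed
# from the paragraph above.
RECEIVES_FROM = {
    "AIH": {"AIF", "TFA"},
    "AUA": {"AIF", "CAE", "MMC", "OSD", "PAF", "TFA"},
    "LTP": {"AIF", "CAE", "MMC", "OSD", "PAF", "TFA"},
}


def provides_to(receives_from: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert a 'receiver -> providers' map into 'provider -> receivers'.

    This holds the same information as reading the table by columns
    instead of by rows.
    """
    result: dict[str, set[str]] = {}
    for receiver, providers in receives_from.items():
        for provider in providers:
            result.setdefault(provider, set()).add(receiver)
    return result


if __name__ == "__main__":
    for provider, receivers in sorted(provides_to(RECEIVES_FROM).items()):
        print(provider, len(receivers), sorted(receivers))
```

Running it shows that AIF and TFA would feed all three projects, while CAE, MMC, OSD, and PAF would each feed two (AUA and LTP).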

Informing readers about the Autonomous User Architecture project

MPAI has been disseminating information in several ways about the Pursuing Goals in metaverse – Autonomous User Architecture (PGM-AUA) standard project, whose Call for Technologies was approved by the 61st General Assembly. Responses shall reach the MPAI Secretariat by 2026/01/21. The first initiative was the online presentation of the project held on 17 November; the video recording and the PowerPoint slides presented are available online.

A first post, "A new MPAI standard project for Autonomous Users in a metaverse", presented the scope of the Autonomous User Architecture (PGM-AUA) standard project: the specification of the ten components of an autonomous agent (A-User) that is located in a metaverse and interacts in words and deeds with a metaverse User. This User can be another A-User or an H-User, i.e., an avatar driven by a human.

This first post has so far been followed by five more posts on the MPAI website, each introducing an AI Module of the architecture adopted in the Tentative Technical Specification attached to the Call for Technologies:

A-User Control: The Autonomous Agent’s Brain: A-User Control decides what the A-User should do, which AIM should do it, and how it should do it – all while respecting the Rights granted to the A-User and the Rules defined by the M-Instance.

Context Capture: The A-User's First Glimpse of the World: Context Capture is the A-User's sensory front-end, the AIM that opens up perception by scanning the environment and assembling a snapshot of what is out there in the metaverse.

Audio Spatial Reasoning: The Sound-Aware Interpreter: Audio Spatial Reasoning is the A-User’s acoustic intelligence module – the one that listens, localises, and interprets sound as data having a spatially anchored meaning.

Visual Spatial Reasoning: The Vision-Aware Interpreter: Visual Spatial Reasoning is the module embedded in the "brain" of the Autonomous User that makes sense of what the Autonomous User sees, understanding objects' geometry, relationships, and salience.

Prompt Creation: Where Words Meet Context: Prompt Creation is the storyteller and translator in the Autonomous User's "brain". It takes raw sensory input (the audio and visual spatial data of the Context and the Entity State) and turns it into a well-formed prompt that Basic Knowledge can respond to.
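To make the division of labour among these AIMs more concrete, here is a deliberately simplified Python sketch of the data flow the posts describe: Context Capture builds a snapshot, the two spatial reasoning AIMs interpret it, Prompt Creation turns the result and the Entity State into a prompt for Basic Knowledge, and A-User Control starts the chain only when the action respects the A-User's Rights and the M-Instance Rules. All class names, function names, fields, and the string-based data are hypothetical; they are not the data formats or interfaces of the Tentative Technical Specification, only some of the ten components appear, and Basic Knowledge itself is not modelled.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Context:
    """Hypothetical snapshot produced by Context Capture."""
    sounds: List[str]   # e.g. "footsteps, behind on the left"
    objects: List[str]  # e.g. "door, 3 m ahead"
    entity_state: str   # simplified state of the User being addressed


def context_capture(scene: dict) -> Context:
    # Sensory front-end: assemble a snapshot of the environment.
    return Context(
        sounds=list(scene.get("sounds", [])),
        objects=list(scene.get("objects", [])),
        entity_state=scene.get("entity_state", "unknown"),
    )


def audio_spatial_reasoning(ctx: Context) -> str:
    # Interpret sounds as spatially anchored data.
    return "Sounds: " + ("; ".join(ctx.sounds) or "none")


def visual_spatial_reasoning(ctx: Context) -> str:
    # Interpret what is seen: objects and their placement.
    return "Objects: " + ("; ".join(ctx.objects) or "none")


def prompt_creation(ctx: Context, audio: str, visual: str) -> str:
    # Combine spatial descriptions and Entity State into one prompt.
    return (f"{audio}. {visual}. User state: {ctx.entity_state}. "
            "What should the A-User say or do next?")


def a_user_control(scene: dict, action: str, rights: Set[str],
                   forbidden_by_rules: Set[str]) -> Optional[str]:
    """Run the AIM chain only for an action the A-User has the Right to
    perform and the M-Instance Rules do not forbid; else return None."""
    if action not in rights or action in forbidden_by_rules:
        return None
    ctx = context_capture(scene)
    return prompt_creation(ctx, audio_spatial_reasoning(ctx),
                           visual_spatial_reasoning(ctx))
```

Keeping the Rights and Rules check in the controlling function mirrors the role the posts assign to A-User Control: the other modules only perceive and describe, while the decision to act stays in one place.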

Meetings in the December 2025 cycle