Foreword

This document, issued by Moving Picture, Audio, and Data Coding by Artificial Intelligence (MPAI), collects Use Cases and Functional Requirements relevant to Technical Specification: Context-based Audio Enhancement (MPAI-CAE) – Six Degrees of Freedom Audio (CAE-6DF) that MPAI intends to develop. This document is part of the set of documents comprising CAE-6DF Call for Technologies [4], CAE-6DF Framework Licence [5], and CAE-6DF Template for Responses [6].

MPAI is an international non-profit organisation having the mission to develop standards for Artificial Intelligence (AI)-enabled data coding and technologies facilitating integration of data coding components into Information and Communication Technology (ICT) systems [1]. The MPAI Patent Policy [2] guides the accomplishment of the mission.

1 Introduction

Established in September 2020, MPAI has developed eleven Technical Specifications relevant to its mission, covering areas such as the execution environment of multi-component AI applications, portable avatar format, object and scene description, neural network watermarking, context-based audio enhancement, multimodal human-machine conversation and communication, company performance prediction, metaverse, and governance of the MPAI ecosystem. Five Technical Specifications have been adopted by IEEE without modification and more are in the pipeline. Several other standard projects – such as AI for Health, online gaming, and XR Venues – are under way and are expected to deliver specifications in the next few months.

MPAI specifications are the result of a process whose main steps are:

- Development of functional requirements in an open environment.

- Adoption of “commercial requirements” (Framework Licence) by MPAI principal members, setting the main elements of the future licence to be issued by standard essential patent holders.

- Publication of a Call for Technologies referring to the two sets of requirements and inviting the submission of contributions by parties who agree to license their technologies according to the Framework Licence, if their technologies are accepted as part of the target Technical Specification.

This document contains the Use Cases and Functional Requirements for the planned Technical Specification: Context-based Audio Enhancement (MPAI-CAE) – Six Degrees of Freedom Audio (in the following called CAE-6DF).

2 Scope of CAE-6DF Use Cases and Functional Requirements

MPAI has developed Technical Specification: Context-based Audio Enhancement (MPAI-CAE) V2.1 specifying the Audio Scene Descriptors enabling the description of the Audio Scene and the identification of the Audio Objects.

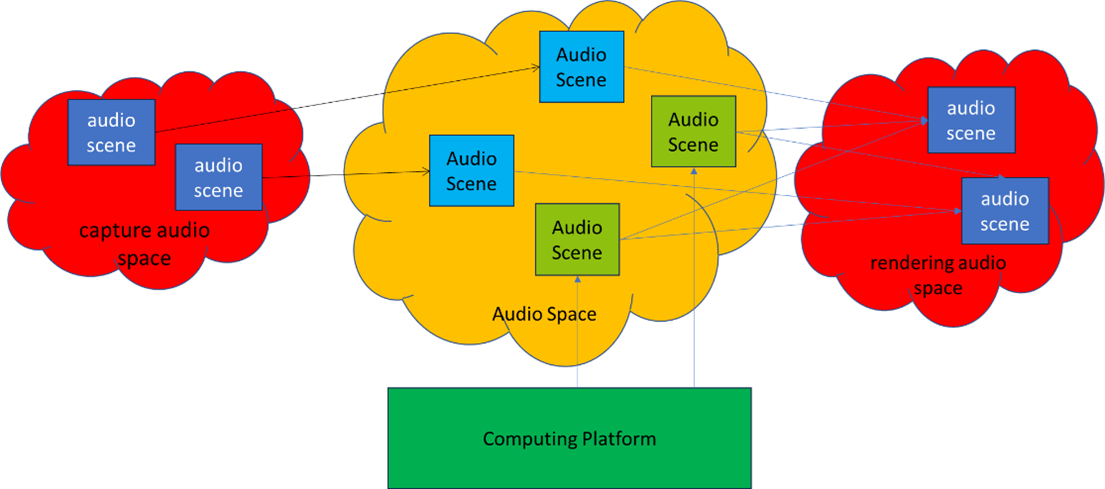

This document collects Use Cases and Functional Requirements of Audio Spaces including Audio Scenes composed of digital representations of acoustical scenes and other synthetic Audio Objects that can be rendered in a perceptually veridical fashion at arbitrary user-selected points of the audio scene. This is depicted in Figure 1 (words in lowercase refer to the real space, words in uppercase to the Virtual Space).

Figure 1 – real spaces and Virtual Spaces in CAE-6DF

3 Use Cases

Use Case 1 – Immersive Concert Experience (Music plus Video)

User is sitting at home listening to a concert rendered in the space of his/her living room. User can go to a point in his/her living room area and have the audio experience of a human in the equivalent position in the concert hall. The user will be able to synchronise spatially and temporally to 360° Video perspectives.

Use Case 2 – Immersive Radio Drama (Speech plus Foley/Effects)

User is sitting at home listening to an immersive radio drama rendered in the space of his/her living room. User can physically walk or be virtually teleported to a position in his/her living room to get closer to voice actors/actresses in the drama, experiencing the same play from a different auditory perspective.

Use Case 3 – Virtual Lecture (Audio plus Video)

User is sitting at home and using an immersive audiovisual display while:

- Watching 360° videos captured at different points in a lecture theatre.

- Listening to a lecturer teaching.

User can physically walk or be virtually teleported to a position in his/her living room to experience the same lecture from another fixed perspective that corresponds to the position where the 360° video is captured. The user will be able to synchronise spatially and temporally to 360° Video perspectives.

Use Case 4 – Immersive Opera/Ballet/Dance/Theatre experience (Music, Drama plus 360° Video/6DoF Visual)

User is sitting at home and using an immersive audiovisual display while:

- Watching 360° videos, 6DoF Visual of a captured scene (for example, obtained using dynamic Neural Radiance Fields, NeRF, or dynamic Gaussian splatting), or a combination of both, including the singers/actors within the scene, and

- Listening to immersive opera or theatre recorded during an actual performance, in the space of his/her living room.

User can move (i.e., walk or be virtually teleported) within his/her living room to experience the same scene from another arbitrary perspective, for example at the orchestra pit, sitting in a seat at the stalls, or among the singers/actors.

4 Functional Requirements

- The Functional Requirements apply to the Audio experience and to the impact of visual conditions on the Audio experience. For instance:

  - Audio-Visual Contract, i.e., alignment of audio scenes with visual scenes.

  - Effects of locomotion on human audio-visual perception.

  - Orientation response, i.e., turning toward a sound source of interest.

  - Distance perception such that visual and auditory modalities affect each other.

- One or more of the following three content profiles should be addressed:

  - Scene-based, i.e., the captured audio scene, for example Ambisonics, is accurately reconstructed so that the Audio Scene provides a high degree of correspondence to the acoustic ambient characteristics of the captured audio scene.

  - Object-based, i.e., the Audio Scene comprises Audio Objects and associated metadata to allow synthesising a perceptually veridical, but not necessarily physically accurate, representation of the captured audio scene.

  - Mixed, i.e., a combination of scene-based and object-based profiles where Audio Objects can be overlaid or mixed with scene-based content.

- One or both of the following rendering modalities should be addressed:

  - Loudspeaker-based, i.e., the content is rendered through at least two loudspeakers.

  - Headphone-based, i.e., the content is rendered through headphones.

- If the audio content is rendered through loudspeakers, the rendering space should have the following characteristics:

  - Shape and dimensions:

    - Not larger than the captured space.

  - Acoustic ambient characteristics:

    - Early decay time (EDT) lower than that of the captured space.

    - Frequency mode density lower than that of the captured space.

    - Echo density lower than that of the captured space.

    - Reverberation time (T60) lower than that of the captured space.

    - Energy decay curve characteristics the same as or lower than those of the captured space.

    - Background noise less than 50 dB(A) SPL.

- If the audio content is rendered through headphones that can successfully block the audibility of the ambient acoustical characteristics of the rendering space, the rendering space should have the following characteristics:

  - Shape and dimensions:

    - Not larger than the captured space.

  - Acoustic ambient characteristics: No constraints on the acoustic ambient characteristics listed above for loudspeaker-based rendering.

- The User's movement in the rendering space may be the result of actual or virtual locomotion or orientation:

  - Actual locomotion/orientation is tracked by sensors.

  - Virtual locomotion/orientation is actuated by controlling devices.

- The maximum response latency of the audio system to user movement should be 20 ms or less; however, some applications may have a higher latency.
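
The rendering-space and latency requirements above can be read as a simple checklist. The following is a minimal sketch of such a check, not part of the requirements themselves. The `SpaceAcoustics` container, its field names and units, and the two helper functions are hypothetical. Room volume is used as a stand-in for "shape and dimensions", which is an assumption, and the comparison of energy decay curve characteristics is left out because the requirements do not say how it is to be measured.

```python
from dataclasses import dataclass

MAX_BACKGROUND_NOISE_DBA = 50.0  # requirement: less than 50 dB(A) SPL
MAX_LATENCY_MS = 20.0            # requirement: 20 ms or less

# Measures that must be strictly lower in the rendering space than in the
# captured space (loudspeaker-based rendering only).
LOWER_THAN_CAPTURED = ("edt_s", "mode_density", "echo_density", "t60_s")


@dataclass(frozen=True)
class SpaceAcoustics:
    """Hypothetical summary of a space; field names and units are assumptions."""
    volume_m3: float             # assumed stand-in for "shape and dimensions"
    edt_s: float                 # early decay time
    mode_density: float          # frequency mode density
    echo_density: float          # reflections per unit time
    t60_s: float                 # reverberation time
    background_noise_dba: float  # background noise level


def rendering_space_violations(rendering, captured, modality):
    """Return the list of requirements that the rendering space fails to meet."""
    if modality not in ("loudspeaker", "headphone"):
        raise ValueError(f"unknown modality: {modality!r}")
    problems = []
    # The size constraint applies to both rendering modalities.
    if rendering.volume_m3 > captured.volume_m3:
        problems.append("rendering space is larger than the captured space")
    # The acoustic constraints apply to loudspeaker-based rendering only.
    if modality == "loudspeaker":
        for name in LOWER_THAN_CAPTURED:
            if getattr(rendering, name) >= getattr(captured, name):
                problems.append(f"{name} is not lower than in the captured space")
        if rendering.background_noise_dba >= MAX_BACKGROUND_NOISE_DBA:
            problems.append("background noise is not below 50 dB(A) SPL")
    return problems


def latency_ok(latency_ms):
    """True if the response latency meets the 20 ms target."""
    return latency_ms <= MAX_LATENCY_MS


# Illustrative values only; they are not taken from any measurement.
hall = SpaceAcoustics(12000.0, 1.8, 40.0, 900.0, 2.0, 30.0)
living_room = SpaceAcoustics(60.0, 0.3, 5.0, 200.0, 0.4, 35.0)
print(rendering_space_violations(living_room, hall, "loudspeaker"))
print(latency_ok(15.0))
```

Returning a list of violations rather than a single boolean keeps the check useful for diagnostics, since a living room can fail several constraints at once.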

5 Definitions

Table 1 gives Terms and Definitions for CAE-6DF.

Note that a capitalised Term not defined in Table 1 denotes the digital representation of the corresponding non-capitalised term used for the real space.

Table 1 – Terms and Definitions

| Term | Definition |
|---|---|
| 360° Video | Digital representation of video allowing a user to change their 360° Video experience by rotating the Point of View of their Digitised Human. |
| 6DoF | Six-degree-of-freedom Audio, Visual, or Audio-Visual. |
| – Audio | An Audio Environment where a Digital Human can walk and rotate their Head and experience the environment as if it were a real environment. |
| – Visual | A Visual Environment where a Digital Human can walk and rotate their Body and experience the environment as if it were a real environment. |
| Ambisonics | Sound field recording and representation based on decomposition of the sound field into spherical harmonic components. |
| Boundary | A surface that bounds the volume of an enclosure in the real or Virtual space. |
| Captured Environment | The Environment where a Scene is digitally represented. |
| Digital Human | The digital representation of a human or a Virtual Human. |
| Early Decay Time | The time it takes for the sound pressure level of a source to decay by 10 dB after the sound source has been turned off. |
| Echo Density | The number of reflections (or echoes) per unit time, as calculated from a Room Impulse Response. |
| Energy Decay Curve | The curve describing the decay of sound energy over time after an impulsive sound source is turned off. |
| Environment | |
| – Audio | A Virtual Environment that can be rendered with audio attributes. |
| – Virtual | A computing platform-generated domain populated by Objects that can be rendered with spatial attributes. |
| – Visual | A Virtual Environment that can be rendered with visual attributes. |
| Foley | Reproduction of quotidian sounds that are added to audiovisual media to enhance the audio experience. |
| Frequency Mode Density | The number of resonant modes that occur within a given frequency range in an acoustic space. |
| Higher Order Ambisonics (HOA) | Second or higher order Ambisonics. |
| Human | A human being in a real space. |
| – Digital | A Digitised or a Virtual Human. |
| – Digitised | An Object that has the appearance of a specific human when rendered. |
| – Virtual | An Object created by a computer that has a human appearance when rendered but is not a Digitised Human. |
| Neural Radiance Field (NeRF) | A Radiance Field reconstructed by using a neural network applied to a partial set of two-dimensional images. |
| Object | A digital representation of an object or a computing platform-generated Object. |
| Point of View | The Position and Orientation of a Digital Human experiencing a Scene. |
| Radiance | The measure of the amount of light or electromagnetic radiation traveling through a particular area in a specific direction. |
| Radiance Field | The digital representation of the distribution of radiance in a three-dimensional space. |
| Reverberation Time (T60) | The time it takes for the sound pressure level of a source to decay by 60 dB after an impulsive sound source has been turned off. |
| Room Impulse Response (RIR) | Sound pressure signal obtained in a room in response to an impulsive excitation. |
| Rendering Space | The physical space where content is experienced. |
| Scene | A composition of spatially arranged Objects and Scenes in a Virtual Environment. |
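
The definitions of Energy Decay Curve, Early Decay Time and Reverberation Time (T60) in Table 1 all derive from a Room Impulse Response. The sketch below illustrates one common way of relating them, Schroeder backward integration; Table 1 does not prescribe this method. The function names are hypothetical, and the impulse response is an idealised, noise-free exponential decay generated only for illustration. Decay times are read directly off the curve following the wording of Table 1, whereas practical measurements usually fit a line to part of the curve because the noise floor hides the full 60 dB range.

```python
import math


def synthetic_rir(t60_s, sample_rate_hz, duration_s):
    """Idealised RIR envelope whose energy falls by 60 dB after t60_s seconds."""
    k = 3.0 * math.log(10.0) / t60_s  # amplitude decay rate in 1/s
    n_samples = int(duration_s * sample_rate_hz)
    return [math.exp(-k * n / sample_rate_hz) for n in range(n_samples)]


def energy_decay_curve_db(rir):
    """Schroeder backward integration, normalised to 0 dB at the first sample."""
    edc = []
    running = 0.0
    for sample in reversed(rir):
        running += sample * sample  # energy remaining from this sample onward
        edc.append(running)
    edc.reverse()
    total = edc[0] if edc else 0.0
    if total <= 0.0:
        raise ValueError("impulse response contains no energy")
    return [10.0 * math.log10(v / total) if v > 0.0 else float("-inf")
            for v in edc]


def decay_time(edc_db, sample_rate_hz, drop_db):
    """First time at which the curve has fallen by drop_db, or None if it never does."""
    for n, level in enumerate(edc_db):
        if level <= -drop_db:
            return n / sample_rate_hz
    return None


sample_rate_hz = 8000
rir = synthetic_rir(t60_s=0.5, sample_rate_hz=sample_rate_hz, duration_s=1.0)
edc_db = energy_decay_curve_db(rir)
t60 = decay_time(edc_db, sample_rate_hz, 60.0)  # Reverberation Time (T60)
edt = decay_time(edc_db, sample_rate_hz, 10.0)  # Early Decay Time as worded in Table 1
print(f"T60 = {t60:.3f} s, 10 dB decay time = {edt:.3f} s")
```

For a purely exponential decay such as this one, the 10 dB decay time is one sixth of T60; in real rooms the early part of the decay often deviates from the late part, which is why both measures appear in the requirements.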

6 References

[1] MPAI Statutes

[2] MPAI Patent Policy

[3] MPAI Technical Specifications

[4] MPAI; Call for Technologies: Context-based Audio Enhancement (MPAI-CAE) – Six Degrees of Freedom Audio (CAE-6DF); N1763

[5] MPAI; Framework Licence: Use Cases and Functional Requirements: Context-based Audio Enhancement (MPAI-CAE) – Six Degrees of Freedom Audio (CAE-6DF); N1765

[6] MPAI; Template for Responses: Use Cases and Functional Requirements: Context-based Audio Enhancement (MPAI-CAE) – Six Degrees of Freedom Audio (CAE-6DF); N1766