
1 Functions

Speech carries information not only about its lexical content, but also about other aspects of the speaker, including age, gender, identity, and emotional state. Speech synthesis is evolving to support these aspects.

In many use cases, it is useful to add emotional force, possibly in varying degrees of a particular emotion, to speech that would otherwise be neutral or emotionless. For instance, in a human-machine dialogue, messages conveyed by the machine can be more effective if they carry emotions appropriately related to the emotions detected in the human speaker.

Emotion-Enhanced Speech (EES):

- Enables a user to indicate a model utterance or an Emotion to obtain an emotionally charged version of a given utterance.

- Converts an individual emotionless speech segment into a segment that carries a specified emotion. Both input and output speech segments are contained in files. The desired emotion is either expressed as a tag from a standard list of emotions or derived by extracting features from a model utterance.

CAE-EES implementations can be used to create virtual agents communicating as naturally as possible, and thus improve the quality of human-machine interaction by bringing it closer to human-human interchange.

2 Reference Architecture

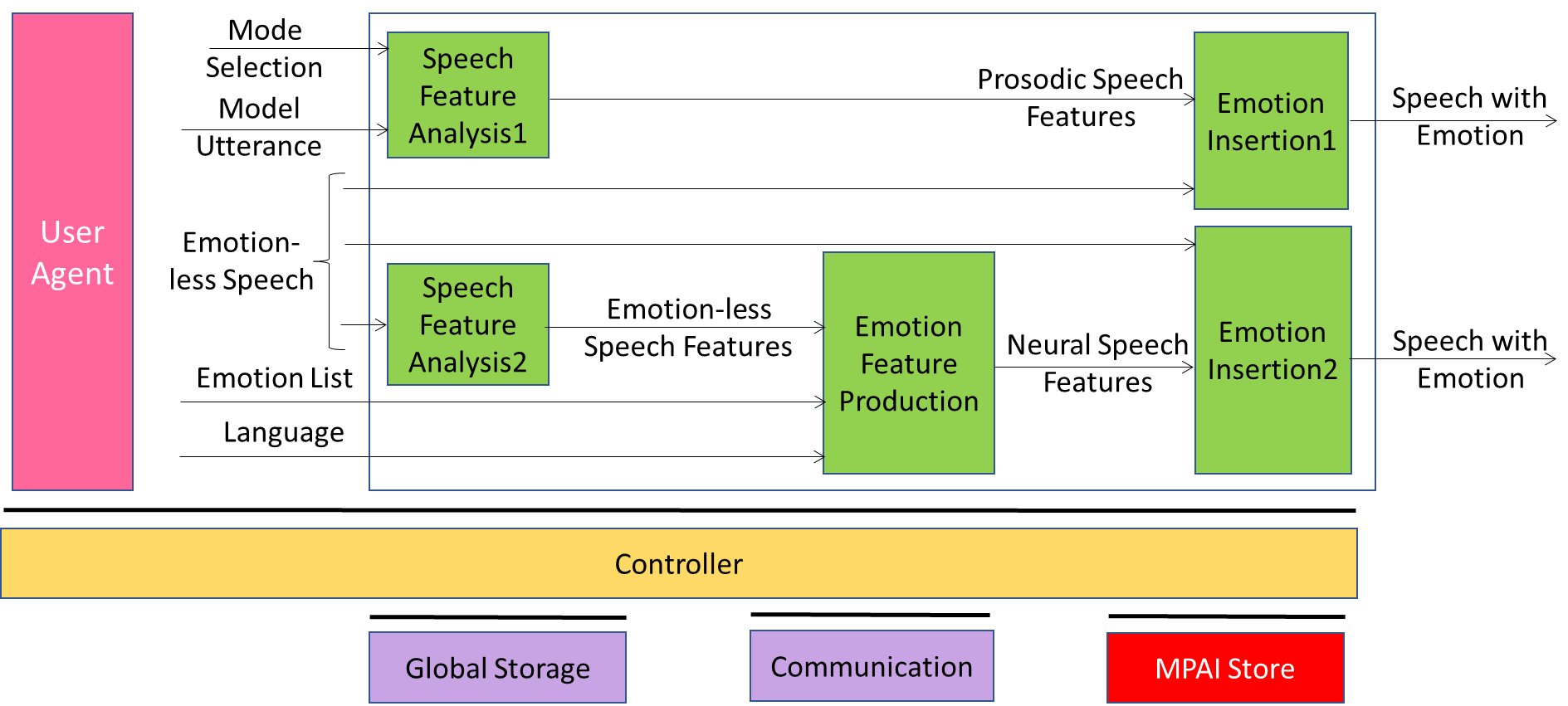

The Emotion-Enhanced Speech Reference Model depicted in Figure 2 supports two Modes or pathways enabling addition of emotional charge to an emotionless or neutral input utterance (Emotion-less Speech).

Figure 2 – Emotion-Enhanced Speech Reference Model

3 I/O data of AI Workflow

Table 2 gives the input and output data of Emotion-Enhanced Speech.

Table 2 – I/O data of Emotion-Enhanced Speech

| Input data | Comments |
| --- | --- |
| Emotionless Speech | An Audio File containing speech without music and other sounds, and in which little or no identifiable emotion is perceptible by native listeners. |
| Emotion | A Data Type representing the internal status of a human or avatar resulting from their interaction with the context or subsets of it, such as “Angry” and “Sad”. |
| Model Utterance | An Audio Segment used as a model or demonstration of the Emotion to be added to Emotionless Speech in order to produce Speech with Emotion. |

| Output data | Comments |
| --- | --- |
| Speech with Emotion | An Audio File containing speech with emotional features. |
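The inputs of Table 2 can be illustrated with a minimal Python sketch. The class name `EESRequest`, its field names, and the rule that exactly one of Emotion or Model Utterance is supplied are assumptions made for illustration; the specification text above does not define them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EESRequest:
    """Hypothetical container for the Table 2 inputs (not defined by the source)."""

    emotionless_speech: str                # path to the Emotionless Speech audio file
    emotion: Optional[str] = None          # emotion tag, e.g. "Angry" or "Sad"
    model_utterance: Optional[str] = None  # path to a Model Utterance audio segment

    def pathway(self) -> int:
        """Return 1 for the Model Utterance pathway, 2 for the Emotion tag pathway."""
        has_model = self.model_utterance is not None
        has_emotion = self.emotion is not None
        # Assumption: exactly one of the two emotion sources must be supplied.
        if has_model == has_emotion:
            raise ValueError("supply exactly one of 'emotion' or 'model_utterance'")
        return 1 if has_model else 2
```

For example, `EESRequest("in.wav", emotion="Sad").pathway()` selects the pathway that ends in Emotion Insertion 2, while passing `model_utterance="model.wav"` instead selects the pathway that ends in Emotion Insertion 1.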

4 Functions of AI Modules

The AI Modules of Figure 2 perform the functions described in Table 3.

Table 3 – AI Modules of Emotion-Enhanced Speech

| AIM | Function |
| --- | --- |
| Speech Feature Analysis 1 | Extracts Prosodic Speech Features of a model emotional utterance and transfers them to Emotion Insertion 1. |
| Speech Feature Analysis 2 | Extracts Emotionless Speech Features of an emotionless input utterance and passes them to Emotion Feature Production. |
| Emotion Feature Production | Receives the Emotionless Speech Features produced by Speech Feature Analysis 2 plus a list of Emotions to be added, and produces Neural Speech Features. (If the Degree of an Emotion is not specified, the Medium value is used.) |
| Emotion Insertion 1 | Integrates the (emotional) Prosodic Speech Features with those of the Emotionless Speech input, yielding and delivering an emotionally modified utterance. |
| Emotion Insertion 2 | Integrates the (emotional) Neural Speech Features with those of the Emotionless Speech input, yielding and delivering an emotionally modified utterance. |
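The default rule stated for Emotion Feature Production (an unspecified Degree falls back to Medium) might be applied as in the following sketch. The function name `normalize_emotions` and the representation of the Emotion list as strings or `(name, degree)` pairs are assumptions; only the "Medium" default comes from the text, and any other degree value such as "High" is illustrative.

```python
from typing import Iterable, List, Optional, Tuple, Union

DEFAULT_DEGREE = "Medium"  # the only Degree value named in the text above

# Assumed input shape: a bare emotion name, or a (name, degree) pair.
EmotionItem = Union[str, Tuple[str, Optional[str]]]


def normalize_emotions(emotions: Iterable[EmotionItem]) -> List[Tuple[str, str]]:
    """Return (name, degree) pairs, filling in the default Degree where missing."""
    normalized = []
    for item in emotions:
        if isinstance(item, str):
            name, degree = item, None
        else:
            name, degree = item
        # A missing or None degree falls back to the default.
        normalized.append((name, degree or DEFAULT_DEGREE))
    return normalized
```

Calling `normalize_emotions(["Angry", ("Sad", "High")])` leaves the explicit degree untouched and assigns the default to "Angry".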

5 I/O Data of AI Modules

Table 4 – CAE-EES AIMs and their data

| AIM | Input Data | Output Data |
| --- | --- | --- |
| Speech Feature Analysis 1 | Model Utterance | Prosodic Speech Descriptors |
| Speech Feature Analysis 2 | Emotionless Speech | Emotionless Speech Descriptors |
| Emotion Feature Production | Emotionless Speech Descriptors, Emotion List, Language Identifier | Neural Speech Descriptors |
| Emotion Insertion 1 | Emotionless Speech, Prosodic Speech Descriptors | Speech with Emotion |
| Emotion Insertion 2 | Emotionless Speech, Neural Speech Descriptors | Speech with Emotion |
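The wiring implied by Table 4 can be checked with a small sketch that, given the data available at the workflow inputs, works out which AIMs must run and in which order to obtain Speech with Emotion. The dictionary `AIMS` transcribes Table 4; the function names `resolve` and `plan` and the backward-chaining approach are illustrative assumptions, not part of the specification.

```python
from typing import Dict, List, Set, Tuple

# AIM name -> (input data, output data), transcribed from Table 4.
AIMS: Dict[str, Tuple[List[str], List[str]]] = {
    "Speech Feature Analysis 1": (["Model Utterance"], ["Prosodic Speech Descriptors"]),
    "Speech Feature Analysis 2": (["Emotionless Speech"], ["Emotionless Speech Descriptors"]),
    "Emotion Feature Production": (
        ["Emotionless Speech Descriptors", "Emotion List", "Language Identifier"],
        ["Neural Speech Descriptors"],
    ),
    "Emotion Insertion 1": (
        ["Emotionless Speech", "Prosodic Speech Descriptors"],
        ["Speech with Emotion"],
    ),
    "Emotion Insertion 2": (
        ["Emotionless Speech", "Neural Speech Descriptors"],
        ["Speech with Emotion"],
    ),
}


def resolve(item: str, available: Set[str], order: List[str]) -> bool:
    """Try to derive `item`; on success, append the AIMs needed to `order`."""
    if item in available:
        return True
    for name, (inputs, outputs) in AIMS.items():
        if item not in outputs:
            continue
        trial = list(order)  # work on a copy so a failed attempt leaves no trace
        if all(resolve(i, available, trial) for i in inputs):
            if name not in trial:
                trial.append(name)
            order[:] = trial
            return True
    return False


def plan(available: Set[str], target: str = "Speech with Emotion") -> List[str]:
    """Return the AIMs to run, in order, or an empty list if the target is unreachable."""
    order: List[str] = []
    return order if resolve(target, available, order) else []
```

With `{"Emotionless Speech", "Model Utterance"}` as input, `plan` selects Speech Feature Analysis 1 followed by Emotion Insertion 1. With `{"Emotionless Speech", "Emotion List", "Language Identifier"}`, it selects Speech Feature Analysis 2, Emotion Feature Production, and Emotion Insertion 2.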

6 AIW, AIMs, and JSON Metadata

Table 5 – AIW, AIMs, and JSON Metadata

| AIW | AIMs | Name | JSON |
| --- | --- | --- | --- |
| CAE-EES | | Emotion Enhanced Speech | File |
| | CAE-SF1 | Speech Feature Analysis 1 | File |
| | CAE-SF2 | Speech Feature Analysis 2 | File |
| | CAE-EFP | Emotion Feature Production | File |
| | CAE-EI1 | Emotion Insertion 1 | File |
| | CAE-EI2 | Emotion Insertion 2 | File |