1 Functions of Connected Autonomous Vehicle

A Connected Autonomous Vehicle is defined as a physical system that:

- Converses with humans by understanding their utterances, e.g., a request to be taken to a destination.

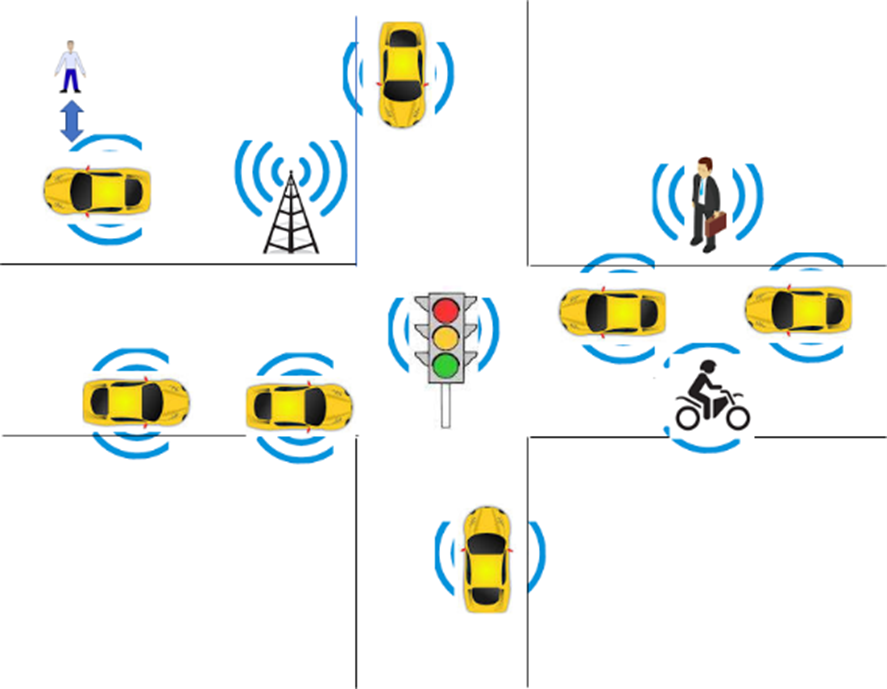

- Senses the environment in which it is located or through which it travels, like the one depicted in Figure 2.

- Plans a Route enabling the CAV to reach the requested destination.

- Informs passengers and converses with them during the travel.

- Autonomously reaches the destination by:

  - Moving in the physical environment.

  - Building Digital Representations of the Environment.

  - Exchanging elements of such Representations with other CAVs and CAV-aware entities.

  - Making decisions about how to execute the Route.

  - Actuating the CAV motion to implement the decisions.

  - Reconsidering motion decisions based on feedback from the actuators.

Figure 2 – An environment of CAV operation

2 Reference Model of Connected Autonomous Vehicle

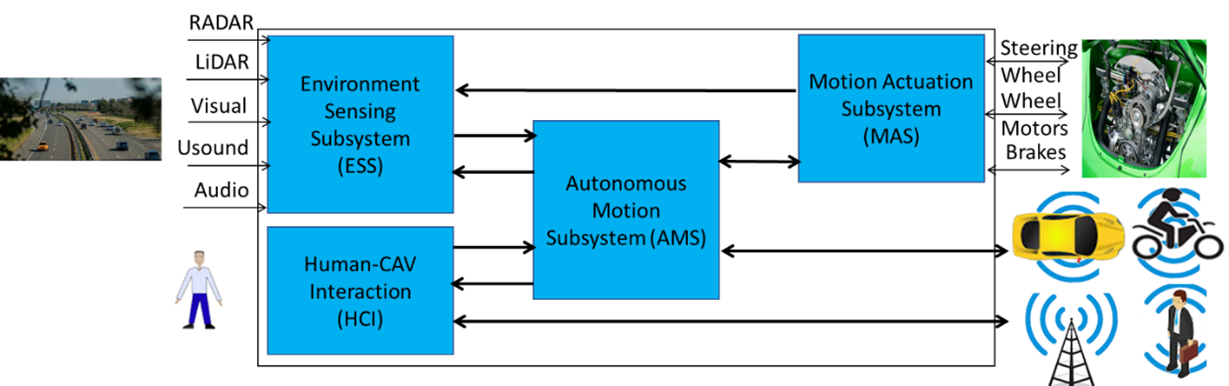

The MPAI-CAV Reference Model is composed of four Subsystems:

- Human-CAV Interaction (HCI).

- Environment Sensing Subsystem (ESS).

- Autonomous Motion Subsystem (AMS).

- Motion Actuation Subsystem (MAS).

The Subsystems are represented in Figure 3 where the arrows refer to the exchange of information between Subsystems and between a Subsystem and other CAVs or CAV-aware systems. The sensing of the Environment and the Motion Actuation are represented by icons.

Figure 3 – The MPAI-CAV Subsystems

The ESS uses the CAV Sensors to develop a representation of the external environment called Basic Environment Representation (BER). The AMS receives the BER and may share part of it with CAVs in range. By receiving similar information from CAVs in range, the AMS can refine its BER and create the Full Environment Representation (FER).
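
The refinement of a BER into a FER can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `EnvObject` and `EnvironmentRepresentation` types, the `confidence` field, and the rule of keeping the highest-confidence entry per object are hypothetical and are not defined by the specification.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class EnvObject:
    """Hypothetical element of an environment representation."""
    object_id: str
    position: Tuple[float, float]  # metres, in an assumed shared frame
    confidence: float              # assumed quality score in [0.0, 1.0]


@dataclass
class EnvironmentRepresentation:
    """Stand-in for both BER and FER: objects keyed by identifier."""
    objects: Dict[str, EnvObject] = field(default_factory=dict)


def build_fer(ego_ber: EnvironmentRepresentation,
              remote_bers: List[EnvironmentRepresentation]) -> EnvironmentRepresentation:
    """Refine the ego BER with BER elements received from CAVs in range.

    Assumed merge rule: for each object id, keep the entry with the
    highest confidence. The ego BER itself is left unmodified.
    """
    merged = dict(ego_ber.objects)
    for remote in remote_bers:
        for oid, obj in remote.objects.items():
            current = merged.get(oid)
            if current is None or obj.confidence > current.confidence:
                merged[oid] = obj
    return EnvironmentRepresentation(merged)


ego = EnvironmentRepresentation({
    "car-7": EnvObject("car-7", (12.0, 3.5), 0.6),
})
remote = EnvironmentRepresentation({
    "car-7": EnvObject("car-7", (12.2, 3.4), 0.9),
    "ped-2": EnvObject("ped-2", (30.0, -1.0), 0.8),
})
fer = build_fer(ego, [remote])
print(sorted(fer.objects))  # ['car-7', 'ped-2']
```

In this sketch the FER contains an object the ego CAV did not sense (`ped-2`) and a better estimate of one it did (`car-7`), which is the kind of refinement the paragraph above describes.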

The operation of a CAV unfolds according to the following workflow:

| Entity | Step | Action |
|---|---|---|
| Human | 1 | Requests the CAV, via HCI, to take the human to a destination. |
| HCI | 1 | Authenticates humans. |
| | 2 | Interprets the request of humans. |
| | 3 | Issues commands to the AMS. |
| AMS | 1 | Requests ESS to provide the current Pose. |
| ESS | 1 | Computes and sends the BER to AMS. |
| AMS | 1 | Computes and sends Route(s) to HCI. |
| HCI | 1 | Sends travel options to Human. |
| Human | 1 | May integrate/correct their instructions. |
| | 2 | Selects and communicates a Route to HCI. |
| HCI | 1 | Communicates Route selection to AMS. |
| AMS | 1 | Sends the BER to the AMSs of other CAVs. |
| | 2 | Computes the FER. |
| | 3 | Decides best motion to reach the destination. |
| | 4 | Issues appropriate commands to MAS. |
| MAS | 1 | Executes the command via Steering Wheel, Wheel Motors and Brakes. |
| | 2 | Sends response to AMS. |
| Human | 1 | Interacts and holds conversation with other humans on board and the HCI. |
| | 2 | Issues commands to HCI. |
| | 3 | Requests HCI to render the FER. |
| | 4 | Navigates the FER. |
| | 5 | Interacts with humans in other CAVs. |
| HCI | 1 | Communicates with HCIs of other CAVs. |
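
The main message flow of the workflow above, from the trip request to the first actuator response, can be sketched as an ordered trace. This is only an illustrative sketch: the `Message` type, the `trip_trace` and `links` helpers, and the free-text payloads are hypothetical and are not data formats from the specification.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Message:
    sender: str    # "Human", "HCI", "AMS", "ESS", "MAS" or "Remote AMS"
    receiver: str
    payload: str   # free-text placeholder, not a specified data format


def trip_trace(destination: str) -> List[Message]:
    """Messages exchanged for one trip request, in workflow order."""
    return [
        Message("Human", "HCI", f"take me to {destination}"),
        Message("HCI", "AMS", f"command: go to {destination}"),
        Message("AMS", "ESS", "request current Pose"),
        Message("ESS", "AMS", "BER"),
        Message("AMS", "HCI", "Route(s)"),
        Message("HCI", "Human", "travel options"),
        Message("Human", "HCI", "selected Route"),
        Message("HCI", "AMS", "Route selection"),
        Message("AMS", "Remote AMS", "BER"),
        Message("AMS", "MAS", "motion command"),
        Message("MAS", "AMS", "response"),
    ]


def links(trace: List[Message]) -> Set[Tuple[str, str]]:
    """Distinct (sender, receiver) pairs that appear in a trace."""
    return {(m.sender, m.receiver) for m in trace}


trace = trip_trace("the station")
for m in trace:
    print(f"{m.sender:>5} -> {m.receiver:<10} {m.payload}")
```

Listing the distinct links makes one property of the workflow explicit: the human never addresses the AMS directly, so `("Human", "AMS")` is absent from `links(trace)` and every request passes through the HCI.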

3 I/O Data of Connected Autonomous Vehicle

Table 3 gives the input/output data of the Connected Autonomous Vehicle. Note that E and R prefixed to CAV, HCI, and AMS stand for Ego and Remote.

Table 3 – I/O data of Connected Autonomous Vehicle

| Input data | From | Comment |
|---|---|---|
| Input Audio | Environment | For User authentication, command/interaction with HCI, etc. |
| Input LiDAR | Environment, Passenger Cabin | Environment perception and command/interaction with HCI |
| Input RADAR | Environment | Environment perception |
| Input Ultrasound | Environment | Environment perception |
| Input Visual | Environment, Passenger Cabin | Environment perception, User authentication, command/interaction with HCI, etc. |
| Input Text | User | Text complementing/replacing User input |
| Remote-Ego HCI Message | Remote HCI | Remote HCI to Ego HCI message |
| Remote-Ego AMS Message | Remote AMS | Remote AMS to Ego AMS message |
| Global Navigation Satellite System (GNSS) Data | ~1 & 1.5 GHz Radio | Various GNSS Data sources |
| Other Environment Data | Environment | Temperature, Air pressure, Humidity, etc. |
| Steering Wheel Response | Ego CAV | Response of Steering Wheel |
| Wheel Motor Response | Ego CAV | Response of Wheel Motors |
| Brake Response | Ego CAV | Response of Brake |

| Output data | To | Comment |
|---|---|---|
| Output Audio | Cabin Passengers | HCI’s avatar Audio |
| Output Visual | Cabin Passengers | AMS’s avatar Visual |
| Ego-Remote HCI Message | Remote HCI | Ego HCI to Remote HCI |
| Ego-Remote AMS Message | Remote AMS | Ego AMS to Remote AMS |
| Steering Wheel Command | Ego CAV | Action on Steering Wheel |
| Wheel Motor Command | Ego CAV | Action on Wheel Motor |
| Brake Command | Ego CAV | Action on Brake |
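
Each actuator command in Table 3 has a matching response, which is what allows motion decisions to be reconsidered based on feedback. The pairing could be modelled along the following lines. This is a hedged sketch only: the `Command` and `Response` types, the numeric `value` field, the clamping behaviour in `execute`, and the `needs_replanning` check are all hypothetical, since Table 3 names the data items but does not define their contents.

```python
from dataclasses import dataclass
from enum import Enum


class Actuator(Enum):
    STEERING_WHEEL = "Steering Wheel"
    WHEEL_MOTOR = "Wheel Motor"
    BRAKE = "Brake"


@dataclass(frozen=True)
class Command:
    actuator: Actuator
    value: float      # hypothetical setpoint; units are not given in Table 3


@dataclass(frozen=True)
class Response:
    actuator: Actuator
    requested: float
    achieved: float


def execute(command: Command, limit: float = 1.0) -> Response:
    """Toy actuator: clamp the setpoint to [-limit, limit] and report back."""
    achieved = max(-limit, min(limit, command.value))
    return Response(command.actuator, command.value, achieved)


def needs_replanning(response: Response, tolerance: float = 1e-6) -> bool:
    """True when the actuator did not achieve what was requested."""
    return abs(response.requested - response.achieved) > tolerance


resp = execute(Command(Actuator.BRAKE, 1.4))
print(resp.achieved, needs_replanning(resp))  # 1.0 True
```

Here the brake request exceeds the assumed limit, so the response differs from the command and the caller is told to reconsider its decision.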