1 AI Framework

In recent years, Artificial Intelligence (AI) and related technologies have been introduced in a broad range of applications, have started affecting the lives of millions of people, and are expected to do so even more in the future. Just as digital media standards have positively influenced industry and billions of people, AI-based data coding standards are expected to have a similar positive impact. Indeed, research has shown that data coding with AI-based technologies is generally more efficient than with existing technologies for, e.g., compression and feature-based description.

However, some AI technologies may carry inherent risks, e.g., in terms of bias toward some classes of users. Therefore, the need for standardisation is more important and urgent than ever.

The international, unaffiliated, not-for-profit MPAI – Moving Picture, Audio and Data Coding by Artificial Intelligence Standards Developing Organisation has the mission to develop AI-enabled data coding standards. MPAI Application Standards enable the development of AI-based products, applications, and services.

As a rule, MPAI standards include four documents: Technical Specification, Reference Software Specifications, Conformance Testing Specifications, and Performance Assessment Specifications.

The last type of Specification includes standard operating procedures that enable users of MPAI Implementations to make informed decisions about their applicability based on the notion of Performance, defined as a set of attributes characterising a reliable and trustworthy implementation.

In the following, a Term beginning with a small letter has its commonly used meaning. A Term beginning with a capital letter has either the meaning defined in Table 2, if it is specific to this Technical Report, or the meaning defined in Table 16, if it is common to all MPAI Standards.

In general, MPAI Application Standards are defined as aggregations – called AI Workflows (AIW) – of processing elements – called AI Modules (AIM) – executed in an AI Framework (AIF). MPAI defines Interoperability as the ability to replace an AIW or an AIM Implementation with a functionally equivalent Implementation.

MPAI also defines three Interoperability Levels for an AIF that executes an AIW. The AIW and its AIMs may be at one of the following Levels:

Level 1 – Implementer-specific and satisfying the MPAI-AIF Standard.

Level 2 – Specified by an MPAI Application Standard.

Level 3 – Specified by an MPAI Application Standard and certified by a Performance Assessor.

MPAI offers Users access to the promised benefits of AI with a guarantee of increased transparency, trust and reliability as the Interoperability Level of an Implementation moves from 1 to 3. Additional information on Interoperability Levels is provided in [1].
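As an illustration only, the three Levels above could be represented in software as an ordered enumeration. The names `InteroperabilityLevel` and `satisfies` are hypothetical and not part of any MPAI specification, and treating a higher Level as meeting a lower-Level requirement is an assumption made for this sketch.

```python
from enum import IntEnum


class InteroperabilityLevel(IntEnum):
    """Hypothetical encoding of the three Interoperability Levels."""
    IMPLEMENTER_SPECIFIC = 1   # implementer-specific, satisfies the MPAI-AIF Standard
    APPLICATION_STANDARD = 2   # specified by an MPAI Application Standard
    PERFORMANCE_ASSESSED = 3   # Level 2 plus certification by a Performance Assessor


def satisfies(offered: InteroperabilityLevel, required: InteroperabilityLevel) -> bool:
    """Assumption of this sketch: a higher Level meets any lower-Level requirement."""
    return offered >= required
```

A User requiring at least Level 2 could then filter candidate Implementations with `satisfies(candidate_level, InteroperabilityLevel.APPLICATION_STANDARD)`.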

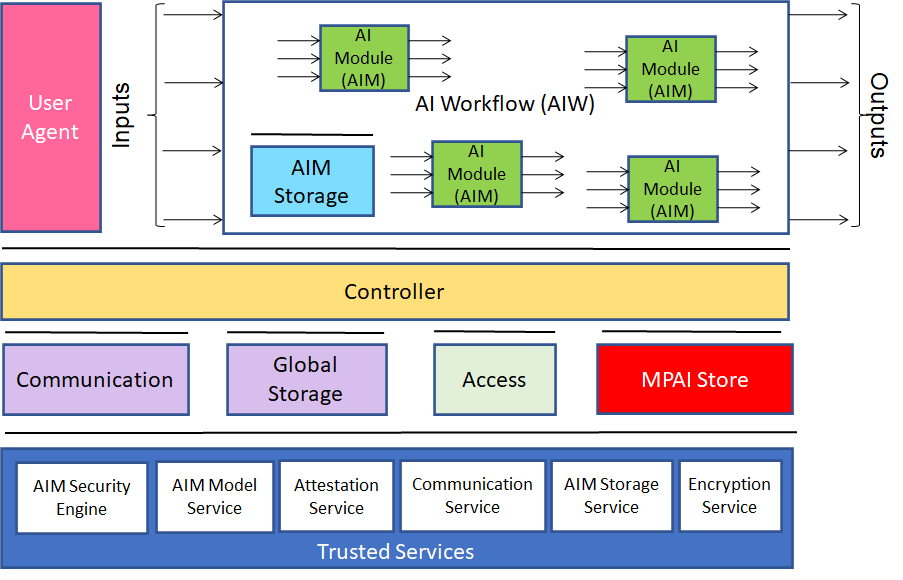

Figure 10 depicts the MPAI-AIF Reference Model under which Implementations of MPAI Application Standards and user-defined MPAI-AIF Conforming applications operate [2].

MPAI Application Standards normatively specify the Syntax and Semantics of the input and output data and the Function of the AIW and the AIMs, and the Connections between and among the AIMs of an AIW.

Figure 10 – The AI Framework (MPAI-AIF) V2 Reference Model

It should be noted that an AIM is defined by its Function and data, but not by its internal architecture, which may be based on AI or data processing, and implemented in software, hardware or hybrid software and hardware technologies.
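The idea that an AIM is characterised by its Function and data, not by its internals, can be illustrated with a minimal sketch. Here an AIM is modelled as any callable that maps named inputs to named outputs, and an AIW as a simple sequence of such callables. This is a simplification: the `AIM` type alias, `run_aiw`, and the two toy AIMs are hypothetical, and a real AIW has an explicit topology of Connections rather than a linear chain.

```python
from typing import Any, Callable, Dict, List

# Hypothetical model: an AIM is known only by the data it consumes and produces.
AIM = Callable[[Dict[str, Any]], Dict[str, Any]]


def run_aiw(aims: List[AIM], data: Dict[str, Any]) -> Dict[str, Any]:
    """Run the AIMs in order; each one adds its outputs to the shared data."""
    for aim in aims:
        data = {**data, **aim(data)}
    return data


def upper_builtin(data: Dict[str, Any]) -> Dict[str, Any]:
    """One implementation of the toy Function 'upper-case the text'."""
    return {"text_out": data["text_in"].upper()}


def upper_table(data: Dict[str, Any]) -> Dict[str, Any]:
    """A functionally equivalent implementation with different internals."""
    table = {c: c.upper() for c in "abcdefghijklmnopqrstuvwxyz"}
    return {"text_out": "".join(table.get(c, c) for c in data["text_in"])}


# Swapping one AIM for the other leaves the AIW's observable behaviour unchanged.
result_a = run_aiw([upper_builtin], {"text_in": "hello"})
result_b = run_aiw([upper_table], {"text_in": "hello"})
```

Because both toy AIMs accept and return the same data, either can replace the other without changes to the rest of the workflow, which is the sense of Interoperability used in this section.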

MPAI Standards are designed to enable a User to obtain, via standard protocols, an Implementation of an AIW and of the set of corresponding AIMs and execute it in an AIF Implementation. The MPAI Store in Figure 10 is the entity from which Implementations are downloaded. MPAI Standards assume that the AIF, AIW, and AIM Implementations may have been developed by independent implementers. A necessary condition for this to be possible is that all AIF, AIW, and AIM Implementations be uniquely identified. MPAI has appointed an ImplementerID Registration Authority (IIDRA) to assign unique ImplementerIDs (IID) to Implementers. (At the time of publication of this Technical Report, the MPAI Store was assigned as the IIDRA.)

A necessary condition to make possible the operations described in the paragraph above is the existence of an ecosystem composed of Conformance Testers, Performance Assessors, the IIDRA and an instance of the MPAI Store. Reference [1] provides an example of such an ecosystem.

2 Personal Status

2.1 General

Personal Status is the set of internal characteristics of a human or a machine engaged in a conversation. Reference [4] identifies three Factors of the internal state:

- Cognitive State is a typically rational result from the interaction of a human/avatar with the Environment (e.g., “Confused”, “Dubious”, “Convinced”).

- Emotion is typically a less rational result from the interaction of a human/avatar with the Environment (e.g., “Angry”, “Sad”, “Determined”).

- Social Attitude is the stance taken by a human/avatar who has an Emotional and a Cognitive State (e.g., “Respectful”, “Confrontational”, “Soothing”).

The Personal Status of a human can be displayed in one of the following Modalities: Text, Speech, Face, or Gesture. More Modalities are possible, e.g., the body itself as in body language, dance, song, etc. The Personal Status may be shown only by one of the four Modalities or by two, three or all four simultaneously.
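One way to picture the structure described above is as a table of Factors per Modality. The sketch below is an assumption about how such data might be held in memory. It is not the data format defined by MPAI, and the class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

FACTORS = ("Emotion", "Cognitive State", "Social Attitude")
MODALITIES = ("Text", "Speech", "Face", "Gesture")


@dataclass
class PersonalStatus:
    """Hypothetical container: values[modality][factor] -> label such as 'Angry'."""
    values: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def set(self, modality: str, factor: str, label: str) -> None:
        if modality not in MODALITIES:
            raise ValueError(f"unknown Modality: {modality}")
        if factor not in FACTORS:
            raise ValueError(f"unknown Factor: {factor}")
        self.values.setdefault(modality, {})[factor] = label

    def modalities_used(self) -> List[str]:
        """Return the Modalities that currently display some Personal Status."""
        return [m for m in MODALITIES if m in self.values]


ps = PersonalStatus()
ps.set("Speech", "Emotion", "Angry")
ps.set("Face", "Cognitive State", "Confused")
```

In this example the Personal Status is shown by two of the four Modalities, Speech and Face, while Text and Gesture carry none.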

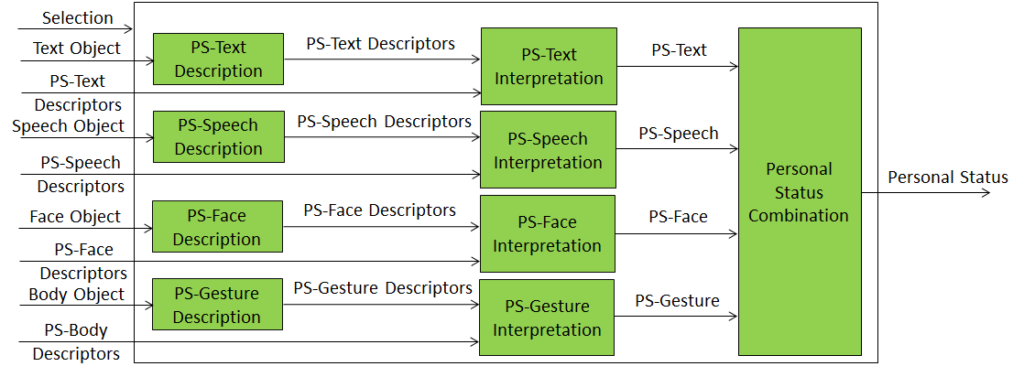

2.2 Personal Status Extraction

Personal Status Extraction (PSE) is a composite AIM that analyses the Personal Status conveyed by Text, Speech, Face, and Gesture – of a human or an avatar – and provides an estimate of the Personal Status in three steps:

- Data Capture (e.g., characters and words, a digitised speech segment, the digital video containing the hand of a person, etc.).

- Descriptor Extraction (e.g., pitch and intonation of the speech segment, thumb of the hand raised, the right eye winking, etc.).

- Personal Status Interpretation (i.e., at least one of Emotion, Cognitive State, and Social Attitude).

Figure 11 depicts the Personal Status estimation process:

- Descriptors are extracted from Text, Speech, Face Object, and Body Object. Depending on the value of Selection, Descriptors may instead be provided by an upstream AIM.

- Descriptors are interpreted and the specific indicators of the Personal Status in the Text, Speech, Face, and Gesture Modalities are derived.

- Personal Status is obtained by combining the estimates of different Modalities of the Personal Status.

Figure 11 – Reference Model of Personal Status Extraction

An implementation can combine, e.g., the PS-Gesture Description and PS-Gesture Interpretation AIMs into one AIM, and directly provide PS-Gesture from a Body Object without exposing PS-Gesture Descriptors.
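The estimation process described in this subsection can be sketched as a small pipeline: per-Modality description, per-Modality interpretation, then combination. Everything below is illustrative. The function names, the toy descriptors, and the labels are hypothetical, and the majority vote used for combination is an assumption of this sketch, since the text does not say how the per-Modality estimates are combined.

```python
from collections import Counter
from typing import Any, Callable, Dict

Descriptors = Dict[str, Any]


def estimate_personal_status(
    media: Dict[str, Any],
    upstream_descriptors: Dict[str, Descriptors],
    describe: Dict[str, Callable[[Any], Descriptors]],
    interpret: Dict[str, Callable[[Descriptors], str]],
) -> Dict[str, Any]:
    per_modality: Dict[str, str] = {}
    for modality, interpreter in interpret.items():
        if modality in upstream_descriptors:
            # Selection: Descriptors were already produced by an upstream AIM.
            descriptors = upstream_descriptors[modality]
        elif modality in media:
            # Otherwise extract the Descriptors from the captured data.
            descriptors = describe[modality](media[modality])
        else:
            continue  # this Modality is not available
        per_modality[modality] = interpreter(descriptors)
    if not per_modality:
        raise ValueError("no Modality available")
    # Assumed combination rule: the most frequent label wins.
    fused = Counter(per_modality.values()).most_common(1)[0][0]
    return {"per_modality": per_modality, "fused": fused}


# Toy description and interpretation stages (hypothetical rules).
describe = {
    "Text": lambda text: {"exclamations": text.count("!")},
    "Speech": lambda samples: {"peak": max(abs(s) for s in samples)},
}
interpret = {
    "Text": lambda d: "Angry" if d["exclamations"] >= 2 else "Sad",
    "Speech": lambda d: "Angry" if d["peak"] > 0.8 else "Sad",
    "Face": lambda d: "Angry" if d["brows_lowered"] else "Sad",
}

result = estimate_personal_status(
    media={"Text": "Stop it!!", "Speech": [0.1, -0.3, 0.2]},
    upstream_descriptors={"Face": {"brows_lowered": True}},
    describe=describe,
    interpret=interpret,
)
```

Here the Face Descriptors arrive from upstream while the Text and Speech Descriptors are extracted locally, mirroring the role of Selection. Merging the `describe` and `interpret` callables for one Modality into a single callable would correspond to the combined AIM mentioned above.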

2.3 Personal Status Display

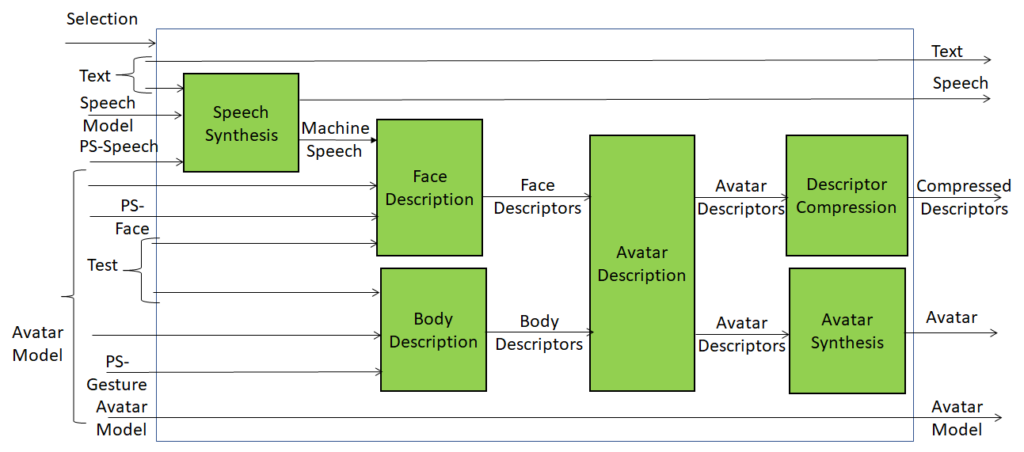

A Personal Status Display (PSD) is a Composite AIM that receives Text and Personal Status and generates an avatar that produces Text and utters Speech with the intended Personal Status, while the avatar’s Face and Gesture show the intended Personal Status. Instead of a ready-to-render avatar, the output can be provided as Compressed Avatar Descriptors. The Personal Status driving the avatar can be extracted from a human or can be synthetically generated by a machine as a result of its conversation with a human or another avatar.

Figure 12 represents the AIMs required to implement Personal Status Display.

Figure 12 – Reference Model of Personal Status Display

The Personal Status Display operates as follows:

- Selection determines the type of avatar output – ready-to-render avatar or compressed avatar descriptors.

- Text is passed as output and synthesised as Speech using the Personal Status provided by PS (Speech).

- Machine Speech and PS (Face) are used to produce the Face Descriptors.

- PS (Gesture) and Text are used for Body Descriptors using the Avatar Model.

- Avatar Description produces a complete set of Avatar Descriptors.

- Descriptor Compression produces Compressed Avatar Descriptors.

- Avatar Synthesis produces a ready-to-render Avatar.
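The operation listed above can be sketched as a single function whose branches follow the value of Selection. The stage functions are placeholders that only record what they were given. Their names, signatures, and return values are hypothetical, and the use of JSON plus zlib for Descriptor Compression is purely an assumption for the sake of a runnable example.

```python
import json
import zlib
from typing import Any, Dict


def synthesise_speech(text: str, ps_speech: str) -> str:
    """Placeholder for speech synthesis driven by PS (Speech)."""
    return f"<speech text={text!r} style={ps_speech!r}>"


def describe_face(machine_speech: str, ps_face: str) -> Dict[str, Any]:
    """Placeholder: Face Descriptors from Machine Speech and PS (Face)."""
    return {"expression": ps_face, "lip_sync_source": machine_speech}


def describe_body(text: str, ps_gesture: str, avatar_model: str) -> Dict[str, Any]:
    """Placeholder: Body Descriptors from PS (Gesture), Text and the Avatar Model."""
    return {"gesture": ps_gesture, "model": avatar_model, "text_length": len(text)}


def personal_status_display(
    text: str, personal_status: Dict[str, str], avatar_model: str, selection: str
) -> Dict[str, Any]:
    speech = synthesise_speech(text, personal_status["Speech"])
    face = describe_face(speech, personal_status["Face"])
    body = describe_body(text, personal_status["Gesture"], avatar_model)
    avatar_descriptors = {"face": face, "body": body}  # Avatar Description
    if selection == "compressed":
        # Descriptor Compression (assumed encoding: JSON bytes compressed with zlib).
        avatar: Any = zlib.compress(json.dumps(avatar_descriptors).encode("utf-8"))
    else:
        # Avatar Synthesis (placeholder for a ready-to-render avatar).
        avatar = {"ready_to_render": True, "descriptors": avatar_descriptors}
    return {"text": text, "speech": speech, "avatar": avatar}


status = {"Speech": "Soothing", "Face": "Soothing", "Gesture": "Respectful"}
compressed = personal_status_display("Hello", status, "model-A", "compressed")
rendered = personal_status_display("Hello", status, "model-A", "rendered")
```

The Text is passed through unchanged in both cases; only the form of the avatar output depends on Selection.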

3 Human-machine dialogue

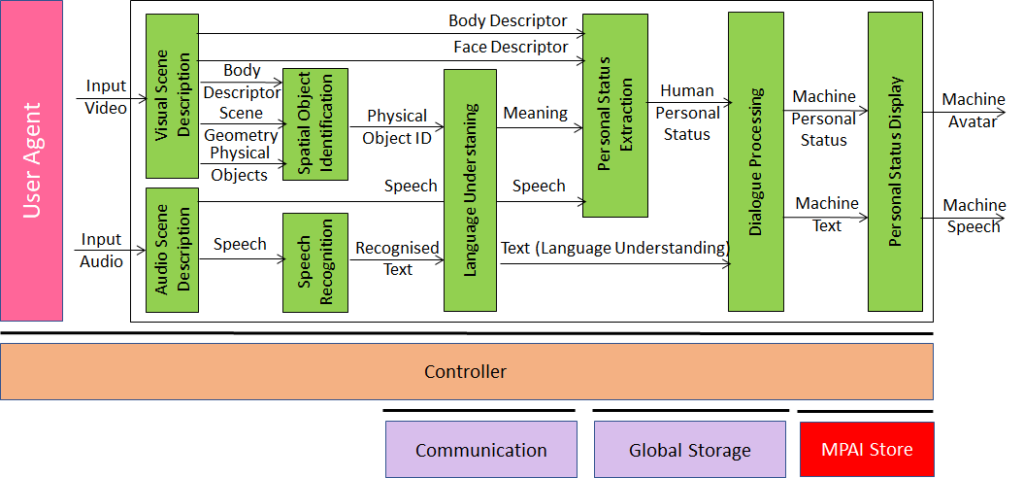

Figure 13 depicts the model of the MPAI Personal-Status-based human-machine dialogue.

Audio Scene Description and Visual Scene Description are two front-end AIMs. The former produces Audio Objects and Audio Scene Geometry; the latter produces Physical Objects, Face and Body Descriptors of the humans, and Visual Scene Geometry.

Body Descriptors, Physical Objects and Visual Scene Geometry are used by the Spatial Object Identification AIM, which uses the Body Descriptors and the Scene Geometry to provide the identifier of the Physical Object that the human is indicating. The Speech extracted from the Audio Scene Descriptor is recognised and passed to the Language Understanding AIM together with the Physical Object ID. The Language Understanding AIM provides a refined text (Text (Language Understanding)) and Meaning (semantic, syntactic, and structural information extracted from input data).

Face and Body Descriptors, Meaning and Speech are used by Personal Status Extraction to extract the Personal Status of the human. Dialogue Processing produces a textual response with an associated machine Personal Status that is congruent with the input Text (Language Understanding) and the human Personal Status. The Personal Status Display AIM produces synthetic Speech and an avatar representing the machine.

Figure 13 – Personal Status-based Human-Machine dialogue
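The data flow of this dialogue model can be summarised as a wiring of callables, one per AIM. The sketch below is a hedged illustration: the dictionary keys, the `dialogue_turn` function, and the toy stand-ins are all hypothetical, and the name `speech_recognition` is an assumption, since the text only says that the Speech "is recognised".

```python
from typing import Any, Callable, Dict


def dialogue_turn(audio_in: Any, visual_in: Any, aims: Dict[str, Callable[..., Any]]) -> Any:
    """One turn of the dialogue, following the data flow described above."""
    audio = aims["audio_scene_description"](audio_in)
    visual = aims["visual_scene_description"](visual_in)
    object_id = aims["spatial_object_identification"](
        visual["body"], visual["objects"], visual["geometry"]
    )
    recognised = aims["speech_recognition"](audio["speech"])
    refined_text, meaning = aims["language_understanding"](recognised, object_id)
    human_ps = aims["personal_status_extraction"](
        visual["face"], visual["body"], meaning, audio["speech"]
    )
    reply_text, machine_ps = aims["dialogue_processing"](refined_text, human_ps)
    return aims["personal_status_display"](reply_text, machine_ps)


# Toy stand-ins so that the wiring can be exercised end to end.
toy_aims: Dict[str, Callable[..., Any]] = {
    "audio_scene_description": lambda a: {"speech": a, "geometry": None},
    "visual_scene_description": lambda v: {**v, "geometry": None},
    "spatial_object_identification": lambda body, objects, geometry: objects[body["pointing_at"]],
    "speech_recognition": lambda speech: speech,  # toy: the speech is already text
    "language_understanding": lambda text, obj: (text.replace("this", obj), {"object": obj}),
    "personal_status_extraction": lambda face, body, meaning, speech: (
        "Confused" if face == "frown" else "Convinced"
    ),
    "dialogue_processing": lambda text, ps: (
        f"Let me explain: {text}",
        "Soothing" if ps == "Confused" else "Respectful",
    ),
    "personal_status_display": lambda text, ps: {"speech": text, "avatar_status": ps},
}

out = dialogue_turn(
    "what is this",
    {"objects": ["lamp", "cup"], "face": "frown", "body": {"pointing_at": 1}},
    toy_aims,
)
```

Passing the AIMs in as a dictionary keeps the wiring separate from any particular Implementation, so each stand-in could be replaced by a functionally equivalent one without touching `dialogue_turn`.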