1 Introduction

2 Information Service

3 Cross-Cultural Information Service

4 Virtual Assistant

5 Conversation companion

6 Strolling in the metaverse

7 Travelling in a Connected Autonomous Vehicle

1 Introduction

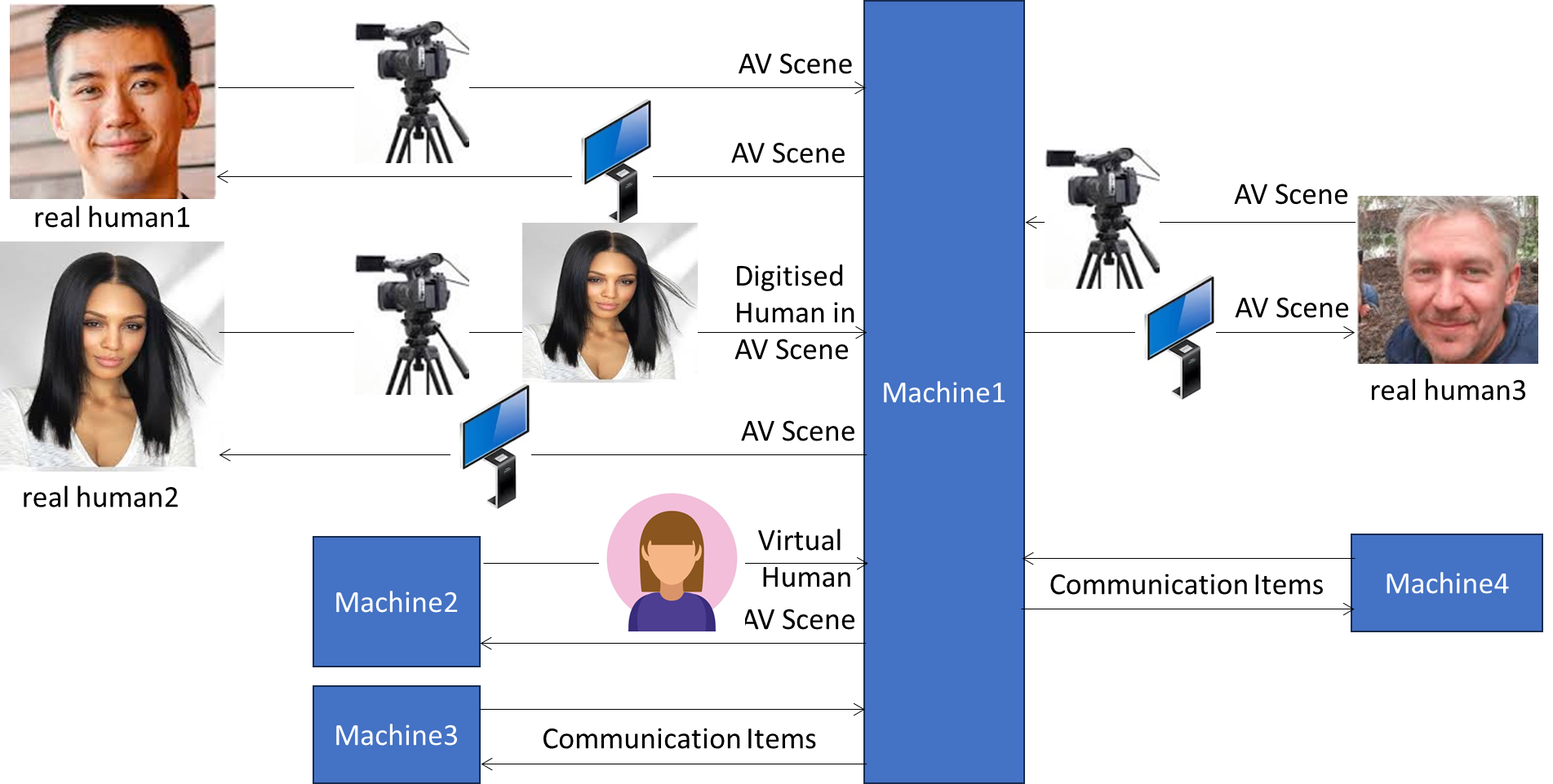

This Annex describes six usage scenarios, most of them as particular cases of the combined usage scenarios of Figure 2, which brings together some of the communication settings between Humans and Machines targeted by HMC-CEC. In Figure 2, the term Machine followed by a number indicates an HMC-CEC Implementation. A Machine can be an application, a device, or a function of a larger system. For simplicity, the Text component is not shown in Figure 2, but HMC-CEC supports it.

Figure 2 – Combined usage scenarios of MPAI-HMC communication

Figure 2 describes the following usage scenarios:

- real human1 in his real environment and Machine1 communicate if:

- real human1 emits audio-visual signals in an audio-visual scene that the sensors of Machine1 convert to Audio-Visual Scenes.

- Machine1 generates Audio-Visual Scenes that its actuators convert to audio-visual signals.

- real human1 and real human3 – belonging to different cultural environments – communicate if:

- Both real humans emit audio-visual signals in audio-visual scenes that the sensors of Machine1 convert to Audio-Visual Scenes.

- Machine1 converts (e.g., translates) the semantics of the Audio-Visual Scenes sensed from the audio-visual scene where one real human (real human1 or real human3) resides to those of the cultural environment of the other (real human3 or real human1), and generates Audio-Visual Scenes that its actuators convert to audio-visual signals.

- real human1 and Machine4 communicate if:

- real human1 emits audio-visual signals that the Sensors of Machine1 convert to Audio-Visual Scenes.

- Machine1 converts the semantics of the Audio-Visual Scenes to Machine4’s cultural environment and generates either Audio-Visual Scenes or Communication Items formatted according to the Portable Avatar Format [8].

- Machine4 generates and emits either Audio-Visual Scenes or Communication Items in its own cultural environment.

- Machine1 converts (e.g., translates) the semantics of Audio-Visual Scenes or Communication Items to the semantics of Audio-Visual Scenes in real human1’s cultural environment, and emits Audio-Visual Scenes that real human1 can perceive.

- real human2 in her real environment communicates with Machine1 if:

- real human2 locates her Digitised Human in a Virtual Environment, such as the one specified by the MPAI Metaverse Model – Architecture [7].

- Machine1 perceives the Digitised Human in the Virtual Environment and generates a Virtual Human that real human2 can perceive. The Virtual Environment may use various means to enable real human2 to perceive the Virtual Environment.

- Machine2 communicates with Machine1 if both Machines generate Virtual Humans in a Virtual Environment. Both Machines may communicate with the Digitised Human of real human2 described above if all participants are in the same Virtual Environment.

- Machine3 communicates with:

- real human3 by using the same process as in point 2. above.

- Machine4 by exchanging Audio-Visual Scenes or Communication Items.

Note that Communication Items may include a multimodal message (Text, Speech, Face, and Gesture), an associated Personal Status specifying Emotion, Cognitive State, and Social Attitude [6], language, and information about a Virtual Space [8].
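The components listed in the note above can be pictured as a simple record. The following is a minimal sketch for illustration only: the class and field names (`CommunicationItem`, `MultimodalMessage`, `PersonalStatus`, and their attributes) are assumptions made here and do not reproduce the data types or syntax specified by the Portable Avatar Format [8] or MPAI-MMC [6].

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PersonalStatus:
    """Hypothetical container for the three Personal Status factors."""
    emotion: Optional[str] = None
    cognitive_state: Optional[str] = None
    social_attitude: Optional[str] = None


@dataclass
class MultimodalMessage:
    """Hypothetical multimodal message; every modality is optional."""
    text: Optional[str] = None
    speech: Optional[bytes] = None   # encoded audio, format left open
    face: Optional[bytes] = None     # face descriptors, format left open
    gesture: Optional[bytes] = None  # gesture descriptors, format left open

    def modalities(self) -> List[str]:
        """Return the names of the modalities that are present."""
        names = ("text", "speech", "face", "gesture")
        return [name for name in names if getattr(self, name) is not None]


@dataclass
class CommunicationItem:
    """Illustrative grouping of the components named in the note (not normative)."""
    message: MultimodalMessage = field(default_factory=MultimodalMessage)
    personal_status: Optional[PersonalStatus] = None
    language: Optional[str] = None        # e.g. a language tag such as "en"
    virtual_space: Optional[dict] = None  # free-form placeholder


item = CommunicationItem(
    message=MultimodalMessage(text="Where is the station?"),
    personal_status=PersonalStatus(emotion="neutral"),
    language="en",
)
print(item.message.modalities())
```

Every field is optional in this sketch because the note says that Communication Items "may include" these components.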

Usage Scenarios analysed:

- Information Service

- Cross-Cultural Information Service

- Virtual Assistant

- Conversation companion

- Strolling in the metaverse

- Travelling in a Connected Autonomous Vehicle

2 Information Service

A human in a public space wants to access an information service implemented as a kiosk. The kiosk is equipped with audio-visual sensors that capture the space containing the human, and with processing functions that extract the human as an audio (speech) and visual object while ignoring other humans and other audio and visual objects. The human may request information about an object in the real space by pointing at it with a forefinger (see Conversation About a Scene in MPAI-MMC V2 [6]). The kiosk responds with a speaking avatar displayed by its actuators.

Figure 3 depicts the usage scenario using an appropriate subset of Figure 2.

Figure 3 – Information Service

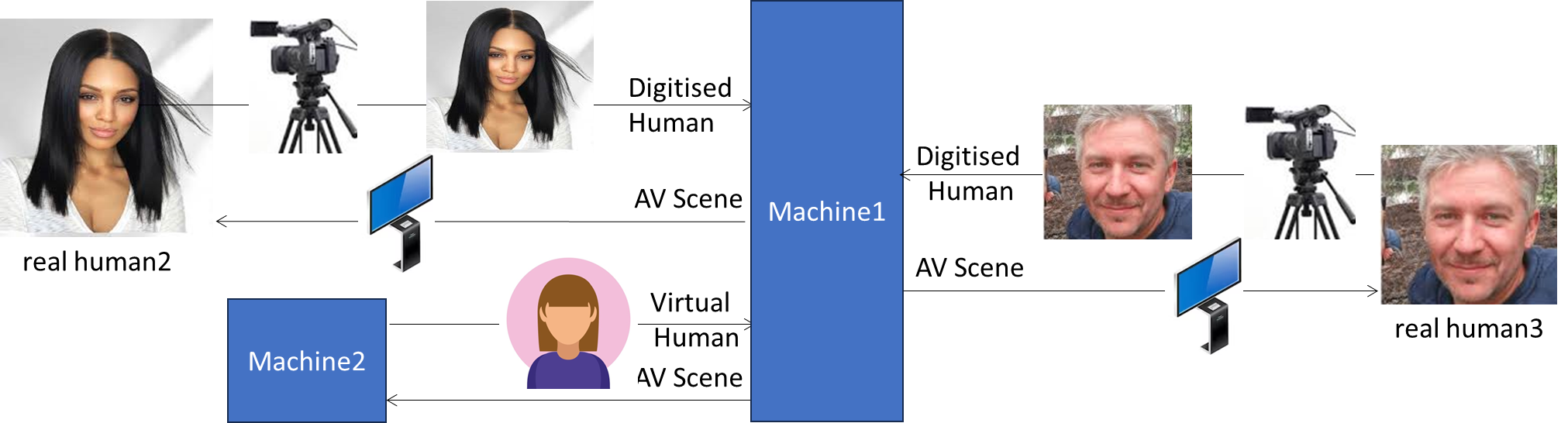

3 Cross-Cultural Information Service

A frequently travelling human uses his portable HMC-enabled device (Machine1), designed and trained to capture the subtleties of that human’s Culture. The human interacts with a local Information Service (Machine4) through Machine1, which acts as an interpreter between the human and Machine4 by exchanging Communication Items that may include the human’s avatar.
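The interpreter role of Machine1 can be sketched as a two-way relay. This is a simplified illustration under stated assumptions: the `Item` class is a text-only stand-in for a Communication Item, cultural environments are reduced to plain labels, and `toy_convert` and `toy_service` are hypothetical placeholders for the conversion performed by Machine1 and for Machine4 respectively.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Item:
    """Hypothetical, text-only stand-in for a Communication Item."""
    text: str
    culture: str  # label of the cultural environment the text is expressed in


# (text, source culture, target culture) -> converted text
Converter = Callable[[str, str, str], str]


def mediate(item: Item, target_culture: str, convert: Converter) -> Item:
    """Re-express an item in the target culture; pass it through if already there."""
    if item.culture == target_culture:
        return item
    return Item(convert(item.text, item.culture, target_culture), target_culture)


def ask_service(question: Item, service_culture: str,
                service: Callable[[Item], Item], convert: Converter) -> Item:
    """Relay: human -> Machine1 -> Machine4 -> Machine1 -> human."""
    request = mediate(question, service_culture, convert)
    reply = service(request)
    return mediate(reply, question.culture, convert)


def toy_convert(text: str, source: str, target: str) -> str:
    """Placeholder conversion that only tags the text with the direction."""
    return f"[{source}->{target}] {text}"


def toy_service(request: Item) -> Item:
    """Placeholder for Machine4: answers in the culture of the request."""
    return Item(f"reply to: {request.text}", request.culture)


answer = ask_service(Item("Where is the museum?", "A"), "B", toy_service, toy_convert)
print(answer.culture)
print(answer.text)
```

In this sketch the conversion runs once in each direction, so the human and Machine4 each receive content expressed in their own cultural environment.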

Figure 4 depicts the usage scenario using the appropriate subset of Figure 2.

Figure 4 – Cross-Cultural Information Service

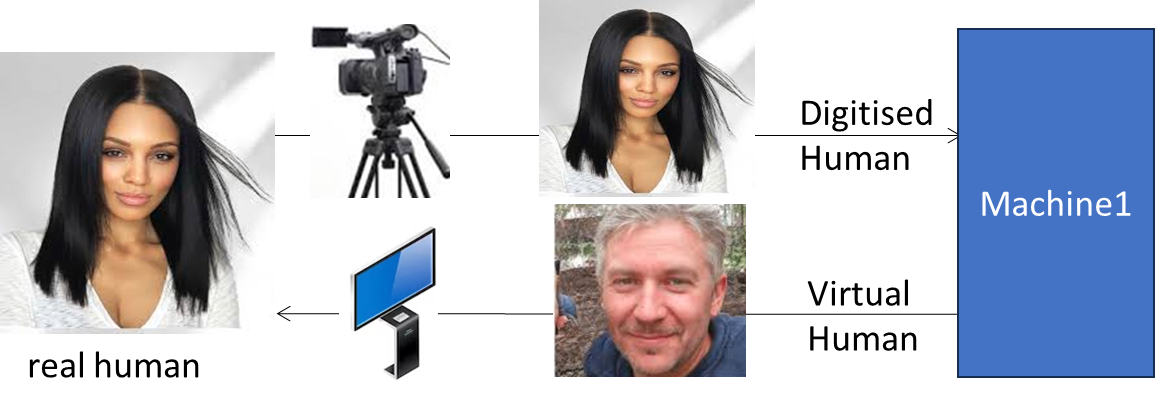

4 Virtual Assistant

This usage scenario has already been developed by MPAI-MMC V2 [6] and is used by the MPAI-PAF Avatar-Based Videoconference (PAF-ABV) [8], a videoconference whose participants are speaking avatars realistically impersonating the human participants. A speaking avatar not representing a human participant is the Virtual Secretary (generated by Machine2) which plays the role of note-taker and summariser by:

- Listening to all Avatars’ Speech.

- Monitoring their Personal Statuses [6].

- Drafting a Summary using the Avatars’ Personal Status and Text, which may be obtained via Face and Body analysis, Speech Recognition, or Text input.
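The three activities above can be sketched as a small note-taking loop. This is an illustrative assumption, not the PAF-ABV design: `Utterance` and `NoteTaker` are hypothetical names, Personal Status is reduced to a single emotion label, and the "summary" is a plain listing where a real summariser would condense the content.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Utterance:
    """Hypothetical record of what one Avatar said and how."""
    avatar_id: str
    text: str     # obtained from speech recognition or from text input
    emotion: str  # one Personal Status factor, kept as a plain label here


class NoteTaker:
    """Collects utterances and drafts a summary from text and Personal Status."""

    def __init__(self) -> None:
        self._log: List[Utterance] = []

    def observe(self, utterance: Utterance) -> None:
        """Listen to an Avatar and record its text and Personal Status."""
        self._log.append(utterance)

    def draft_summary(self) -> str:
        """Placeholder summary: one line per utterance, in arrival order."""
        lines = [f"{u.avatar_id} ({u.emotion}): {u.text}" for u in self._log]
        return "\n".join(lines)


secretary = NoteTaker()
secretary.observe(Utterance("Avatar1", "Let us review the schedule.", "neutral"))
secretary.observe(Utterance("Avatar2", "The deadline is too tight.", "worried"))
print(secretary.draft_summary())
```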

The Portable Avatar of the Virtual Secretary is distributed to all participants, who can then place it around the meeting table.

Figure 5 depicts two Digitised Human participants and one Virtual Human participant (Virtual Secretary). Machine1 acts as cultural mediator between real human2 and real human3.

Figure 5 – Virtual Assistant

5 Conversation companion

A human sitting in her living room wishes to converse about a topic with a Machine, represented and displayed as a speaking avatar. The human asks questions, and the Machine responds. The human displays pleasure, dissatisfaction, or other indications of Personal Status (including Emotion, Cognitive State, and Social Attitude). The Machine responds with appropriate vocal and facial expressions.

Figure 6 illustrates the usage scenario.

Figure 6 – Conversation Companion

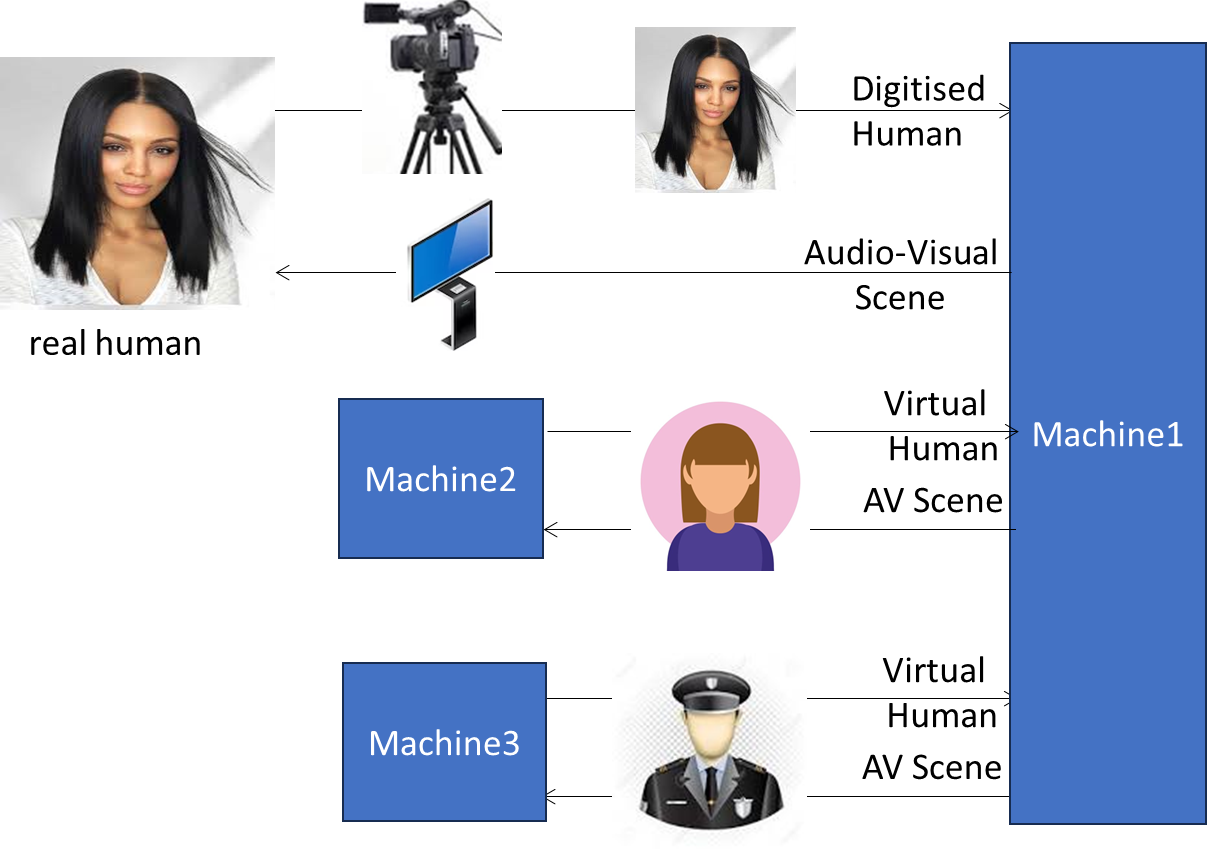

6 Strolling in the metaverse

UserA – a Process representing a human in an M-Instance (a metaverse instantiation) rendered as a speaking Avatar – is in a public area in the M-Instance. She is approached by UserB, a Process rendered as an animated speaking Avatar representing personnel of a company promoting a particular product. UserA does not reject the encounter. UserB captures all relevant information from the speech, face, and body of UserA’s Avatar, and expresses itself by uttering relevant speech and appropriately moving its face and body. Eventually, UserA gets annoyed and calls a security entity (Machine3 in Figure 7) that deals with the complaints of UserA, using audio and visual information as if it were representing a real human.

Figure 7 illustrates the usage scenario. Note that Machine1 includes the function that enables hosting of Digitised and Virtual Humans.

Figure 7 – Strolling in the metaverse

7 Travelling in a Connected Autonomous Vehicle

Two humans travel in a Connected Autonomous Vehicle (CAV) conversing with a Machine that performs some of the functions of the CAV’s Human-CAV Interaction Subsystem [15]. The Machine is aware of the position of the human it is talking to at a particular time and directs its gaze accordingly.
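Directing the gaze towards the current speaker can be sketched as an angle computation. The sketch below rests on assumptions that are not part of the specification: positions are 2D cabin coordinates in metres, the x axis points straight ahead of the displayed avatar, the y axis points to its left, and the seat names and function names are hypothetical.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def gaze_yaw_degrees(origin: Point, target: Point) -> float:
    """Yaw angle from origin to target; 0 is straight ahead, positive is to the left."""
    dx = target[0] - origin[0]
    dy = target[1] - origin[1]
    return math.degrees(math.atan2(dy, dx))


def gaze_towards_speaker(origin: Point, occupants: Dict[str, Point],
                         speaker_id: str) -> float:
    """Look up the position of the human currently speaking and aim the gaze at it."""
    return gaze_yaw_degrees(origin, occupants[speaker_id])


# Hypothetical seat positions relative to the display at the origin.
occupants = {"left_seat": (1.0, 1.0), "right_seat": (1.0, -1.0)}
print(round(gaze_towards_speaker((0.0, 0.0), occupants, "right_seat"), 1))
```

A negative angle means that the avatar turns to its right under the axis convention assumed here.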