MPAI-MMM makes reference to a metaverse instance (M-Instance), a platform offering all or a subset of the following general functions:

- Senses Data from U-Locations, i.e., the real world.

- Transforms the sensed Data into processed Data.

- Produces one or more M-Environments (i.e., subsets of the M-Instance) populated by Objects that can be:

  - Digitised, i.e., sensed from the Universe, possibly animated by activities in the Universe.

  - Virtual and possibly autonomous or driven by activities in the Universe, imported or internally generated.

- Acts on Objects from the M-Instance or potentially from other M-Instances on its initiative or driven by the actions of humans or machines in the Universe.

- Affects U- and/or M-Environments using Objects in ways that are:

  - Consistent with the goals set for the M-Instance.

  - Within the Capabilities of the M-Instance.

  - According to the Rules of the M-Instance.

  - Respecting applicable laws and regulations.

MPAI-MMM assumes that an M-Instance behaves as a system composed of Processes with various degrees of autonomy and interaction. An implementation may merge several MMM-specified Processes into one, or split an MMM-specified Process into more than one process, provided the behaviour of the resulting system is as specified by MMM. Some Processes exercise their activities strictly inside the M-Instance, while others have various degrees of interaction with Data sensed or actuated in the Universe. For convenience, Processes may be identified as Services providing specific Functionalities such as content authoring, Devices connecting the Universe to the M-Instance and the M-Instance to the Universe, Apps running on Devices, and Users representing and acting on behalf of a human entity in the Universe. A Process, especially a User, may be actuated (i.e., rendered) as a Persona, a static or dynamic avatar.

Processes perform their activity by communicating with other Processes or by acting on Items, i.e., instances of MMM-specified Data Types that have been identified in the M-Instance. Examples of Items are Asset, 3D Model, Audio Object, and Audio-Visual Scene. MMM specifies the Functional Requirements of some 30 Actions, i.e., Functionalities that are performed by Processes, and of some 60 Items. A Process holds Process Actions, i.e., Items expressing the Actions that the Process has performed or may perform on certain Items at certain M-Locations during certain Times. Actions inside the Metaverse are prefixed with MM, Actions in the Metaverse influencing the Universe with MU, and Actions in the Universe influencing the Metaverse with UM. For example, MM-Animate is the Action that uses a stream to animate a 3D Model; MU-Actuate is the Action of a Device rendering an Item at a Device to a U-Location as Media with a Spatial Attitude (Position, Orientation, and their velocities and accelerations); and UM-Embed is the Action of placing at an M-Location with a Spatial Attitude an Item produced by Identifying a scene UM-Captured at a U-Location. Many Actions, such as UM-Embed, are Composite Actions, i.e., combinations of Basic Actions.
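
The prefix convention above can be illustrated with a minimal Python sketch. The `Direction` enum and the `action_direction` helper are hypothetical names introduced here for illustration only; they are not part of the MMM specification, and the sketch assumes that an Action name carries its prefix before the first hyphen.

```python
from enum import Enum


class Direction(Enum):
    """Where an Action takes effect, keyed by its prefix (hypothetical type)."""
    METAVERSE_INTERNAL = "MM"       # Action inside the Metaverse
    METAVERSE_TO_UNIVERSE = "MU"    # Action in the Metaverse influencing the Universe
    UNIVERSE_TO_METAVERSE = "UM"    # Action in the Universe influencing the Metaverse
    UNPREFIXED = ""                 # Action name without one of the three prefixes


def action_direction(action_name: str) -> Direction:
    """Classify an Action name by the text before its first hyphen."""
    prefix, separator, _ = action_name.partition("-")
    if separator and prefix in ("MM", "MU", "UM"):
        return Direction(prefix)
    return Direction.UNPREFIXED


if __name__ == "__main__":
    for name in ("MM-Animate", "MU-Actuate", "UM-Embed", "Identify"):
        print(name, "->", action_direction(name).name)
```

Under these assumptions, `MM-Animate`, `MU-Actuate`, and `UM-Embed` map to the three prefixed directions, while a name without a recognised prefix falls into `UNPREFIXED`.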

Rights are a basic notion underpinning the operation of the MMM. A Right is defined as the combination of a Process Action and a Rights Level, which can be Internal (i.e., granted by the M-Instance), Acquired (on the initiative of the Process), or Granted (to the Process by another Process).
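
As a rough illustration of how a Process Action and a Rights Level combine, the following Python sketch models both as plain data classes. All class and field names (`ProcessAction`, `Right`, `may_perform`, `start`, `end`, and so on) are assumptions made for this example and do not reproduce the MMM JSON syntax; Times are simplified to a numeric interval.

```python
from dataclasses import dataclass
from enum import Enum


class RightsLevel(Enum):
    INTERNAL = "Internal"   # granted by the M-Instance
    ACQUIRED = "Acquired"   # obtained on the initiative of the Process
    GRANTED = "Granted"     # given to the Process by another Process


@dataclass(frozen=True)
class ProcessAction:
    """An Action on an Item at an M-Location during a time interval (hypothetical fields)."""
    action: str
    item: str
    m_location: str
    start: float
    end: float


@dataclass(frozen=True)
class Right:
    """A Right pairs a Process Action with a Rights Level."""
    process_action: ProcessAction
    level: RightsLevel


def may_perform(rights, action, item, m_location, time) -> bool:
    """Return True if any held Right covers the requested Action at the given time."""
    return any(
        r.process_action.action == action
        and r.process_action.item == item
        and r.process_action.m_location == m_location
        and r.process_action.start <= time <= r.process_action.end
        for r in rights
    )


if __name__ == "__main__":
    held = [Right(ProcessAction("MM-Animate", "3D Model", "room-1", 0.0, 100.0),
                  RightsLevel.INTERNAL)]
    print(may_perform(held, "MM-Animate", "3D Model", "room-1", 50.0))
    print(may_perform(held, "MM-Animate", "3D Model", "room-1", 150.0))
```

The check is deliberately simple: a request is allowed only when the Action, the Item, the M-Location, and the time all fall within a Right that the Process holds.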

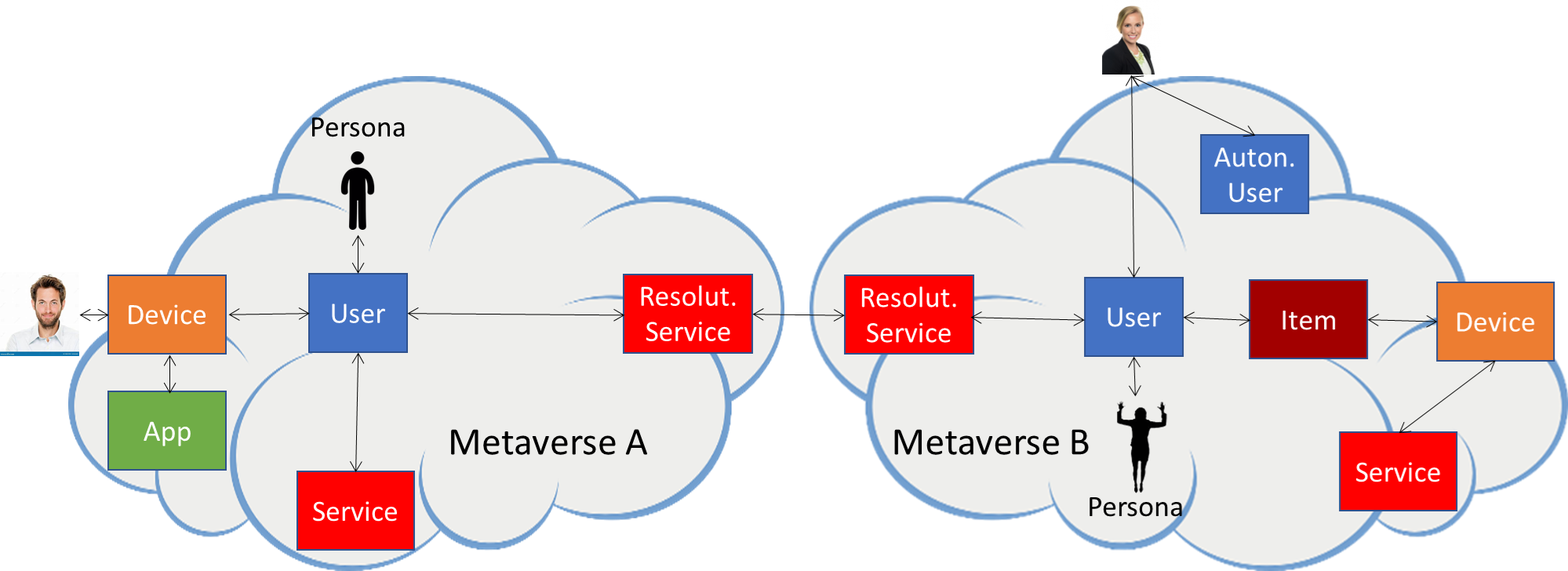

A Process can request another Process to perform an Action on its behalf by using the Inter-Process Protocol. If the requested Process is in another M-Instance, it will use the Inter-M-Instance Protocol to request a Resolution Service of its M-Instance to establish a communication with another Resolution Service in the other M-Instance. The Backus Naur form of the MMM-Script enables efficient communication between Processes. This is depicted in Figure 1.

Figure 1 – Inter-Process Communication
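
The routing decision described here can be sketched in Python as follows. This is only an illustration under stated assumptions: `ProcessId`, `route_request`, and the `resolution-service@...` labels are hypothetical, and the sketch assumes that the Resolution Service of the destination M-Instance is the last hop before the requested Process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessId:
    """Identifies a Process by its M-Instance and a local name (hypothetical type)."""
    m_instance: str
    name: str


def route_request(source: ProcessId, destination: ProcessId) -> list:
    """Return the assumed sequence of hops for a request from source to destination."""
    if source.m_instance == destination.m_instance:
        # Same M-Instance: the Inter-Process Protocol connects the two Processes directly.
        return [source.name, destination.name]
    # Different M-Instances: go through one Resolution Service on each side.
    return [
        source.name,
        f"resolution-service@{source.m_instance}",
        f"resolution-service@{destination.m_instance}",
        destination.name,
    ]


if __name__ == "__main__":
    user = ProcessId("instance-a", "user-1")
    local_service = ProcessId("instance-a", "authoring-service")
    remote_service = ProcessId("instance-b", "authoring-service")
    print(route_request(user, local_service))
    print(route_request(user, remote_service))
```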

To be admitted to an M-Instance, a human may be requested to provide a subset of their Personal Profile and to Transact a Value (Amount in a Currency). The M-Instance then grants certain Rights to identified Processes of the Registered human, including the import of Personae for their Users.

One of the issues hindering the early development of standards for interoperable metaverses is the fast pace of change in certain technology areas. MMM deals with this issue by providing JSON syntax and semantics for all Items. When needed, the JSON syntax references Qualifiers, MPAI-defined Data Types that provide additional information about a Data Type in the form of Sub-Type (e.g., the colour space of a Visual Data Type), Format (e.g., the compression or the file/streaming format of Speech), and Attributes (e.g., the Binaural Cues of Audio). An M-Instance or a Client receiving a Visual Object can thus determine whether it has the technology to render that Visual Object or whether it should rely on a Conversion Service to obtain a version of the Object suited to the M-Instance or Client.
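
The following Python sketch shows how a receiver might use a Qualifier to decide between rendering an Object directly and calling a Conversion Service. The JSON layout, the key names (`item`, `qualifier`, `sub_type`, `format`, `attributes`), and the placeholder values are assumptions made for illustration; they are not the JSON syntax defined by MMM.

```python
import json

# Hypothetical JSON for a received Visual Object; keys and values are placeholders.
VISUAL_OBJECT_JSON = """
{
  "item": "Visual Object",
  "qualifier": {
    "sub_type": "RGB",
    "format": "PNG",
    "attributes": {}
  }
}
"""


def needs_conversion(item: dict, supported_formats: set) -> bool:
    """Return True when the receiver cannot handle the Format named in the Qualifier."""
    return item["qualifier"]["format"] not in supported_formats


if __name__ == "__main__":
    visual_object = json.loads(VISUAL_OBJECT_JSON)
    for formats in ({"PNG", "JPEG"}, {"JPEG"}):
        if needs_conversion(visual_object, formats):
            print(sorted(formats), "-> ask a Conversion Service")
        else:
            print(sorted(formats), "-> render directly")
```

A fuller check would presumably also compare the Sub-Type and Attributes; the sketch looks at the Format only to keep the decision visible.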

Implementing an M-Instance can be a costly undertaking if all technologies required by the MMM Technical Specification are implemented. MMM-Profiles are introduced to facilitate the take-off of the metaverse. A Profile includes a subset of Actions and Items that are expected to be needed by a sizeable number of applications.
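
Because a Profile is a subset of Actions and Items, checking whether a Profile covers an application reduces to a set-inclusion test. The Python sketch below illustrates this; the `Profile` class, the `supports` helper, and the profile named `example-profile` are hypothetical and do not correspond to any Profile defined by MMM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """A named subset of Actions and Items (hypothetical structure)."""
    name: str
    actions: frozenset
    items: frozenset


def supports(profile: Profile, required_actions, required_items) -> bool:
    """Return True if every required Action and Item is included in the Profile."""
    return (set(required_actions) <= profile.actions
            and set(required_items) <= profile.items)


if __name__ == "__main__":
    example = Profile(
        name="example-profile",
        actions=frozenset({"MM-Animate", "MU-Actuate"}),
        items=frozenset({"Asset", "3D Model"}),
    )
    print(supports(example, ["MM-Animate"], ["3D Model"]))
    print(supports(example, ["UM-Embed"], ["3D Model"]))
```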

Some use cases were introduced in the two Technical Reports published in 2023 to drive the development of Technical Specifications. MMM includes several Verification Use Cases that use MMM-Script to verify that the currently specified Actions and Items enable full support of the Use Cases.