
Table of Contents of Chapter 8 – Use Cases

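- 8.1 Introduction
- 8.2 Virtual Lecture
- 8.3 Virtual Meeting
- 8.4 Hybrid Working
- 8.5 eSports Tournament
- 8.6 Virtual Performance
- 8.7 AR Tourist Guide
- 8.8 Virtual Dance
- 8.9 Virtual Car Showroom
- 8.10 Drive a Connected Autonomous Vehicle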

8.1 Introduction

This Chapter collects Metaverse Use Cases to facilitate the development of Functionality Profiles.

Use Cases are populated by Users that request to perform Actions on different types of Items. In a Use Case, a User is identified by a single subscript. An Item is identified by the subscript of the User performing an Action on it, followed by a sequential number. For instance:

- Useri MM-Embeds Personaj at M-Locationi.k, etc.

- Useri MU-Renders Entityj at U-Locationi.k, etc.

- Useri MM-Sends Objectj to Userk.
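As an illustration only, the identifier convention can be expressed as a small Python helper. The function names `item_id` and `parse_item_id` and the regular expression are hypothetical. The pattern assumes identifiers shaped like those in the workflow tables (e.g., `Persona2.1`, `M-Location3.2`) and does not cover the `Personae2.i` range notation.

```python
import re

# Hypothetical helper for the naming convention: an Item carries the subscript
# of the User acting on it, followed by a sequential number (User2 -> Persona2.1).
ID_PATTERN = re.compile(r"^(?P<kind>[A-Za-z][A-Za-z-]*?)(?P<user>\d+)\.(?P<seq>\d+)$")


def item_id(kind: str, user: int, seq: int) -> str:
    """Build an Item identifier such as 'Persona2.1'."""
    return f"{kind}{user}.{seq}"


def parse_item_id(identifier: str) -> tuple[str, int, int]:
    """Split an identifier into (Item type, User subscript, sequential number)."""
    match = ID_PATTERN.match(identifier)
    if match is None:
        raise ValueError(f"not a User-scoped Item identifier: {identifier!r}")
    return match["kind"], int(match["user"]), int(match["seq"])


print(item_id("Persona", 2, 1))        # Persona2.1
print(parse_item_id("M-Location3.2"))  # ('M-Location', 3, 2)
```

For example, `parse_item_id("Value2.1")` returns `("Value", 2, 1)`, recovering that User2 acted on the Item.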

| Note1 | The following abbreviations will be used: A = Audio, AA = Autonomous Agent, AV = Audio-Visual, AVH = Audio-Visual-Haptic, SA = Spatial Attitude, VS = Virtual Secretary. |

| Note2 | The Basic Actions of a Composite Action are not listed unless they are independently used by the Use Case. |

8.2 Virtual Lecture

8.2.1 Description

A student attends a lecture held by a teacher in a classroom created by a school manager:

- A School Manager:
  - Authors and embeds a virtual classroom.
  - Pays the teacher.
- A Teacher:
  - Embeds its persona from home at the classroom’s desk.
  - Embeds and animates a 3D model.
  - Leaves the classroom.
- A Student:
  - While “at home”, pays to attend the lecture and to make a copy of their lecture Experience.
  - Then embeds a persona in the classroom.
  - Goes close to the teacher’s desk to feel the 3D model with haptic gloves.
  - Stores their lecture Experience.
  - Leaves the classroom.

The detailed workflow is:

- User1 (Manager):
  - Authors Entity1.
  - MM-Embeds Entity1 at M-Location1.1.
- User3 (Teacher):
  - Tracks Persona1 (AV) at M-Location3.1 with SA (establishes a connection between the human and the Persona).
  - MM-Embeds Persona1 at M-Location3.2 (desk in classroom).
  - MM-Disables Persona1 at M-Location3.1.
  - MM-Embeds Model1 at M-Location3.3 (close to M-Location3.2).
  - MM-Animates Model1.
- User2 (Student):
  - Tracks Persona1 (AV) at M-Location2.1 with SA.
  - Transacts Value1.
  - MM-Embeds Persona1 (AV) at M-Location2.2 with SA.
  - MM-Disables Persona1 at M-Location2.1.
- User3 (Teacher):
  - MM-Embeds Model1 (AVH) at M-Location3.3 (close to M-Location3.2).
  - MM-Animates Model1.
- User2 (Student):
  - MM-Adds Persona1 (AV) at M-Location2.3 (close to the desk).
  - MU-Captures Model1 (AVH).
  - MU-Exports Experience1 at Address2.1.
- User1 (Manager):
  - Transacts Value1 to User3 (Teacher).
- User3 (Teacher):
  - MM-Disables Persona1 from M-Location3.2.
  - MM-Embeds Persona1 at M-Location3.1.
- User2 (Student):
  - MM-Disables Persona1 from M-Location2.2.
  - MM-Embeds Persona1 at M-Location2.1.
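This workflow repeats one pattern: MM-Embed a Persona at a new M-Location, then MM-Disable it at the old one. That pattern can be sketched as a small registry. This is a hypothetical Python model, not an MPAI API. `PresenceRegistry`, `move`, and the treatment of Tracks as an initial presence are assumptions made for illustration.

```python
from collections import defaultdict


class PresenceRegistry:
    """Hypothetical model of where each Persona is MM-Embedded.

    Assumption: MM-Embed adds an M-Location to a Persona's presence set and
    MM-Disable removes it. Between the two Actions the Persona is briefly
    present at both M-Locations.
    """

    def __init__(self) -> None:
        self._where: dict[str, set[str]] = defaultdict(set)

    def mm_embed(self, persona: str, m_location: str) -> None:
        self._where[persona].add(m_location)

    def mm_disable(self, persona: str, m_location: str) -> None:
        # Disabling a Persona where it is not embedded is treated as an error.
        if m_location not in self._where[persona]:
            raise ValueError(f"{persona} is not embedded at {m_location}")
        self._where[persona].remove(m_location)

    def locations(self, persona: str) -> set[str]:
        return set(self._where.get(persona, set()))


def move(registry: PresenceRegistry, persona: str, src: str, dst: str) -> None:
    """MM-Embed at the destination first, then MM-Disable at the source."""
    registry.mm_embed(persona, dst)
    registry.mm_disable(persona, src)


# The Teacher Tracks at home (modelled here as presence), then goes to the desk.
registry = PresenceRegistry()
registry.mm_embed("Persona3.1", "M-Location3.1")
move(registry, "Persona3.1", "M-Location3.1", "M-Location3.2")
print(registry.locations("Persona3.1"))  # {'M-Location3.2'}
```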

8.2.2 Workflow and Actions

Table 10 – Virtual Lecture workflow and Actions.

| Who | Does | What | Where/comment |
| User1 (Manager) | Authors | Entity1.1 | (VClassroom). |
| | MM-Embeds | Entity1.1 | M-Location1.1. |
| User3 (Teacher) | Tracks | Persona3.1 (AV) | M-Location3.1 w/ SA. |
| | MM-Embeds | Persona3.1 | M-Location3.2 (desk). |
| | MM-Disables | Persona3.1 | M-Location3.1. |
| | MM-Embeds | Model3.1 | M-Location3.3 (close to desk). |
| | MM-Animates | Model3.1 | |
| User2 (Student) | Tracks | Persona2.1 (AV) | M-Location2.1 w/ SA. |
| | Transacts | Value2.1 | (Lecture & Experience). |
| | MM-Embeds | Persona2.1 (AV) | M-Location2.2 w/ SA. |
| | MM-Disables | Persona2.1 | M-Location2.1. |
| User3 (Teacher) | MM-Embeds | Model3.1 (AVH) | M-Location3.3 (close to desk). |
| | MM-Animates | Model3.1 | |
| User2 (Student) | MM-Adds | Persona2.1 (AV) | M-Location2.3 (close to desk). |
| | MU-Captures | Model3.1 (AVH) | |
| | MU-Exports | Experience2.1 | Address2.1. |
| User1 (Manager) | Transacts | Value1.1 | User3 (Lecture fees). |
| User3 (Teacher) | MM-Disables | Persona3.1 | M-Location3.2. |
| | MM-Embeds | Persona3.1 | M-Location3.1. |
| User2 (Student) | MM-Disables | Persona2.1 | M-Location2.2. |
| | MM-Embeds | Persona2.1 | M-Location2.1. |

8.2.3 Actions, Items, and Data Types

Table 11 – Virtual Lecture Actions, Items, and Data Types.

| Actions | Items | Data Types |

| Authenticate | Service | Amount |

| Author | Entity | Coordinates |

| MM-Embed | M-Location | Currency |

| MM-Disable | U-Location | Spatial Attitude |

| MM-Animate | Value | Value |

| Register | User | Orientation |

| Track | Persona | Position |

| Transact | Experience | |

| MU-Export | | |

8.3 Virtual Meeting

8.3.1 Description

A participant attends a meeting in a room created by a meeting manager. The manager deploys a virtual secretary to produce a summary of the conversations, enriched by information about participants’ personal statuses. The participant gets a translation of sentences uttered in languages other than their own and makes a presentation using a 3D model.

This is the workflow of the use case:

- User1 (Meeting Manager):
  - MM-Embeds a virtual meeting room at M-Location1.1.
  - MM-Embeds Persona1 (a Virtual Secretary, i.e., a Process MM-Animating a Persona of User1) at M-Location1.2.
  - MM-Animates the Virtual Secretary.
- User2 (a meeting participant):
  - Tracks Persona1 at M-Location2.1 (“its home”).
  - MM-Embeds Persona1 (AV) at M-Location2.2 (“the meeting room”; a Persona can be MM-Enabled as Audio only, Audio-Visual, or Audio-Visual-Haptic).
  - MM-Disables Persona1 (AV) from M-Location2.1 (disappears from “home”).
  - Interprets Persona1 of User3 (the second meeting participant), i.e., requests a translation of its speech.
  - MM-Embeds Entity1 (a 3D model) at M-Location2.3 (“place in the meeting room”).
  - MM-Animates Entity1 (to make the presentation controlling the 3D model).
- Virtual Secretary:
  - Interprets Persona1’s Personal Status (requests an estimation of the internal state of Persona3.1, possibly in addition to the translation of Persona3.1’s Speech Objects).
  - Produces a text Summary of Persona1’s Speech Object (with added graphical signs expressing Persona3.1’s Personal Status).
  - MM-Embeds the Summary at M-Location1.3 (“meeting room”, for participants to comment).

8.3.2 Workflow and Actions

Table 12 – Virtual Meeting workflow and actions.

| Who | Does | What | Where/comment |
| User1 (Manager) | MM-Embeds | Entity1.1 | (VMeeting room) M-Location1.1 |
| | MM-Embeds | Persona1.1 | (Virtual Secretary) M-Location1.2 |
| | MM-Animates | Persona1.1 | Operates Virtual Secretary. |
| User2 (Participant) | Tracks | Persona2.1 (AV) | M-Location2.1 w/ SA |
| | MM-Embeds | Persona2.1 (AV) | M-Location2.2 w/ SA |
| | MM-Disables | Persona2.1 (AV) | M-Location2.1 |
| User3 (Participant) | Tracks | Persona3.1 (AV) | M-Location3.1 w/ SA |
| | MM-Embeds | Persona3.1 (AV) | M-Location3.2 w/ SA |
| | MM-Disables | Persona3.1 (AV) | M-Location3.1 |
| User2 (Participant) | Interprets | Persona3.1 | (Requests translation) |
| | MM-Embeds | Model2.1 | (3D presentation) M-Location2.2 |
| | MM-Animates | Model2.1 | |
| Virtual Secretary | Interprets | Persona2.1 | (Personal Status) |
| | Produces | Entity1.2 | (Summary) |
| | MM-Embeds | Entity1.2 | M-Location1.3 (VMeeting room) |
| | MM-Disables | Persona1.1 | M-Location1.2 |
| User2 (Participant) | MU-Exports | Event2.1 | Address2.1 |
| | MM-Embeds | Persona2.1 (AV) | M-Location2.1 (back home) |
| | MM-Disables | Persona2.1 (AV) | M-Location2.2 |
| User3 (Participant) | MM-Embeds | Persona3.1 (AV) | M-Location3.1 (back home) |
| | MM-Disables | Persona3.1 (AV) | M-Location3.2 |

8.3.3 Actions, Items, and Data Types

Table 13 – Virtual Meeting Actions, Items, and Data Types.

| Actions | Items | Data Types |
| Authenticate | Entity | Coordinates |
| Call | Persona | Orientation |
| Interpret | Service | Position |
| MM-Animate | User | Spatial Attitude |
| MM-Capture | | |
| MM-Disable | | |
| MM-Embed | | |
| MU-Export | | |
| Register | | |
| Track | | |

8.4 Hybrid Working

8.4.1 Description

A company applies a mixed in-presence and remote working policy.

- R-workers (real workers) attend the company physically; V-workers (virtual workers) work remotely.
- All Workers:
  - Are Authenticated.
  - Are present in the Virtual office.
  - Communicate by sharing AV messages (except R-worker to R-worker).
  - Participate in Virtual meetings.
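The communication rule in the list above can be read as a predicate over the kinds of the two Workers. The following is a hypothetical Python sketch. `WorkerKind` and `needs_av_message` are illustrative names, and the rule encodes only the R-worker to R-worker exception stated in the policy.

```python
from enum import Enum


class WorkerKind(Enum):
    R = "R-worker"  # attends the company physically
    V = "V-worker"  # works remotely


def needs_av_message(sender: WorkerKind, receiver: WorkerKind) -> bool:
    """True if the two Workers communicate by sharing AV messages.

    Every pair does so except R-worker to R-worker.
    """
    return not (sender is WorkerKind.R and receiver is WorkerKind.R)


for s in WorkerKind:
    for r in WorkerKind:
        print(s.value, "->", r.value, needs_av_message(s, r))
```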

This is the detailed workflow:

- User1 (Manager):
  - Authors Entity1 (Virtual office).
  - MM-Embeds Entity1 (Virtual office).
  - MM-Embeds Entity2 at M-Location1.1 (Office gateway).
  - MM-Animates Persona1 as “Virtual time clock”.
- Real worker:
  - Comes to the real office.
- User2 (R-worker):
  - MM-Embeds Persona1 at M-Location2.1 (Virtual office).
- User3 (V-worker):
  - Tracks Persona1 at M-Location3.1.
  - MM-Embeds Persona1 at M-Location3.2 (Virtual office).
- User1 (“virtual time clock”) Authenticates:
  - User2 (R-worker).
  - User3 (V-worker).
- User3 (V-worker):
  - MM-Disables Persona1 at M-Location3.1.
  - MM-Sends Object1 (A) to User2 (R-worker).
  - MM-Embeds Persona1 at M-Location3.3 (close to R-worker).
  - MM-Disables Persona1 at M-Location3.2.
  - MM-Embeds Persona1 at M-Location3.4 (VMeeting room).
  - MM-Disables Persona1 at M-Location3.3.
- User2 (R-worker):
  - MM-Embeds Model1 (VWhiteboard) at M-Location2.2 (VMeeting room).
  - MM-Animates Model1 (VWhiteboard).
  - MM-Disables Persona1 at M-Location2.2 (VMeeting room).
- User3 (V-worker):
  - MM-Embeds Persona1 at M-Location3.1 (Home).
  - MM-Disables Persona1 at M-Location3.4 (VMeeting room).

8.4.2 Workflow and Actions

Table 14 – Hybrid Working workflow and actions.

| Who | Does | What | Where/comment |
| User1 (Manager) | Authors | Entity1.1 (AV) | V-Office |
| | MM-Embeds | Entity1.1 | M-Location1.1 |
| | MM-Embeds | Persona1.1 (AV) | M-Location1.2 (VTime clock) |
| | MM-Animates | Persona1.1 (AV) | M-Location1.2 |
| User2 (R-Worker) | Tracks | Persona2.1 (AV) | M-Location2.1 (VOffice) |
| | Registers | | |
| | MM-Embeds | Persona2.1 | M-Location2.2 (VOffice) |
| User3 (V-Worker) | Tracks | Persona3.1 (AV) | M-Location3.1 (home) |
| | MM-Embeds | Persona3.1 | M-Location3.2 w/ SA (V-Desk) |
| User1 (VTime clock) | Authenticates | User2 | |
| | Authenticates | User3 | |
| User3 (V-Worker) | MM-Sends | Object3.1 (A) | Persona2.1 (AV) |
| | MM-Embeds | Persona3.1 | M-Location3.3 (talk “in person”) |
| | MM-Disables | Persona3.1 (AV) | M-Location3.2 |
| | MM-Embeds | Persona3.1 | M-Location3.4 (V-Meeting) |
| | MM-Disables | Persona3.1 (AV) | M-Location3.3 |
| User2 (R-Worker) | MM-Embeds | Persona2.1 | M-Location3.4 (V-Meeting) |
| | MM-Disables | Persona2.1 (AV) | M-Location2.2 |
| | MM-Embeds | Entity2.1 | (Whiteboard) M-Location3.4 |
| | MM-Animates | Entity2.1 | To operate Whiteboard |
| | MM-Embeds | Persona2.1 (AV) | M-Location2.1 (back home) |
| | MM-Disables | Persona2.1 | From M-Location3.4 |
| User3 (V-Worker) | MM-Embeds | Persona3.1 (AV) | M-Location3.1 (back home) |
| | MM-Disables | Persona3.1 (AV) | From M-Location3.4 |

8.4.3 Actions, Items, and Data Types

Table 15 – Hybrid Working Actions, Items, and Data Types

| Actions | Items | Data Types |

| Author | Entity | Coordinates |

| Call | M-Location | Orientation |

| MM-Disable | Object (A) | Position |

| MM-Embed | Persona (AV) | Spatial Attitude |

| MM-Send | Service | |

| Track | U-Location | |

| | User | |

8.5 eSports Tournament

8.5.1 Description

A site manager makes a game landscape available to a game manager. The game manager deploys autonomous characters and places a virtual camera and microphone in the landscape. The game manager then displays the whole landscape on a big screen and streams it.

This is the detailed workflow:

- User1 (Site Manager):
  - Authors Entity1 (game landscape).
  - MM-Embeds Entity1 (game landscape) at M-Location1.1.
- User2 (Game Manager):
  - MM-Embeds Personae2.i with Spatial Attitude at M-Locations2.i (autonomous characters).
  - MM-Animates Personae2.i.
  - Calls Service1 (virtual camera/microphone control).
  - MU-Renders the Entities at M-Location1.1 (landscape) to:
    - U-Location1 via Device1.1 (screen).
    - Various U-Locations (via streaming).
- User3 (Player):
  - Tracks Persona1 (AV) at M-Location3.1 with Spatial Attitude.
  - Calls Process1 to provide role-specific:
    - Costumes (e.g., magician, warrior).
    - Forms, physical features, and abilities (e.g., cast spells, shoot, fly, jump).

8.5.2 Workflow

Table 16 – eSports Tournament workflow and actions.

| Who | Does | What | Where/comment |
| User1 (Site Manager) | Authors | Entity1.1 | (Game landscape). |
| | MM-Embeds | Entity1.1 | (Landscape) at M-Location1.1. |
| User2 (Game Manager) | MM-Embeds | Personae2.i w/ SA | M-Locations2.i (AA). |
| | MM-Animates | Personae2.i | M-Locations2.i (AA). |
| | Calls | Service2.1 | (VCamera/microphone control). |
| | MU-Renders | Entities (Landscape) | To U-Location2.1 (via screen) and to U-Locations (via streaming). |
| User3 (Player) | Tracks | Persona3.1 w/ SA | M-Location3.1 |
| | Calls | Process3.1 | To provide role-specific costumes (magician, warrior), forms, features, and abilities. |

8.5.3 Actions, Items, and Data Types

Table 17 – eSports Tournament Actions, Items, and Data Types.

| Actions | Items | Data Types |

| Author | User | Spatial Attitude |

| Call | Persona (AV) | Coordinates |

| MM-Animate | Entity | Orientation |

| MM-Embed | Service | Position |

| Track | U-Location | |

| | M-Location | |

8.6 Virtual Performance

8.6.1 Description

A participant buys a ticket for an event that includes the right to stay close to the performance stage for 5 minutes. The event is run by an organiser who has created a virtual auditorium. The organiser generates special effects by calling two services: one collects the participants’ preferences and the other extracts the participants’ Personal Status. The participant also utters a private speech to another participant.
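The time-limited right bought with the ticket can be modelled as a grant with an expiry time. The Organiser checks that expiry before MM-Disabling the Persona near the stage. This is a hypothetical Python sketch. `StageAccess` and the use of wall-clock `datetime` values are assumptions, the dates are made up, and only the 5-minute duration comes from the text.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class StageAccess:
    """Right to keep a Persona close to the stage for a limited time."""
    persona: str
    granted_at: datetime
    duration: timedelta = timedelta(minutes=5)  # duration stated in the Use Case

    def expires_at(self) -> datetime:
        return self.granted_at + self.duration

    def expired(self, now: datetime) -> bool:
        """True once the Organiser should MM-Disable the Persona near the stage."""
        return now >= self.expires_at()


access = StageAccess("Persona3.1", granted_at=datetime(2024, 1, 1, 21, 0))
print(access.expired(datetime(2024, 1, 1, 21, 4)))  # False
print(access.expired(datetime(2024, 1, 1, 21, 5)))  # True
```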

- User1 (Organiser):
  - Transacts Value1 (buys a parcel at M-Location1.1).
  - Authors Entity1 (virtual auditorium).
  - MM-Embeds Entity1 at M-Location1.1.
  - Calls Service1 (to collect Users’ Preferences).
- User2 (Performer):
  - Tracks Persona1 at M-Location2.1.
  - MM-Embeds Persona1 (AV) with Spatial Attitude at M-Location2.2 (in virtual auditorium).
  - MM-Disables Persona1 from M-Location2.1.
- User3 (Participant):
  - Tracks Persona1 at M-Location3.1 (at home).
  - Transacts Value1 (buys ticket).
  - MM-Embeds Persona1 (AV) with Spatial Attitude at M-Location3.2 (in virtual auditorium).
  - MM-Disables Persona1 (AV) from M-Location3.1.
  - MM-Sends Object1 (A) to Persona4.1 (Participant).
  - Calls Service1 (expresses preferences).
  - MM-Adds Persona1 at M-Location3.2 (close to stage for 5 minutes).
- User1 (Organiser):
  - MM-Disables Persona1 from M-Location3.2 (5 minutes passed).
  - Calls Service1 (collects preferences).
  - Interprets the Personal Status of all participants.
  - MM-Embeds Entities1.i (SFX).
  - Transacts Value2 to User2 (performance fees).
- User2 (Performer):
  - MM-Embeds Persona1 (AV) at M-Location2.1.
  - MM-Disables Persona1 from M-Location2.2.
- User3 (Participant):
  - MM-Embeds Persona1 (AV) at M-Location3.1.
  - MM-Disables Persona1 from M-Location3.2.

8.6.2 Workflow and Actions

Table 18 – Virtual Event workflow and actions.

| Who | Does | What | Where/comment |
| User1 (Organiser) | Transacts | Value1.1 | (Parcel at M-Location1.1). |
| | Authors | Entity1.1 | (Virtual auditorium). |
| | MM-Embeds | Entity1.1 | M-Location1.1. |
| | Calls | Service1.1 | (Collect Users’ Preferences). |
| User2 (Performer) | Tracks | Persona2.1 | M-Location2.1. |
| | MM-Embeds | Persona2.1 (AV) w/ SA | M-Location2.2 (VAuditorium). |
| | MM-Disables | Persona2.1 | M-Location2.1. |
| User3 (Participant) | Tracks | Persona3.1 | M-Location3.1 (at home). |
| | Transacts | Value | (Buys ticket). |
| | MM-Embeds | Persona3.1 (AV) w/ SA | M-Location3.2 (in VAuditorium). |
| | MM-Disables | Persona3.1 (AV) | M-Location3.1. |
| | MM-Sends | Object3.1 (A) | Persona4.1 (Participant). |
| | Calls | Service1.1 | (Expresses preferences). |
| | MM-Adds | Persona3.1 | M-Location3.2 (close to stage). |
| User1 (Organiser) | MM-Disables | Persona3.1 | M-Location3.2 (after 5 minutes). |
| | Calls | Service1.1 | (Collects preferences). |
| | Interprets | Participants Status1.1 | |
| | MM-Embeds | Entities1.i | (SFX). |
| | Transacts | Value1.2 | User2 (performance fees). |
| User2 (Performer) | MM-Embeds | Persona2.1 (AV) | M-Location2.1. |
| | MM-Disables | Persona2.1 | M-Location2.2. |
| User3 (Participant) | MM-Embeds | Persona3.1 (AV) | M-Location3.1. |
| | MM-Disables | Persona3.1 | M-Location3.2. |

8.6.3 Actions, Items, and Data Types

Table 19 – Virtual Event Actions, Items, and Data Types.

| Actions | Items | Data Types |
| Author | Entity (AV) | Amount |
| Call | Object (A) | Cognitive State |
| Interpret | Persona (AV) | Coordinates |
| MM-Disable | M-Location | Currency |
| MM-Embed | Service | Emotion |
| MM-Send | U-Location | Orientation |
| Register | User | Personal Status |
| Track | Value | Position |
| Transact | | Social Attitude |
| | | Spatial Attitude |
| | | Value |

8.7 AR Tourist Guide

8.7.1 Description

In this Use Case, human3 engages the following humans:

- human1, to cause their User1 to buy a virtual parcel and develop a virtual landscape suitable for a tourist application.
- human2, to cause their User2 to develop scenes and autonomous agents for the different places of the landscape.
- human4, to create an app that alerts the holder of a smartphone on which the app is installed when a key U-Location has been reached.
- human5, holding a smartphone with the app, to perceive Entities and talk to Personae MM-Embedded at M-Locations and MM-Animated by autonomous agents (AA).

This is the detailed workflow of the Use Case:

- User1:
  - Buys M-Location1.1 (parcel) in an M-Environment.
  - Authors Entity1 (landscape suitable for a virtual path through n sub-M-Locations).
  - MM-Embeds Entity1 (landscape) at M-Location1.1 (parcel).
  - Sells Entity1 (landscape) and M-Location1.1 (parcel) to User2.
- User2:
  - Authors Entity2.1 to Entity2.n for the M-Locations.
  - MM-Embeds the Entities at M-Location2.1 to M-Location2.n.
  - Sells the result to User3.
- human4:
  - Develops:
    - A Map recording the pairs M-Location2.i – U-Location2.i.
    - An App alerting a human5 holding a Device with the App installed that a key U-Location has been reached.
  - Sells the Map and the App to human3.
- User3 MM-Embeds one or more autonomous Personae at M-Location2.1 to M-Location2.n and MM-Animates them.
- human5 gets close to key U-Location2.i:
  - App5.1 MM-Sends Message5.1 to Device5.1.
  - Device5.1 MU-Renders at key U-Location5.1:
    - Entity5.1 MM-Embedded at M-Location5.1.
    - MM-Animated Persona5.1.
    - UM-Animated Persona5.2.
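The App's alert can be sketched as a proximity test against the U-Locations recorded in the Map. This is a hypothetical Python example. The `KeyPoint` record, the 25 m radius, the latitude/longitude representation of U-Locations, and the haversine great-circle distance on a spherical Earth are all assumptions, not part of the Use Case. The coordinates are made up.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, metres


@dataclass(frozen=True)
class KeyPoint:
    """One Map entry: an M-Location paired with a key U-Location."""
    m_location: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points on a spherical Earth."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def reached(key_points: list[KeyPoint], lat: float, lon: float,
            radius_m: float = 25.0) -> list[str]:
    """M-Locations whose paired U-Location lies within radius_m of the Device."""
    return [k.m_location for k in key_points
            if haversine_m(lat, lon, k.lat, k.lon) <= radius_m]


# Hypothetical Map with one key U-Location.
tour_map = [KeyPoint("M-Location2.1", 45.0703, 7.6869)]
print(reached(tour_map, 45.0703, 7.6869))  # ['M-Location2.1']
print(reached(tour_map, 45.0800, 7.6869))  # []
```

When `reached` returns a non-empty list, the App would MM-Send its Message to the Device, which then MU-Renders the Entities and Personae at the returned M-Locations.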

8.7.2 Workflow

Table 20 – AR Tourist Guide workflow.

| Who | Does | What | Where/comment |
| User1 | Transacts | Value1.1 | M-Location1.1’s parcel. |
| | Authors | Entity1.1 | (Path of n M-Locations). |
| | MM-Embeds | Entity1.1 | (Parcel). |
| | Transacts | Value1.1 | (Landscape & parcel to User2). |
| User2 | Authors | Entity2.1 to Entity2.n | (For M-Locations2.1-2.n). |
| | MM-Embeds | Entity2.1-2.n | M-Location2.1-2.n |
| | Transacts | Entity2.1-2.n | User3. |
| human4 | Develops | Map | M-Locations & U-Locations |
| | Develops | App | |
| | Sells | Map and App | To human3. |
| User3 | MM-Embeds | Personae | M-Location2.1-2.n. |
| | MM-Animates | Personae | M-Location2.1-2.n. |
| human5 | Comes to | Key U-Location2.i. | |
| App5.1 | MM-Sends | Message5.1 | Device5.1 |
| Device5.1 | MU-Renders | Entity5.1 @M-Location5.1 | At key U-Location5.1. |
| | MU-Renders | MM-Animated Persona5.1 | At key U-Location5.1. |
| | MU-Renders | UM-Animated Persona5.2 | At key U-Location5.1. |

8.7.3 Actions, Items, and Data Types

Table 21 – AR Tourist Guide Actions, Items, and Data Types.

| Actions | Items | Data Types |
| Author | App | Amount |
| MM-Animate | Device | Coordinates |
| MM-Embed | Entity | Currency |
| MM-Send | Map | Orientation |
| MU-Export | M-Location | Position |
| MU-Render | Persona | Spatial Attitude |
| Send | Service | Value |
| Transact | U-Location | |
| UM-Animate | User | |

8.8 Virtual Dance

8.8.1 Description

This Use Case envisages that:

- A dance teacher:
  - Is in its office.
  - Places a virtual secretary, animated by an autonomous agent, in the dance school.
- A student #1:
  - Is at home.
  - Shows up at the school.
  - Greets the secretary.
- The secretary:
  - Reciprocates the greeting.
  - Sends a private vocal message to the teacher.
- The teacher:
  - Places its persona1 (AVH) in the dance school.
  - Dances with student #1.
- A student #2:
  - Is at home.
  - Shows up at the school.
- The teacher:
  - Places its persona1 (AVH) close to student #2.
  - Places its persona3 (AVH) where persona1 was before.
  - Animates its persona3 with an autonomous agent to dance with student #1.
  - Dances with student #2.

- User2 (dance teacher):
  - Tracks Persona1 at M-Location2.1.
  - MM-Embeds Persona2 (AV) (another of its Personae) at M-Location2.2.
  - MM-Animates Persona2 (AV) (as virtual secretary attending to students coming to learn dance).
- User1 (dance student #1):
  - MM-Embeds its Persona1 (AV) at M-Location1.1 (its “home”).
  - MM-Embeds Persona1 (AVH) at M-Location1.2 (close to virtual secretary).
  - MM-Sends Object1 (A) to Persona2.2 (greets virtual secretary).
  - MM-Disables Persona1 from M-Location1.1.
- User2 (Persona2):
  - MM-Sends Object1 (A) (to dance student #1, reciprocating the greeting).
  - MM-Sends Object2 (A) (to call the teacher’s Persona2.1).
- Dance teacher (Persona1):
  - MM-Embeds (AVH) Persona1 at M-Location2.3 (classroom).
  - UM-Animates Persona1 (dances with student #1).
- While Persona1 (student #1) and Persona2.1 (teacher) dance, User3 (dance student #2):
  - MM-Embeds (AV) Persona1 (its digital twin) at M-Location3.1 (its “home”).
  - MM-Embeds (AVH) Persona1 at M-Location3.2 (close to secretary).
  - MM-Disables Persona1 from M-Location3.1.
- After a while, User2 (dance teacher):
  - MM-Embeds (AVH) Persona1 at M-Location2.4 (close to student #2’s position).
  - MM-Disables Persona1 (from where it was dancing with student #1).
  - MM-Embeds (AVH) Persona3 (where Persona1 was dancing with student #1).
  - MM-Animates Persona3 with an autonomous agent (to dance with student #1).

8.8.2 Workflow

Table 22 – Virtual Dance workflow.

| Who | Does | What | Where/comment |
| User2 (teacher) | Tracks | Persona2.1 | M-Location2.1 |
| | MM-Embeds (AV) | Persona2.2 | M-Location2.2. |
| | MM-Animates (AV) | Persona2.2 | (As VS for students). |
| User1 (student1) | MM-Embeds (AV) | Persona1.1 | M-Location1.1 (its “home”). |
| | MM-Embeds (AVH) | Persona1.1 | M-Location1.2 (close to VS). |
| | MM-Sends | Object1.1 (A) | Persona2.2 (greets VS). |
| | MM-Disables | Persona1.1 | From M-Location1.1. |
| User2 (Persona2.2) | MM-Sends | Object2.1 (A) | (Responds to student #1). |
| | MM-Sends | Object2.2 (A) | (Calls teacher’s Persona2.1). |
| User2 (Persona2.1) | MM-Embeds (AVH) | Persona2.1 | M-Location2.3 (classroom). |
| | UM-Animates | Persona2.1 | (Dances with student #1). |
| User3 (student2) | MM-Embeds (AV) | Persona3.1 | M-Location3.1 (its “home”). |
| | MM-Embeds (AVH) | Persona3.1 | M-Location3.2 (close to VS). |
| | MM-Disables | Persona3.1 | From M-Location3.1. |
| User2 (teacher) | MM-Embeds (AVH) | Persona2.1 | M-Location2.4 (near student2). |
| | MM-Disables | Persona2.1 | (From previous position). |
| | MM-Embeds (AVH) | Persona2.3 | |
| | MM-Animates | Persona2.3 | (w/ AA with student #1). |

8.8.3 Actions, Items, and Data Types

Table 23 – Virtual Dance Actions, Items, and Data Types.

| Actions | Items | Data Types |

| MM-Animate | M-Location | Amount |

| MM-Disable | Object (A) | Currency |

| MM-Embed | Persona (AV) | Orientation |

| MM-Send | Persona (AVH) | Position |

| Track | Service | Spatial Attitude |

| Transact | U-Location | |

| | Value | |

8.9 Virtual Car Showroom

8.9.1 Description

This Use Case envisages that:

- A car dealer:
  - Is in its office.
  - Embeds a persona animated by an autonomous agent in the car showroom (attendant).
- A customer, at home:
  - Embeds its persona in the car showroom.
  - Greets the showroom attendant.
- The showroom attendant:
  - Reciprocates the greeting.
  - Privately calls the dealer.
- The dealer:
  - Animates the attendant with the human car dealer.
  - Converses with the customer.
  - Embeds a 3D AVH model of a car.
- The customer:
  - Interacts with the model (has a virtual drive).
  - Buys the car.
  - Returns home.

This is the detailed workflow of the Use Case:

- User1 (car dealer):
  - Tracks Persona1 at M-Location1.1 (“office”).
  - MM-Embeds Persona2 with Spatial Attitude1.1 at M-Location1.2 (“showroom”).
  - MM-Animates Persona2 (with an autonomous agent, as showroom attendant).
- User2 (customer):
  - Tracks Persona1 at M-Location2.1 (“home”).
  - MM-Embeds Persona1 at M-Location2.2 (“in the showroom”).
  - MM-Sends Object1 (A) to Persona1.2 (greets showroom attendant).
  - MM-Disables Persona1 at M-Location2.1 (“home”).
- User1 (Persona2):
  - MM-Sends Object1 (A) to Persona2.1 (responds to greetings).
  - MM-Sends Object2 (A) to Persona1.1 (“come attend customer”).
- User1 (Persona1):
  - MM-Embeds Persona1 at M-Location1.3 (“in the showroom”).
  - MM-Sends Object3 (A) to Persona2.1 (engages in conversation).
  - MM-Embeds Model1 (AVH) at M-Location1.4 (model car “in the showroom”).
  - MM-Animates Model1 (“animate model car”).
- User2 (customer):
  - MM-Embeds Persona1 at M-Location2.3 (where the virtual car is located).
  - UM-Animates Persona1.
  - Transacts Value1 (buys car).
  - MM-Disables Persona1 at M-Location1.3.
  - MM-Embeds Persona1 at M-Location2.1 (“at home”).

8.9.2 Workflow

Table 24 – Virtual Car Showroom workflow.

| Who | Does | What | Where/comment |
| User1 (car dealer) | Tracks | Persona1.1 | M-Location1.1 (“office”). |
| | MM-Embeds | Persona1.2 w/ SA1.1 | M-Location1.2 (“showroom”). |
| | MM-Animates | Persona1.2 w/ AA | (Showroom attendant). |
| User2 (customer) | Tracks | Persona2.1 | M-Location2.1 (“home”). |
| | MM-Embeds | Persona2.1 | M-Location2.2 (“showroom”). |
| | MM-Sends | Object2.1 (A) | Persona1.2 (greets attendant). |
| | MM-Disables | Persona2.1 | M-Location2.1 (“home”). |
| User1 (Persona1.2) | MM-Sends | Object1.1 (A) | Persona2.1 (responds to greetings). |
| | MM-Sends | Object1.2 (A) | Persona1.1 (“attend customer”). |
| User1 (Persona1.1) | MM-Embeds | Persona1.1 | M-Location1.3 (“showroom”). |
| | MM-Sends | Object1.3 (A) | Persona2.1 (converses). |
| | MM-Embeds | Model1.1 (AVH) | M-Location1.4 (“in showroom”). |
| | MM-Animates | Model1.1 w/ AA | (“Animate model car”). |
| User2 (customer) | MM-Embeds | Persona2.1 | M-Location2.3 (in virtual car). |
| | UM-Animates | Persona2.1 | (Drives virtual car). |
| | Transacts | Value2.1 | (Buys car). |
| | MM-Disables | Persona2.1 | M-Location1.3. |
| | MM-Embeds | Persona2.1 | M-Location2.1 (“at home”). |

8.9.3 Actions, Items, and Data Types

Table 25 – Virtual Car Showroom Actions, Items, and Data Types.

| Actions | Items | Data Types |
| Call | Object (A) | Amount |
| Embeds | Persona | Currency |
| MM-Animate | Scene (AVH) | Orientation |
| MM-Disable | Value | Position |
| MM-Embed | | Spatial Attitude |
| MM-Send | | |
| Track | | |
| Transact | | |
| UM-Animate | | |

8.10 Drive a Connected Autonomous Vehicle

8.10.1 Description

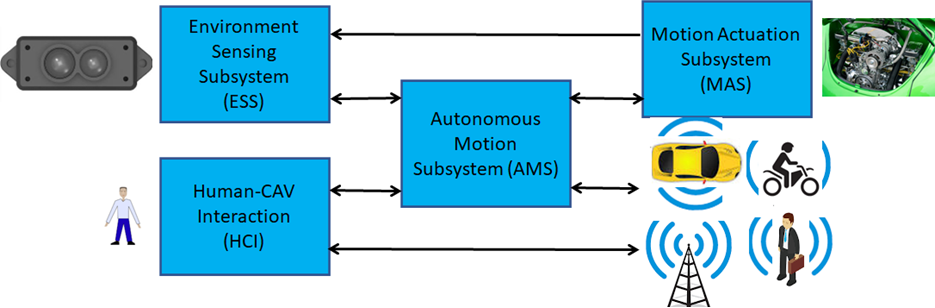

MPAI is developing a Technical Report that includes a reference model of a Connected Autonomous Vehicle (CAV). The model subdivides a CAV into 4 subsystems (Human-CAV Interaction, Environment Sensing, Autonomous Motion, and Motion Actuation), each implemented as a workflow of AI Modules executed in the standard MPAI-AIF framework [5]. A CAV can autonomously reach a U-Location by:

- understanding human utterances;
- planning a Route;
- sensing the U-Environment and building a representation of it;
- exchanging such representations with other CAVs and CAV-aware entities;
- making decisions about how to execute the Route;
- acting on the Motion Actuation Subsystem to implement the decisions (see Figure 2).

Figure 2 – MPAI-CAV Reference Model

This Use Case assumes that a human who owns or has rights to a CAV Registers with the CAV by providing:

- The requested subset of their Personal Profile.
- Two User Processes required to operate a CAV:
  - User1 to operate the Human-CAV Interaction Subsystem.
  - User2 to operate the Autonomous Motion Subsystem.
- User1’s Personae.

The Processes of a CAV generate a persistent M-Instance resulting from the integration of:

- The Environment Representation generated by the Environment Sensing Subsystem by UM-Capturing the U-Location being traversed by the CAV.

- The Scenes MM-Captured from the M-Locations of the M-Instances of other CAVs close to the U-Location of the Ego CAV. Those M-Instances are digital twins of the U-Locations the other CAVs are traversing, and their Scenes improve the accuracy of the Ego CAV’s M-Locations.

- Relevant Experiences of the Autonomous Motion Subsystem at the M-Location.

For simplicity, the Use Case assumes that there are only two CAVs: CAVA and CAVB. The convention of identifying Users by a sequential number is extended as follows: a User of CAVA is identified by A followed by a sequential number (e.g., UserA.1). Items affected by UserA.1 are identified by A.1 followed by a sequential number (e.g., PersonaA.1.1).
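The extended convention can be checked mechanically. This is a hypothetical Python sketch. `parse_cav_id` and `owner` are illustrative names, and the pattern assumes a single upper-case CAV letter, as with CAVA and CAVB in this Use Case.

```python
import re
from typing import Optional

# <Item type><CAV letter>.<User number>[.<sequential number>], e.g. PersonaA.1.1
CAV_ID = re.compile(
    r"^(?P<kind>[A-Za-z][A-Za-z-]*?)(?P<cav>[A-Z])\.(?P<user>\d+)(?:\.(?P<seq>\d+))?$"
)


def parse_cav_id(identifier: str) -> tuple[str, str, int, Optional[int]]:
    """Split e.g. 'M-LocationA.1.2' into ('M-Location', 'A', 1, 2)."""
    m = CAV_ID.match(identifier)
    if m is None:
        raise ValueError(f"not a CAV-scoped identifier: {identifier!r}")
    seq = int(m["seq"]) if m["seq"] is not None else None
    return m["kind"], m["cav"], int(m["user"]), seq


def owner(identifier: str) -> str:
    """Return the User whose Action the Item's identifier refers to."""
    _kind, cav, user, _seq = parse_cav_id(identifier)
    return f"User{cav}.{user}"


print(parse_cav_id("UserA.1"))  # ('User', 'A', 1, None)
print(owner("ObjectB.1.1"))     # UserB.1
```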

- User1:
  - Authenticates the human and gets its driving instructions.
  - Requests a series of options satisfying the instructions.
- User2:
  - Gets information about CAVA.
  - Gets options from the Route Planner.
  - Communicates the options to User1.
- User1:
  - Converts the options into an utterance.
  - Communicates the utterance to the human.
- The human sends an utterance with the selected option to User1.
- User1 converts the utterance into a command.
- User2:
  - Authenticates its peer User2 (of CAVB).
  - Gets the Environment Representation from its ESS and from the peer User2.
  - Sends a command to the Motion Actuation Subsystem.
- User1:
  - Requests permission to place Persona1.1 in the virtual cabin of CAVB.
  - Receives permission and places Persona1.1.
  - Watches the landscape through the window of CAVB.

This is the detailed workflow:

- humanA Registers with CAVA.
- User1:
  - Tracks Persona1.1 at M-LocationA.1.1 (connects CAVA’s M-Instance with the U-Location).
  - Authenticates Object1.1 (AV) (recognises humanA).
  - Interprets Object1.1 (A) (humanA’s request to go home).
  - MM-Sends HCI-AMSCommand1.1 to UserA.2.
- User2:
  - MM-Sends ESS’s Scene2.1 to the Route Planner.
  - MM-Sends Route2.1 to UserA.1.
- User1:
  - MU-Renders Object1.2 (A) (to humanA).
  - UM-Renders Object1.3 (A) (humanA’s Route selection).
  - Interprets Object1.3 (A) (understands the Route).
  - MM-Sends HCI-AMSCommand1.2 to UserA.2.
- User2:
  - Authenticates UserB.2.
  - MM-Sends:
    - ESS’s Scene2.2 to Environment Representation Fusion (ERF).
    - Scene2.3 at M-LocationA.2.1 (in CAVB’s M-Instance) to ERF.
    - Path2.1 to the Motion Planner.
    - Trajectory2.1 to the Obstacle Avoider (which does not request a new Trajectory).
    - Trajectory2.1 to the Command Issuer.
    - AMS-MASCommand2.1 to the Motion Actuation Subsystem.
  - Receives MAS-AMS Response2.1.
- User1:
  - Authenticates User2.
  - MM-Sends Object1.4 (A) (request to Embed Persona1.1 in CAVB’s virtual cabin) to UserB.1.
- UserB.1 MM-Sends ObjectB.1.1 (A) (request accepted) to UserA.1.
- User1:
  - MM-Embeds Persona1.1 at M-LocationA.1.2 (UserB.1’s cabin).
  - MM-Captures Scene1.1 at M-LocationA.1.3 (environment outside UserB.1’s cabin).

UserA.1 can now view and navigate the M-Instance created by CAVB traversing the U-Environment (M-LocationA.1.3). DeviceA.1 allows UserA.1 to be MM-Embedded, i.e., to become part of M-LocationA.1.2 (UserB.1’s cabin).
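The AMS part of the workflow above is essentially a linear chain of MM-Sends between AI Modules. The following hypothetical Python sketch replays that chain as a log. The hop list reuses Item and module names from the workflow. `run_chain` and the `obstacle_detected` predicate are illustrative assumptions that stand in for the Obstacle Avoider's decision.

```python
from typing import Callable

# (Item, receiving AI Module) pairs, in the order given in the workflow above.
AMS_CHAIN = [
    ("SceneA.2.2", "Environment Representation Fusion"),  # from the Ego CAV's ESS
    ("SceneA.2.3", "Environment Representation Fusion"),  # from CAVB's M-Instance
    ("PathA.2.1", "Motion Planner"),
    ("TrajectoryA.2.1", "Obstacle Avoider"),
    ("TrajectoryA.2.1", "Command Issuer"),
    ("AMS-MASCmdA.2.1", "Motion Actuation Subsystem"),
]


def run_chain(
    chain: list[tuple[str, str]],
    obstacle_detected: Callable[[str], bool] = lambda trajectory: False,
) -> list[str]:
    """Replay the hops in order and return a log of MM-Sends.

    If obstacle_detected returns True at the Obstacle Avoider, the replay stops
    there, standing in for a request for a new Trajectory.
    """
    log = []
    for item, receiver in chain:
        log.append(f"UserA.2 MM-Sends {item} to {receiver}")
        if receiver == "Obstacle Avoider" and obstacle_detected(item):
            log.append("Obstacle Avoider requests a new Trajectory")
            break
    return log


for line in run_chain(AMS_CHAIN):
    print(line)
```

Passing `lambda t: True` as `obstacle_detected` shows the alternative branch, where the chain stops before the Command Issuer.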

8.10.2 Workflow

Table 26 – Drive a Connected Autonomous Vehicle workflow.

| Who | Does | What | Where/(comment) |
| humanA | Registers | | (With CAVA). |
| UserA.1 | Tracks | PersonaA.1.1 | (M-LocationA.1.1 connects U-LocationA.1.1). |
| | Authenticates | ObjectA.1.1 (AV) | (Recognises humanA). |
| | Interprets | ObjectA.1.1 (A) | (humanA’s request to go). |
| | MM-Sends | HCI-AMSCmdA.1.1 | UserA.2. |
| UserA.2 | MM-Sends | ESS’s SceneA.2.1 | Route Planner. |
| | MM-Sends | AMS-HCIRespA.2.1 (RouteA.2.1) | UserA.1. |
| UserA.1 | MU-Renders | ObjectA.1.2 (A) | (To humanA). |
| | UM-Renders | ObjectA.1.3 (A) | (Route selection). |
| | Interprets | ObjectA.1.3 (A) | (Understand Route). |
| | MM-Sends | HCI-AMSCmdA.1.2 | UserA.2. |
| UserA.2 | Authenticates | UserB.2 | |
| | MM-Sends | ESS’s SceneA.2.2 | (To Environment Representation Fusion). |
| | | SceneA.2.3 | M-LocationA.2.1 (in CAVB’s M-Instance) to ERF. |
| | | PathA.2.1 | Motion Planner. |
| | | TrajectoryA.2.1 | Obstacle Avoider. |
| | | TrajectoryA.2.1 | Command Issuer. |
| | | AMS-MASCmdA.2.1 | MAS. |
| | | MAS-AMS RespA.2.1 | From MAS. |
| UserA.1 | Authenticates | UserA.2 | |
| | MM-Sends | ObjectA.1.4 (A) | (Request to Embed, to UserB.1). |
| UserB.1 | MM-Sends | ObjectB.1.1 (A) | (Request accepted, to UserA.1). |
| UserA.1 | MM-Embeds | PersonaA.1.1 | M-LocationA.1.2 (UserB.1’s cabin). |
| | MM-Captures | SceneA.1.1 | M-LocationA.1.3 (outside UserB.1’s cabin). |

8.10.3 Actions, Items, and Data Types

Note: MPAI-CAV-specific Items are included.

Table 27 – Drive a Connected Autonomous Vehicle Actions, Items, and Data Types.

| Actions | Items | Data Types |
| Authenticate | AMS-MASCommand | Spatial Attitude |
| Interpret | AMS-HCIResponse | Coordinates |
| MM-Capture | Environment Representation | Orientation |
| MM-Embed | HCI-AMSCommand | Position |
| MM-Send | MAS-AMS Response | |
| MU-Render | M-Location | |
| Register | Object (A) | |
| Request | Object (AV) | |
| Track | Path | |
| UM-Render | Persona | |
| | Route | |
| | Scene | |
| | Trajectory | |
| | User | |