(Tentative)

| Function | Reference Model | Input/Output Data |

| SubAIMs | JSON Metadata | Profiles |

1. Function

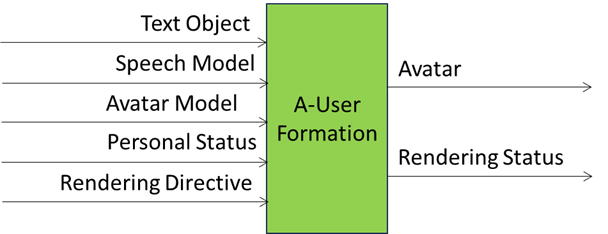

The A-User Formation AIM (PGM-AUF) receives structured semantic and expressive inputs – Text, Personal Status, and Avatar Model parameters. Its primary function is to synthesise a coherent, expressive A-User embodiment by transforming semantic content and multimodal guidance into speech, facial expression, gesture, and avatar animation.

Internally, PGM-AUF performs the following operations:

- Personal Status Demultiplexing: Separates incoming Personal Status into modality-specific components – PS-Speech, PS-Face, and PS-Gesture – each guiding a distinct expressive channel.

- Speech Synthesis: Converts Machine Text into Machine Speech using a Speech Model, modulated by PS-Speech and formatted according to Speech Model specifications.

- Face Descriptor Construction: Combines Machine Speech and PS-Face to generate Entity Face Descriptors that encode expressive timing, gaze, and facial expression.

- Body Descriptor Construction: Uses PS-Gesture and Machine Text to produce Entity Body Descriptors, capturing posture, gesture rhythm, and spatial framing.

- Speaking Avatar Synthesis: Integrates Machine Speech, Face and Body Descriptors, and the Avatar Model to render a fully animated A-User Persona capable of expressive, multimodal communication.

The resulting outputs ensure that the A-User’s rendered behaviour is expressively coherent, semantically aligned, and visually synchronised with the User’s Personal Status – supporting emotionally resonant embodiment and reinforcing conversational trust through consistent multimodal expression.
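
The demultiplexing step listed above can be illustrated with a minimal Python sketch. The dictionary layout of the Personal Status and the key names `speech`, `face`, and `gesture` are assumptions made for illustration only; this section does not define a concrete encoding.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class DemuxedStatus:
    """Modality-specific components of a Personal Status (hypothetical container)."""
    ps_speech: Dict[str, Any]
    ps_face: Dict[str, Any]
    ps_gesture: Dict[str, Any]


def demultiplex_personal_status(personal_status: Dict[str, Dict[str, Any]]) -> DemuxedStatus:
    """Split a Personal Status into PS-Speech, PS-Face, and PS-Gesture.

    Assumes, for illustration only, that the Personal Status is a dict keyed
    by modality name; a missing modality yields an empty component.
    """
    return DemuxedStatus(
        ps_speech=dict(personal_status.get("speech", {})),
        ps_face=dict(personal_status.get("face", {})),
        ps_gesture=dict(personal_status.get("gesture", {})),
    )


# Hypothetical Personal Status with no gesture component.
status = {"speech": {"emotion": "calm"}, "face": {"emotion": "smiling"}}
parts = demultiplex_personal_status(status)
print(parts.ps_speech)   # {'emotion': 'calm'}
print(parts.ps_gesture)  # {}
```

Returning an empty component for an absent modality is a design choice of this sketch; an implementation might instead reject an incomplete Personal Status.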

2. Reference Model

Figure 1 gives the Reference Model of the A-User Formation (PGM-AUF) AIM.

Figure 1 – Reference Model of A-User Formation (PGM-AUF) AIM

3. Input/Output Data

Table 1 gives Input and Output Data of A-User Formation (PGM-AUF) AIM.

Table 1 – Input and Output Data of A-User Formation (PGM-AUF) AIM

| Input | Description |
|---|---|
| Text Object | Input Text. |
| Speech Model | Model used for speech synthesis. |
| Avatar Model | Model used for Avatar synthesis. |
| Personal Status | The A-User Personal Status of Speech, Face, and Gesture. |
| Rendering Directive | Commands driving avatar’s Personal Status and spatial output. |

| Output | Description |
|---|---|
| Avatar | Speaking Avatar uttering speech synthesised from Text and Personal Status. |
| Rendering Status | Report to PGM-AUC on rendering success and avatar expression state. |
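
As a reading aid, the inputs and outputs of Table 1 could be carried in two record types. The sketch below is one hypothetical encoding: the class names, the Python types, and the choice to make the Rendering Directive optional are all assumptions, not part of the specification.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class AUFInputs:
    """Inputs of Table 1; the Python types are illustrative assumptions."""
    text_object: str                           # Input Text
    speech_model: Any                          # model used for speech synthesis
    avatar_model: Any                          # model used for Avatar synthesis
    personal_status: Any                       # Personal Status of Speech, Face, and Gesture
    rendering_directive: Optional[Any] = None  # assumed optional in this sketch


@dataclass
class AUFOutputs:
    """Outputs of Table 1; the Python types are illustrative assumptions."""
    avatar: Any            # Speaking Avatar with its synthesised speech
    rendering_status: Any  # report sent to PGM-AUC


# Placeholder values only; real models and statuses are not strings.
inputs = AUFInputs(
    text_object="Hello",
    speech_model="speech-model-placeholder",
    avatar_model="avatar-model-placeholder",
    personal_status={"speech": {}, "face": {}, "gesture": {}},
)
print(inputs.rendering_directive)  # None
```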

4. SubAIMs

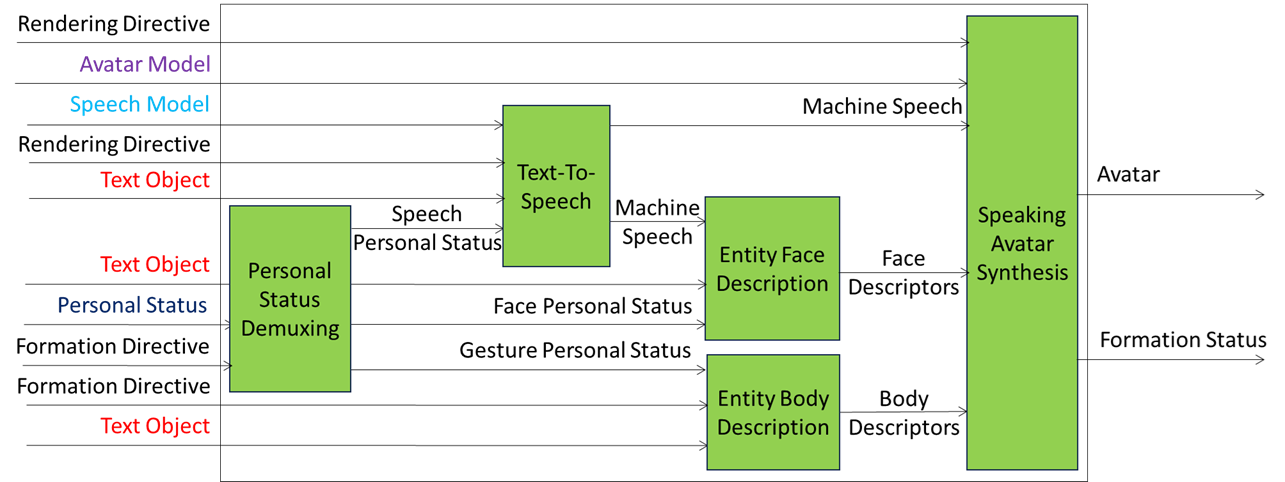

Figure 2 gives the Reference Model of the A-User Formation Composite AIM.

Figure 2 – Reference Model of the A-User Formation (PGM-AUF) Composite AIM

A-User Formation Composite AIM operates as follows:

- Personal Status Demultiplexing makes available the component PS-Speech, PS-Face, and PS-Gesture Modalities.

- Machine Text is synthesised as Machine Speech using a Speech Model, in a format specified by NN Format, with the Personal Status provided by PS-Speech.

- Machine Speech and PS-Face are used to produce the Entity Face Descriptors.

- PS-Gesture and Machine Text are used to produce the Entity Body Descriptors.

- Speaking Avatar Synthesis uses Avatar Model, Machine Speech, and Face and Body Descriptors to produce the Avatar.

- Rendering Directive overrides Avatar Model, Speech Model, Text,

- Avatar includes associated Speech.

- Rendering Status reports the success or otherwise of the Directive implementation.
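
The order in which the SubAIMs feed one another can be sketched as follows. Every function here is a hypothetical stand-in that only passes placeholder dictionaries along; the names, signatures, and data shapes are assumptions, and the handling of the Rendering Directive and Rendering Status is deliberately left out.

```python
from typing import Any, Dict


def text_to_speech(text: str, speech_model: str, ps_speech: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for Text-to-Speech (MMC-TTS): returns a placeholder Machine Speech."""
    return {"text": text, "model": speech_model, "ps_speech": ps_speech}


def describe_face(speech: Dict[str, Any], ps_face: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for Entity Face Description (PAF-EFD)."""
    return {"driven_by": speech["text"], "ps_face": ps_face}


def describe_body(text: str, ps_gesture: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for Entity Body Description (PAF-EBD)."""
    return {"driven_by": text, "ps_gesture": ps_gesture}


def synthesise_avatar(avatar_model: str, speech: Dict[str, Any],
                      face: Dict[str, Any], body: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for Speaking Avatar Synthesis (PAF-PMX); the Avatar carries its Speech."""
    return {"model": avatar_model, "speech": speech, "face": face, "body": body}


def a_user_formation(text: str, speech_model: str, avatar_model: str,
                     personal_status: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Wire the SubAIMs in the order given in the list above."""
    # Personal Status Demultiplexing (MMC-PDX); the dict keys are assumed.
    ps_speech = personal_status.get("speech", {})
    ps_face = personal_status.get("face", {})
    ps_gesture = personal_status.get("gesture", {})
    # Speech depends on Text and PS-Speech; Face depends on Speech and PS-Face.
    speech = text_to_speech(text, speech_model, ps_speech)
    face = describe_face(speech, ps_face)
    # Body depends on Text and PS-Gesture, independently of Speech.
    body = describe_body(text, ps_gesture)
    return synthesise_avatar(avatar_model, speech, face, body)


avatar = a_user_formation(
    "Hello", "speech-model", "avatar-model",
    {"speech": {"emotion": "calm"}, "gesture": {"emotion": "open"}},
)
print(sorted(avatar))  # ['body', 'face', 'model', 'speech']
```

The sketch makes one dependency visible: the face descriptors need the synthesised speech, whereas the body descriptors need only the text, so the two could in principle be computed in parallel once speech is available.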

Table 3 gives the list of AIMs of the A-User Formation Composite AIM with their JSON Metadata.

Table 3 – AIMs of A-User Formation Composite AIM and JSON Metadata

| AIW | AIMs | Name and Specification | JSON |
|---|---|---|---|
| PGM-AUF | | A-User Formation | X |
| | MMC-PDX | Personal Status Demultiplexing | X |
| | MMC-TTS | Text-to-Speech | X |
| | PAF-EFD | Entity Face Description | X |
| | PAF-EBD | Entity Body Description | X |
| | PAF-PMX | Speaking Avatar Synthesis | X |

Table 4 maps AUF Inputs/Outputs to Unified Messages.

Table 4 – AUF Inputs/Outputs mapped to Unified Messages

| AUF Data Name | Role | Origin / Destination | Unified Schema Mapping |
|---|---|---|---|
| A-User Entity State | Input | From Personality Alignment (PAL) | Consumed by AUF for actuation and embodiment; correlated via Envelope.CorrelationId; MUST include Trace.Origin and Trace.Timestamp. |
| A-User Formation Directive | Input | From A-User Control (AUC) | Directive → TargetAIM=AUF; includes actuation parameters (gesture, posture, timing); correlation maintained via Envelope.CorrelationId. |
| Final Response | Input | From BKN | Input → Payload (final prompt); correlated via Envelope.CorrelationId; includes Trace.Origin and Trace.Timestamp. |
| Avatar | Output | To M-Instance | Status → Result (ready-to-render Avatar representation); maintain Envelope.CorrelationId; includes Trace.Origin and Trace.Timestamp. |
| A-User Formation Status | Output | To A-User Control (AUC) | Status → State/Progress/Summary/Result; includes confidence, execution progress, and fallback logic; MUST include Trace.Origin and Trace.Timestamp. |
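
The correlation and trace requirements in Table 4 lend themselves to a simple presence check. The sketch below assumes, purely for illustration, that a Unified Message is a nested dictionary with `Envelope` and `Trace` sections; the helper name `missing_fields` and all example values are hypothetical.

```python
from typing import Any, Dict, List


def missing_fields(message: Dict[str, Any]) -> List[str]:
    """Return the dotted names of the correlation and trace fields absent from a message."""
    required = [
        ("Envelope", "CorrelationId"),
        ("Trace", "Origin"),
        ("Trace", "Timestamp"),
    ]
    missing = []
    for section, field in required:
        # A field counts as missing if its section or the field itself is absent or empty.
        if not message.get(section, {}).get(field):
            missing.append(f"{section}.{field}")
    return missing


# Hypothetical A-User Formation Status message sent to A-User Control.
status_message = {
    "Envelope": {"CorrelationId": "corr-001"},
    "Trace": {"Origin": "PGM-AUF", "Timestamp": "2025-01-01T00:00:00Z"},
    "Status": {"State": "completed", "Progress": 1.0},
}
# The same message with the timestamp left out.
incomplete = {
    "Envelope": {"CorrelationId": "corr-001"},
    "Trace": {"Origin": "PGM-AUF"},
}

print(missing_fields(status_message))  # []
print(missing_fields(incomplete))      # ['Trace.Timestamp']
```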

5. JSON Metadata

https://mpai.community/standards/PGM1/V1.0/AIMs/AUserFormation.json

6. Profiles

No Profiles.