An avatar is generally intended as a representation of a real or fictitious human in a virtual space. Research has dedicated much effort to creating and animating realistic avatars. However, the scope of use is typically assumed to be a closed environment such as a proprietary video game. Therefore, the portability of avatars has seldom been a priority.

Some 30 years ago, the Humanoid Animation (H-Anim) standard was developed. It defines a human skeleton composed of joints, segments, and sites, with four levels of articulation and a default skeleton pose. The latest versions of the H-Anim standards are ISO/IEC 19774-1:2019 and ISO/IEC 19774-2:2019.

An avatar is a basic element of an application, but not the only one. What if you want to convey a speaking avatar, immersed in an environment with all its features, to a third party so that it is displayed as you intended?

Technical Specification: Portable Avatar Format (MPAI-PAF), whose Version 1.4 has recently been approved, offers a solution to this problem for a broad range of applications: the Portable Avatar. A Portable Avatar is a package of data conveying the following information:

- The ID of the virtual space (M-Instance) where the Portable Avatar is to be placed.

- The space and time information of the “environment” to be placed in the M-Instance.

- The Audio-Visual Scene representing the “environment”.

- The space and time information of the Avatar in the scene.

- The Avatar represented as a 3D Model, its Face Descriptors and Body Descriptors.

- The Language Preference of the Avatar.

- The Text Object the Avatar is associated with, or which will be converted into a Speech Object.

- The Speech Model used to synthesise the Text Object.

- The Speech Object alternative to the Text Object that the Avatar utters.

- The Personal Status of the Avatar.
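
As a rough illustration of the list above, the package can be pictured as a single container with one slot per component. The sketch below is not the MPAI-PAF schema: the class name, the field names, the choice to treat every component as optional, and the JSON layout are all assumptions made for readability.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class PortableAvatar:
    """Illustrative container only; field names are assumptions, not the MPAI-PAF schema."""
    m_instance_id: Optional[str] = None            # virtual space where the package is placed
    environment_space_time: Optional[dict] = None  # where/when the environment sits in the M-Instance
    audio_visual_scene: Optional[dict] = None      # the environment itself
    avatar_space_time: Optional[dict] = None       # where/when the avatar sits in the scene
    avatar: Optional[dict] = None                  # 3D Model, Face Descriptors, Body Descriptors
    language_preference: Optional[str] = None
    text_object: Optional[dict] = None
    speech_model: Optional[dict] = None
    speech_object: Optional[dict] = None
    personal_status: Optional[dict] = None

    def to_json(self) -> str:
        # Serialise only the components that are actually present.
        present = {k: v for k, v in asdict(self).items() if v is not None}
        return json.dumps(present, sort_keys=True)


# Hypothetical example values.
pa = PortableAvatar(
    m_instance_id="example-m-instance",
    language_preference="en",
    text_object={"data": "Hello", "qualifier": {"language": "en"}},
)
print(pa.to_json())
```

Omitting absent components keeps the serialised package small when, for example, only a Text Object and no Speech Object is conveyed.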

Here is a brief description of the Portable Avatar components.

- The ID of the virtual space (M-Instance) where the Portable Avatar is to be placed. It can be a metaverse (for MPAI, this would be an M-Instance of MMM-TEC).

- The space and time information of the “environment”. MPAI has defined a data type called Space-Time that defines:

  - The space information as Spatial Attitude, i.e., Position and Orientation, and optionally their velocities and accelerations.

  - Time, defined either as absolute (from 1970/01/01T00:00) or as relative to an arbitrary origin of time.

- The Audio-Visual Scene. MPAI has defined Scene as a data type that describes a scene as composed of scenes and objects with their Space-Time information. The MPAI scene is thus hierarchical (see Audio-Visual Scene Descriptors).

- The space and time information of the Avatar, considered a particular type of object in the scene. The Avatar data type has its own space-time information, which is overridden by the space-time information of the scene if the two differ.

- The Avatar. The MPAI Avatar data type is a structure that includes:

  - The space-time information (which is overridden by the space-time information of the scene).

  - The 3D Model Object, composed of data and a Qualifier giving additional information about the data, e.g., the format.

  - The Face Descriptors. MPAI has adopted the Action Units of the Facial Action Coding System (FACS).

  - The Body Descriptors. MPAI has adopted the H-Anim standard, but the 3D Model Qualifier allows the use of other standards.

- The Language Preference. MPAI supports the signalling of a large number of language codes.

- The Text Object. An avatar may have a textual description represented by a Text Object (text data and Qualifier providing various types of information on the text, e.g., language and character code).

- The Speech Model. The Text Object may be used to synthesise a Speech Object (speech data and Qualifier), and the Portable Avatar can convey a neural network speech model for that purpose. In MPAI, a neural network model (more generally, a Machine Learning Model) has an associated Qualifier providing various types of information, such as the conformity of the model to a particular regulation.

- The Speech Object. In some cases, the speech associated with the avatar is conveyed by the Portable Avatar. Like a Text Object, a Speech Object includes speech data and a Qualifier that may be used to provide information on the language, compression format, speaker identity, etc.

- The Personal Status. The Personal Status is an MPAI data type including information on the Cognitive State, Emotion, and Social Attitude of the Text, Speech, Face, and Gesture of an Entity (in MPAI, Entity is used to indicate either a human or the process animating an avatar).

  - Cognitive State represents the internal state of an Entity reflecting its understanding of the context, such as “surprised” or “interested”.

  - Emotion represents the internal state of an Entity resulting from its interaction with the context, such as “angry” or “sad”.

  - Social Attitude represents the internal state of an Entity related to the way it intends to position itself vis-à-vis the context, e.g., “respectful” or “soothing”.
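
Two of the components described above lend themselves to a small sketch: the rule that the scene's space-time information overrides the avatar's own, and the Personal Status as a grid of three factors across four modalities. The class and function names, the three-angle orientation, and the modality-then-factor nesting below are assumptions for illustration, not MPAI definitions.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

FACTORS = ("cognitive_state", "emotion", "social_attitude")
MODALITIES = ("text", "speech", "face", "gesture")


@dataclass
class SpaceTime:
    position: Tuple[float, float, float]
    orientation: Tuple[float, float, float]  # assumed: three angles
    time: float                              # seconds from the chosen origin
    absolute_time: bool = True               # True: origin is 1970/01/01T00:00


def effective_space_time(avatar_st: Optional[SpaceTime],
                         scene_st: Optional[SpaceTime]) -> Optional[SpaceTime]:
    """Scene-level information, when provided, overrides the avatar's own."""
    return scene_st if scene_st is not None else avatar_st


@dataclass
class PersonalStatus:
    # modality -> factor -> label, e.g. values["face"]["emotion"] == "sad"
    values: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def set(self, modality: str, factor: str, label: str) -> None:
        if modality not in MODALITIES or factor not in FACTORS:
            raise ValueError(f"unknown modality/factor: {modality}/{factor}")
        self.values.setdefault(modality, {})[factor] = label

    def component(self, modality: str) -> Dict[str, str]:
        """Return the factors recorded for one modality (empty if none)."""
        return dict(self.values.get(modality, {}))


own = SpaceTime((0.0, 0.0, 0.0), (0.0, 0.0, 0.0), time=0.0)
from_scene = SpaceTime((1.0, 0.0, 2.0), (0.0, 90.0, 0.0), time=5.0)

ps = PersonalStatus()
ps.set("face", "emotion", "sad")
ps.set("speech", "social_attitude", "respectful")
```

Keying by modality first makes it easy to hand a single component (say, the Face component) to the module that needs it.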

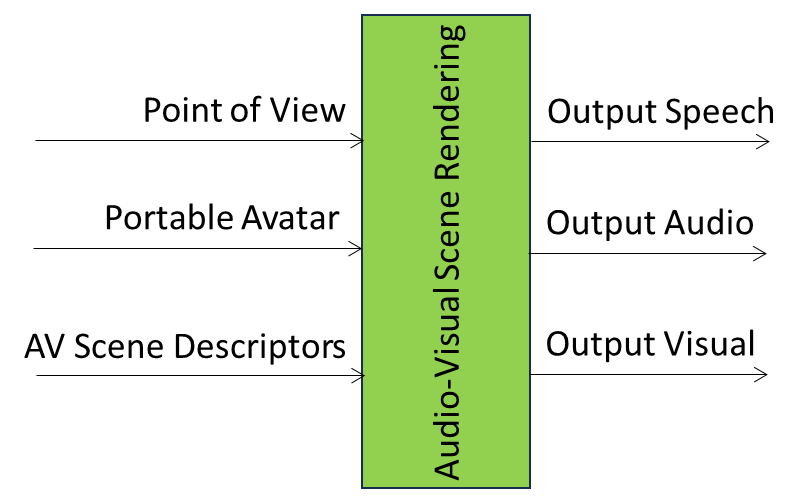

The Portable Avatar is an essential component of a variety of use cases. It is typically used as input to the Audio-Visual Scene Rendering AI Module that produces Speech, Audio, and Visual Objects from Portable Avatar, Audio-Visual Scene Descriptors (in case one is not available in the Portable Avatar), and a Point of View as depicted in Figure 1.

Figure 1 – Audio-Visual Scene Rendering AI Module
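
The input handling of the rendering module can be sketched as a simple fallback: use the scene carried by the Portable Avatar if there is one, otherwise use the separately supplied Audio-Visual Scene Descriptors. The function names and dictionary keys below are hypothetical, and no actual rendering is performed; the sketch only resolves which inputs a renderer would receive.

```python
from typing import Optional


def select_scene(portable_avatar: dict, external_scene: Optional[dict]) -> dict:
    """Prefer the scene inside the Portable Avatar; otherwise use the separate input."""
    embedded = portable_avatar.get("audio_visual_scene")
    if embedded is not None:
        return embedded
    if external_scene is not None:
        return external_scene
    raise ValueError("no Audio-Visual Scene Descriptors available")


def resolve_render_inputs(portable_avatar: dict,
                          point_of_view: dict,
                          external_scene: Optional[dict] = None) -> dict:
    """Collect the inputs a renderer would need (assumed structure)."""
    return {
        "scene": select_scene(portable_avatar, external_scene),
        "avatar": portable_avatar.get("avatar"),
        "point_of_view": point_of_view,
    }


# Hypothetical example values.
pov = {"position": (0.0, 1.6, 3.0), "orientation": (0.0, 180.0, 0.0)}
with_scene = {"avatar": {"model": "m1"}, "audio_visual_scene": {"id": "embedded"}}
without_scene = {"avatar": {"model": "m1"}}
external = {"id": "external"}

a = resolve_render_inputs(with_scene, pov, external)
b = resolve_render_inputs(without_scene, pov, external)
```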

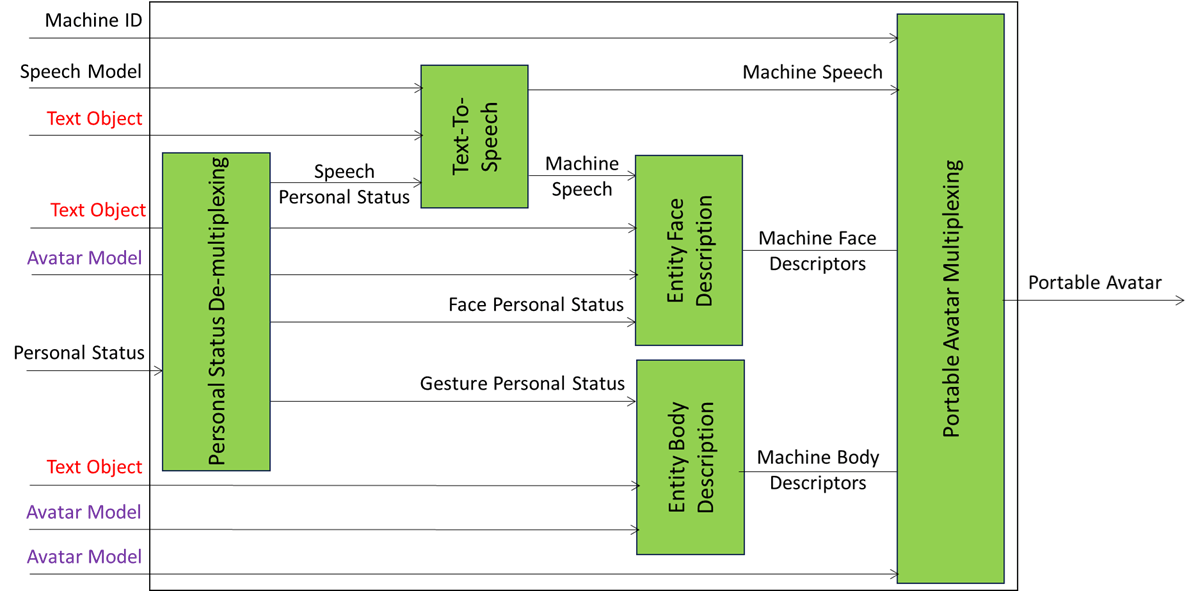

The Personal Status Display (PAF-PSD) AIM produces a Portable Avatar corresponding to an Avatar Model uttering a Speech Object synthesised from a Text Object with a Speech Model and displaying a Personal Status:

Figure 2 – Personal Status Display

Here, the inputs are a Text Object, a Neural Network Speech Model (in case the PAF-PSD does not have one embedded), an Avatar Model, and a Personal Status:

- The Text is used to synthesise speech modulated with the Speech component of the input Personal Status.

- The synthesised Speech, the input Text, the Face component of the input Personal Status, and the input Avatar Model are used to synthesise the face.

- The Text, the Gesture component of the input Personal Status, and the input Avatar Model are used to synthesise the body.
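
The three steps above form a small dataflow, which can be sketched with placeholder functions. Everything here is hypothetical: the function names, the dictionary shapes, and the `embedded_speech_model` default stand in for real synthesis components and only record which inputs feed which stage.

```python
from typing import Optional


def synthesise_speech(text: str, speech_status: dict, speech_model: str) -> dict:
    # Placeholder: a real module would produce audio.
    return {"text": text, "status": speech_status, "model": speech_model}


def synthesise_face(speech: dict, text: str, face_status: dict, avatar_model: str) -> dict:
    # Placeholder: a real module would produce Face Descriptors.
    return {"speech_text": speech["text"], "text": text,
            "status": face_status, "model": avatar_model}


def synthesise_body(text: str, gesture_status: dict, avatar_model: str) -> dict:
    # Placeholder: a real module would produce Body Descriptors.
    return {"text": text, "status": gesture_status, "model": avatar_model}


def personal_status_display(text: str,
                            personal_status: dict,
                            avatar_model: str,
                            speech_model: Optional[str] = None,
                            embedded_speech_model: str = "embedded-model") -> dict:
    """Assemble a Portable-Avatar-like dict from the three synthesis steps."""
    # Use the supplied Speech Model, or fall back to the embedded one.
    model = speech_model if speech_model is not None else embedded_speech_model
    speech = synthesise_speech(text, personal_status.get("speech", {}), model)
    face = synthesise_face(speech, text, personal_status.get("face", {}), avatar_model)
    body = synthesise_body(text, personal_status.get("gesture", {}), avatar_model)
    return {
        "avatar": {"model": avatar_model,
                   "face_descriptors": face,
                   "body_descriptors": body},
        "speech_object": speech,
        "text_object": text,
        "personal_status": personal_status,
    }


status = {"speech": {"emotion": "sad"},
          "face": {"emotion": "sad"},
          "gesture": {"social_attitude": "respectful"}}
result = personal_status_display("Hello", status, avatar_model="avatar-model-1")
```

Note that speech is synthesised first because the face stage consumes it, while the body stage depends only on the Text, the Gesture component, and the Avatar Model.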

In MPAI, the Lego approach to avatar deployment in applications is a reality.