With 5 standards approved, MPAI enters a new phase

Geneva, Switzerland – 26 January 2022. Today the Moving Picture, Audio and Data Coding by Artificial Intelligence (MPAI) standards developing organisation has concluded its 16th General Assembly, the first of 2022, approving its 2022 work program.

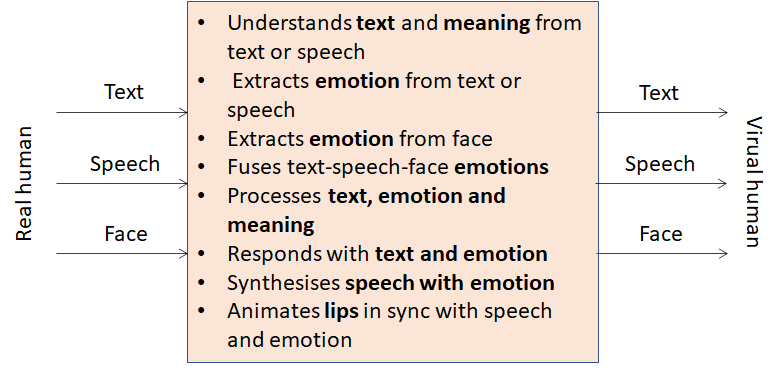

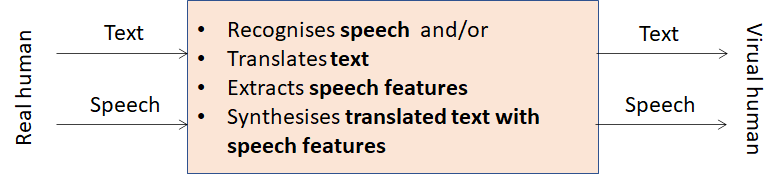

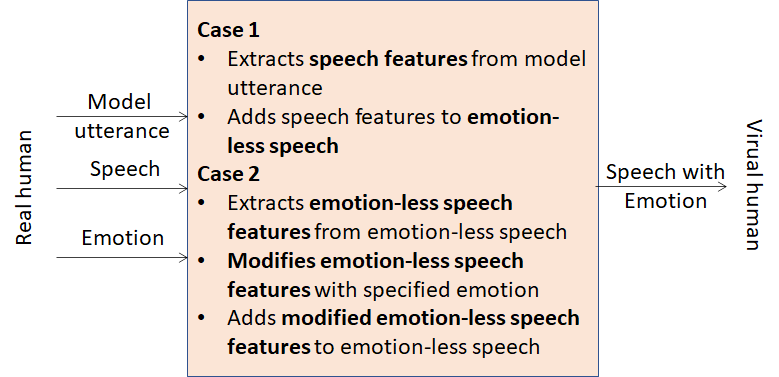

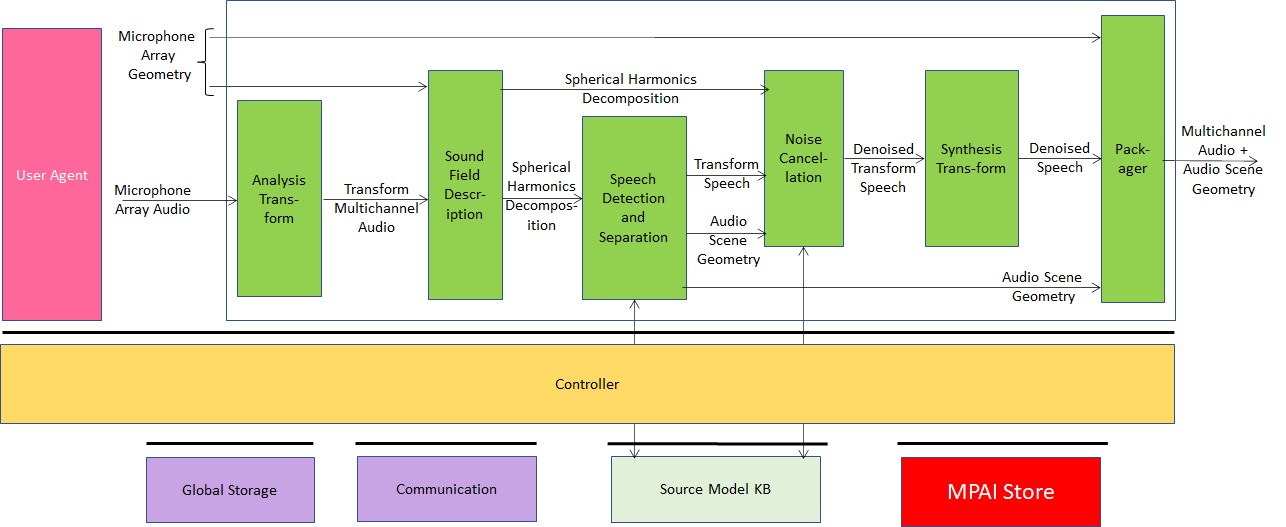

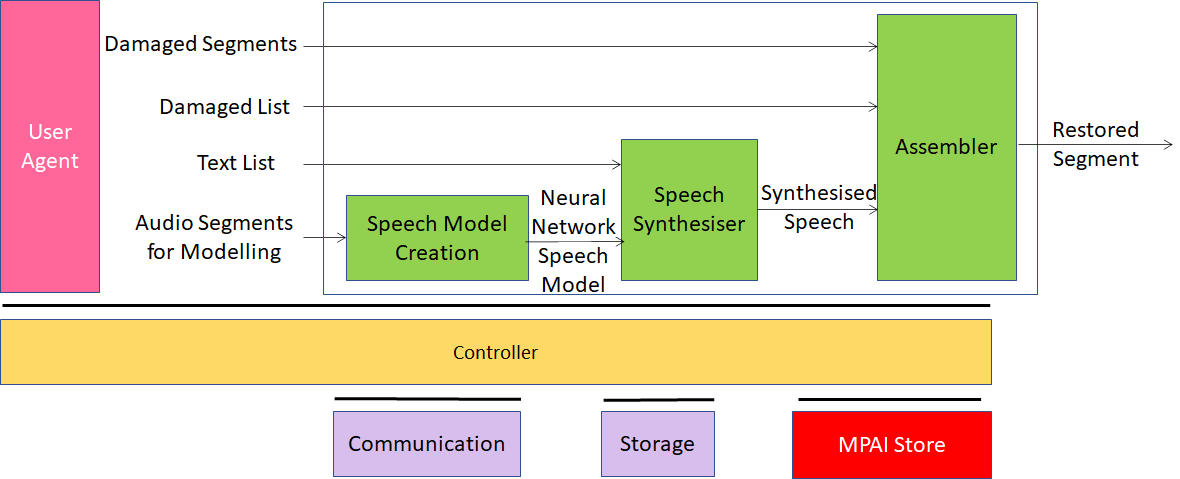

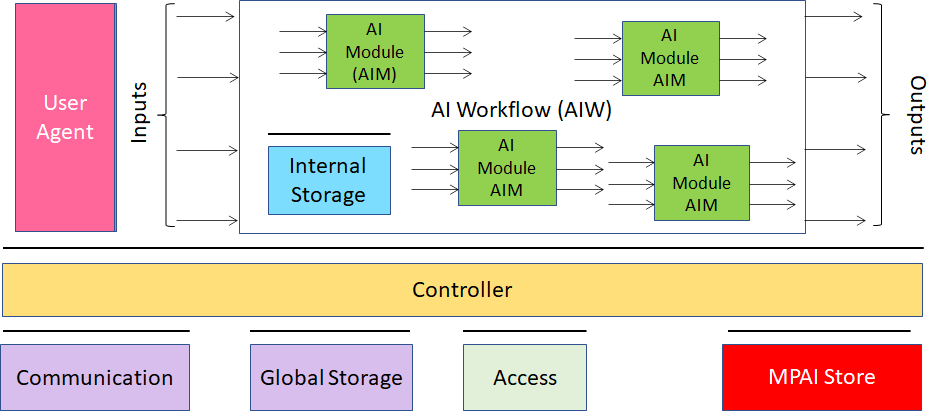

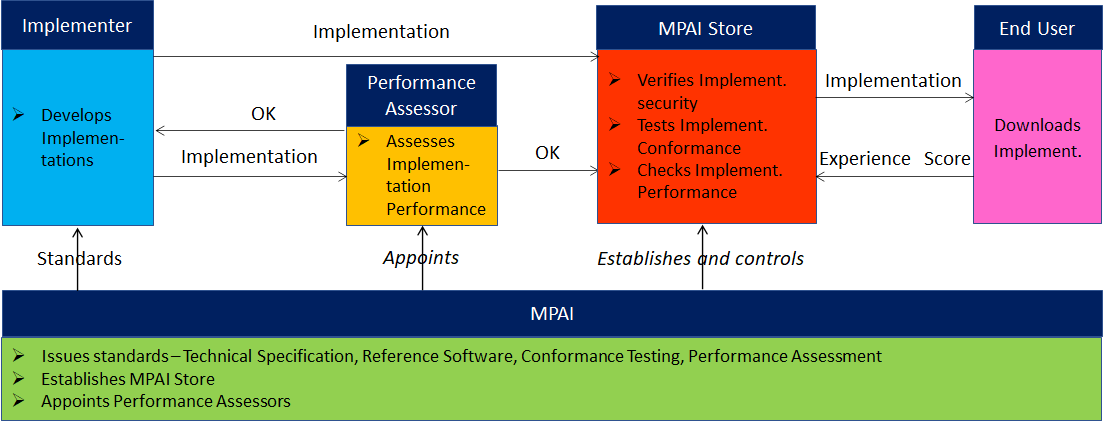

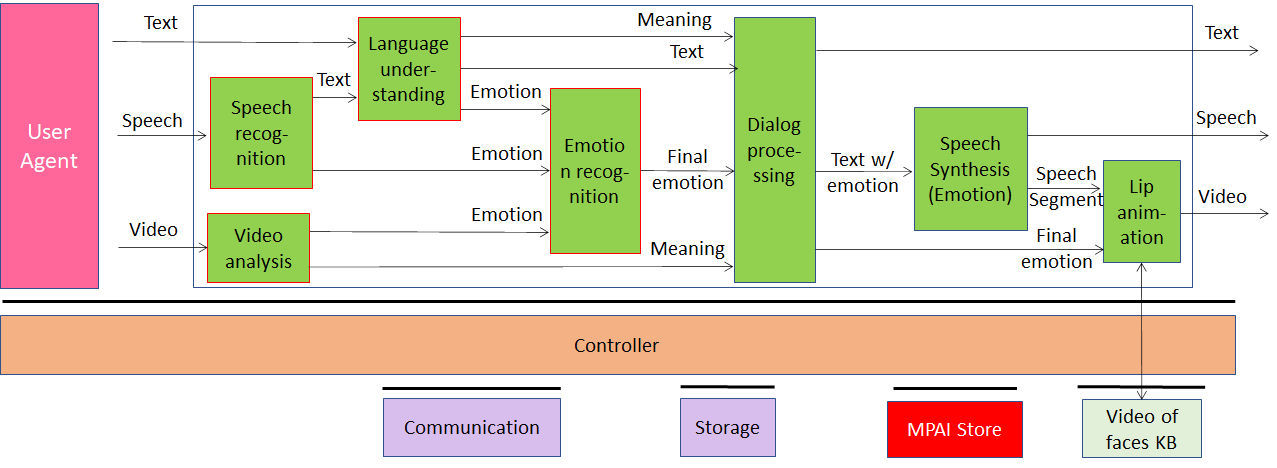

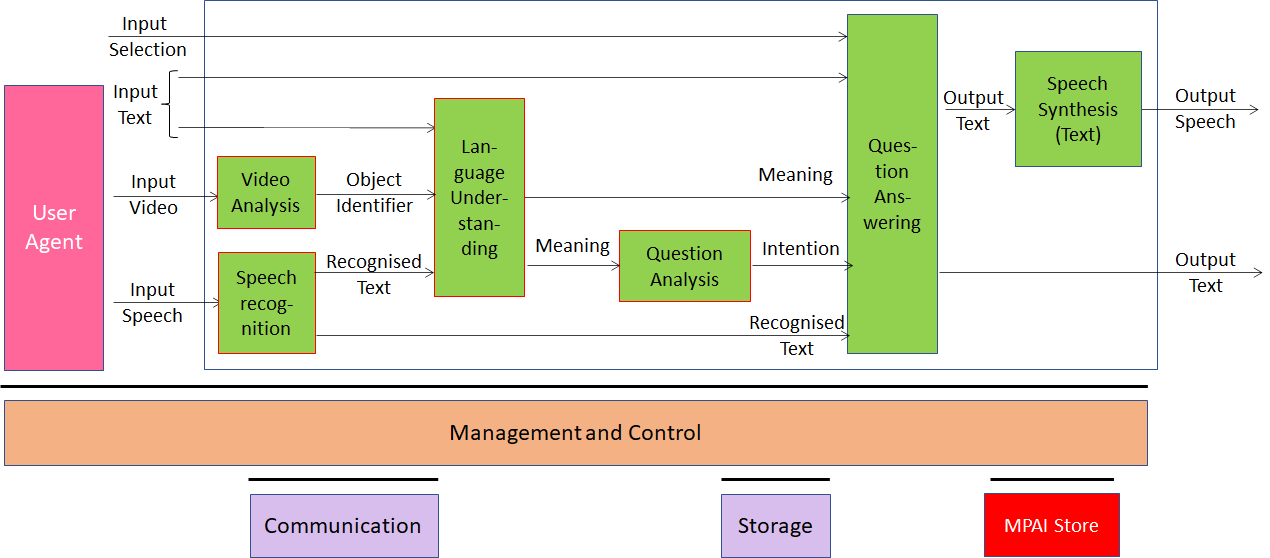

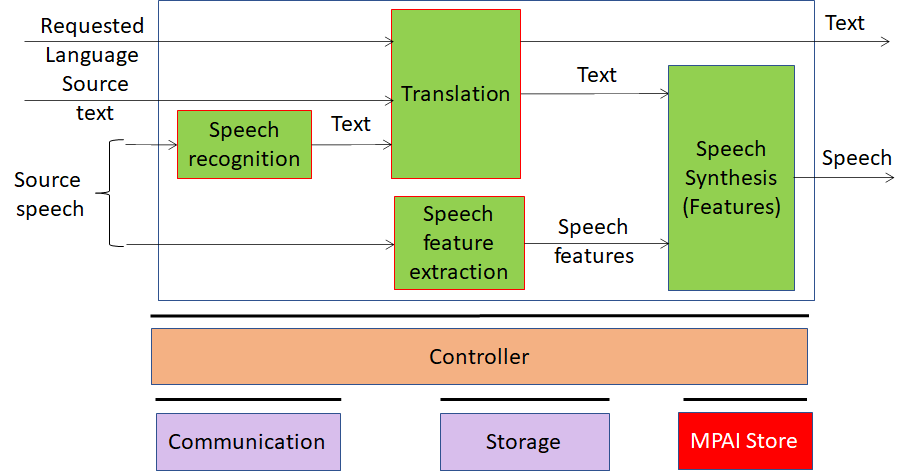

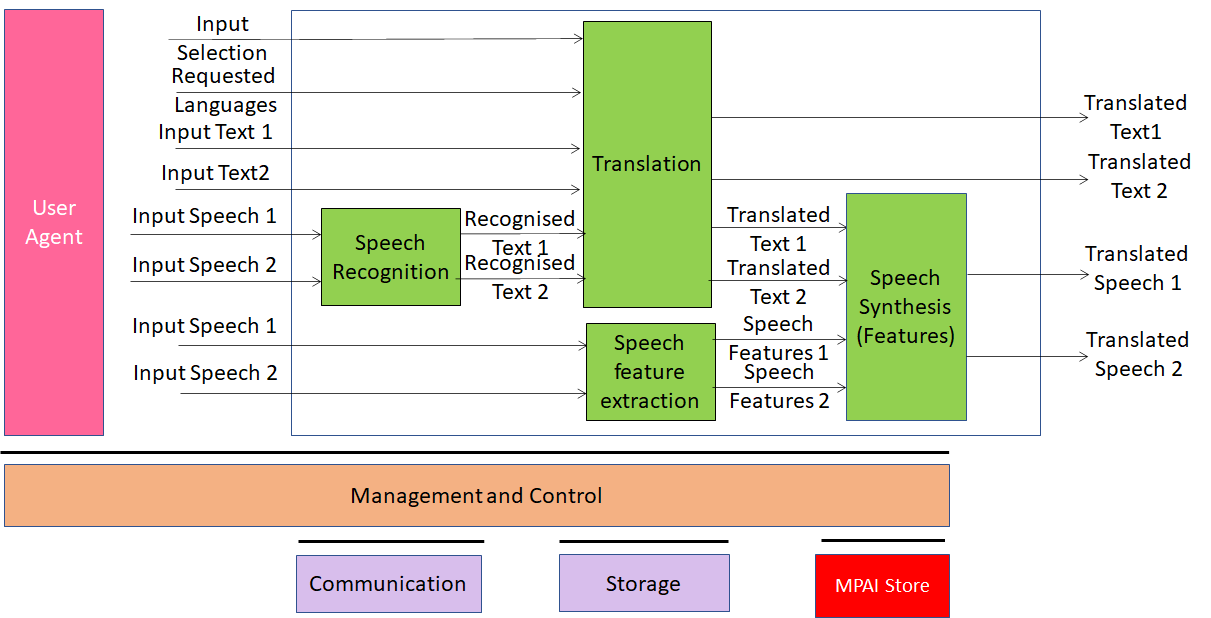

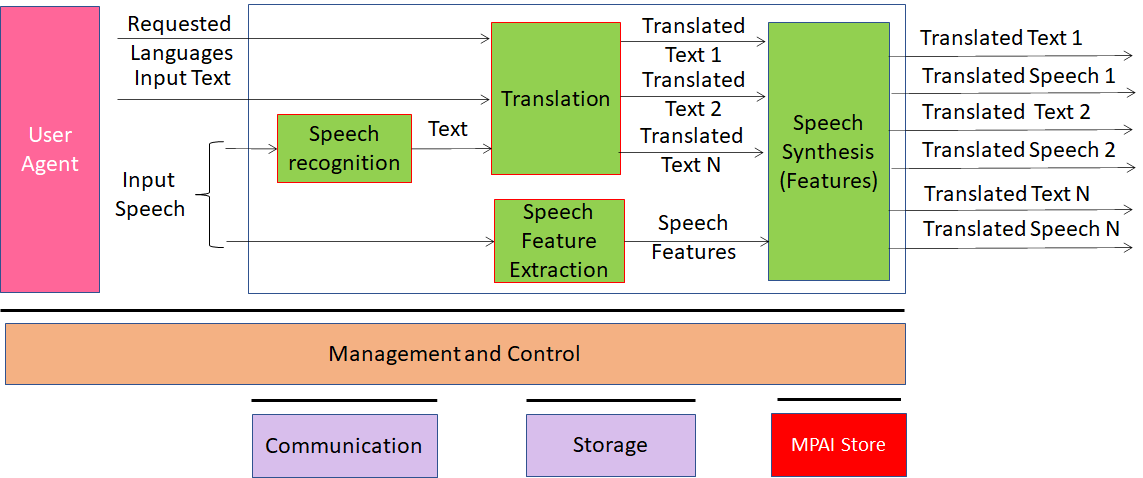

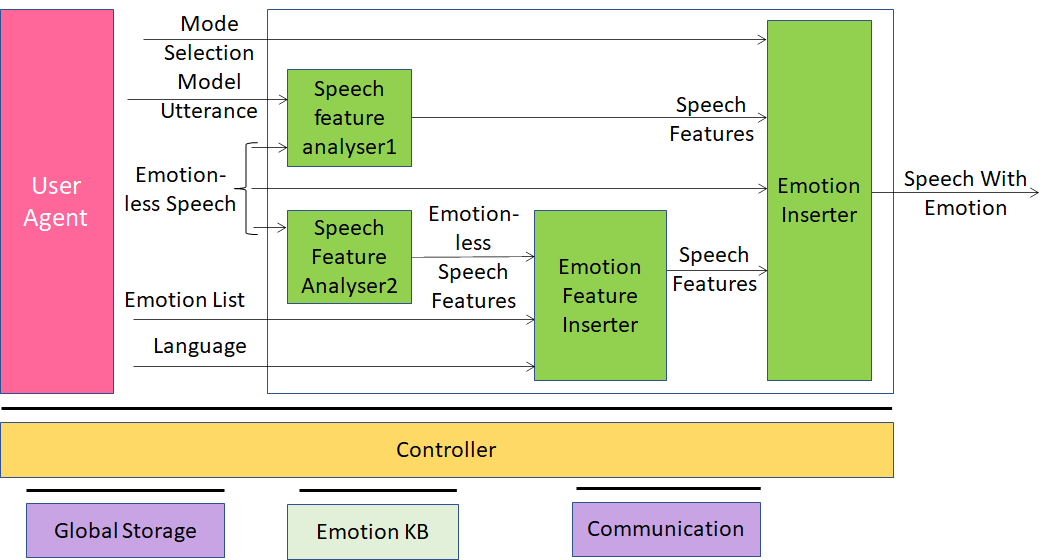

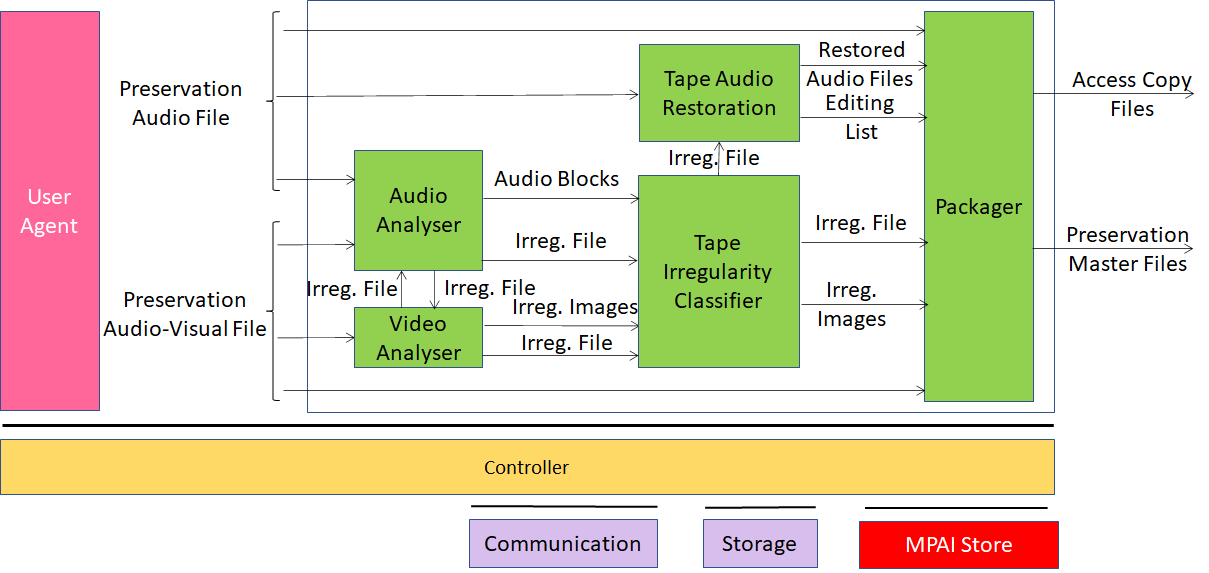

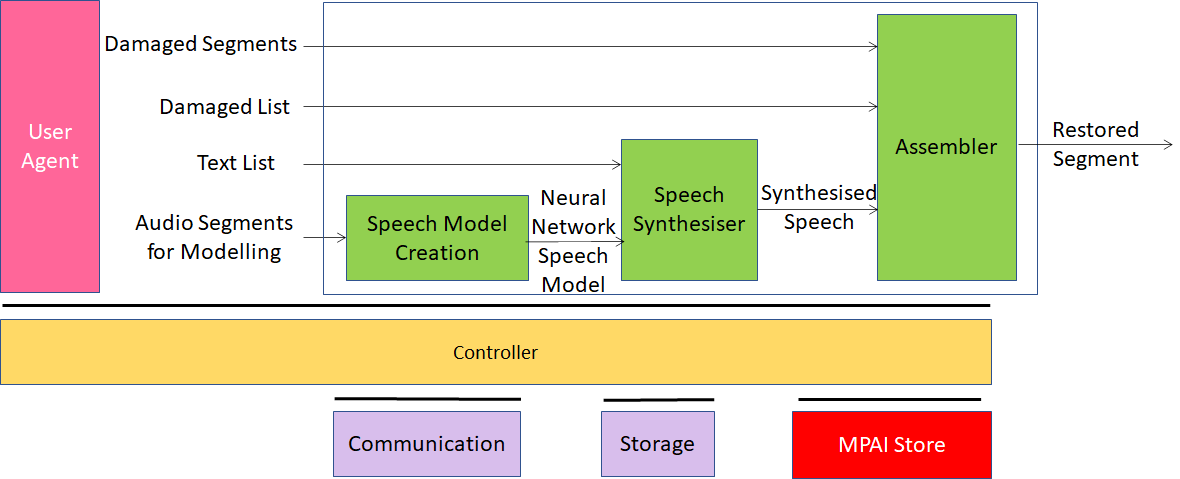

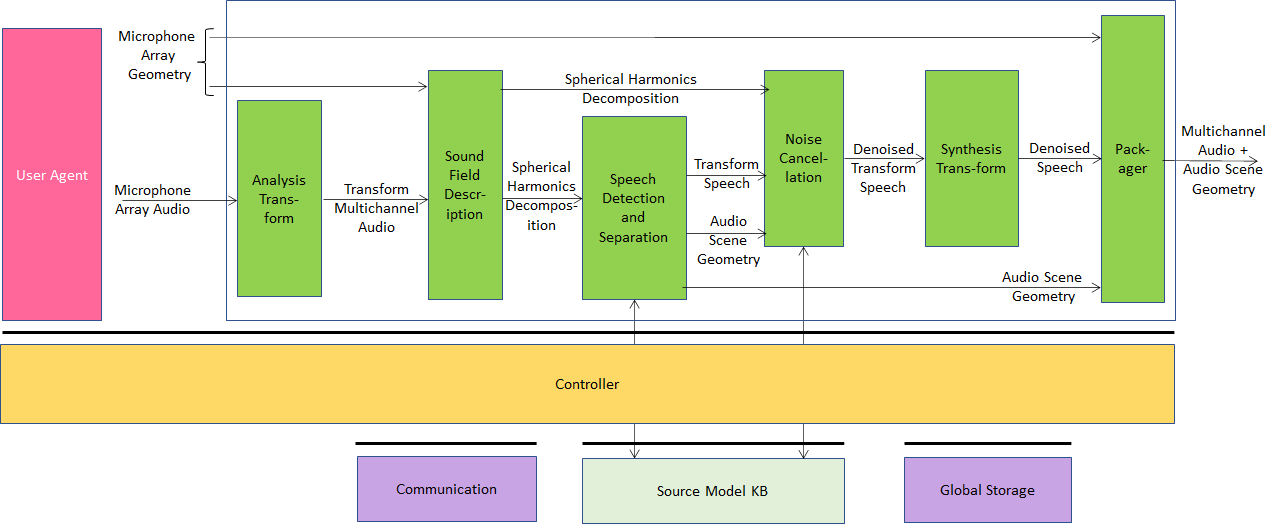

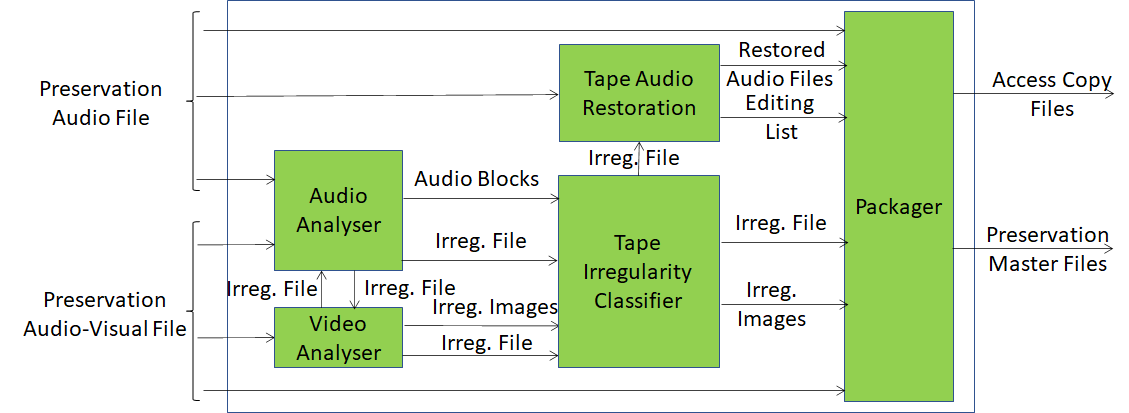

The work program includes the development of reference software, conformance testing and performance assessment for 2 application standards (Context-based Audio Enhancement and Multimodal Conversation); reference software and conformance testing for 1 infrastructure standard (AI Framework); and the establishment of the MPAI Store, a non-profit foundation with the mission to distribute verified implementations of MPAI standards, as specified in another MPAI infrastructure standard (Governance of the MPAI Ecosystem).

An important part of the work program addresses the development of performance assessment specifications for the 2 application standards. The purpose of performance assessment is to enable MPAI-appointed entities to assess the grade of reliability, robustness, replicability and fairness of implementations. While performance assessment will not be mandatory for an implementation to be posted to the MPAI Store, users downloading an implementation will be informed of its status.

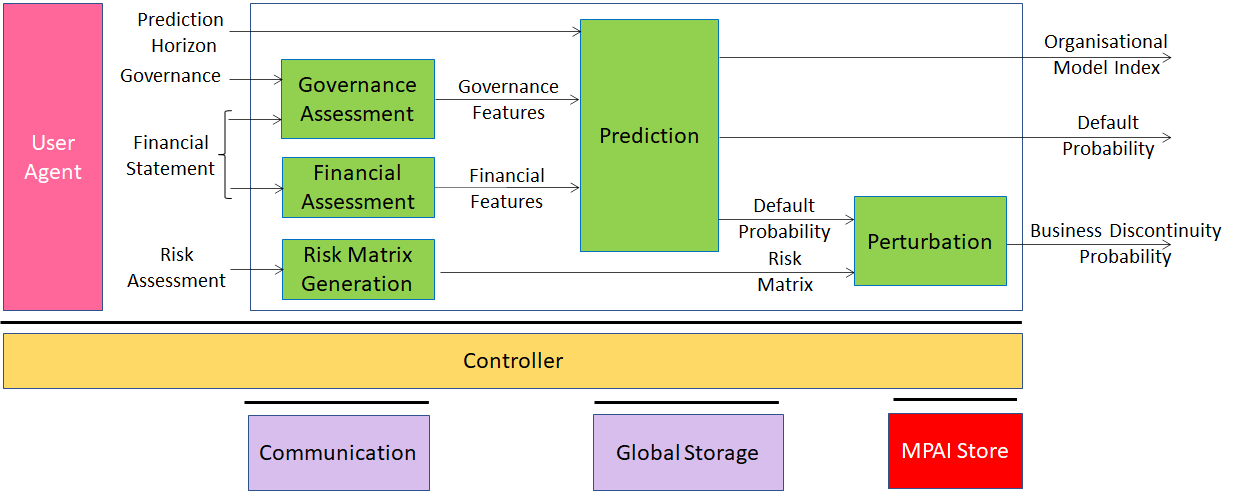

Another section of the work program concerns the development of extensions of existing standards. Company Performance Prediction (part of Compression and Understanding of Industrial Data) will include more risks in addition to seismic and cyber; Multimodal Conversation will enhance the features of some of its use cases, e.g., by applying them to the interaction of a human with a connected autonomous vehicle; and Context-based Audio Enhancement will enter the domain of separation of useful sounds from the environment.

A further part of the work program is devoted to developing new standards in the areas that have been explored in the last few months, such as:

- Server-based Predictive Multiplayer Gaming (MPAI-SPG) using AI to train a network that compensates for data losses and detects false data in online multiplayer gaming.

- AI-Enhanced Video Coding (MPAI-EVC) improving existing video coding with AI tools for short-to-medium term applications.

- End-to-End Video Coding (MPAI-EEV) exploring the promising area of AI-based “end-to-end” video coding for longer-term applications.

- Connected Autonomous Vehicles (MPAI-CAV) using AI for such features as Environment Sensing, Autonomous Motion, and Motion Actuation.

Finally, MPAI welcomes new activities proposed by its members to its work program:

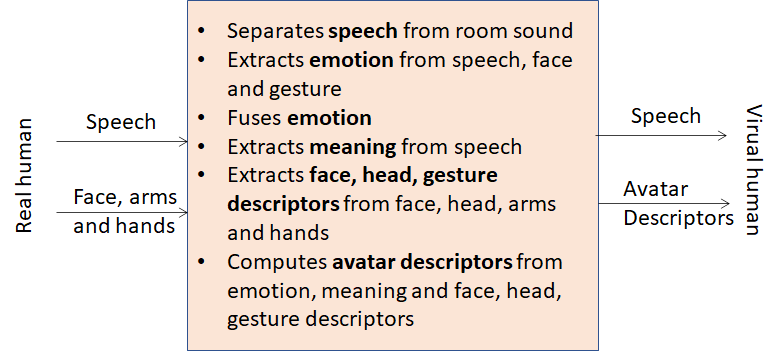

- Avatar Representation and Animation (MPAI-ARA) targeting the specification of avatar descriptors.

- Neural Network Watermarking (MPAI-NNW) developing measures of the impact of adding ownership and licensing information inside a neural network.

MPAI develops data coding standards for applications that have AI as the core enabling technology. Any legal entity supporting the MPAI mission may join MPAI, if able to contribute to the development of standards for the efficient use of data.

Visit the MPAI web site, contact the MPAI secretariat for specific information, subscribe to the MPAI Newsletter and follow MPAI on social media: LinkedIn, Twitter, Facebook, Instagram and YouTube.

Most important: join MPAI, share the fun, build the future.