2021/06/30

MPAI was established on 30 September 2020 as a not-for-profit, unaffiliated organisation with the mission (https://mpai.community/statutes/):

- to develop data coding standards based on Artificial Intelligence and

- to bridge the gap between standards and their practical use through Framework Licences.

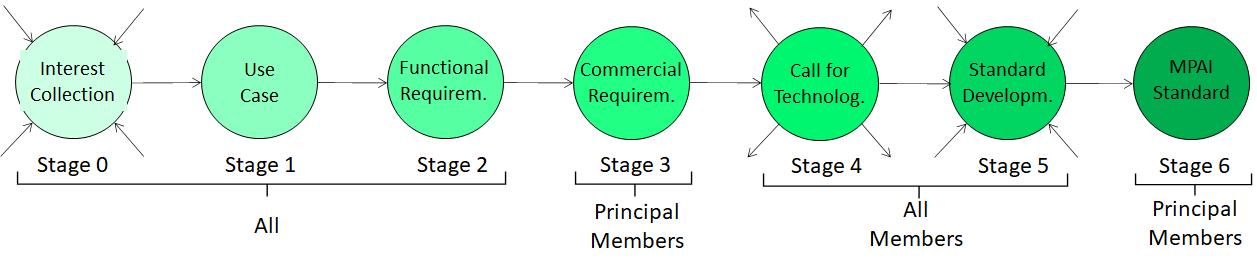

MPAI develops its standards through a rigorous process depicted in the following figure:

The MPAI standard development process

An MPAI standard passes through 6+1 stages. Anybody can contribute to the first 3 stages. The General

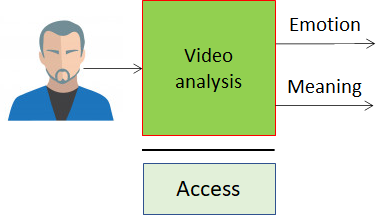

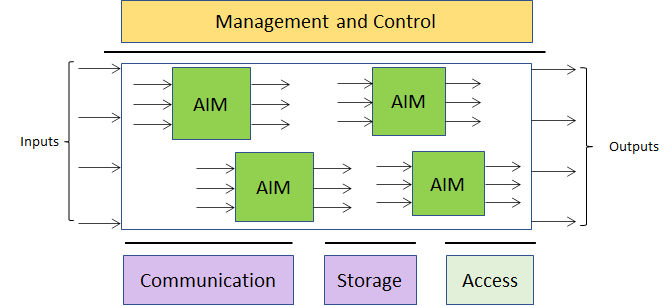

Assembly approves the progression of a standard to the next stage. MPAI defines standard interfaces of AI Modules (AIM) combined and executed in an MPAI-specified AI-Framework (AIF). AIMs receive

data with standard formats and produce output data with standard formats.

| An MPAI AI Module (AIM) | The MPAI AI Framework (AIF) |
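The relationship between AIMs and the AIF can be illustrated with a minimal Python sketch. It is not the MPAI-AIF API: the names `Data`, `AIM`, `AIF`, `run` and `execute`, and the format labels `"text"`, `"tokens"` and `"count"`, are all hypothetical and chosen only for illustration. The sketch assumes that each module declares one input format and one output format, and that the framework refuses to chain modules whose formats do not match.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass(frozen=True)
class Data:
    """A payload tagged with the name of its (hypothetical) standard format."""
    fmt: str
    payload: Any


@dataclass
class AIM:
    """Hypothetical AI Module: declared input/output formats, opaque internals."""
    name: str
    input_fmt: str
    output_fmt: str
    process: Callable[[Any], Any]

    def run(self, data: Data) -> Data:
        # Reject data that is not in the format this module declares.
        if data.fmt != self.input_fmt:
            raise TypeError(f"{self.name} expects {self.input_fmt}, got {data.fmt}")
        return Data(self.output_fmt, self.process(data.payload))


class AIF:
    """Hypothetical framework that chains AIMs and checks that interfaces match."""

    def __init__(self, aims: List[AIM]):
        # Each module's output format must equal the next module's input format.
        for upstream, downstream in zip(aims, aims[1:]):
            if upstream.output_fmt != downstream.input_fmt:
                raise ValueError(
                    f"{upstream.name} outputs {upstream.output_fmt}, "
                    f"but {downstream.name} expects {downstream.input_fmt}"
                )
        self.aims = aims

    def execute(self, data: Data) -> Data:
        # Run the modules in order, passing each output to the next module.
        for aim in self.aims:
            data = aim.run(data)
        return data


# Two toy modules: the internals are arbitrary, only the interfaces are fixed.
tokenise = AIM("tokenise", "text", "tokens", lambda s: s.split())
count = AIM("count", "tokens", "count", len)

workflow = AIF([tokenise, count])
result = workflow.execute(Data("text", "standards for AI modules"))
print(result)
```

Because only the interfaces are fixed, either toy module could be swapped for a different implementation with the same declared formats without touching the rest of the workflow.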

MPAI is currently developing 10 technical specifications.

The name, acronym and scope of each standard are given below. The first 4 standards will be approved within 2021.

AI Framework – MPAI-AIF (Stage 5)

Specifies 6 elements: Management and Control, AIM, Execution, Communication, Storage and Access to enable creation and automation of mixed ML-AI-DP processing and inference workflows.

Context-based Audio Enhancement – MPAI-CAE (Stage 5)

Improves the user experience in audio applications, e.g., entertainment, communication, teleconferencing, gaming, post-production, restoration etc. for different contexts, e.g., in the home, in the car, on-the-go, in the studio etc.

Multimodal Conversation – MPAI-MMC (Stage 5)

Enables human-machine conversation that emulates human-human conversation in completeness and intensity.

Compression and Understanding of Industrial Data – MPAI-CUI (Stage 5)

Enables AI-based filtering and extraction of governance, financial and risk data to predict company performance.

Server-based Predictive Multiplayer Gaming – MPAI-SPG (Stage 3)

Minimises the audio-visual discontinuities caused by network disruption during an online real-time game and helps detect which players are cheating.

Integrative Genomic/Sensor Analysis – MPAI-GSA (Stage 3)

Understands and compresses the result of high-throughput experiments combining genomic/proteomic and other data, e.g., from video, motion, location, weather, medical sensors.

AI-Enhanced Video Coding – MPAI-EVC (Stage 3)

Substantially enhances the performance of a traditional video codec by improving or replacing traditional tools with AI-based tools.

Connected Autonomous Vehicles – MPAI-CAV (Stage 3)

Uses AI to enable a Connected Autonomous Vehicle with 3 subsystems: Human-CAV interaction, Autonomous Motion Subsystem and CAV-Environment interaction.

Visual Object and Scene Description – MPAI-OSD (Stage 2)

A collection of Use Cases sharing the goal of describing visual objects and locating them in space. Scene description includes the usual description of objects and their attributes in a scene and the semantics of the objects.

Mixed-Reality Collaborative Spaces – MPAI-MCS (Stage 1)

Enables mixed-reality collaborative space scenarios in which biomedical, scientific and industrial sensor streams and recordings are viewed, and in which AI can be used for immersive presence, spatial map rendering, multiuser synchronisation etc.

Additionally, MPAI is developing a standard titled “Governance of the MPAI ecosystem”. This will specify how:

- Implementers can get certification of the adherence of an implementation to an MPAI standard from the technical (Conformance) and ethical (Performance) viewpoints.

- End users can reliably execute AI workflows on their devices.