2021/10/30

The foundational AI Framework standard is coming to the fore

One of the distinctive characteristics of MPAI standardisation is componentisation. For instance, a complex system like Conversation with Emotion, where a human holds a conversation with a machine impersonated by a synthetic voice and an animated face using text, speech and video, is reduced to 7 interconnected subsystems. For each subsystem, the function, the format and semantics of the input and output data, and the interconnections are specified. The result is a workflow of connected processing elements.

This approach has several benefits. First, it is easier to work with smaller entities than with larger ones. Second, it is easier to trace a result back to its source, a notion that experts call Explainability. Third, a component used in one workflow may often be reused “as is” in another workflow.

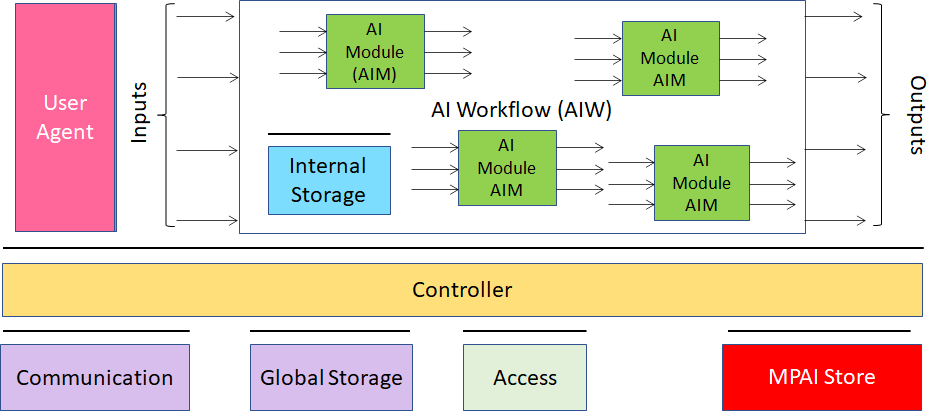

The problem is that, to execute such a workflow, you need an environment that allows easy interconnection and interoperability of modules organised in workflows. This is one of the functions of the MPAI AI Framework standard. MPAI-AIF can execute AI Workflows (AIW) composed of AI Modules (AIM). AIMs can be based on data processing, AI or Machine Learning functions implemented in hardware, in software, or in a hybrid of the two.
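The idea of a workflow of connected modules can be illustrated with a minimal Python sketch. It is not the MPAI-AIF API: the `AIM` and `AIW` classes, their fields, and the two toy modules are hypothetical names chosen for illustration, and the sketch assumes the modules are listed in an order in which each one's inputs are already available.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class AIM:
    """Hypothetical AI Module: a function with named inputs and outputs."""
    name: str
    inputs: List[str]
    outputs: List[str]
    func: Callable[..., Tuple]


@dataclass
class AIW:
    """Hypothetical AI Workflow: AIMs connected through named data channels."""
    aims: List[AIM] = field(default_factory=list)

    def run(self, data: Dict[str, object]) -> Dict[str, object]:
        # Assumption: AIMs are already ordered so that inputs exist when needed.
        channels = dict(data)
        for aim in self.aims:
            args = [channels[key] for key in aim.inputs]
            results = aim.func(*args)
            # Publish each output under its declared channel name.
            channels.update(zip(aim.outputs, results))
        return channels


# Two toy modules standing in for real processing elements.
tokenize = AIM("Tokenizer", ["text"], ["tokens"], lambda text: (text.split(),))
count = AIM("Counter", ["tokens"], ["n_tokens"], lambda tokens: (len(tokens),))

workflow = AIW([tokenize, count])
result = workflow.run({"text": "hello MPAI world"})
print(result["n_tokens"])
```

Because each module only declares the names of the data it consumes and produces, the same `Tokenizer` could be dropped into a different workflow unchanged, which is the reuse benefit described earlier.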

Figure 1 represents the MPAI-AIF Reference Model and its components.

Figure 1 – The AI Framework (AIF) Reference Model and its Components

The Controller plays a key role because:

- It provides basic functionalities such as scheduling, inter-AIM communication, and access to all AIF components such as the Internal and Global Storage.

- It activates, suspends, resumes and deactivates AIWs or AIMs according to the user’s or other inputs.

- It can support complex application scenarios by balancing load and resources.

- It exposes three APIs:

  - AIM API, through which modules can communicate with it (register themselves, communicate and access the rest of the AIF environment).

  - User API, through which the user or other Controllers can perform high-level tasks (e.g., switch the Controller on and off, give inputs to the AIW through the Controller).

  - MPAI Store API, to enable communication between the AIF and the Store.

- It may run one or more AIWs.
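The registration and activate/suspend/resume/deactivate behaviour listed above can be sketched as a small state machine. This is only an illustration under stated assumptions: the class, method and state names are hypothetical, and the allowed transitions (for example, that deactivation is possible from any state) are assumed here rather than taken from the MPAI-AIF specification.

```python
from enum import Enum


class State(Enum):
    INACTIVE = "inactive"
    ACTIVE = "active"
    SUSPENDED = "suspended"


# Assumed transitions: action -> (required current state, resulting state).
TRANSITIONS = {
    "activate": (State.INACTIVE, State.ACTIVE),
    "suspend": (State.ACTIVE, State.SUSPENDED),
    "resume": (State.SUSPENDED, State.ACTIVE),
}


class Controller:
    """Hypothetical Controller tracking the lifecycle of registered AIMs."""

    def __init__(self):
        self._states = {}

    def register(self, name):
        # A newly registered module starts out inactive.
        self._states[name] = State.INACTIVE

    def state(self, name):
        return self._states[name]

    def command(self, name, action):
        if action == "deactivate":
            # Assumption: deactivation is allowed from any state.
            self._states[name] = State.INACTIVE
            return State.INACTIVE
        required, new_state = TRANSITIONS[action]
        if self._states[name] is not required:
            raise ValueError(f"cannot {action} {name} while {self._states[name].value}")
        self._states[name] = new_state
        return new_state


controller = Controller()
controller.register("SpeechRecognition")
controller.command("SpeechRecognition", "activate")
controller.command("SpeechRecognition", "suspend")
print(controller.state("SpeechRecognition").value)
```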

The MPAI Store is another critical component. It is a repository of AIF, AIW and AIM implementations that users can access to set up an environment, and download an application (AIW) and its modules (AIM).

An AIW executed in an AIF may have one of the following MPAI-defined Interoperability Levels:

- Interoperability Level 1, if the AIW is proprietary and composed of AIMs with proprietary functions using any proprietary or standard data Format.

- Interoperability Level 2, if the AIW is composed of AIMs having all their Functions, Formats and Connections specified by an MPAI Application Standard.

- Interoperability Level 3, if the AIW has Interoperability Level 2, and the AIW and its AIMs are certified by an MPAI-appointed Assessor to hold the attributes of Reliability, Robustness, Replicability and Fairness – collectively called Performance.
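The three levels form a simple decision rule, which the following sketch makes explicit. The function and parameter names are hypothetical; the two booleans stand for "all AIMs follow an MPAI Application Standard" and "certified by an MPAI-appointed Assessor".

```python
def interoperability_level(follows_application_standard: bool,
                           performance_certified: bool) -> int:
    """Return the Interoperability Level (1, 2 or 3) of an AIW."""
    if not follows_application_standard:
        # Proprietary AIW and AIMs: Level 1, regardless of any certification.
        return 1
    # Level 3 requires Level 2 plus a Performance certification.
    return 3 if performance_certified else 2


print(interoperability_level(True, False))
```

Note that certification alone does not raise the level: Level 3 is defined on top of Level 2.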

MPAI-AIF is now at the WD0.12 level. MPAI plans on approving MPAI-AIF V1 as a standard at its next General Assembly (MPAI-15) on 24 November. The MPAI standard development process, however, has a stage called Community Comments that takes place before final approval. WD0.12 can be downloaded from the MPAI web site (https://mpai.community/standards/mpai-aif/). Anybody may submit comments to the MPAI Secretariat (secretariat@mpai.community). Comments are especially requested on the suitability of the standard in its current form, and suggestions for future work.

Comments will not be shared outside MPAI but will be considered by AIF-DC, the Development Committee in charge of the standard. The Secretariat will provide individual responses to comments.

Comments shall not include any Intellectual Property matters. If any are received, the Secretariat will return the email and will not forward it to any MPAI member or Development Committee.

AI and Video, a partnership destined to last

Since the day MPAI was announced, there has been considerable interest in the application of AI to video coding. In the video codec world, research focuses on radical changes to the classic block-based hybrid coding framework to meet the challenge of offering more efficient video compression solutions. AI approaches can play an important role in achieving this goal.

According to a literature survey of AI-based video coding papers, performance improvements of up to 30% can be expected. Therefore, MPAI has been investigating whether it is possible to improve the performance of the MPEG-5 Essential Video Coding (EVC) standard by enhancing or replacing existing video coding tools with AI tools, while keeping the complexity increase to an acceptable level.

While the MPAI-EVC Use Cases and Requirements document is ready and would enable the MPAI General Assembly to proceed to the Commercial Requirements phase, MPAI has made a deliberate decision not to move to the next stage because it first wants to make sure that the results collected from different papers are indeed confirmed when implemented in a unified platform.

As MPAI members are globally distributed and work across multiple software frameworks, the first challenge faced by MPAI was to enable them to collaborate in real time in a shared environment. The main goals of this environment are:

- to allow testing of independently developed AI tools on a common EVC code base.

- to run the training and inference phases in “plug and play” manner.

To address these requirements, MPAI decided to adopt a solution based on a networked server application listening for inputs over a UDP/IP socket.
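A server of this kind can be sketched with Python's standard `socket` module. This is not MPAI's implementation: the message format, the `handle_datagram` helper, and the upper-casing stand-in for an AI tool are all assumptions made for illustration, and the demo runs client and server in one process over the loopback interface.

```python
import socket


def handle_datagram(sock: socket.socket, process) -> None:
    """Receive one datagram, run the tool on it, and reply to the sender."""
    payload, sender = sock.recvfrom(65535)
    sock.sendto(process(payload), sender)


def demo() -> bytes:
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.settimeout(2.0)
        client.settimeout(2.0)
        # The datagram waits in the server's receive buffer until it is read.
        client.sendto(b"block-0", server.getsockname())
        # Hypothetical tool: upper-casing stands in for real processing.
        handle_datagram(server, lambda data: data.upper())
        reply, _ = client.recvfrom(65535)
        return reply
    finally:
        server.close()
        client.close()


reply = demo()
print(reply)
```

A long-running server would call `handle_datagram` in a loop. Since UDP gives no delivery or ordering guarantees, a real deployment would also need its own handling of lost or reordered messages.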

MPAI has been working on three tools (Intra prediction, Super Resolution, In-loop Filtering). For each tool there are three phases: database building, learning and inference.

Significant gains have already been obtained.

Once the MPAI-EVC Evidence Project, as the current activity is called, demonstrates that AI tools can improve MPEG-5 EVC efficiency by at least 25%, MPAI will be in a position to initiate work on its own MPAI-EVC standard. The functional requirements already developed only need to be revised, while the framework licence needs to be developed before a Call for Technology can be issued.

There is, however, consensus in the video coding research community, and some papers make claims grounded on results, that so-called End-to-End (E2E) video coding schemes can yield significantly higher performance. Many issues still need to be examined, e.g., how such schemes can be adapted to a standard-based codec. In the longer term, E2E video coding promises an AI-based video coding standard with significantly higher performance.

As a technical body unconstrained by IP legacy and whose mission is to provide efficient and usable data coding standards, MPAI has initiated the study of what we can call End-to-End Video Coding (MPAI-EEV). This decision responds to the many who need not only environments where academic knowledge is promoted but also a body that develops a common understanding, models and eventually standards-oriented End-to-End video coding.

Of course, the MPAI-EVC Evidence Project continues and new resources have been found to support the new activity. MPAI-EEV is at the Interest Collection stage, the first of the 8 stages through which an activity can become a standard.

MPAI-EEV is designed to serve long-term video coding needs. In the first phase of work MPAI-EEV researchers are engaged in cycles comprising:

- Coordinated research

- Comparing results within a common model

- Definition of new rounds of investigation.

The definition of a reference model was the first step. The envisaged method is to extract the models implicitly or explicitly assumed by published End-to-End Video Coding papers, including published Open Source Software.

Work is progressing. Stay tuned.

MPAI meetings in November

| Group name | 1-5 | 8-12 | 15-19 | 22-26 | Time |
| --- | --- | --- | --- | --- | --- |
| Mixed-reality Collaborative Spaces | 1 | 8 | 15 | 22 | 14 |
| AI Framework | 1 | 8 | 15 | 22 | 15 |
| Multimodal Conversation | 2 | 9 | 16 | 23 | 14:30 |
| Context-based Audio enhancement | 2 | 9 | 16 | 23 | 15:30 |
| Connected Autonomous Vehicles | 3 | 10 | 17 | 24 | 13 |
| AI-Enhanced Video Coding | | 10 | | 24 | 14 |
| AI-based End-to-End Video Coding | 3 | | 17 | | 14 |
| Compression and Understanding of Industrial Data | | 13 | | 27 | 15 |
| Server-based Predictive Multiplayer Gaming | 4 | 11 | 18 | | 14:30 |
| Communication | 4 | | 18 | | 15 |
| Industry and Standards | 5 | | 19 | | 14 |
| General Assembly (MPAI-13) | | | | 24 | |