MPAI presents Context-based Audio Enhancement and Reference Software online

MPAI issues Call for Technologies: MPAI Metaverse Model – Architecture

MPAI presents Context-based Audio Enhancement and Reference Software online

The Context-based Audio Enhancement standard – MPAI-CAE/IEEE 3302 – improves the user experience for a range of audio-related applications including entertainment, communication, teleconferencing, gaming, post-production, and restoration in a variety of contexts such as in the home, in the car, on-the-go, and in the studio.

MPAI has recently completed the development of the MPAI-CAE V1 Reference Software for the award-winning Audio Recording Preservation (ARP) and the Enhanced Audioconference Experience (EAE) Use Cases. MPAI releases Reference Software as open source with a BSD licence.

MPAI will make an online presentation of the MPAI-CAE standard and the reference software of the two Use Cases on 2023/07/10 at 14:00 UTC.

The Audio Recording Preservation (CAE-ARP) Use Case enables a user to create a digital copy of a digitised audio stream from open-reel magnetic tapes. The copy is suitable for long-term preservation and correct playback (restored, if necessary). During the presentation, a practical demonstration of the ARP Reference Software will be made.

The Enhanced Audioconference Experience (CAE-EAE) Use Case enables a user to improve the auditory quality of their audioconference experience by processing the speech signals captured by a group of microphones and providing individual speech signals that are free from background noise and acoustics-related artifacts. During the presentation, the performance of the EAE Reference Software will be demonstrated on real signals containing multiple speech sources, showing how the individual speech sources can be separated.

MPAI issues Call for Technologies: MPAI Metaverse Model – Architecture

After preparatory work carried out over the last 18 months that has produced two Technical Reports – Metaverse Functionalities and Functionality Profiles (see here for a summary) – MPAI is now ready to undertake the challenging task of developing a standard for the MPAI Metaverse Model (MPAI-MMM) Architecture.

MPAI has a rigorous eight-step process to develop standards. The promotion of a standard project from one step to the next is approved by the MPAI General Assembly. At the Call for Technologies step, the MPAI process requires the Call to be accompanied by two documents produced in previous steps: the Use Cases and Functional Requirements and the Framework Licence.

The former contains a description of use cases considered representative of the capabilities of the standard project, and the list of required capabilities (functional requirements). The latter is a licence that provides a set of clauses to be used in formulating the eventual licence; it does not, however, provide numerical values of costs, dates, percentages, etc. Anybody can respond to the Call, provided they attach to their response a statement of acceptance of the Framework Licence.

The documents are accessible from the MPAI-MMM webpage.

Online presentations of the Call will be made in two sessions on 2023/06/24, at 09:00 UTC and at 15:00 UTC.

The Call for Technologies specifically requests comments on, modifications of, and additions to:

- Use Cases of the MPAI Metaverse Model.

- Functionalities of the MPAI Metaverse Model.

- Functional Requirements derived from the Use Cases.

- Processes, Items, Actions, and Data Types, with justified proposals for new ones.

Inquiries may be addressed to, and responses shall be sent to, the MPAI Secretariat. The deadline for responses is 2023/07/10 at 23:59 UTC.

More on the Call for Technologies: Use Cases of the MPAI-MMM – Architecture

The MPAI-MMM Functionalities document has collected Use Cases from 18 application areas: Automotive, Defence, Education, Enterprise, eSports, Events, Finance, Food, Gaming, Healthcare, Hospitality, Professional training, Real estate, Remote work, Retail, Social media, Travel, and Virtual spaces. The document also contains an in-depth analysis of 11 workflows: Attend a Metaverse Event; Buy a personal wearable; Buy the real twin of an Object; Establish a Metaverse Environment; Interact with a Metaverse Call Centre; Navigate a 3D Object; Relax in a Metaverse Environment; Social gathering across Metaverse Environments; Train a Metaverse Hospital staff; Visit a Metaverse Environment; Work in a Metaverse Environment.

The MPAI-MMM Functionality Profiles document has further explored the following Use Cases: Virtual Lecture, Virtual Meeting, Hybrid working, eSports Tournament, Virtual performance, AR Tourist Guide, Virtual Dance, Virtual Car Showroom and Drive a Connected Autonomous Vehicle. It has also developed an original method to graphically represent the Use Cases.

While the identified Use Cases and Workflows cannot be considered an exhaustive representation of all the potential Metaverse Use Cases, they do represent a significant range of application domains.

Respondents to the Call for Technologies are invited to comment on these and/or propose new Use Cases.

More on the Call for Technologies: Basic requirements of MPAI-MMM – Architecture

This is the first of a set of snapshots of the MPAI-MMM Use Cases and Functional Requirements. Words beginning with a capital letter have the meaning defined in the MPAI-MMM terminology.

The MPAI Metaverse Model assumes that an M-Instance includes a set of interacting Processes performing specific Actions (i.e., to provide metaverse functionalities) on Items (i.e., data having a format supported by MPAI-MMM) and relying on Data Types (e.g., a measure of time or the coordinates of a coordinate system).
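To make the relationship between Processes, Actions, Items, and Data Types concrete, here is a minimal Python sketch. It is not part of MPAI-MMM, which specifies neither implementations nor data formats: the class names, the `Time` and `Coordinates` fields, and the method names are all hypothetical, chosen only to show Processes performing Actions on Items while relying on Data Types.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Time:
    """Data Type: a measure of time (seconds assumed as the unit)."""
    seconds: float


@dataclass(frozen=True)
class Coordinates:
    """Data Type: a point in a 3D Cartesian coordinate system (assumed)."""
    x: float
    y: float
    z: float


@dataclass
class Item:
    """Data having a format supported by the M-Instance, e.g. an Object."""
    identifier: str
    kind: str
    attributes: dict = field(default_factory=dict)


@dataclass
class Process:
    """A hypothetical Process that can perform a fixed set of Actions on Items."""
    identifier: str
    actions: frozenset

    def perform(self, action: str, item: Item, at: Time) -> Item:
        if action not in self.actions:
            raise ValueError(f"{self.identifier} does not support {action}")
        # Log the Action together with a value of the Time Data Type.
        item.attributes.setdefault("log", []).append((action, at))
        return item


@dataclass
class MInstance:
    """An M-Instance as a set of interacting Processes and the Items they act on."""
    identifier: str
    processes: dict = field(default_factory=dict)
    items: dict = field(default_factory=dict)

    def add_process(self, process: Process) -> None:
        self.processes[process.identifier] = process

    def add_item(self, item: Item) -> None:
        self.items[item.identifier] = item

    def request(self, process_id: str, action: str, item_id: str, at: Time) -> Item:
        return self.processes[process_id].perform(action, self.items[item_id], at)


# Usage: a Service that can Modify an Object placed at some Coordinates.
m = MInstance("M-Instance-1")
m.add_process(Process("Service-1", frozenset({"Modify"})))
m.add_item(Item("Object-1", "Object", {"position": Coordinates(0.0, 1.0, 2.0)}))
obj = m.request("Service-1", "Modify", "Object-1", Time(12.5))
print(obj.attributes["log"])  # [('Modify', Time(seconds=12.5))]
```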

Note that the MPAI-MMM Architecture does not specify how to implement an operational M-Instance. It only specifies how its components can interoperate within an M-Instance and across M-Instances. Therefore, components that are important for an implementation may not be considered relevant to the MPAI-MMM – Architecture standard.

Also note that the MPAI-MMM – Architecture is agnostic of:

- The type of architecture (the model is applicable to centralised, decentralised, or blockchain-based architectures).

- The identification technology (the model only assumes that identification is possible; it does not make assumptions about how identification is achieved).

- The security technology (the model only assumes that the environment in which the operation takes place is secure; it does not make assumptions about how security is achieved).

- The data formats (the model only identifies the functionalities that the data formats should provide; it does not assume any specific data format).

- The network (the model only assumes that the network has the required capabilities; it does not make assumptions about how they are provided).
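Being agnostic of a technology means that model-level logic is written against an abstract capability rather than a concrete mechanism. The sketch below illustrates this for identification only. `IdentifierScheme`, `UuidScheme`, `SequentialScheme`, and `identify_items` are hypothetical names, and the two schemes are arbitrary examples, not technologies selected or endorsed by MPAI.

```python
import uuid
from abc import ABC, abstractmethod
from itertools import count


class IdentifierScheme(ABC):
    """All the model needs: a way to obtain unique Identifiers (hypothetical interface)."""

    @abstractmethod
    def new_identifier(self, kind: str) -> str:
        ...


class UuidScheme(IdentifierScheme):
    """One possible identification technology: random UUIDs."""

    def new_identifier(self, kind: str) -> str:
        return f"{kind}:{uuid.uuid4()}"


class SequentialScheme(IdentifierScheme):
    """Another possible identification technology: a per-M-Instance counter."""

    def __init__(self) -> None:
        self._counter = count(1)

    def new_identifier(self, kind: str) -> str:
        return f"{kind}:{next(self._counter)}"


def identify_items(kinds: list, scheme: IdentifierScheme) -> dict:
    """Model-level logic, written only against the abstract interface."""
    identified = {}
    for kind in kinds:
        identifier = scheme.new_identifier(kind)
        if identifier in identified:
            raise ValueError(f"Identifier {identifier} is not unique")
        identified[identifier] = kind
    return identified


# The same model-level code runs unchanged with either technology.
for scheme in (UuidScheme(), SequentialScheme()):
    print(len(identify_items(["Object", "Object", "Persona"], scheme)))  # 3
```

Swapping one scheme for another requires no change to `identify_items`, which is the property the model relies on.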

An M-Instance is defined as a set of Processes providing some or all the following functions:

- Senses data from U-Environments.

- Processes the sensed data and produces data whose format is supported by the metaverse.

- Produces one or more M-Environments populated by objects that can either be digitised or virtual, with or without autonomy.

- Processes and uses objects – from the same or other M-Instances – to affect real or virtual environments in ways that are:

  - Consistent with the goals set for the M-Instance.

  - Effected within the capabilities of the M-Instance and the Rules set for the M-Instance.

- Identifies Processes and Items with one or more Identifiers, each of which uniquely references one Process or Item.

- Includes an Identifier.

- May contain one or more M-Environments, each of which:

  - Includes an Identifier.

  - May include M-Locations with space and time attributes.

  - May require a Registration specific to the M-Environment.

  - May display its Capabilities.

- May require Registration for use:

  - A human can request to deploy their Users, rendered as Personae, in an M-Instance.

  - An M-Instance may request a subset of the Personal Profile of the Registering human.

  - A human’s Users in an M-Instance shall comply with the Rules of the M-Instance.

  - An M-Instance can penalise a User for lack of compliance with the Rules.
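The Registration and Rules functions above can be sketched as follows, under loudly stated assumptions: the Rules are reduced to a set of forbidden Actions with a fixed penalty, and the requested subset of the Personal Profile is a hypothetical pair of fields. Neither the data structures nor the penalty mechanism come from the MPAI-MMM documents.

```python
from dataclasses import dataclass, field


@dataclass
class Rules:
    """Hypothetical Rules: forbidden Actions and the penalty for each violation."""
    forbidden_actions: set
    penalty_points: int = 1


@dataclass
class MInstance:
    identifier: str
    rules: Rules
    # Subset of the Personal Profile requested at Registration (assumed fields).
    requested_profile_fields: tuple = ("name", "age")
    users: dict = field(default_factory=dict)
    penalties: dict = field(default_factory=dict)

    def register(self, personal_profile: dict, user_id: str) -> None:
        """A human registers to deploy a User; only the requested subset is kept."""
        missing = [f for f in self.requested_profile_fields if f not in personal_profile]
        if missing:
            raise ValueError(f"Registration refused, missing fields: {missing}")
        self.users[user_id] = {f: personal_profile[f] for f in self.requested_profile_fields}
        self.penalties[user_id] = 0

    def act(self, user_id: str, action: str) -> bool:
        """Return True if the Action complies with the Rules; otherwise penalise the User."""
        if user_id not in self.users:
            raise PermissionError(f"{user_id} is not registered")
        if action in self.rules.forbidden_actions:
            self.penalties[user_id] += self.rules.penalty_points
            return False
        return True


m = MInstance("M-Instance-1", Rules({"Hide"}))
m.register({"name": "Ann", "age": 30, "email": "ann@example.org"}, "User-1")
print(m.users["User-1"])                                  # {'name': 'Ann', 'age': 30}
print(m.act("User-1", "Post"), m.act("User-1", "Hide"))   # True False
print(m.penalties["User-1"])                              # 1
```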

The Call for Technologies requests comments on, proposed revisions of, and justified proposals for new MPAI-MMM Basic Functional Requirements.

More on the Call for Technologies: Operation of the MPAI-MMM – Architecture

A Process

- Is a Program, together with its Metadata, supported by the M-Instance in which it performs Actions on Items.

- May request another Process to perform Actions on Items by transmitting a Request-Action Item to it. The receiving Process may perform the requested Action and respond with a Response-Action Item.

- May need to be certified by the M-Instance Manager for use in an M-Instance.

- May contain one or more M-Environments, each of which:

  - Includes an Identifier.

  - May include M-Locations with space and time attributes.

  - May require a Registration specific to the M-Environment.

  - May make its Capabilities available.
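The Request-Action/Response-Action exchange and the optional certification step might be sketched like this. Routing requests through an `MInstanceManager` that only delivers to certified Processes is an assumption made for illustration; the text above only states that certification may be required. All class, field, and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class RequestAction:
    sender: str
    receiver: str
    action: str
    item_id: str


@dataclass(frozen=True)
class ResponseAction:
    request: RequestAction
    performed: bool
    result: Optional[str] = None


class Process:
    def __init__(self, identifier: str, handlers: Dict[str, Callable[[str], str]]):
        self.identifier = identifier
        # Maps an Action name to a function applied to the Item identifier.
        self.handlers = handlers

    def handle(self, request: RequestAction) -> ResponseAction:
        handler = self.handlers.get(request.action)
        if handler is None:
            # The receiving Process may decline to perform the Action.
            return ResponseAction(request, performed=False)
        return ResponseAction(request, performed=True, result=handler(request.item_id))


class MInstanceManager:
    """Hypothetical manager that only routes Request-Actions to certified Processes."""

    def __init__(self) -> None:
        self.certified: Dict[str, Process] = {}

    def certify(self, process: Process) -> None:
        self.certified[process.identifier] = process

    def deliver(self, request: RequestAction) -> ResponseAction:
        receiver = self.certified.get(request.receiver)
        if receiver is None:
            return ResponseAction(request, performed=False)
        return receiver.handle(request)


manager = MInstanceManager()
manager.certify(Process("Service-1", {"Convert": lambda item_id: item_id + "-converted"}))
ok = manager.deliver(RequestAction("User-1", "Service-1", "Convert", "Object-7"))
print(ok.performed, ok.result)  # True Object-7-converted
declined = manager.deliver(RequestAction("User-1", "Service-1", "Hide", "Object-7"))
print(declined.performed)  # False
```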

It is convenient to characterise four types of Process performing Actions:

- User represents a human rendered as:

  - A Model animated by a stream generated by the human or by an autonomous agent.

  - An Object rendering the human.

- Device connects a User with a U-Environment:

  - From Universe to Metaverse: captures a scene as Media and provides Media as Data and Metadata.

  - From Metaverse to Universe: receives an Entity and renders the Entity as Media with a Spatial Attitude.

- App is a Program running on a Device.

- Service provides specific Functionalities.

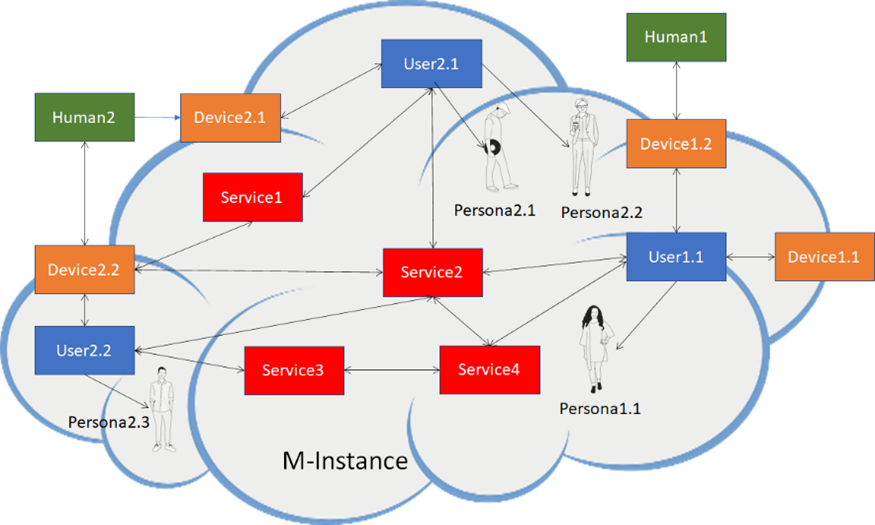

The relationships of Human-Device-User-Service are depicted by:

Figure 1 – Relationship of Human-Device-User-Service
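A minimal sketch of the four Process types and the two Device directions follows. The field names, the tuple used as a stand-in for a Spatial Attitude, and the string returned by `render` are hypothetical placeholders; MPAI-MMM does not define these formats.

```python
from dataclasses import dataclass, field
from enum import Enum


class Direction(Enum):
    UM = "Universe to Metaverse"
    MU = "Metaverse to Universe"


@dataclass
class User:
    """Represents a human; rendered as a Model or as an Object."""
    identifier: str
    human: str
    rendering: str = "Model"
    animated_by_agent: bool = False  # animated by the human or by an autonomous agent


@dataclass
class Device:
    """Connects a User with a U-Environment in one or both directions."""
    identifier: str
    user: User
    directions: frozenset = frozenset({Direction.UM, Direction.MU})

    def capture(self, scene: str) -> dict:
        """Universe to Metaverse: capture a scene as Media, provided as Data and Metadata."""
        if Direction.UM not in self.directions:
            raise RuntimeError(f"{self.identifier} cannot capture")
        return {"data": scene, "metadata": {"device": self.identifier, "user": self.user.identifier}}

    def render(self, entity: str, spatial_attitude: tuple) -> str:
        """Metaverse to Universe: render an Entity as Media (tuple = assumed Spatial Attitude)."""
        if Direction.MU not in self.directions:
            raise RuntimeError(f"{self.identifier} cannot render")
        return f"{entity} rendered at {spatial_attitude}"


@dataclass
class App:
    """A Program running on a Device."""
    identifier: str
    device: Device


@dataclass
class Service:
    """Provides specific Functionalities (listed here as plain strings)."""
    identifier: str
    functionalities: list = field(default_factory=list)


user = User("User-1", human="Ann")
headset = Device("Device-1", user)
app = App("App-1", headset)
print(app.device.capture("living room")["metadata"])  # {'device': 'Device-1', 'user': 'User-1'}
print(headset.render("Persona-1", (0, 0, 0)))          # Persona-1 rendered at (0, 0, 0)
```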

The Call for Technologies requests comments on, proposed revisions of, and justified proposals for new facets of the MPAI-MMM operation.

More on the Call for Technologies: Interoperability between M-Instances

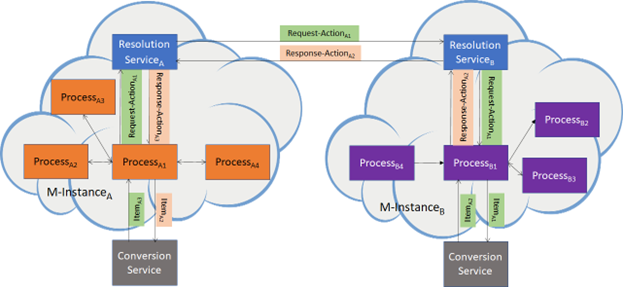

When ProcessA in MetaverseA requests ProcessB in MetaverseB to perform an Action on ItemA.1, the following workflow enables interoperability between MetaverseA and MetaverseB (RS = Resolution Service, CS = Conversion Service):

- ProcessA transmits Request-Action1 to RSA.

- RSA transmits Request-Action1 to RSB.

- RSB transmits ItemA.1 to CS.

- CS produces ItemA.2, containing the converted Data, and transmits it to RSB.

- RSB transmits the new Request-Action2 to ProcessB.

- ProcessB:

  - Performs the Action specified in Request-Action2 using ItemA.2.

  - Produces Response-Action2.

  - Requests RSB to transmit to RSA Response-Action2 containing ItemA.3 (the result of performing Request-Action2).

- RSB transmits Response-Action2 to RSA.

- RSA transmits ItemA.3 to CS.

- CS produces ItemA.4, corresponding to ItemA.3 with converted Data, and transmits it to RSA.

- RSA produces and transmits to ProcessA a new Response-Action1 that references ItemA.4.

An M-Instance may also allow its Processes to communicate directly with Processes in other M-Instances without calling RSA.

Figure 2 – Processes communicating across M-Instances
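The workflow above can be traced in a few lines of Python. In this sketch the Conversion Service is a placeholder that merely tags the payload with the target format, both Resolution Services run in one process, and names such as `fmt`, `conversion_service`, and `process_b` are hypothetical; an actual deployment would exchange these messages across M-Instances.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    identifier: str
    fmt: str       # data format of the M-Instance the Item is expressed in (assumed field)
    payload: str


@dataclass(frozen=True)
class RequestAction:
    action: str
    item: Item


@dataclass(frozen=True)
class ResponseAction:
    item: Item


def conversion_service(item: Item, target_fmt: str, new_id: str) -> Item:
    """CS: return a new Item carrying the same Data converted to target_fmt."""
    # Placeholder conversion: tag the payload with the target format.
    return Item(new_id, target_fmt, f"{item.payload}@{target_fmt}")


def process_b(request: RequestAction) -> ResponseAction:
    """ProcessB performs the Action on ItemA.2 and returns ItemA.3."""
    result = Item("ItemA.3", request.item.fmt, f"{request.action}({request.item.payload})")
    return ResponseAction(result)


class ResolutionServiceB:
    def handle(self, request_1: RequestAction) -> ResponseAction:
        item_a2 = conversion_service(request_1.item, "B", "ItemA.2")  # RSB -> CS -> RSB
        request_2 = RequestAction(request_1.action, item_a2)          # new Request-Action2
        return process_b(request_2)                                   # Response-Action2


class ResolutionServiceA:
    def __init__(self, peer: ResolutionServiceB) -> None:
        self.peer = peer

    def handle(self, request_1: RequestAction) -> ResponseAction:
        response_2 = self.peer.handle(request_1)                       # RSA -> RSB -> RSA
        item_a4 = conversion_service(response_2.item, "A", "ItemA.4")  # RSA -> CS -> RSA
        return ResponseAction(item_a4)                                 # new Response-Action for ProcessA


# ProcessA asks, via RSA, for Modify to be performed on ItemA.1 in MetaverseB.
rsa = ResolutionServiceA(ResolutionServiceB())
response = rsa.handle(RequestAction("Modify", Item("ItemA.1", "A", "mesh")))
print(response.item.identifier, response.item.fmt)  # ItemA.4 A
```

Keeping each conversion inside the Resolution Services means neither ProcessA nor ProcessB needs to know the other M-Instance's data formats.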

The Call for Technologies requests comments on, proposed revisions of, and justified proposals for a Metaverse Interoperability protocol.

More on the Call for Technologies: Processes, Items, Actions, and Data Types

The following tables list the Processes, Items, Actions, and Data Types on which comments and proposals are requested.

Table 1 – Processes of the metaverse model

|     |        |         |      |
|-----|--------|---------|------|
| App | Device | Service | User |

Table 2 – Actions of the metaverse model

| No. | Action | No. | Action | No. | Action | No. | Action |
|-----|--------|-----|--------|-----|--------|-----|--------|
| 1 | Authenticate | 9 | Inform | 17 | Modify | 25 | Transact |
| 2 | Author | 10 | Interpret | 18 | MU-Actuate | 26 | UM-Animate |
| 3 | Change | 11 | MM-Add | 19 | MU-Render | 27 | UM-Capture |
| 4 | Convert | 12 | MM-Animate | 20 | MU-Send | 28 | UM-Render |
| 5 | Discover | 13 | MM-Disable | 21 | Post | 29 | UM-Send |
| 6 | Execute | 14 | MM-Embed | 22 | Register | 30 | Validate |
| 7 | Hide | 15 | MM-Enable | 23 | Resolve | | |
| 8 | Identify | 16 | MM-Send | 24 | Track | | |
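The two-letter prefixes of several Actions appear to mirror the Universe-to-Metaverse and Metaverse-to-Universe directions described for Devices. The sketch below classifies the Actions of Table 2 on that basis. The mapping in `PREFIX_DIRECTIONS`, in particular reading MM as Metaverse to Metaverse, is an assumption and should be checked against the MPAI-MMM definitions.

```python
from collections import Counter

# The 30 Actions of Table 2.
ACTIONS = [
    "Authenticate", "Author", "Change", "Convert", "Discover", "Execute", "Hide",
    "Identify", "Inform", "Interpret", "MM-Add", "MM-Animate", "MM-Disable",
    "MM-Embed", "MM-Enable", "MM-Send", "Modify", "MU-Actuate", "MU-Render",
    "MU-Send", "Post", "Register", "Resolve", "Track", "Transact", "UM-Animate",
    "UM-Capture", "UM-Render", "UM-Send", "Validate",
]

# Assumed reading of the two-letter prefixes (U = Universe, M = Metaverse).
PREFIX_DIRECTIONS = {
    "MM": "Metaverse to Metaverse",
    "MU": "Metaverse to Universe",
    "UM": "Universe to Metaverse",
}


def direction(action: str) -> str:
    """Classify an Action by its prefix; Actions without a prefix are 'unspecified'."""
    prefix, separator, _ = action.partition("-")
    if separator and prefix in PREFIX_DIRECTIONS:
        return PREFIX_DIRECTIONS[prefix]
    return "unspecified"


counts = Counter(direction(a) for a in ACTIONS)
print(direction("UM-Capture"))           # Universe to Metaverse
print(counts["Metaverse to Metaverse"])  # 6
```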

Table 3 – Items of the metaverse model

|   |   |   |   |
|---|---|---|---|
| Account | Experience | Message | Rights |
| Activity Data | Identifier | M-Location | Rules |
| Asset | InformIn | Model | Scene |
| AuthenticateIn | InformOut | Object | Social Graph |
| AuthenticateOut | Interaction | Persona | Stream |
| Contract | InterpretIn | Personal Profile | Transaction |
| DiscoverIn | InterpretOut | Program | U-Environment |
| DiscoverOut | Ledger | Provenance | User Data |
| Entity | Map | Request-Action | Value |
| Event | M-Environment | Response-Action | Wallet |

Table 4 – Data Types of the metaverse model

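| No. | Data Type | No. | Data Type | No. | Data Type | No. | Data Type |
|-----|-----------|-----|-----------|-----|-----------|-----|-----------|
| 1 | Address | 5 | Currency | 9 | Point of View | 13 | Time |
| 2 | Amount | 6 | Emotion | 10 | Position | | |
| 3 | Cognitive State | 7 | Orientation | 11 | Social Attitude | | |
| 4 | Coordinates | 8 | Personal Status | 12 | Spatial Attitude | | |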

The Call for Technologies requests comments on, proposed revisions of, and justified proposals for new Processes, Items, Actions, and Data Types.

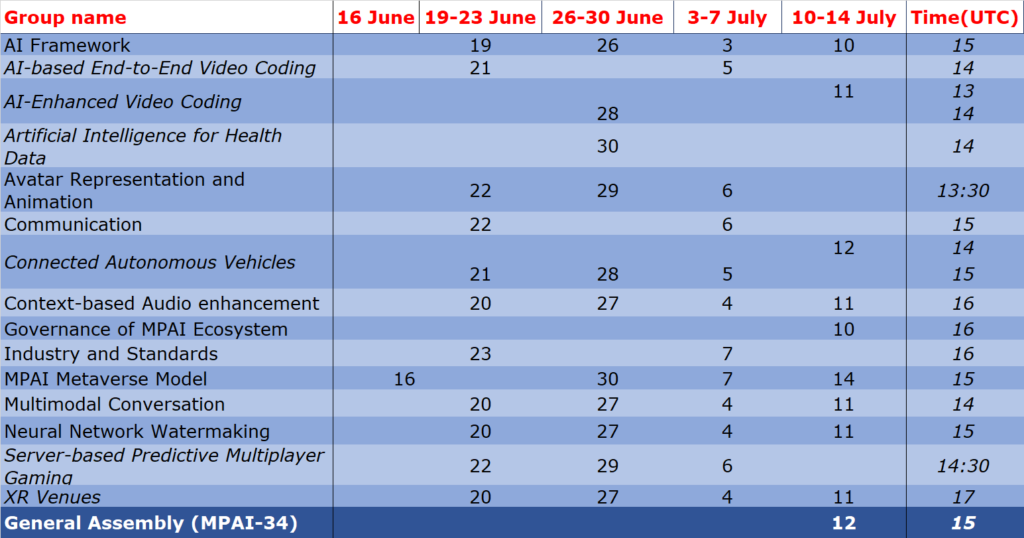

Meetings in the coming May-June meeting cycle

Non-MPAI members may join the meetings given in italics in the table below. If interested, please contact the MPAI secretariat.