| 1. Functions | 2. Reference Model | 3. Input/Output Data |

| 4. JSON Metadata | 5. SubAIMs | 6. Profiles |

| 7. Reference Software | 8. Conformance Testing | 9. Performance Assessment |

1 Functions

Speech Feature Analysis 1 (CAE-SF1):

| Receives | Model Utterance containing emotion. |

| Extracts | Speech Features1 from the Model Utterance. |

| Produces | Prosodic Speech Features. |
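To make the three prosodic properties used in the Performance Assessment (pitch, intensity, and duration) concrete, here is a minimal Python sketch. It is not the CAE-SF1 reference software (none is available) and not the method the standard mandates. It assumes the Model Utterance is a mono list of float samples in [-1, 1] at a known sample rate, estimates pitch with a simple autocorrelation peak search over an assumed 60 to 400 Hz range, measures intensity as RMS level in dB relative to an amplitude of 1.0, and takes duration as sample count over sample rate. All function names are hypothetical.

```python
import math
from typing import Dict, List


def estimate_pitch_hz(samples: List[float], sample_rate: int,
                      f_min: float = 60.0, f_max: float = 400.0) -> float:
    """Estimate F0 as the lag with the largest autocorrelation in [f_min, f_max]."""
    min_lag = max(1, int(sample_rate / f_max))
    max_lag = min(int(sample_rate / f_min), len(samples) - 1)
    best_lag, best_r = 0, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Unnormalized sum over overlapping samples: it shrinks as the overlap
        # shrinks, which favors the fundamental period over its multiples.
        r = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    return sample_rate / best_lag if best_lag else 0.0


def intensity_db(samples: List[float]) -> float:
    """RMS level in dB relative to an amplitude of 1.0 (-inf for silence)."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20.0 * math.log10(rms) if rms > 0 else float("-inf")


def duration_s(samples: List[float], sample_rate: int) -> float:
    """Utterance duration in seconds."""
    return len(samples) / sample_rate


def prosodic_features(samples: List[float], sample_rate: int) -> Dict[str, float]:
    """Hypothetical container for the three prosodic properties."""
    return {
        "pitch": estimate_pitch_hz(samples, sample_rate),
        "intensity": intensity_db(samples),
        "duration": duration_s(samples, sample_rate),
    }


if __name__ == "__main__":
    sr = 16000
    # 0.1 s of a 200 Hz sine with amplitude 0.5 as a stand-in Model Utterance
    tone = [0.5 * math.sin(2 * math.pi * 200 * i / sr) for i in range(1600)]
    print(prosodic_features(tone, sr))
```

For this test tone the sketch reports a pitch close to 200 Hz, an intensity of about -9.03 dB, and a duration of 0.1 s. Real emotional speech would normally be analysed frame by frame with a more robust pitch tracker.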

2 Reference Model

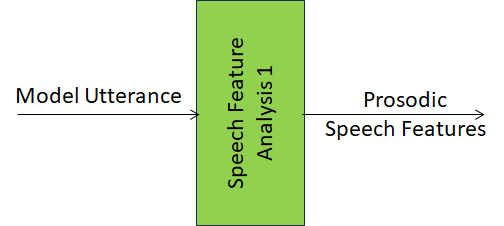

Figure 1 depicts the Speech Feature Analysis 1 (CAE-SF1) AIM:

Figure 1 – Speech Feature Analysis 1 (CAE-SF1) AIM

3 Input/Output Data

Table 1 gives the Input/Output Data of the Speech Feature Analysis 1 (CAE-SF1) AIM.

Table 1 – Input/Output Data of the Speech Feature Analysis 1 (CAE-SF1) AIM

| Input data | Semantics |
|---|---|
| Model Utterance | Utterance containing emotion, provided as a model. |

| Output data | Semantics |
|---|---|
| Prosodic Speech Features | A type of Speech Features (Descriptors). |

4 JSON Metadata

https://schemas.mpai.community/CAE1/V2.4/AIMs/SpeechFeatureAnalysis1.json

5 SubAIMs

No SubAIMs.

6 Profiles

No Profiles.

7 Reference Software

Reference Software not available.

8 Conformance Testing

| Receives | Model Utterance | Shall validate against the Audio Object schema. Its Qualifier shall validate against the Audio Qualifier schema, and the values of any Sub-Type, Format, and Attributes of the Qualifier shall correspond to those defined in the Audio Qualifier schema. |

| Produces | Prosodic Speech Features | Shall validate against the Speech Features schema. |
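One reading of the Qualifier requirement for the Model Utterance is that each Sub-Type, Format, and Attribute value present in the Qualifier must be one the Audio Qualifier schema permits. The Python sketch below illustrates only that correspondence check. The JSON key names (`SubType`, `Format`, `Attributes`) and the permitted values in `HYPOTHETICAL_ALLOWED` are placeholders, not taken from the MPAI schemas, and a real conformance test would also validate the whole instance against the schemas themselves.

```python
from typing import Dict, List, Set

# HYPOTHETICAL permitted values standing in for the enumerations of the
# Audio Qualifier schema; real values must be read from that schema.
HYPOTHETICAL_ALLOWED: Dict[str, Set[str]] = {
    "SubType": {"Speech"},
    "Format": {"WAV", "MP3"},
    "Attributes": {"SampleRate", "Channels"},
}


def qualifier_mismatches(qualifier: Dict[str, object],
                         allowed: Dict[str, Set[str]]) -> List[str]:
    """Return a list of problems; an empty list means the values correspond."""
    problems: List[str] = []
    for field in ("SubType", "Format"):
        value = qualifier.get(field)
        # Only fields actually present in the Qualifier are checked.
        if value is not None and value not in allowed[field]:
            problems.append(f"{field}={value!r} not allowed")
    for attr in qualifier.get("Attributes", []):
        if attr not in allowed["Attributes"]:
            problems.append(f"Attribute {attr!r} not allowed")
    return problems


if __name__ == "__main__":
    q = {"SubType": "Speech", "Format": "WAV", "Attributes": ["Channels"]}
    print(qualifier_mismatches(q, HYPOTHETICAL_ALLOWED))
```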

9 Performance Assessment

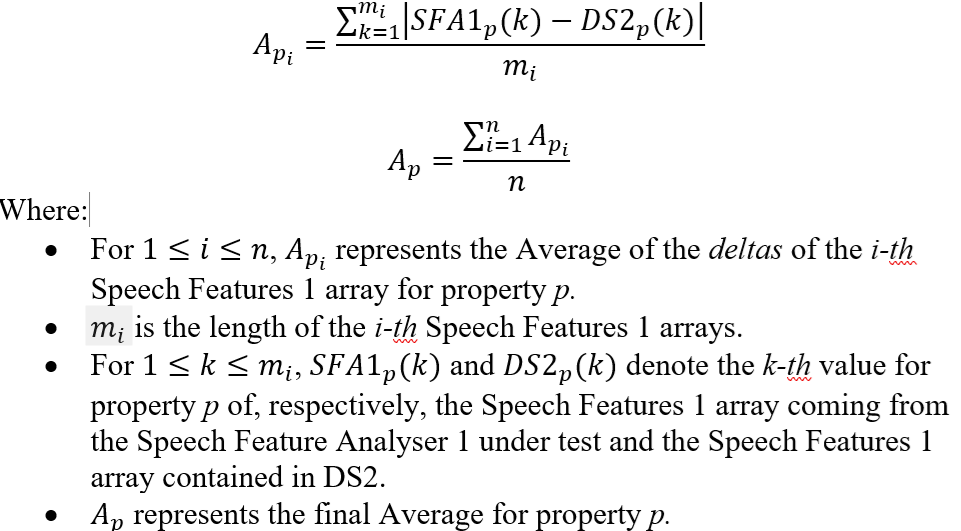

Table 6 gives the Emotion Enhanced Speech (EES) Speech Feature Analyser1 Means (verification procedures) and how they are used.

Table 6 – Means and use of Emotion Enhanced Speech (EES) Speech Feature Analyser1 AIM

| Means | Actions |

| Conformance Testing Dataset | DS1: a dataset of n Model Utterances, with n > M.

DS2: a dataset of n Speech Features 1 arrays, each associated with a specific utterance of DS1 used as input and thus representing one correct output for that input. |

| Procedure | For each of the n Model Utterances in input:

Then, compute the Average of each of the three properties (pitch, intensity, and duration) over the n Model Utterances. Denoting one of these properties as p, the computation for each property is:

|

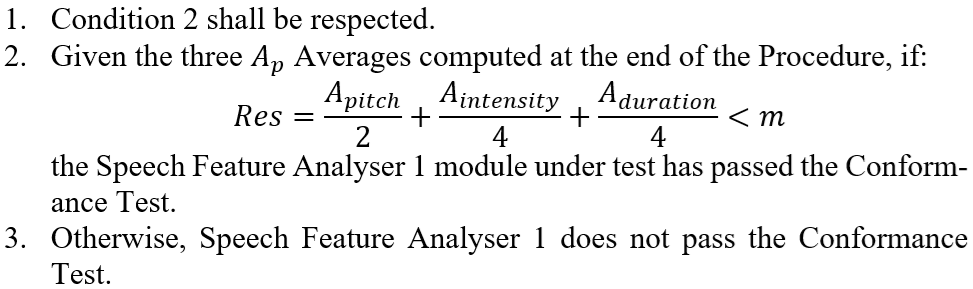

| Evaluation |  |

Figure 3 – EES Speech Feature Analyser1.
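The Average described in the Procedure of Table 6 can be written as below, assuming p_i denotes the per-utterance value of property p obtained for the i-th Model Utterance. The exact per-utterance quantity (for example, a deviation of the actual output from the corresponding DS2 array) is the one given in the formula figure of Table 6; this is a hedged reading of the text, not a transcription of that figure.

$$
\bar{p} = \frac{1}{n} \sum_{i=1}^{n} p_i, \qquad p \in \{\text{pitch},\ \text{intensity},\ \text{duration}\}
$$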

After the tests, the Conformance Tester shall fill out Table 7.

Table 7 – Conformance Testing form of Emotion Enhanced Speech (EES) Speech Feature Analyser1 (AIM1)

| Conformance Tester ID | Unique Conformance Tester Identifier assigned by MPAI |

| Standard, Use Case ID and Version | Standard ID and Use Case ID, Version and Profile of the standard in the form “CAE:EES:1.2:0”. |

| Name of AIM | Speech Feature Analyser1 |

| Implementer ID | Unique Implementer Identifier assigned by Conformance Tester. |

| AIM Implementation Version | Unique Implementation Identifier assigned by Implementer. |

| Neural Network Version* | Unique Neural Network Identifier assigned by Implementer. |

| Identifier of Test Dataset | Unique Dataset Identifier assigned by Conformance Tester. |

| Test ID | Unique Test Identifier assigned by Conformance Tester. |

| Actual output | Actual output, provided as a matrix of n+1 rows containing all computed Average values.

Result:

Threshold: m

Final evaluation: Passed / Not passed |

| Execution time* | Duration of test execution. |

| Test comment* | |

| Test Date | yyyy/mm/dd. |

* Optional field
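The following Python sketch shows one possible way to assemble the n+1-row Actual output matrix of Table 7 and derive the Final evaluation. It assumes, without confirmation from this text, that each per-utterance value is the relative deviation of the AIM's pitch, intensity, and duration from the corresponding DS2 array, that the extra row holds the three Averages, and that the test passes when every Average is at most the threshold m. The names `relative_error` and `evaluate` are hypothetical.

```python
from typing import Dict, List, Tuple

PROPERTIES = ("pitch", "intensity", "duration")


def relative_error(actual: float, reference: float) -> float:
    """ASSUMPTION: per-utterance score = relative deviation from the DS2 value."""
    if reference == 0:
        return abs(actual)  # fall back to absolute deviation when reference is 0
    return abs(actual - reference) / abs(reference)


def evaluate(actual: List[Dict[str, float]],
             reference: List[Dict[str, float]],
             m: float) -> Tuple[List[List[float]], str]:
    """Build the n+1-row matrix (n per-utterance rows plus one row of Averages)."""
    if not actual or len(actual) != len(reference):
        raise ValueError("actual and reference must be non-empty and of equal length n")
    rows = [[relative_error(a[p], r[p]) for p in PROPERTIES]
            for a, r in zip(actual, reference)]
    n = len(rows)
    averages = [sum(row[j] for row in rows) / n for j in range(len(PROPERTIES))]
    # ASSUMPTION: pass when every property Average is at most the threshold m.
    verdict = "Passed" if all(avg <= m for avg in averages) else "Not passed"
    return rows + [averages], verdict


if __name__ == "__main__":
    actual = [{"pitch": 210.0, "intensity": 60.0, "duration": 1.0},
              {"pitch": 190.0, "intensity": 66.0, "duration": 2.2}]
    reference = [{"pitch": 200.0, "intensity": 60.0, "duration": 1.0},
                 {"pitch": 200.0, "intensity": 60.0, "duration": 2.0}]
    matrix, verdict = evaluate(actual, reference, m=0.1)
    print(verdict)  # prints Passed
```

Keeping the per-utterance rows alongside the Averages lets the Conformance Tester report the full matrix in the Actual output field while the threshold decision uses only the last row.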